HPE and Scality ARTESCA deliver best edge-to-cloud-to-core data management experience for AI/ML

Digital transformation (DX) is the most crucial initiative that many enterprises have undertaken to maintain and grow their business. At its core, digital transformation uses digital technology to improve customer experience as well as business and operational processes.

What are some of the IT challenges posed by the widespread adoption of AI/ML?

While digital transformation provides business advantages to the enterprise, it also creates tremendous stress on how IT organizations manage their data. To support digital transformation, enterprises need data management solutions capable of supporting:

- Unified namespace and data management across the edge, multi-cloud, and core. Data resides everywhere: on-premises, in the cloud, and now, with the spread of IoT and sensors, even at the edge. Because data is everywhere, it must be possible to access it transparently (single namespace) and manage it (data management) wherever it resides.

- Unlimited scalability. AI/ML algorithms require massive datasets to adequately train the underlying models for accuracy and speed. AI/ML workloads need a storage solution that can scale without practical limits as the data grows.

- High throughput and low latency. A single GPU can consume up to 8 GB/s, so AI training requires storage systems with high throughput. But throughput isn't the only performance issue. AI models are trained on very large numbers of small files, some only a few MB in size, so latency also plays a critical role in storage system performance. AI storage systems must maintain both high throughput and low latency.

- Metadata search and classification. To build and train effective AI models, dataset preparation is vital. Rich metadata is necessary for data scientists to locate specific data to develop and use with their AI and ML models. Metadata annotations allow the progressive building of knowledge as more information is added to the data.

- Low total cost of ownership (TCO). A storage infrastructure designed for AI/ML workloads must provide low-cost capacity and high-throughput performance – but also must be cost-effective from the operational point of view.

- Multi-tenancy functionality. Multiple teams of data scientists can simultaneously work with the same data sources. AI storage systems must be designed to support these multi-tenant use cases.

- Reliability and built-in data protection. Because datasets reach petabyte scale, regular backup is not a practical option. AI storage systems must store data with enough redundancy that it is always protected.

- Portability. AI/ML applications are also moving to cloud-native architectures so they can run wherever it makes the most sense: in the cloud, on-premises, or at the edge. Interfacing with a storage system that is fully containerized and can be deployed anywhere is therefore a critical factor.

The answer: HPE Solutions for Scality ARTESCA for fast object data stores

Co-designed by Hewlett Packard Enterprise and Scality, ARTESCA solutions usher in a new era for object storage that helps power the next generation of cloud-native, analytics, and in-memory applications.

ARTESCA is a distributed object storage system built using cloud-native methods, such as containerized microservices, in a scale-out architecture. ARTESCA services are deployed and orchestrated on Kubernetes to provide maximum portability and automation across deployment environments such as data centers and edge locations.

ARTESCA has no single point of failure and requires no downtime during upgrades, server additions, scaling, planned maintenance, or unplanned system events. It is also ideal for the data storage requirements of new cloud-native applications for AI/ML, big data analytics, and edge computing.

Let’s see how it works.

ARTESCA federated data management and single namespace

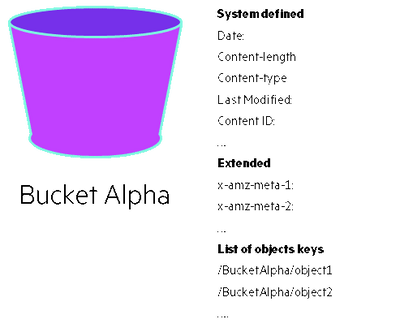

The metadata service is the heart of the ARTESCA system. It provides the object namespace for data in the form of an Amazon S3-compatible view.

The ARTESCA namespace consists of:

- The buckets: logical containers for data objects

- The objects themselves: the data payloads

- The system-defined and user-defined (set by users or applications) extended metadata attributes[1].

The logical view of the ARTESCA metadata service database

ARTESCA uses the metadata service as a critical accelerator for nearly all S3 requests: object data is located through rapid database lookups instead of by scanning large amounts of data on the disk drives. The metadata service and its database are deployed across multiple physical hosts so that the service remains available during failures or outages. Moreover, the metadata database is implemented as a distributed consensus cluster so that requests are served consistently.
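The source does not say which consensus protocol the metadata cluster uses or how many replicas it runs. As a rough guide, and assuming a generic majority-quorum protocol (Raft- or Paxos-style), the arithmetic below shows how replica count relates to the number of host failures such a cluster can ride out while still serving consistent requests. The replica counts are illustrative only.

```python
def majority_quorum(n_replicas: int) -> int:
    """Smallest number of replicas that forms a strict majority."""
    return n_replicas // 2 + 1


def tolerated_failures(n_replicas: int) -> int:
    """Replica failures a majority-quorum cluster can absorb and still make progress."""
    return n_replicas - majority_quorum(n_replicas)


for n in (3, 5, 7):
    print(f"{n} replicas: quorum of {majority_quorum(n)}, "
          f"survives {tolerated_failures(n)} failure(s)")
```

Even replica counts add cost without adding failure tolerance (four replicas still survive only one failure), which is why quorum clusters are usually sized with an odd number of members.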

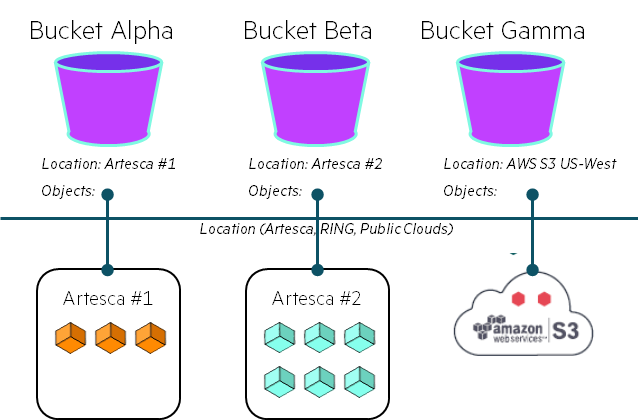

The metadata namespace is also multi-location and multi-cloud aware, as described below.

ARTESCA multi-cloud single namespace

ARTESCA provides a multi-cloud single namespace, data management policies, and metadata search, all automated by a scalable workflow engine. The namespace, data management policies, and metadata search can span multiple clouds and, more generally, multiple “location” configurations.

These capabilities are implemented using the ARTESCA metadata services as follows:

In ARTESCA, a location is described by:

- the endpoint address or URL

- access credentials

- target container/bucket.

Using these location descriptors, the ARTESCA namespace becomes a multi-location/multi-cloud global namespace. Indeed, location information can be used to describe:

- the ARTESCA local instance, or

- the location of other ARTESCA instances, or

- other Scality RING instances, or

- public cloud target regions
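The location descriptor above can be pictured as a small record, with the global namespace acting as a map from namespace buckets to locations. The sketch below is a minimal illustration under that assumption; `Location`, `GlobalNamespace`, the endpoints, and the key IDs are all hypothetical and do not represent ARTESCA's internal data model or API (real descriptors would also carry secret keys and other settings).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Location:
    """Hypothetical location descriptor: endpoint, credentials, target bucket."""
    name: str
    endpoint: str        # endpoint address or URL
    access_key_id: str   # access credentials (the secret key is omitted in this sketch)
    target_bucket: str   # target container/bucket at that endpoint


class GlobalNamespace:
    """Hypothetical global namespace: maps each namespace bucket to a location."""

    def __init__(self) -> None:
        self._locations: dict[str, Location] = {}
        self._bucket_to_location: dict[str, str] = {}

    def add_location(self, loc: Location) -> None:
        self._locations[loc.name] = loc

    def bind(self, bucket: str, location_name: str) -> None:
        if location_name not in self._locations:
            raise KeyError(f"unknown location: {location_name}")
        self._bucket_to_location[bucket] = location_name

    def resolve(self, bucket: str, key: str) -> str:
        """Return the backend URL that a request for bucket/key would be routed to."""
        loc = self._locations[self._bucket_to_location[bucket]]
        return f"{loc.endpoint.rstrip('/')}/{loc.target_bucket}/{key}"


ns = GlobalNamespace()
ns.add_location(Location("local", "https://artesca.example.com", "AK-LOCAL", "training-data"))
ns.add_location(Location("aws-archive", "https://s3.us-east-1.amazonaws.com", "AK-AWS", "archive-bucket"))
ns.bind("datasets", "local")
ns.bind("cold-datasets", "aws-archive")
print(ns.resolve("cold-datasets", "imagenet/train/0001.jpg"))
# → https://s3.us-east-1.amazonaws.com/archive-bucket/imagenet/train/0001.jpg
```

Because applications address the namespace bucket rather than the backend, moving `cold-datasets` to another location in this sketch only changes one binding, not the application.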

The ARTESCA location mechanism enables a multi-cloud namespace and policies for lifecycle transition and cross-region replication (CRR) between ARTESCA and public clouds, or other combinations of sources and target locations.

Data federation

ARTESCA provides integrated data management services, which offer workflow processing for managing data across multiple locations and clouds. ARTESCA currently supports lifecycle management and cross-region replication as integrated data management policies.

ARTESCA supports asynchronous replication through an extended form of the AWS S3 Cross-Region Replication (CRR) model. Asynchronous replication can be used to create a copy of remote data on the local ARTESCA system. This capability is essential for AI operations, because remotely archived data (e.g., in AWS) is often copied to a faster local system for better performance during model training.
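ARTESCA's exact extensions to CRR are not described here, so the sketch below shows only the standard AWS S3 replication configuration document that the CRR model is built on. The role ARN, bucket ARN, and rule ID are placeholders, and how ARTESCA identifies a destination location inside `Destination` is an assumption to check against Scality documentation.

```python
import json


def build_replication_config(role_arn: str, rule_id: str, prefix: str,
                             destination_bucket_arn: str) -> dict:
    """Build an S3 ReplicationConfiguration document using the AWS V2 rule schema."""
    return {
        "Role": role_arn,  # identity the replication engine assumes to copy objects
        "Rules": [
            {
                "ID": rule_id,
                "Priority": 1,
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},  # replicate only keys under this prefix
                "DeleteMarkerReplication": {"Status": "Disabled"},
                "Destination": {"Bucket": destination_bucket_arn},
            }
        ],
    }


config = build_replication_config(
    role_arn="arn:aws:iam::123456789012:role/replication-role",  # placeholder
    rule_id="replicate-training-data",
    prefix="imagenet/",
    destination_bucket_arn="arn:aws:s3:::training-data-replica",  # placeholder
)
print(json.dumps(config, indent=2))
```

With boto3, a document like this is attached to the source bucket through `s3.put_bucket_replication(Bucket=..., ReplicationConfiguration=config)`. On AWS, versioning must be enabled on both the source and destination buckets, and because replication is asynchronous, new objects appear at the destination after a delay.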

ARTESCA also provides metadata search across these locations:

- For metadata search, ARTESCA provides an extended syntax on the GET Bucket S3 API that allows users and applications to query both the system and user-defined metadata attribute values.

- ARTESCA supports the assignment of extended metadata attributes on external locations, including all supported public cloud services. Therefore, users and applications can perform searches on any ARTESCA bucket, independent of its location, and without dealing with differences in data or format based on where the data is stored.

Both system-defined and extended attributes are searchable in ARTESCA via the API and the UI.
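The extended GET Bucket query syntax is not spelled out here, so the sketch below does not try to reproduce it. Instead it models the idea in memory: each object carries system-defined attributes (here, size and content type) and user-defined attributes, and a search filters on both at once. `ObjectRecord` and `search` are hypothetical. With the standard S3 API, user-defined metadata is attached at upload time, for example through the `Metadata` argument of boto3's `put_object`.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ObjectRecord:
    """Hypothetical metadata-database row for one object."""
    key: str
    size: int                                                # system-defined (bytes)
    content_type: str                                        # system-defined
    user_meta: dict[str, str] = field(default_factory=dict)  # user-defined attributes


def search(records, system=None, user=None):
    """Return keys whose system and user metadata match every equality filter given."""
    system = system or {}
    user = user or {}
    return [
        r.key
        for r in records
        if all(getattr(r, attr) == value for attr, value in system.items())
        and all(r.user_meta.get(attr) == value for attr, value in user.items())
    ]


bucket = [
    ObjectRecord("img/0001.jpg", 112_000, "image/jpeg", {"label": "cat", "split": "train"}),
    ObjectRecord("img/0002.jpg", 98_500, "image/jpeg", {"label": "dog", "split": "train"}),
    ObjectRecord("img/0003.jpg", 105_300, "image/jpeg", {"label": "cat", "split": "val"}),
    ObjectRecord("notes.txt", 2_048, "text/plain"),
]

print(search(bucket, system={"content_type": "image/jpeg"}, user={"label": "cat"}))
# → ['img/0001.jpg', 'img/0003.jpg']
```

Answering such a query from the metadata database, rather than by opening every object, is what keeps dataset preparation fast as buckets grow.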

No cloud vendor lock-in

Because ARTESCA enables you to move data between clouds in native format and without rewriting applications, data is not locked into any single cloud provider.

ARTESCA unlimited scalability

Two primary factors drive storage scalability: the software design and the performance of the system metadata database.

- The first factor means that the storage system must handle growth in capacity, throughput, or workload simply by adding nodes, with performance scaling linearly.

- The second factor relates to the fact that the system metadata database can quickly become the storage system's bottleneck. Every object request (GET, PUT, DELETE, and so on) is a query to the metadata database. In AI/ML training or inference, the rate of these queries can be very high; for example, an AlexNet model running on an NVIDIA A100 GPU can request up to 60,000 images/s[2].

ARTESCA addresses both limitations. It has been architected and implemented using cloud-native design principles and methods: the system is a set of distributed microservices delivered as containers at every layer, so it can instantiate new microservice instances as the load grows.

ARTESCA metadata is architected as a containerized microservice for maximum scalability. ARTESCA distributes both user data and the associated metadata across the underlying nodes, eliminating the typical central metadata database bottleneck. Moreover, the metadata services are hosted on all-NVMe flash drives for the fastest possible access to the metadata database. This enables ARTESCA to scale out seamlessly from a single VM or server to thousands of servers, with hundreds of petabytes, or potentially exabytes, of storage capacity.
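To put the 60,000 images/s figure in perspective, the arithmetic below estimates the metadata query rate and raw data rate it implies. The 8-GPU server, the average image size of roughly 110 KB, and one metadata lookup per GET are illustrative assumptions, not figures from the source.

```python
IMAGES_PER_SEC_PER_GPU = 60_000  # AlexNet on one NVIDIA A100, figure cited above
AVG_IMAGE_BYTES = 110_000        # assumption: ~110 KB per JPEG training image
GPUS = 8                         # assumption: a single 8-GPU training server


def metadata_lookups_per_sec(gpus: int, images_per_sec: int, lookups_per_get: int = 1) -> int:
    """Every image read is a GET, and every GET needs at least one metadata lookup."""
    return gpus * images_per_sec * lookups_per_get


def data_rate_gb_per_sec(gpus: int, images_per_sec: int, avg_bytes: int) -> float:
    """Raw payload bandwidth in decimal GB/s."""
    return gpus * images_per_sec * avg_bytes / 1e9


print(metadata_lookups_per_sec(GPUS, IMAGES_PER_SEC_PER_GPU))            # 480000
print(data_rate_gb_per_sec(1, IMAGES_PER_SEC_PER_GPU, AVG_IMAGE_BYTES))  # 6.6
```

Under these assumptions, a single server already generates about 480,000 metadata lookups per second, and one GPU alone pulls about 6.6 GB/s, in the same range as the 8 GB/s per-GPU figure quoted earlier.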
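The source does not describe how ARTESCA decides which node holds a given object's metadata. Consistent hashing is one common, generic way to spread keys across nodes without a central lookup table, so the sketch below uses it purely as an illustration. The node names and virtual-node count are arbitrary, and this is not claimed to be ARTESCA's placement algorithm.

```python
import bisect
import hashlib


class HashRing:
    """Consistent-hash ring mapping object keys to metadata nodes (generic technique)."""

    def __init__(self, nodes, vnodes: int = 100) -> None:
        # Each node owns many points ("virtual nodes") on the ring for a smoother spread.
        self._ring = sorted(
            (self._hash(f"{node}#{i}"), node) for node in nodes for i in range(vnodes)
        )
        self._hashes = [h for h, _ in self._ring]

    @staticmethod
    def _hash(value: str) -> int:
        return int.from_bytes(hashlib.sha1(value.encode()).digest()[:8], "big")

    def node_for(self, key: str) -> str:
        """Walk clockwise to the first ring point at or after the key's hash."""
        idx = bisect.bisect_left(self._hashes, self._hash(key)) % len(self._ring)
        return self._ring[idx][1]


keys = [f"bucket/obj-{i}" for i in range(30_000)]
ring3 = HashRing(["meta-1", "meta-2", "meta-3"])
ring4 = HashRing(["meta-1", "meta-2", "meta-3", "meta-4"])
moved = sum(ring3.node_for(k) != ring4.node_for(k) for k in keys)
print(f"{moved / len(keys):.1%} of keys moved after adding a fourth node")
```

Because adding a node only inserts new points on the ring, the only keys that move are those now owned by the new node (roughly a quarter of them when going from three nodes to four), so the metadata layer can grow without reshuffling everything.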

ARTESCA performance for data-intensive apps

Three elements characterize the performance of a storage system for AI/ML:

- Aggregated throughput. Aggregated throughput[3] is the total data transfer rate that the whole storage system and network provide to the servers running AI, ML, DL, and analytics models.

- Parallel access. Machine learning and AI algorithms process data in parallel. Consequently, multiple tasks can read the same data numerous times, and at the same time.

- Latency, file size, and data access profile. Latency is the time it takes to complete an IO request. In the object space, latency is the time that the infrastructure takes to retrieve and deliver the object requested.

In the AI/ML world, latency can have a substantial impact on throughput. AI/ML models are trained on large numbers (tens of millions) of small files of a few MB or less. When a training or inference process starts, millions of these files must be retrieved randomly from disk, producing a massive storm of queries on the metadata services.

ARTESCA, running on high-performance HPE all-NVMe platforms, can provide tens of GB/s of throughput per server, and thanks to its microservices architecture and the optimized metadata service described above, its aggregated throughput can scale to hundreds of GB/s.

In addition, because ARTESCA has no object locking or attributes to manage, it is highly performant at parallel read I/O processing.
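The link between latency, object size, and throughput can be made concrete with Little's law: to sustain a target throughput, the number of requests in flight must equal throughput times latency, divided by object size. The sketch below assumes a fixed 10 ms per-request latency and reuses the 8 GB/s per-GPU figure from earlier; the latency and object sizes are illustrative assumptions.

```python
def per_stream_bytes_per_sec(object_bytes: int, latency_ms: int) -> float:
    """Throughput of one client issuing requests back to back, one at a time."""
    return object_bytes * 1000 / latency_ms


def required_concurrency(target_bytes_per_sec: int, object_bytes: int, latency_ms: int) -> int:
    """Little's law: in-flight requests = throughput * latency / object size (rounded up)."""
    numerator = target_bytes_per_sec * latency_ms
    denominator = object_bytes * 1000
    return -(-numerator // denominator)  # integer ceiling division


TARGET = 8_000_000_000  # 8 GB/s, the per-GPU figure quoted earlier
for size_mb in (4, 1):
    n = required_concurrency(TARGET, size_mb * 1_000_000, latency_ms=10)  # assumed 10 ms
    print(f"{size_mb} MB objects at 10 ms: {n} requests in flight")
# → 4 MB objects at 10 ms: 20 requests in flight
# → 1 MB objects at 10 ms: 80 requests in flight
```

Quartering the object size quadruples the concurrency needed at the same latency, which is why small-file AI workloads stress both the metadata path and per-request latency.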

ARTESCA reliability and data durability

ARTESCA provides the highest levels of durability by protecting data against a wide range of failures. This includes protecting data against loss or corruption due to common errors, and failure scenarios such as bit rot errors, disk drive failures, server failures, power outages, and other component-level errors.

ARTESCA provides the following mechanisms to ensure data is stored efficiently and protected:

- Dual-level erasure coding (EC). One of the main techniques to maximize data durability is an innovative combination of network (distributed) erasure codes and local repair codes within a server. The dual-level erasure coding provides additional protection against failures and accelerated local (non-network) repair times for disk failures within a server.

- Data extents. Data is stored on disk in fixed-size files referred to as “extents.” Using extents to group many smaller objects avoids fragmenting the disk file system and speeds up I/O.

- Data replication. Multiple copies of objects are stored across different disk drives and, if available, different physical machines.

- Checksums for data integrity assurance. All extents and objects on disk are stored with a corresponding checksum to ensure that any read data is the same as when it was stored, ensuring data integrity.

- Background disk scrubbing. A background process scans extent-level checksums to ensure that data has not been altered by unrecoverable bit errors. Moreover, the system validates object-level checksums when reading data to verify that data integrity is maintained.

- Self-healing. This set of background services ensures that even if failures occur, the system will revert to its nominal protected state, assuming sufficient resources are available. ARTESCA uses any available space in the cluster pool to perform a repair, so it doesn't need additional dedicated disks or storage.

- Flash cache. Used for accelerating writes on storage servers with HDD-based data storage.
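The parameters of ARTESCA's dual-level erasure code are not given here. The sketch below therefore uses the simplest possible erasure code, a single XOR parity fragment (k data fragments plus 1 parity), only to show the principle: data is split into fragments, redundancy is computed rather than copied, and a lost fragment is rebuilt from the survivors. Production codes such as Reed-Solomon tolerate several simultaneous losses; `encode`, `recover`, and `storage_overhead` are hypothetical helpers.

```python
from __future__ import annotations

from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> list[bytes]:
    """Split data into k equal fragments (zero-padded) plus one XOR parity fragment."""
    frag_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(frag_len * k, b"\0")
    fragments = [padded[i * frag_len:(i + 1) * frag_len] for i in range(k)]
    return fragments + [reduce(xor_bytes, fragments)]


def recover(fragments: list, lost: int) -> bytes:
    """Rebuild one missing fragment by XOR-ing all surviving fragments."""
    survivors = [f for i, f in enumerate(fragments) if i != lost]
    return reduce(xor_bytes, survivors)


def storage_overhead(k: int, m: int) -> float:
    """Raw capacity consumed per byte of user data for a k+m code."""
    return (k + m) / k


data = b"training shard 00042"
frags = encode(data, k=4)
damaged = list(frags)
damaged[2] = None                  # simulate a failed disk holding fragment 2
rebuilt = recover(damaged, lost=2)
print(rebuilt == frags[2])         # True

print(f"4+1 XOR parity:   {storage_overhead(4, 1):.2f}x raw capacity, survives 1 loss")
print(f"9+3 MDS code:     {storage_overhead(9, 3):.2f}x raw capacity, survives 3 losses")
print(f"3-way replicas:   {storage_overhead(1, 2):.2f}x raw capacity, survives 2 losses")
```

Local repair codes apply the same idea inside a single server, so a failed disk can be rebuilt from fragments on that server's other disks without network reads.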

For more details on ARTESCA data protection, see the Scality ARTESCA Whitepaper.
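The extent and checksum mechanisms listed above can be sketched together: small objects are appended into fixed-size extents, an index records where each object lives, a CRC32 is stored per object, reads verify it, and a scrub pass re-checks everything. The 64-byte extent size is deliberately tiny, the `ExtentStore` class is hypothetical, and for brevity the scrub re-checks per-object CRCs, whereas the text describes scrubbing extent-level checksums.

```python
from __future__ import annotations

import zlib

EXTENT_SIZE = 64  # bytes; tiny for illustration (real extents are far larger)


class ExtentStore:
    """Hypothetical store that packs small objects into fixed-size extents."""

    def __init__(self) -> None:
        self.extents: list[bytearray] = [bytearray()]
        # key -> (extent index, offset, length, CRC32 of the object's bytes)
        self.index: dict[str, tuple[int, int, int, int]] = {}

    def put(self, key: str, data: bytes) -> None:
        if len(data) > EXTENT_SIZE:
            raise ValueError("object larger than one extent (not handled in this sketch)")
        if len(self.extents[-1]) + len(data) > EXTENT_SIZE:
            self.extents.append(bytearray())  # current extent is full: open a new one
        ext = self.extents[-1]
        self.index[key] = (len(self.extents) - 1, len(ext), len(data), zlib.crc32(data))
        ext.extend(data)

    def _read(self, key: str) -> tuple[bytes, int]:
        e, off, length, crc = self.index[key]
        return bytes(self.extents[e][off:off + length]), crc

    def get(self, key: str) -> bytes:
        data, crc = self._read(key)
        if zlib.crc32(data) != crc:  # verify integrity on every read
            raise OSError(f"checksum mismatch for {key}")
        return data

    def scrub(self) -> list[str]:
        """Background-style pass: keys whose stored bytes no longer match their CRC."""
        return [k for k in self.index if zlib.crc32(self._read(k)[0]) != self._read(k)[1]]


store = ExtentStore()
for i in range(6):
    store.put(f"obj-{i}", f"payload number {i}".encode())  # 16 bytes each
store.extents[0][3] ^= 0xFF  # simulate bit rot inside the first extent
print(len(store.extents), store.scrub())  # 2 ['obj-0']
```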

ARTESCA enables granular multi-tenancy

ARTESCA provides multi-tenancy and security through an AWS-compatible Identity and Access Management (IAM) capability. Multi-tenancy is defined through the following models:

- Accounts. Multiple accounts can be created in a single ARTESCA instance to model multiple tenants, applications, or use-case workloads.

- Users. Each account can define its own set of user identities.

- Groups. Users can be assigned to one or more groups to simplify access control through policies.

- Policies. AWS-compatible user, group, and bucket policies provide powerful and flexible access control capabilities to allow/deny access to particular data or enable full or partial (read-only) access to data.

- Access Keys. AWS-compatible shared and private keys are used in S3 API requests with HMAC-based signatures for authentication. Keys may be assigned to accounts or users.
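Because the policies are AWS-compatible, they use the standard IAM JSON policy language. The sketch below shows a read-only bucket policy with an explicit deny on one prefix, plus a deliberately simplified evaluator that follows the core IAM rule: an explicit Deny overrides any Allow, and anything not allowed is implicitly denied. The bucket name is a placeholder, and the evaluator ignores principals, conditions, `NotAction`, and the combination of multiple policies, so it is an illustration rather than a reimplementation of ARTESCA's IAM engine.

```python
from fnmatch import fnmatchcase

READ_ONLY_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:ListBucket"],
            "Resource": ["arn:aws:s3:::training-data", "arn:aws:s3:::training-data/*"],
        },
        {
            "Effect": "Deny",
            "Action": "s3:GetObject",
            "Resource": "arn:aws:s3:::training-data/restricted/*",
        },
    ],
}


def _as_list(value):
    """IAM allows either a single string or a list for Action and Resource."""
    return value if isinstance(value, list) else [value]


def is_allowed(policy: dict, action: str, resource: str) -> bool:
    """Simplified IAM evaluation: explicit Deny wins, then any Allow, else implicit deny."""
    allowed = False
    for stmt in policy["Statement"]:
        # Action names are case-insensitive in IAM; resource ARNs are matched as-is.
        action_ok = any(fnmatchcase(action.lower(), a.lower()) for a in _as_list(stmt["Action"]))
        resource_ok = any(fnmatchcase(resource, r) for r in _as_list(stmt["Resource"]))
        if action_ok and resource_ok:
            if stmt["Effect"] == "Deny":
                return False
            allowed = True
    return allowed


print(is_allowed(READ_ONLY_POLICY, "s3:GetObject", "arn:aws:s3:::training-data/img/0001.jpg"))  # True
print(is_allowed(READ_ONLY_POLICY, "s3:PutObject", "arn:aws:s3:::training-data/img/0001.jpg"))  # False
```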

ARTESCA is easy to use

ARTESCA provides rich management and monitoring services for the entire set of distributed software services, platforms, and hardware stack. The UI offers a complete view of the system across ARTESCA software services and the underlying platform, including the servers and disk drive hardware layer.

The UI also supports the federated multi-cloud namespace capabilities described previously, with a dashboard view of managed backend storage capacity across deployments of ARTESCA, RING, and public cloud storage services. Through the ARTESCA UI, it is possible to define new policies across the various storage locations and to monitor each policy's status and performance by data location.

The UI provides visualization of extensive utilization analytics and capacity planning metrics tracked by ARTESCA, which are also accessible via API for external tools and applications. Utilization data includes capacity-based (number of objects, storage consumed) and performance-based metrics (S3 ops/sec, bandwidth in/out). The UI also provides time-based trending graphs and visual meters for these metrics, which can aid in overall system health monitoring and capacity planning.
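Because the utilization metrics are also exposed via API, external tools can run their own capacity planning on them. The sketch below assumes the used-capacity samples have already been fetched (the metrics API itself is not shown, and the sample values are made up) and fits a straight-line trend to estimate how many days remain before the pool is full. A linear fit is a simplifying assumption that will not capture seasonal or bursty growth.

```python
from statistics import fmean


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    x_bar, y_bar = fmean(xs), fmean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def days_until_full(days, used_tb, capacity_tb):
    """Extrapolate the linear trend to the capacity limit, counted from the last sample."""
    slope, intercept = fit_line(days, used_tb)
    if slope <= 0:
        return None  # usage flat or shrinking: no projected fill date
    return (capacity_tb - intercept) / slope - days[-1]


# Hypothetical weekly samples of storage consumed (TB) on a 200 TB pool.
days = [0, 7, 14, 21, 28]
used_tb = [120, 127, 134, 141, 148]
print(days_until_full(days, used_tb, capacity_tb=200))  # 52.0
```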

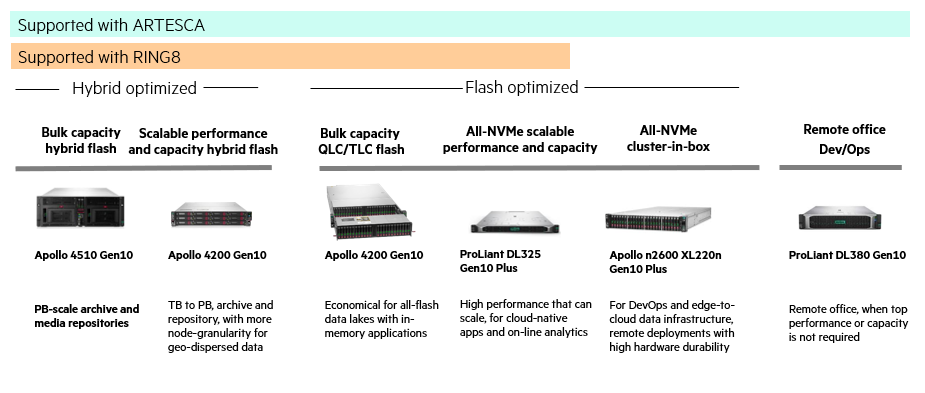

HPE supports ARTESCA on a wide range of intelligent data storage servers

HPE Solutions for ARTESCA offer the right balance of capacity, performance, and security for modern applications through density- and workload-optimized configurations that cover the full spectrum of object storage workloads, starting from just a single node.

There are six ARTESCA configurations available from HPE, suitable for core and edge data center locations, and including Apollo and ProLiant servers in all-flash and hybrid flash/disk versions.

Conclusions

HPE Solutions for Scality ARTESCA provide the right solution for AI storage. ARTESCA is the world’s first lightweight, enterprise-grade object storage software purpose-built for the demands of new cloud-native applications and AI/ML training.

- High scalability and capacity. It can start small on a single server and scale out easily in performance and capacity as needs grow.

- Performance for data-intensive apps. It delivers essential performance to cloud-native, analytics, and in-memory applications, running on HPE all-NVMe platforms.

- Data management across multiple clouds. It delivers core-to-edge flexibility for modern applications, and provides built-in federated cloud data management to deliver complete data lifecycle management across multiple clouds.

- Efficient data access. It enables applications to access data easily, both on-premises and in multiple clouds, for efficient data processing.

Lastly, HPE Solutions for Scality ARTESCA are also available through HPE GreenLake.

Learn more at hpe.com/storage/scality

[1] ARTESCA supports user- and application-defined metadata through the standard AWS S3 API.

[2] See HPE Deep Learning cookbook. For more details on ARTESCA lifecycle management and cross-region replication, see Scality ARTESCA web pages.

[3] Throughput and bandwidth difference. Throughput measures the data transfer rate to and from the storage media in megabytes per second, while bandwidth is the total possible speed of data movement across the network. Therefore, throughput measures the amount of data that actually moves along the network path, while bandwidth is the potential capacity of the path before real-world limiting factors are taken into account.
