HPE makes it easy to deploy Splunk in containers with HPE Alletra and Red Hat OpenShift

Discover how easy it is to deploy Splunk in containers using HPE Alletra storage arrays and Red Hat OpenShift Container Platform.

By Keith Vanderford, Storage Solutions Engineer, HPE

Splunk is a market leader in data analytics, helping users make sense of an endless stream of data coming from a huge variety of sources. Many workloads are being moved to containerized environments, and more and more customers are

While many organizations choose to run their containerized workloads in the public cloud, that doesn’t work for everybody. Your business may need to keep its data and workloads on-premises instead of in the cloud. The sensitive nature of your data, or security or regulatory requirements, may make it necessary for you to keep your data entirely onsite.

Here’s how HPE enables you to do just that

The solution takes an HPE Alletra all-flash storage array and integrates it with Kubernetes and Red Hat OpenShift Container Platform to build an environment that allows you to take advantage of all the benefits of running Splunk in containers, while still giving you tight control over where your data goes.

Kubernetes has become the de facto standard for container orchestration, and Red Hat OpenShift Container Platform is a market leader in the container space. Running them together on HPE servers and storage gives you the benefits of containers and the cloud while avoiding the risks associated with moving your data to the public cloud.

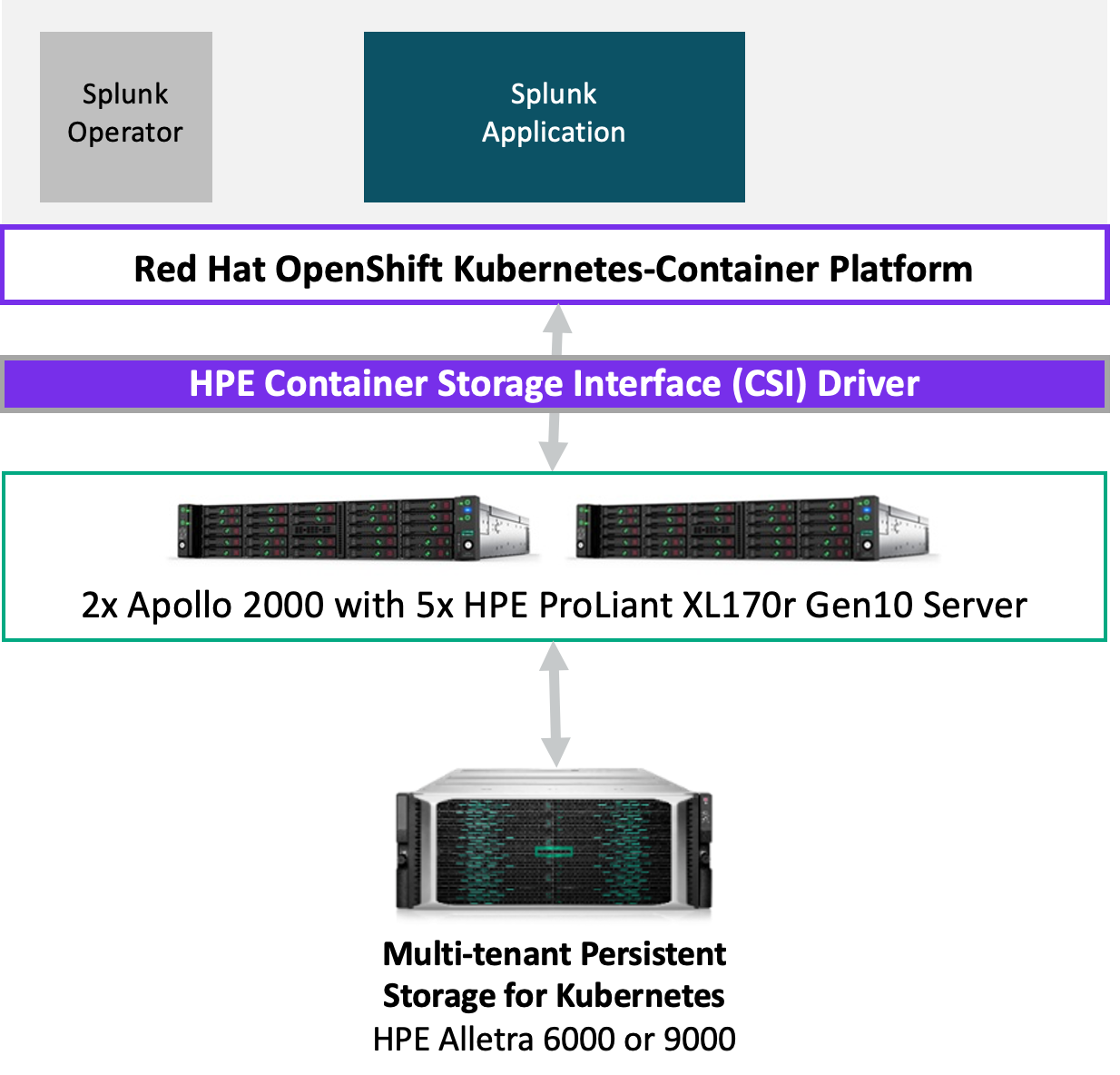

This diagram represents an on-premises deployment of a private cloud infrastructure. You could do the same thing using a hybrid cloud architecture.

It starts with an HPE Alletra storage array at the bottom. You could use either an HPE Alletra 6000 or 9000, depending on your requirements. For the compute resources, we used HPE Apollo 2000 platforms with five HPE ProLiant XL170r Gen10 servers. On this infrastructure, we deployed Red Hat OpenShift Container Platform 4.10, which was the latest available release at the time our tests were conducted. Our OpenShift environment is a five-node cluster with three master nodes and two worker nodes.

This environment is fully contained in a data center that is on-premises. This implementation would work just as well in a hybrid cloud environment where you have some elements of your environment on-site, and other parts of your environment in the cloud.

Red Hat OpenShift uses Operators to automate the creation, configuration, and management of instances of Kubernetes-native applications. After making sure the prerequisites are met in the Red Hat OpenShift cluster, the appropriate operator is used to install the HPE Container Storage Interface (CSI) driver. We followed the HPE documentation for deploying the HPE CSI Driver on Red Hat OpenShift. That documentation can be found at: https://scod.hpedev.io/partners/redhat_openshift/index.html#openshift_4

The HPE CSI driver makes the HPE Alletra storage available to the OpenShift cluster. Since the HPE Alletra is built for modern apps, it’s not necessary to configure the array before it can be used in the containerized environment. All you need is the IP address for the management interface of the array, and login credentials for an account on the array with the correct privileges to manage the storage. The OpenShift cluster will dynamically provision volumes on the HPE Alletra as needed to support the workloads you create in the cluster.
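As a rough illustration of how little the cluster needs to know about the array, the sketch below builds the two Kubernetes objects typically involved: a Secret holding the management IP address and credentials, and a StorageClass that points at it. This is a minimal sketch, not our tested configuration. The object names, the IP address, and the credentials are placeholders, and the Secret keys, the `alletra6000-csp-svc` service name, and the StorageClass parameters reflect our reading of the HPE CSI Driver documentation, so check them against the documentation for your driver version.

```python
import json


def hpe_backend_secret(name, namespace, array_ip, username, password,
                       service_name="alletra6000-csp-svc"):
    """Build a Secret manifest with the array management IP and credentials.

    Assumption: the key names and the default service name follow the HPE CSI
    Driver documentation; verify them for your driver version and array model.
    """
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "stringData": {
            "serviceName": service_name,
            "servicePort": "8080",
            "backend": array_ip,      # management interface of the array
            "username": username,
            "password": password,
        },
    }


def hpe_storage_class(name, secret_name, secret_namespace, fstype="xfs"):
    """Build a StorageClass that provisions volumes through the HPE CSI driver."""
    params = {"csi.storage.k8s.io/fstype": fstype}
    # Each CSI operation is told which Secret to use when talking to the array.
    for role in ("provisioner", "controller-publish", "controller-expand",
                 "node-stage", "node-publish"):
        params[f"csi.storage.k8s.io/{role}-secret-name"] = secret_name
        params[f"csi.storage.k8s.io/{role}-secret-namespace"] = secret_namespace
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "csi.hpe.com",
        "parameters": params,
        "reclaimPolicy": "Delete",
        "allowVolumeExpansion": True,
    }


if __name__ == "__main__":
    # Placeholder values only; substitute your own array address and account.
    items = [
        hpe_backend_secret("hpe-backend", "hpe-storage",
                           "192.0.2.10", "admin", "change-me"),
        hpe_storage_class("hpe-standard", "hpe-backend", "hpe-storage"),
    ]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items},
                     indent=2))
```

The script prints a JSON list that could be saved to a file and applied with `oc apply -f`, since the API server accepts JSON as well as YAML. Once a StorageClass like this exists, any PersistentVolumeClaim that names it causes a volume to be created on the array on demand.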

We used a YAML file to define the parameters for the stand-alone instance of Splunk Enterprise, and used the Splunk Operator to deploy our Splunk application on one of the worker nodes in the cluster.
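To give a sense of what such a definition contains, here is a minimal sketch that builds a `Standalone` custom resource for the Splunk Operator. It is not the file we used. The instance name, namespace, StorageClass name, and volume sizes are hypothetical, and the `enterprise.splunk.com/v3` API version is an assumption that depends on the operator release you install, so confirm it against the CRDs in your cluster.

```python
import json


def splunk_standalone(name, namespace, storage_class,
                      etc_size="10Gi", var_size="100Gi",
                      api_version="enterprise.splunk.com/v3"):
    """Build a Standalone custom resource for the Splunk Operator.

    Assumption: api_version varies between operator releases, and the sizes
    here are illustrative rather than recommended values.
    """
    def volume(size):
        # Both Splunk volumes are backed by the same StorageClass.
        return {"storageClassName": storage_class, "storageCapacity": size}

    return {
        "apiVersion": api_version,
        "kind": "Standalone",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "etcVolumeStorageConfig": volume(etc_size),  # configuration data
            "varVolumeStorageConfig": volume(var_size),  # indexed data
        },
    }


if __name__ == "__main__":
    # Hypothetical names; "hpe-standard" stands in for your StorageClass.
    manifest = splunk_standalone("s1", "splunk", "hpe-standard")
    print(json.dumps(manifest, indent=2))
```

Naming the StorageClass in the volume settings is what ties the Splunk instance to the array: the operator creates PersistentVolumeClaims from these settings, and the CSI driver provisions the matching volumes.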

Once the Splunk instance is running in the container, it needs to be exposed to the network outside of the cluster, so it can be configured and can ingest your data. You do that by creating a route in the cluster, which just takes one command. Once the route is established, Splunk is accessible and you can log in on Splunk Web to configure it according to your needs. The route also gives you the connection point for sending logs and other event data into Splunk Enterprise to be indexed, searched, and analyzed.
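The one-line approach in OpenShift is usually some form of `oc expose service`. For readers who prefer a declarative object, the sketch below builds an equivalent Route manifest. Treat it as an illustration under assumptions: the route, namespace, and service names are hypothetical (check the real service name with `oc get svc`), port 8000 is the Splunk Web default, and edge TLS termination is our choice for the example rather than something the setup requires.

```python
import json


def splunk_web_route(route_name, namespace, service_name,
                     target_port=8000, tls_edge=True):
    """Build an OpenShift Route that exposes Splunk Web outside the cluster.

    Assumption: service_name is whatever Service fronts your Splunk pod, and
    target_port matches the port Splunk Web listens on (8000 by default).
    """
    spec = {
        "to": {"kind": "Service", "name": service_name, "weight": 100},
        "port": {"targetPort": target_port},
    }
    if tls_edge:
        # Terminate TLS at the router and forward plain HTTP to the pod.
        spec["tls"] = {"termination": "edge"}
    return {
        "apiVersion": "route.openshift.io/v1",
        "kind": "Route",
        "metadata": {"name": route_name, "namespace": namespace},
        "spec": spec,
    }


if __name__ == "__main__":
    # Hypothetical names; replace the service name with the one in your cluster.
    route = splunk_web_route("splunk-web", "splunk",
                             "splunk-s1-standalone-service")
    print(json.dumps(route, indent=2))
```

A second route built the same way with a different `target_port` could expose a data input, such as the HTTP Event Collector, which listens on port 8088 by default.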

Greater simplicity with HPE Alletra and Splunk

It takes only a few minutes to deploy Splunk in a Red Hat OpenShift cluster. The simplicity of the HPE Alletra all-flash array makes it easy to take advantage of all the storage capacity and performance you need for a containerized private or hybrid cloud. This gives you the full cloud experience with the flexibility and benefits of Splunk in containers, and it allows you to keep your sensitive data onsite or in a private or hybrid cloud while avoiding the risks of sending it to the public cloud.

Check out this new video where we talk about how easy HPE makes it to deploy Splunk in a Red Hat OpenShift container platform environment with the HPE CSI Driver.

