
Put the storage for the world’s fastest supercomputer in your data center

The same storage that supports Frontier, the world’s fastest supercomputer, is now available for data centers of any size! Learn about the parallel storage systems that cost-effectively feed your HPC or AI clusters.

Storage for the Frontier supercomputer

For storage, the Frontier supercomputer relies on an I/O subsystem that consists of two major components: an in-system storage layer of compute-node local storage devices embedded in the HPE Cray EX supercomputer, and a center-wide file system based on the new hybrid Scalable Storage Unit (SSU) of the Cray ClusterStor E1000 storage system.

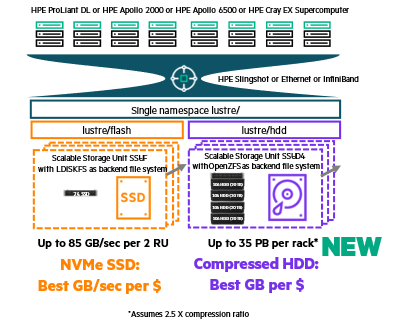

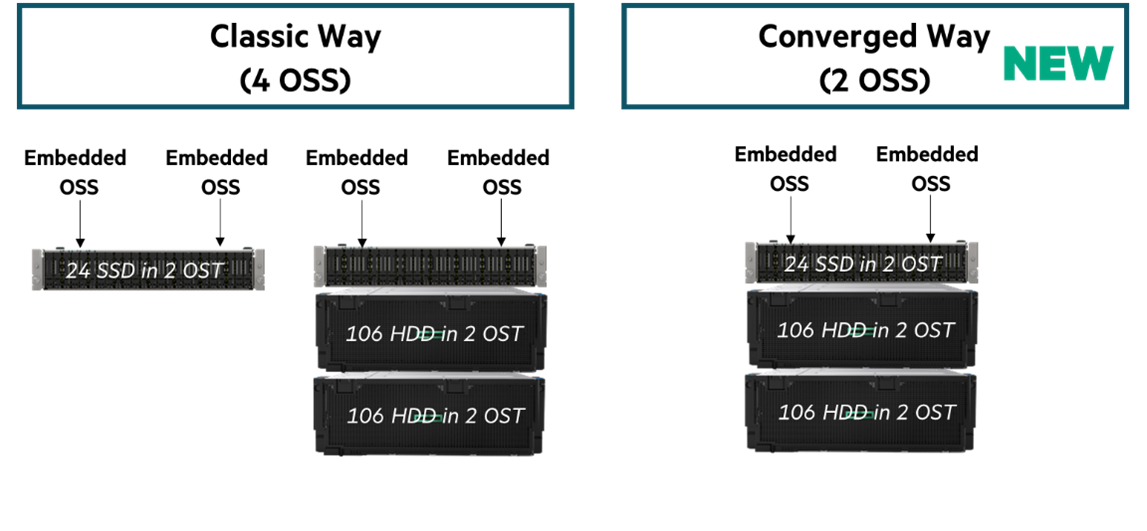

This new hybrid SSU has two Object Storage Servers (OSS) with each OSS node providing one object storage target (OST) device with NVMe SSDs for performance and two HDD-based OST devices for capacity—in a converged architecture as shown in the graphic below.
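The layout of the hybrid SSU can be sketched as a small Python model. This is only an illustration of the description above: the class and field names are hypothetical and do not correspond to any HPE or Lustre API. Only the counts (two OSS nodes, each with one NVMe-based OST and two HDD-based OSTs) come from the text.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class OST:
    """One object storage target; media is 'nvme' or 'hdd' (hypothetical labels)."""
    media: str


@dataclass
class OSS:
    """One Object Storage Server of the hybrid SSU:
    one NVMe-based OST for performance, two HDD-based OSTs for capacity."""
    osts: List[OST] = field(
        default_factory=lambda: [OST("nvme"), OST("hdd"), OST("hdd")]
    )


@dataclass
class HybridSSU:
    """A hybrid Scalable Storage Unit with two OSS nodes."""
    servers: List[OSS] = field(default_factory=lambda: [OSS(), OSS()])

    def count_osts(self, media: str) -> int:
        """Count the OSTs of a given media type across both OSS nodes."""
        return sum(
            1 for oss in self.servers for ost in oss.osts if ost.media == media
        )


ssu = HybridSSU()
print(ssu.count_osts("nvme"), ssu.count_osts("hdd"))
```

Under this reading, one hybrid SSU contributes two flash OSTs and four HDD OSTs to the file system.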

This is a classic example of innovating first with leadership computing sites like Oak Ridge National Laboratory (ORNL) and then making the new capabilities generally available to the mainstream HPC market.

The first ClusterStor system was introduced 11 years ago at ISC11 in Hamburg. Who would have thought then that 11 years later it would power both the largest supercomputer in the world, Frontier, and the largest supercomputer in the European Union, LUMI? Happy birthday, ClusterStor!

A new way to architect for maximum cost effectiveness

Another new capability we’re introducing is support for OpenZFS as an additional back-end file system option for Cray ClusterStor E1000 storage systems, enabling even more flexible and cost-effective storage designs.

We now support two back-end file systems for persistence, each with different strengths:

- LDISKFS provides the highest performance, in both throughput and IOPS

- OpenZFS provides a broader set of storage features, such as data compression

The combination of both back-end file systems creates the most cost-effective solution for architecting a single shared namespace for clustered high-performance compute nodes running modeling and simulation (mod/sim), AI, or high-performance data analytics (HPDA) workloads. (See graphic below.)

This new capability enables you to drive the best performance from the fast NVMe flash pool while providing extremely cost-effective storage capacity from the compressed HDD pool—all in the same namespace.
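To make the capacity argument concrete, here is a minimal Python sketch of how compression on the HDD pool changes the effective capacity and the cost per usable terabyte of a single namespace. It assumes the flash pool is uncompressed and only the HDD pool is compressed. Every number in it (pool sizes, prices, compression ratio) is a made-up placeholder, not an HPE figure, and the function names are hypothetical. Real compression ratios depend heavily on the data.

```python
def effective_capacity_tb(raw_tb: float, compression_ratio: float = 1.0) -> float:
    """Logical data a pool can hold; a ratio of 1.0 means no compression."""
    if raw_tb < 0 or compression_ratio <= 0:
        raise ValueError("raw_tb must be >= 0 and compression_ratio must be > 0")
    return raw_tb * compression_ratio


def cost_per_effective_tb(
    raw_tb: float, cost_per_raw_tb: float, compression_ratio: float = 1.0
) -> float:
    """Total pool cost divided by the logical capacity it provides."""
    total_cost = raw_tb * cost_per_raw_tb
    return total_cost / effective_capacity_tb(raw_tb, compression_ratio)


# Hypothetical example values, chosen only to illustrate the arithmetic.
flash_raw_tb = 100.0        # NVMe flash pool, assumed uncompressed
hdd_raw_tb = 1000.0         # HDD pool, assumed compressed
hdd_compression = 1.5       # assumed ratio; workload dependent

namespace_tb = (
    effective_capacity_tb(flash_raw_tb)
    + effective_capacity_tb(hdd_raw_tb, hdd_compression)
)
print(f"Effective namespace capacity: {namespace_tb} TB")

# With a placeholder price of 30 per raw TB, compression lowers the
# cost per usable TB of the HDD pool from 30 to 20.
print(cost_per_effective_tb(hdd_raw_tb, 30.0, hdd_compression))
```

The point of the sketch is only the relationship: for a fixed raw capacity and price, the cost per usable terabyte falls in proportion to the compression ratio actually achieved.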

Many more new performance- and efficiency-related capabilities, such as larger storage drives (30 TB SSD and 20 TB HDD) and NVIDIA Magnum IO GPUDirect® Storage (GDS) support, are now available for the Cray ClusterStor E1000 storage system. When combined with NVIDIA networking and GPUs in dense GPU servers like the HPE Apollo 6500 Gen10 Plus system, GDS support increases storage performance and accelerates the AI workflow by creating a direct data path between the Cray ClusterStor E1000 storage system and GPU memory.

For details, please refer to the QuickSpecs.

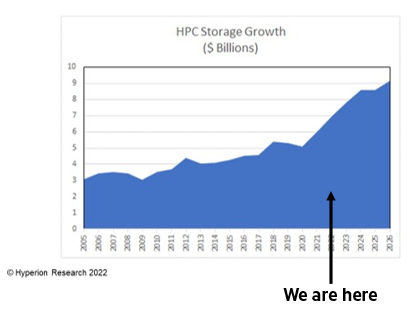

Cost effectiveness will become critical in the coming years

Hyperion Research shared at the HPC User Forum in March 2022 that HPC storage spending is forecast to “go into hypergrowth” in the coming years.

HPC storage has already been the fastest-growing customer spending category in recent years, growing faster than CPU/GPU compute.

That disproportionate spending growth is forecast to accelerate even further. It means that HPC storage will consume an ever-increasing portion of the overall system budget for HPC or AI infrastructure.

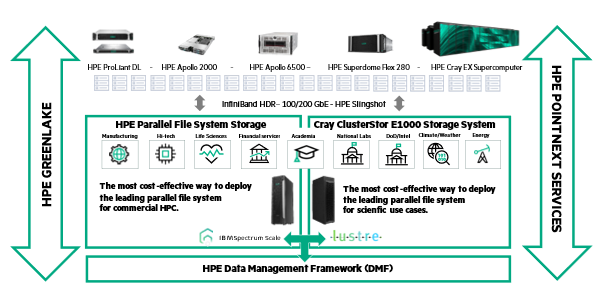

At HPE, we saw this coming years ago and took action by assembling the intellectual property and expertise to provide not only fast parallel storage but also the most cost-effective way to feed CPU and GPU compute clusters with their data, all while giving customers the freedom to choose the parallel file system they prefer: Lustre or IBM Spectrum Scale.

This is why we introduced the Lustre-based Cray ClusterStor E1000 storage system in 2020 and the IBM Spectrum Scale-based HPE Parallel File System Storage the following year. HPE Data Management Framework manages and protects the data with native managed namespace support for both Lustre and IBM Spectrum Scale (and, more recently, NFS), with the ability to move data seamlessly between heterogeneous namespaces.

Customers love the fact that they can have “one hand to shake” with HPE, from procurement to support, for their full HPC or AI infrastructure stack: from software to CPU/GPU compute connected with low-latency interconnects to their shared storage file systems.

They also like that HPE gives them the choice of how to source all of this: owned, financed, or “as-a-service” with HPE GreenLake for HPC.

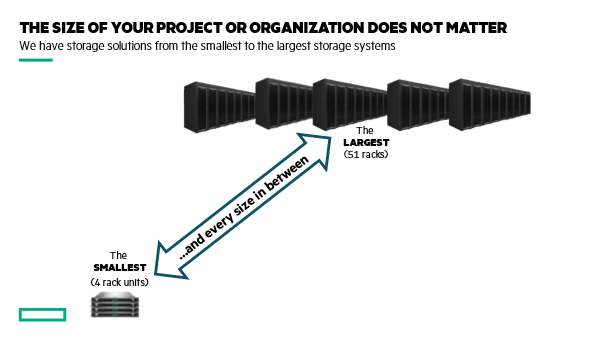

Our HPC storage portfolio addresses the needs of organizations of all sizes. You can start small and then scale to wherever you need to go.

When Aleph Alpha, a German AI startup, needed an end-to-end AI system to train their multimodal AI, which includes natural language processing (NLP) and computer vision, they adopted the recently launched HPE Machine Learning Development System with four 1U flash servers of HPE Parallel File System Storage, knowing that they can scale out in increments of one rack unit by simply adding more 1U servers to the namespace. Today, four rack units is the “low watermark” to start consuming parallel and cost-effective storage from HPE.

The 51 storage racks of the previously mentioned center-wide parallel file system at the Oak Ridge Leadership Computing Facility (OLCF) represent the current “high watermark.”

We can design the perfect solution for your specific needs for everything in between (and beyond).

If you are currently facing one or more of the HPC/AI storage challenges below, don’t wait any longer:

- Job pipeline congestion due to input/output (I/O) bottlenecks, leading to missed deadlines and attrition of top talent

- High operational cost of multiple “storage islands” due to scalability limitations of current NFS-based file storage

- Exploding costs for fast file storage—at the expense of GPU and/or CPU compute nodes or of other critical business initiatives

- Frustrating “finger pointing” of compute and storage vendors during problem identification and problem resolution

Ready for more?

View the infographic: Accelerate your innovation with HPC

Read the white paper: Spend less on HPC/AI storage and more on CPU/GPU compute

Contact your HPE representative today and request your budgetary price information for your next-gen file storage requirements!

Uli Plechschmidt

Hewlett Packard Enterprise

twitter.com/hpe_hpc

linkedin.com/showcase/hpe-ai/

hpe.com/us/en/solutions/hpc

Uli leads the product marketing function for high performance computing (HPC) storage. He joined HPE in January 2020 as part of the Cray acquisition. Prior to Cray, Uli held leadership roles in marketing, sales enablement, and sales at Seagate, Brocade Communications, and IBM.
