
Why HPE EPA is the right architecture for future data center needs

Learn why the HPE Elastic Platform for Analytics (EPA) is the right architecture to migrate current siloed Hadoop architecture into a more flexible, modular architecture to support the future needs of your data center.

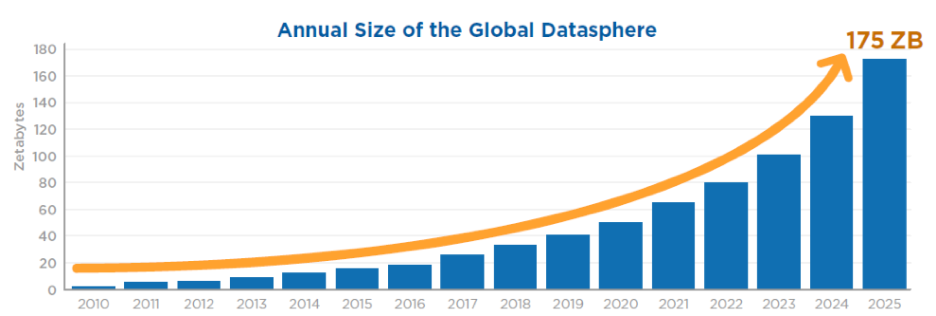

Today, we are witnessing amazing growth in information. IDC predicts that the global datasphere, the sum of all digital data produced by humankind, will reach an astonishing 175 ZB in 2025.* (For the record, one zettabyte is equivalent to a million petabytes.)

IDC predicts that the global datasphere will grow from 33 Zettabytes in 2018 to 175 Zettabytes by 2025.*
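To put those two IDC figures in perspective, the short calculation below derives the compound annual growth rate they imply and double-checks the zettabyte-to-petabyte conversion. It is only a back-of-the-envelope sketch: the variable names are illustrative, and the only inputs are the 33 ZB (2018) and 175 ZB (2025) values quoted above.

```python
# Compound annual growth rate implied by the IDC figures quoted above.
start_zb, end_zb = 33.0, 175.0  # datasphere size in zettabytes (2018, 2025)
years = 2025 - 2018

cagr = (end_zb / start_zb) ** (1 / years) - 1
print(f"Implied growth: {cagr:.1%} per year")

# Unit check: 1 ZB = 10**21 bytes and 1 PB = 10**15 bytes.
pb_per_zb = 10**21 // 10**15
print(f"1 ZB = {pb_per_zb:,} PB")
```

In other words, the forecast corresponds to the datasphere growing by roughly a quarter every year.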

The reason for this growth is that data is transforming the way we live, work, and play.

- Companies leverage data to improve customer experiences, open new markets, and make employees and processes more productive, while creating new sources of competitive advantage.

- People monitor activities, produce content, and share information and media on social media.

- Machines and sensors produce data, tracking the status of equipment, environments, supply chains, foods, and more.

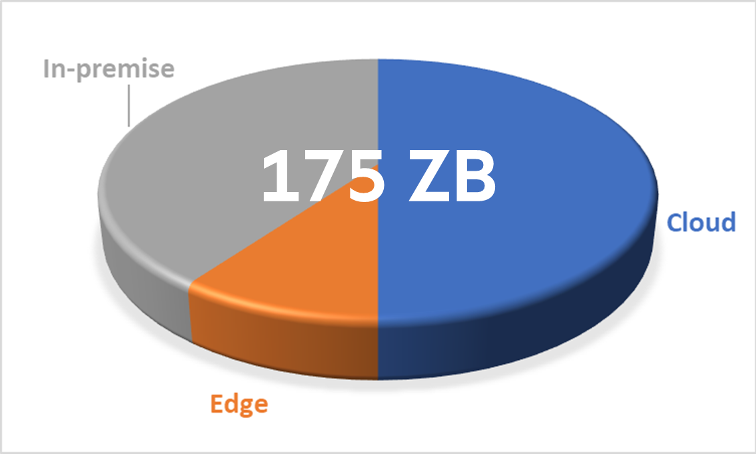

While data may be generated from a huge variety of sources, data will be stored in three primary locations: public cloud, company data center (on premises or core) and edge devices.

By 2025, IDC predicts that half of all data will be stored in the public cloud and 40% in private data centers, while the remaining 10% will be stored in edge devices (including consumer devices such as cameras, phones, and computers).*

Human Datasphere in 2025 (Source: IDC*)

Even as the role of public clouds continues to grow, a big portion of data (75 ZB in 2025) will continue to be stored in private data centers.

By 2025, IDC predicts that 40% of the whole datasphere (about 75 ZB) will be stored in private clouds and data centers.*

Looking at this number, the natural question is: how will enterprises store this enormous quantity of data? The answer is that future enterprise data centers will need a mix of next-generation, scale-out storage solutions (e.g. scale-out object, NAS) combined with HDFS infrastructures. Hadoop, and HDFS technologies in particular, will continue to play an important role in future data centers. The huge quantity of data already stored in on-premises enterprise data lakes and the investment already made in Hadoop and HDFS technologies point to the continued need for those environments. It is not only a problem of amortizing existing investments. It is also a problem of data gravity! Moving a PB of data from one storage platform to another requires at least a few weeks of planning and additional weeks for deployment and integration. Moving data away from a 10 PB Hadoop cluster can easily require a year or even more.

Due to data gravity, Hadoop and HDFS in particular will continue to play an important role in future enterprise data centers.
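A rough calculation helps show why data gravity is real even before planning and integration are counted. The sketch below estimates only the raw copy time for a given data volume over a single network link. The function name `transfer_days` and the 70% sustained-efficiency figure are assumptions chosen for illustration, not measured values; the link speeds are the 25G, 40G, and 100G options this article mentions for EPA networking.

```python
def transfer_days(petabytes: float, link_gbps: float, efficiency: float = 0.7) -> float:
    """Days needed to copy `petabytes` over one link.

    `efficiency` is the assumed fraction of line rate sustained end to end
    (a hypothetical value; real throughput depends on disks, protocol, and load).
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = petabytes * 1e15 * 8                      # decimal petabytes to bits
    seconds = bits / (link_gbps * 1e9 * efficiency)  # sustained bits per second
    return seconds / 86_400                          # seconds per day


for pb in (1, 10):
    for gbps in (25, 40, 100):
        print(f"{pb:>2} PB over {gbps:>3} Gb/s: {transfer_days(pb, gbps):6.1f} days")
```

Under these assumptions, the copy alone takes days for 1 PB and weeks for 10 PB on the slower links, before any of the planning, validation, and cut-over work described above.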

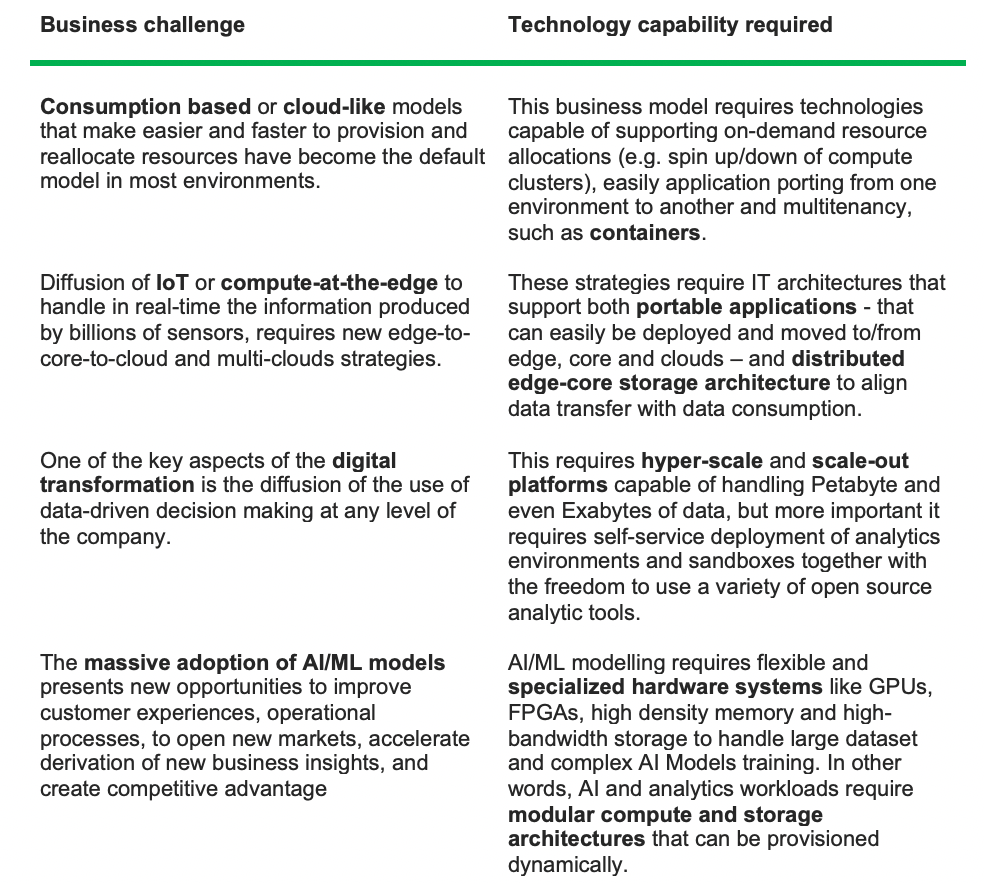

So, the real question is: How can IT support digital transformation leveraging new technology trends and using existing Hadoop data lakes?

Before answering this question, I’ll quickly summarize the main business challenges of any digital transformation and share customer needs in terms of technological capabilities.

In summary, enterprises need the capabilities to run multi-tenant application clusters on a variety of technologies (such as GPU and hardware accelerators) to support different workloads (such as batch analytics, AI/ML training, and streaming). These application clusters need to run in the public cloud, the core, or at the edge. And they need to be able to access data on multiple Hadoop distributions and scale-out data stores, avoiding data duplication or replication.

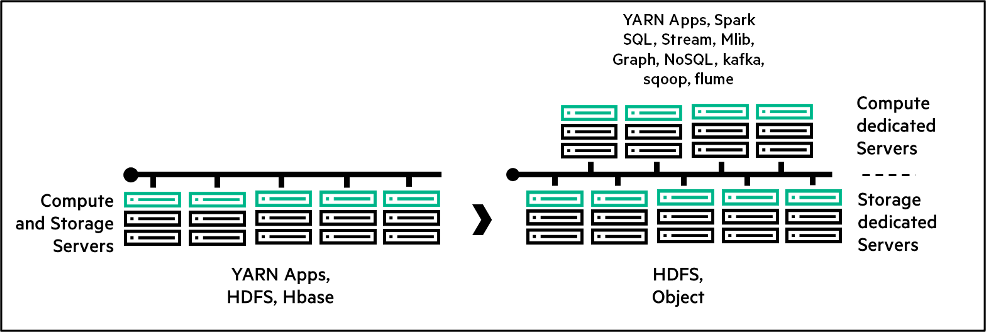

These requirements present a significant challenge to the traditional frameworks used to support Hadoop in the majority of enterprises, where collocated compute and storage prevent either resource from scaling independently, where multi-tenancy of workloads creates scheduling and performance challenges, and where specialized hardware can't be easily added to an existing cluster.

Having long been aware of these limitations, HPE introduced the HPE Elastic Platform for Analytics (EPA) to overcome them. HPE EPA leverages standard Hadoop and YARN to enable enterprises to eliminate the limitations of a traditional symmetric Hadoop deployment and realize all the benefits of an asymmetric, modular architecture.

HPE Elastic Platform for Analytics (EPA) allows you to reconfigure traditional symmetric Hadoop deployments into asymmetric, modular ones that support new technological trends.

Why is the HPE Elastic Platform for Analytics the optimal platform for your workloads?

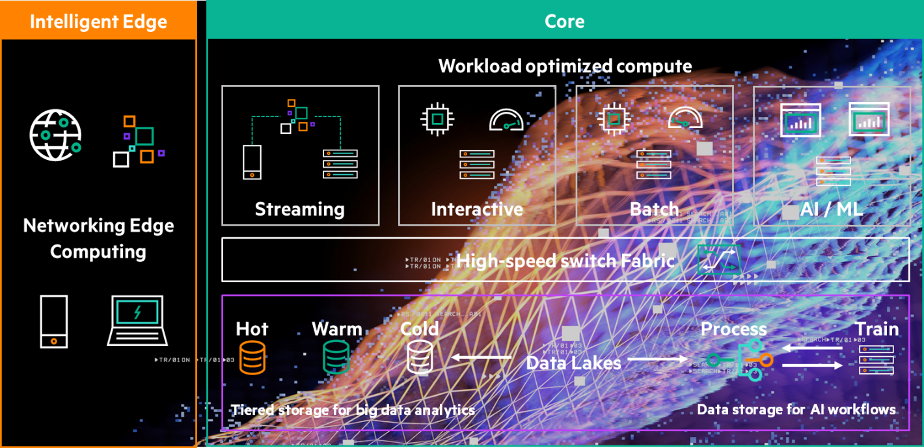

The EPA is designed as a modular infrastructure foundation to address the need for a scalable multi-tenant platform, by enabling independent scaling of compute and storage through infrastructure building blocks that are workload optimized.

HPE Elastic Platform for Analytics offers an “asymmetric” architecture and a different approach to building a data pipeline optimized for big data analytics.

- Asymmetric architectures consist of separate, scalable blocks of compute and storage resources, connected via HPE high-speed network components (25G, 40G, or 100G), along with integrated management software and bundled support.

- HPE EPA asymmetric architecture allows you to combine heterogeneous compute/storage blocks (e.g. AI-optimized, high-storage density, memory optimized, latency optimized for real-time analytics, standard compute, etc.) to optimize for each workload's requirements. As workload profiles change, you can add the right compute or storage blocks to satisfy the new profiles' needs. All these blocks can operate within the same cluster and share the same data.

- HPE EPA building blocks provide the flexibility to optimize each workload and access the same pool of data for batch, interactive and real-time analytics.

- This architecture can span from edge to core and to cloud.

HPE Elastic Platform for Analytics

The benefit of an HPE elastic design is that it enables you to:

- Independently scale compute and storage nodes as business requirements change

- Run multiple workloads on the same cluster

- Reduce cost and security ramifications of having multiple copies of the data on isolated workload clusters

- Repurpose compute nodes to support new services and application requirements from the business
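The value of scaling compute and storage independently can be illustrated with a small sizing comparison. This is a minimal sketch and not the HPE sizing tool: the `Block` type, the three block definitions, and all core and capacity numbers are hypothetical values chosen only to make the arithmetic visible.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    """A hypothetical building block; the specs below are illustrative only."""
    name: str
    cores: int
    storage_tb: int


WORKER = Block("symmetric worker", cores=32, storage_tb=48)
COMPUTE = Block("compute block", cores=64, storage_tb=0)
STORAGE = Block("storage block", cores=16, storage_tb=200)


def symmetric_nodes(need_cores: int, need_tb: int, worker: Block) -> int:
    """Identical nodes must satisfy whichever demand is larger."""
    return max(math.ceil(need_cores / worker.cores),
               math.ceil(need_tb / worker.storage_tb))


def asymmetric_nodes(need_cores: int, need_tb: int,
                     compute: Block, storage: Block) -> tuple[int, int]:
    """Compute and storage blocks are sized independently of each other."""
    return (math.ceil(need_cores / compute.cores),
            math.ceil(need_tb / storage.storage_tb))


# A compute-heavy workload: the symmetric cluster over-provisions storage.
print(symmetric_nodes(1280, 960, WORKER))             # 40
print(asymmetric_nodes(1280, 960, COMPUTE, STORAGE))  # (20, 5)
```

With these made-up numbers, the symmetric cluster needs 40 identical nodes to meet the core count and ends up carrying twice the storage required, while the asymmetric layout adds only the blocks each dimension calls for.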

The HPE EPA architecture allows the consolidation of data on isolated workload clusters on a shared and centrally managed platform, providing organizations the flexibility to scale compute and/or storage as required, using modular building blocks for maximum performance and density. HPE EPA building blocks provide the flexibility to optimize each workload and access the same pool of data for batch, interactive and real-time analytics.

HPE EPA allows organizations that have already invested in balanced, symmetric Hadoop configurations to repurpose their existing deployments into a more flexible and modular asymmetric architecture capable of supporting multiple analytics needs, multi-tenancy, and the independent growth of compute and/or storage capacity, without building new clusters.

The figure below highlights some of the different server building blocks within the HPE EPA, categorized by edge and core architectures and highlighting consumption-based service offerings from HPE. Using these blocks, you can build your infrastructure—and as workloads/compute and data storage requirements change, you can easily add new blocks to satisfy the new workload needs.

For more information on HPE EPA building blocks, download this reference architecture: HPE Reference Configuration for Elastic Platform for Analytics (EPA)—Modular building blocks of compute and storage optimized for modern workloads

What makes migrating from traditional Hadoop to HPE EPA easy

Adopting HPE EPA is a straightforward process in which current worker nodes are transformed into storage-only nodes and new compute nodes are added to the cluster. (Note: An alternative to adding new compute nodes is to convert existing worker nodes into compute nodes.)

This transformation is done in three main steps:

- Add configuration groups to YARN

- Add new nodes (compute)

- Remove unwanted services from the worker nodes (now storage nodes)

Example of how to migrate from traditional Hadoop deployment to HPE EPA
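The three steps above can be sketched as a small in-memory model. This is not an Ambari, Cloudera, or HPE API: the `Node` class, the `migrate` function, and the group labels are hypothetical names used only to show how node roles change. The one assumption about Hadoop itself is that worker nodes run the HDFS DataNode and YARN NodeManager services.

```python
from dataclasses import dataclass, field

HDFS_DATANODE = "DataNode"        # HDFS storage service
YARN_NODEMANAGER = "NodeManager"  # YARN compute service


@dataclass
class Node:
    name: str
    services: set[str] = field(default_factory=set)
    config_group: str = "default"


def migrate(workers: list[Node], new_compute_names: list[str]) -> list[Node]:
    """Return the cluster layout after the three migration steps."""
    # Step 1: introduce separate configuration groups for the two roles
    # (modeled here simply as labels on each node).
    storage_group, compute_group = "storage", "compute"

    # Step 2: add new compute nodes that run YARN only.
    compute = [Node(name, {YARN_NODEMANAGER}, compute_group)
               for name in new_compute_names]

    # Step 3: remove YARN from the former workers, leaving storage-only nodes.
    storage = [Node(w.name, w.services - {YARN_NODEMANAGER}, storage_group)
               for w in workers]
    return storage + compute


workers = [Node(f"worker{i}", {HDFS_DATANODE, YARN_NODEMANAGER}) for i in (1, 2)]
for node in migrate(workers, ["compute1"]):
    print(node.name, sorted(node.services), node.config_group)
```

The data never moves in this model: the former workers keep their DataNode service, so HDFS blocks stay where they are while compute capacity is added alongside them.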

Here's an important factor to keep in mind: HPE professional services has extensive experience in supporting customers who are transforming their traditional Hadoop environments with HPE EPA.

How your organization can benefit from HPE EPA

Benefits include:

- HPE EPA allows existing Hadoop clusters to support new technology trends such as hardware accelerators, containers, scale-out software-defined storage, and hyperconverged infrastructures, without moving data. HPE EPA allows enterprises to easily transform current “siloed” Hadoop clusters into modular building blocks that provide the flexibility to optimize any workload and to access the same pool of data for batch, interactive, and real-time analytics.

- HPE EPA can be deployed everywhere: cloud, core, and edge. HPE EPA compute and storage nodes can be geographically distributed, including at disaster recovery sites and edge locations.

- HPE EPA supports multi-tenancy workloads and container deployment.

- HPE EPA builds on HPE’s long experience with EPA deployments. HPE has created an extensive number of reference architectures and configuration documents to support a wide range of use cases and workloads.

- HPE EPA uses an HPE sizing tool to size Hadoop clusters based on the applications running on them (e.g. Spark, HBase, Hive, YARN, TensorFlow) and on the compute (e.g. edge, workload-optimized, standard) and storage (e.g. high or standard storage density) building blocks.

Here are additional resources to learn more about HPE EPA:

- Blog on HPE Elastic Platform for Analytics (EPA) with composability for Big Data

- HPE Reference Architecture for AI on HPE Elastic Platform for Analytics (EPA) with TensorFlow and Spark

- HPE Reference Configuration for HPE Elastic Platform for Analytics with composable infrastructure and Cloudera 6.3

- A POWERFUL COMPOSABLE APPROACH FOR YOUR BIG DATA ANALYTICS

- HPE Reference Architecture for Real-Time streaming analytics with HPE Elastic Platform for Analytics (EPA) and MapR Data Platform (MapR)

- HPE Reference Configuration for HPE Apollo 4200 Gen10 with Hadoop 3

- HPE Reference Configuration for networking best practices on the HPE Elastic Platform for Big Data Analytics (EPA) Hadoop ecosystem

*IDC - Data Age 2025 – The Digitalization of the World from Edge to Core

Andrea Fabrizi

Hewlett Packard Enterprise

twitter.com/HPE_Storage

linkedin.com/showcase/hpestorage/

hpe.com/storage

twitter.com/HPE_AI

linkedin.com/showcase/hpe-ai/

hpe.com/us/en/solutions/artificial-intelligence.html
