Enable rapid insights and real-time data analytics pipelines from edge to hybrid cloud

Learn how an enterprise-grade, optimized end-to-end data analytics pipeline solution can help you derive value from your data from edge to hybrid cloud.

IoT. Big Data. Analytics. AI. These are loaded terms that mean different things to different IT departments. As a solutions engineering organization here at HPE, our team regularly meets with customers on these topics. It’s become evident that these terms are underpinned by a single concept: the data pipeline. Data is growing in all dimensions, including its importance to the business, and building an end-to-end data analytics pipeline is the only way to connect the dots and derive value from all that data.

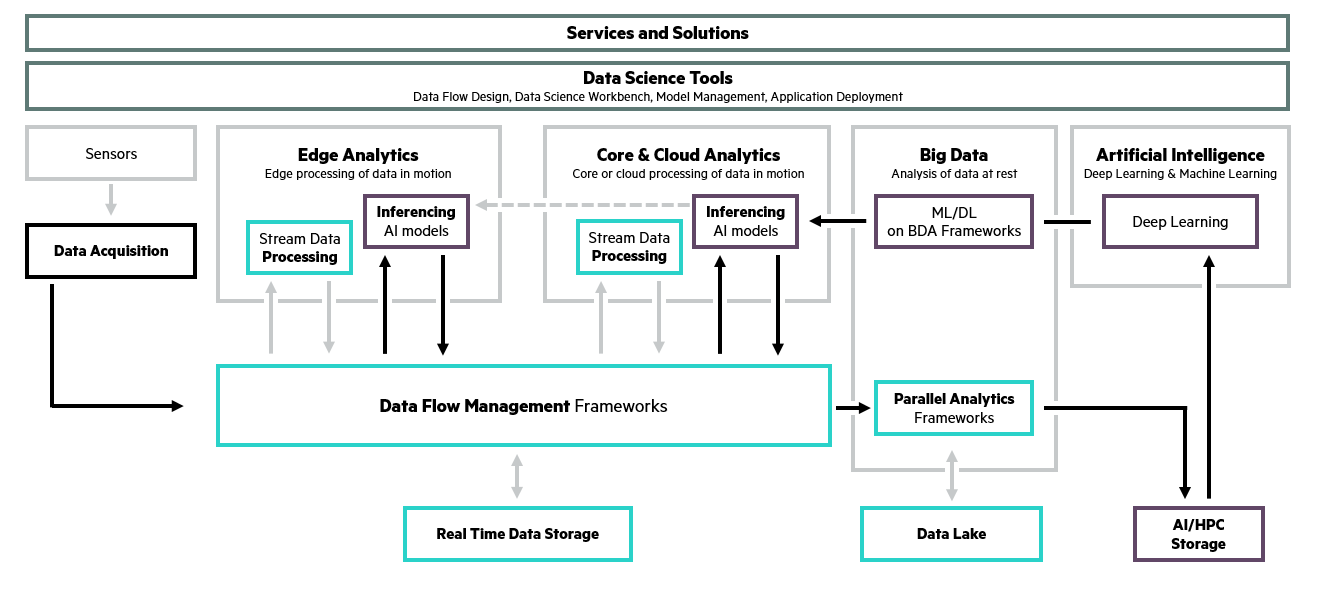

This figure highlights the key elements of a bi-directional data pipeline, spanning from the edge to the cloud.

Unfortunately, as most of you are aware, building such a pipeline is not easy. Speed, scale, data sprawl, security, and framework complexity are just a few of the challenges. Many tools and open-source projects make up each stage of this data pipeline, and your data scientists, data engineers and analysts will have strong opinions on the correct tool(s) for a particular job. These teams need to be able to deploy the tools they need, when and where they need them, at production scale and with high performance. This is the job IT organizations face today, and this is precisely where HPE can help.

HPE has workload-optimized platforms to serve diverse needs across the data pipeline, from inference on the edge to deep learning training in the data center.

Purpose-built server, storage and networking hardware is the foundation for an infrastructure that provides both rapid deployment and scale while delivering the highest levels of performance, quality and availability.

Building on these workload-optimized platforms, HPE has partnered with Red Hat to provide an enterprise-class solution for both platform-as-a-service and containers-as-a-service (PaaS or CaaS), using an optimized end-to-end data analytics pipeline with Red Hat OpenShift Container Platform (OCP). Container technology, and Kubernetes specifically, is quickly becoming the enabling abstraction layer across the data pipeline in hybrid cloud deployments. Containers allow workloads to be rapidly deployed and scaled up or down, and they provide the flexibility to move between on-premises, public cloud and the edge. Combining Red Hat's OCP distribution with platforms like HPE Synergy, HPE 3PAR and HPE Nimble Storage, HPE delivers a turnkey solution for a composable architecture to rapidly deploy containers supporting new application frameworks, resulting in faster insights for the business. A range of service offerings from HPE Pointnext and HPE GreenLake lets you decide whether to purchase upfront (CapEx) or move to a pay-as-you-go consumption model (OpEx).

HPE’s CaaS platform provides the foundation to securely build a data analytics pipeline using the tools you need. The result is an environment where you can spin up new container resources to provide frameworks for model training, data ingestion and data flow. For example, SAP Data Hub can be provisioned using Red Hat OCP to help connect the disparate data sources in your environment while maintaining data lineage and data governance.

SAP Data Hub is a data orchestration solution that helps you discover, transform, enrich and analyze different types of data across your environment. SAP Data Hub lets you create visual data flow pipelines that connect streams of data, from data flowing from your edge devices to data that resides in HANA or sits in your Hadoop or S3 object data stores. With a complete picture of your organization, you can securely build data science models or analytics queries for your data scientists or business analysts. These are the tools your business needs to move fast.

SAP Data Hub is just one example of where HPE and Red Hat OCP can provide a platform for you to continue to innovate and build your next-generation data pipelines. As more frameworks and software stacks move to support Kubernetes, having an enterprise-ready CaaS architecture will allow you to build your edge-to-cloud data pipelines and connect your data sources to derive the most value from your data.

Learn more from our Reference Architectures. And please reach out to your HPE representative if you would like to discuss this further.

Insights Experts

Hewlett Packard Enterprise

twitter.com/HPE_Servers

linkedin.com/company/hewlett-packard-enterprise

hpe.com/servers
