Autoencoders and other neural nets: an introduction to bespoke AI

By Curt Hopkins, Managing Editor, Hewlett Packard Labs

There is hardly a new manufacturing facility being built these days that does not seek to employ the concepts of the smart factory. These include the manufacturing line as the edge in edge computing, with an emphasis on AI to manage its running and maintenance. The manufacturing sector is well aware that it needs to address AI as it builds out. But what may not be obvious is that off-the-rack AI usually will not do the trick. For a smart factory, AI has to be bespoke.

The metaphor Tom Bradicich favors for his role in advising on AI is that of a chef de cuisine.

“If I'm going to be your consultant on cuisine, I must know a lot about what you like and the relationship between that and your health,” says Bradicich, HPE VP, Fellow, and recently appointed head of the IoT and Edge Lab and Center of Excellence. “In order to serve you well, I have to know the traditions and technologies of my own cuisine. It’s not like showing up at a drive-in burger joint, which may offer just three main items. Contrariwise, the demand for AI compels an extensive menu of choices tailored for the occasion.”

We’re not talking In-N-Out, we’re talking Mirazur.

Hewlett Packard Enterprise has a great deal of experience creating AI for manufacturing and advising producers on what to use and how to use it. (For a recent peek at how the company manages that, one need only look at its work with Seagate.) But Hewlett Packard Labs is now spinning out new neural net configurations, including autoencoders (a technology that verges on magic, in the Clarkian sense), to create a responsive toolbox for AI solutions. Neural net configuration is one of the most promising and exciting areas of machine learning innovation.

Sergey Serebryakov, a senior research scientist at Labs, is engaged in a predictive analytics project that uses tool instrumentation data and operates without the need for expensive and time-consuming labeled data. The neural net at its core, called an autoencoder, allows factory management to recognize when its line is about to break before the normal warnings arise. It does so through pattern recognition, made possible by the autoencoder’s net structure.

The smart factory

According to Bradicich, a factory becomes smart via “the 3 Cs”: connect, compute, and control.

“The factory is not in the data center where all the computing power has traditionally resided,” says Bradicich. It’s the edge in edge computing. “So to make an edge smart, and specifically a factory smart, we have to first connect the equipment. We then compute via the AI.” In the specific project Serebryakov is leading, that AI is the type of neural network called an autoencoder. This in turn provides the third C, control – over the moving parts of the factory.

“Ultimately the smartness – connecting, computing, and controlling, addressed by neural networks, like autoencoders – increases production and makes it safer, all of course without reduction in quality,” says Bradicich.

Prediction

“Who doesn’t want to predict the future?” asks Bradicich. “Humans have always been enamored with future prediction. From the mysticism of crystal balls, astrology, and palm reading, to when am I going to get my next raise? Will this person marry me? Will I be healthy? How much money will I need for retirement? We crave knowledge of the future.”

Of course the owners and managers of factories want the same thing. Having a leg up is a distinct advantage in the market. That’s where predictive analysis, a function of AI, comes into its own. And that’s where Serebryakov’s work comes back in.

Factory management has a vested interest in catching malfunctions, breakdowns, and other flaws in its manufacturing systems before they have an opportunity to express themselves in breakage, infrastructure damage, work loss, and even injury. So a neural net designed to predict, one which does not require a ton of time and training, is ideal.

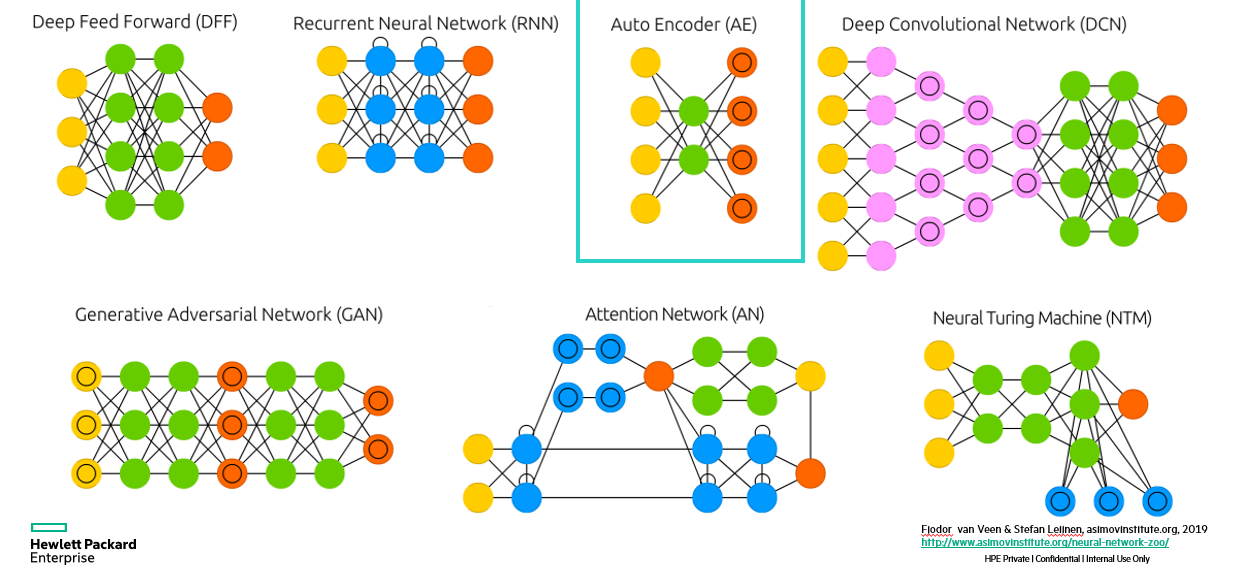

“You can think about neural nets as a composition of different functions,” says Serebryakov. “And depending on which functions you use and how you compose them with each other, they can manifest very different architectures. One may be suitable for detecting cats and dogs in images, another for taking a text in one language and translating that into your other language, and still another to translate an image into text. There are many, many, many possible architectures.”
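The idea of a net as a composition of functions can be made concrete with a small Python sketch. Everything in it is a hypothetical illustration rather than anything from the Labs project: `compose`, `linear`, `relu`, and `sigmoid` are toy one-dimensional building blocks, and the weights are arbitrary numbers chosen by hand.

```python
import math
from functools import reduce

def compose(*layers):
    """Chain functions left to right: compose(f, g)(x) == g(f(x))."""
    return lambda x: reduce(lambda value, layer: layer(value), layers, x)

def linear(weight, bias):
    """A one-dimensional 'layer': scale the input and shift it."""
    return lambda x: weight * x + bias

def relu(x):
    """Pass positive values through, clamp negative values to zero."""
    return max(0.0, x)

def sigmoid(x):
    """Squash any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

# Two hypothetical "architectures" assembled from the same building blocks.
net_a = compose(linear(2.0, -1.0), relu, linear(0.5, 0.0))
net_b = compose(linear(2.0, -1.0), sigmoid)

print(net_a(1.0), net_b(0.5))
```

Swapping one function in the chain, or reordering the chain, gives a model with different behavior, which is the sense in which different compositions yield different architectures.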

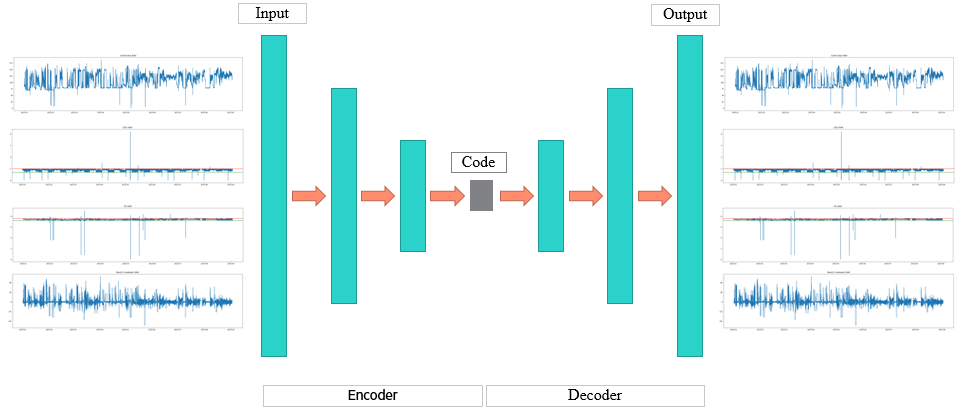

One of those architectures is called an autoencoder, a model that learns to reconstruct its input. In other words, the model is trained so that its output matches its input as closely as possible.

Serebryakov walks us through this process.

“We take several metrics,” he says. “A metric is a time series - a signal with time dependencies associated with one particular sensor like temperature or water pressure. We use the encoder part of the model to compress multiple input metrics into a code – a lower dimensional representation of the input. We then use another part of the model called a decoder to reconstruct the input using its lower dimensional representation – code. Why is that useful? Assuming that a dataset we use to originally train this model contains nominal or normal data, the autoencoder model learns to reconstruct input data corresponding to a nominal regime of operation. If for some input, the reconstruction error (difference between output and input) is large, this tells us that the pattern in input data is unknown (has not been seen during training), and thus, represents an anomaly.”

The long and short of it is that input data the autoencoder cannot reconstruct well may contain an anomaly: a change from the normal state that, if left alone, may build into a debilitating flaw on the factory floor. It is pattern recognition in the service of problem avoidance. An anomaly can be rather broadly defined; it may, for example, indicate a security problem instead. In any case, it is a possible problem hiding in the data.
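The encode, decode, and compare cycle described above can be sketched in a few lines of Python. This is not the Labs model. It assumes NumPy, invented sensor data, and a linear encoder and decoder fitted with a singular value decomposition as a stand-in for a trained neural network. The function names and the 1.5x threshold margin are hypothetical choices made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical nominal data: three sensor metrics driven by one hidden load factor.
load = rng.uniform(0.0, 1.0, size=(500, 1))
mixing = np.array([[1.0, 2.0, -1.0]])
nominal = load @ mixing + 0.01 * rng.normal(size=(500, 3))

# "Training": fit a linear encoder/decoder pair with an SVD (a stand-in for a neural net).
mean = nominal.mean(axis=0)
_, _, vt = np.linalg.svd(nominal - mean, full_matrices=False)
components = vt[:1]  # shape (1, 3): keep a one-dimensional code

def encode(x):
    """Compress three metrics into a one-dimensional code."""
    return (x - mean) @ components.T

def decode(code):
    """Reconstruct the three metrics from the code."""
    return code @ components + mean

def reconstruction_error(x):
    """Distance between each input row and its reconstruction."""
    return np.linalg.norm(decode(encode(x)) - x, axis=1)

# Assumed rule: flag anything 1.5x worse than the worst nominal reconstruction.
threshold = 1.5 * reconstruction_error(nominal).max()

normal_sample = np.array([[0.5, 1.0, -0.5]])  # follows the nominal pattern
odd_sample = np.array([[0.5, 1.0, 0.5]])      # third metric breaks the pattern

for name, sample in [("normal", normal_sample), ("odd", odd_sample)]:
    error = reconstruction_error(sample)[0]
    print(name, "anomaly" if error > threshold else "ok")
```

Because the model is fitted only on nominal data, no labels are needed: the second sample is flagged simply because the decoder cannot reproduce it from the code.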

Here’s a practical example. Imagine a cooling system in a data center which has two sensors, one for temperature and another for the angle of the water valve (which determines how much water is let in and therefore how much cooling takes place). The AI model governing this system ideally comes to understand the relationship between the two: If the heat goes up, the angle should change to allow more water in to cool. If the valve is wide open and the temperature is low, however, something is wrong. An autoencoder should be able to ascertain and communicate that although nothing is currently broken, the current situation is unsustainable and if left alone something will break.
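A toy version of this cooling example shows why looking at each sensor on its own is not enough. The sketch below assumes NumPy and an invented history in which the valve opens by 7.5 degrees for each degree Celsius above 18. The data, the helper names, and the threshold margin are all hypothetical, and a two-metric linear model stands in for a real autoencoder.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical nominal history: the valve opens wider as the temperature rises.
temperature = rng.uniform(18.0, 30.0, size=400)  # degrees Celsius
valve_angle = 7.5 * (temperature - 18.0) + rng.normal(0.0, 2.0, size=400)
history = np.column_stack([temperature, valve_angle])

# Standardize each metric, then keep the single direction that best explains the pair.
mean, std = history.mean(axis=0), history.std(axis=0)
_, _, vt = np.linalg.svd((history - mean) / std, full_matrices=False)
direction = vt[0]

def reconstruction_error(reading):
    z = (np.asarray(reading, dtype=float) - mean) / std
    code = z @ direction                                # encoder: two metrics -> one number
    return float(np.linalg.norm(z - code * direction))  # decoder output vs. input

def within_sensor_ranges(reading):
    """Per-sensor check: is each value inside the range seen in the history?"""
    reading = np.asarray(reading, dtype=float)
    return bool(np.all((reading >= history.min(axis=0)) & (reading <= history.max(axis=0))))

# Assumed rule: flag readings 1.5x worse than the worst reconstruction in the history.
threshold = 1.5 * max(reconstruction_error(row) for row in history)

typical = [24.0, 45.0]     # warm room, valve half open
suspicious = [19.0, 85.0]  # cool room, valve nearly wide open

for reading in (typical, suspicious):
    print(reading, within_sensor_ranges(reading), reconstruction_error(reading) > threshold)
```

Both readings pass the per-sensor range check, but only the second is flagged, because it is the combination of a low temperature and an open valve that the model has never seen.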

The fully-connected autoencoder architecture depicted above is only one possible architecture, and there are a host of possible variants, including the “stacked recurrent autoencoder” and “encoder-decoder autoencoder.”

Additionally, neural nets other than autoencoders can provide smart factory owners with special tools for special circumstances. For instance, a neural net-based model of a factory, a so-called digital twin, can be used to try various combinations of input metrics not typically used in operations to find inputs that result, for instance, in lower energy consumption while preserving all other properties of a manufacturing process.
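The kind of search a digital twin enables can be sketched as follows. The `twin` function here is a hand-written formula standing in for a trained neural net, and the input names (`speed`, `temperature`), the baseline settings, and the search grid are all invented for illustration.

```python
from itertools import product

def twin(speed, temperature):
    """Hypothetical stand-in for a trained digital twin: returns (energy, quality)."""
    energy = 0.5 * speed + 0.2 * temperature
    quality = 100.0 - (speed - 60.0) ** 2 / 10.0 - (temperature - 200.0) ** 2 / 50.0
    return energy, quality

baseline = (70, 215)  # assumed settings used in day-to-day operation
baseline_energy, baseline_quality = twin(*baseline)

# Try combinations of inputs and keep the cheapest one that does not lower quality.
best_settings, best_energy = baseline, baseline_energy
for speed, temperature in product(range(40, 81, 5), range(180, 241, 10)):
    energy, quality = twin(speed, temperature)
    if quality >= baseline_quality and energy < best_energy:
        best_settings, best_energy = (speed, temperature), energy

print("baseline:", baseline, "candidate:", best_settings)
```

The search runs entirely against the model, so settings that would be risky or expensive to try on a live line can be evaluated first in simulation.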

“To achieve the best results in the use of AI in a smart factory environment, you can’t just buy off the rack,” says Serebryakov. “You need to come up with a model that better represents the problem you need to solve or better represents the dataset.” Coming up with such models is one of the things Serebryakov and his colleagues do best.

Curt Hopkins

Hewlett Packard Enterprise

twitter.com/hpe_labs

linkedin.com/showcase/hewlett-packard-labs/

labs.hpe.com
