Meet the vibrant community driving the Lustre file system

It doesn’t matter how big your organization is or what mission or business objective you pursue. If you're using modeling and simulation, artificial intelligence, or high performance data analytics, HPE has the best parallel storage for you. You can start wherever you want, then go wherever you need, without limits.

As a recent study from Hyperion Research found, Lustre® is the most widely deployed parallel file system in high performance computing (HPC).

Open Scalable File Systems, Inc. (OpenSFS) is the nonprofit organization dedicated to the success of the Lustre file system. OpenSFS was founded in 2010 to advance Lustre development, ensuring it remains vendor-neutral, open, and free. Cray, now part of the Hewlett Packard Enterprise family, was a founding member.

We are honored and proud to contribute to the Lustre community on multiple levels.

First, we contribute code to the community. As shared at the recent Lustre Administrators and Developers Workshop (LAD’21), HPE contributed more code commits to the current release of Lustre (2.14) than all other contributing organizations combined, excluding Whamcloud (now part of DDN), which traditionally contributes most of the code. In alphabetical order, the organizations that contributed to the 2.14 release were Aeon Computing, Amazon, CEA, Fujitsu, Google, HPE, Intel, Lawrence Livermore National Laboratory, Mellanox, Oak Ridge National Laboratory, Oracle, Seagate, SUSE, Uber, and Whamcloud.

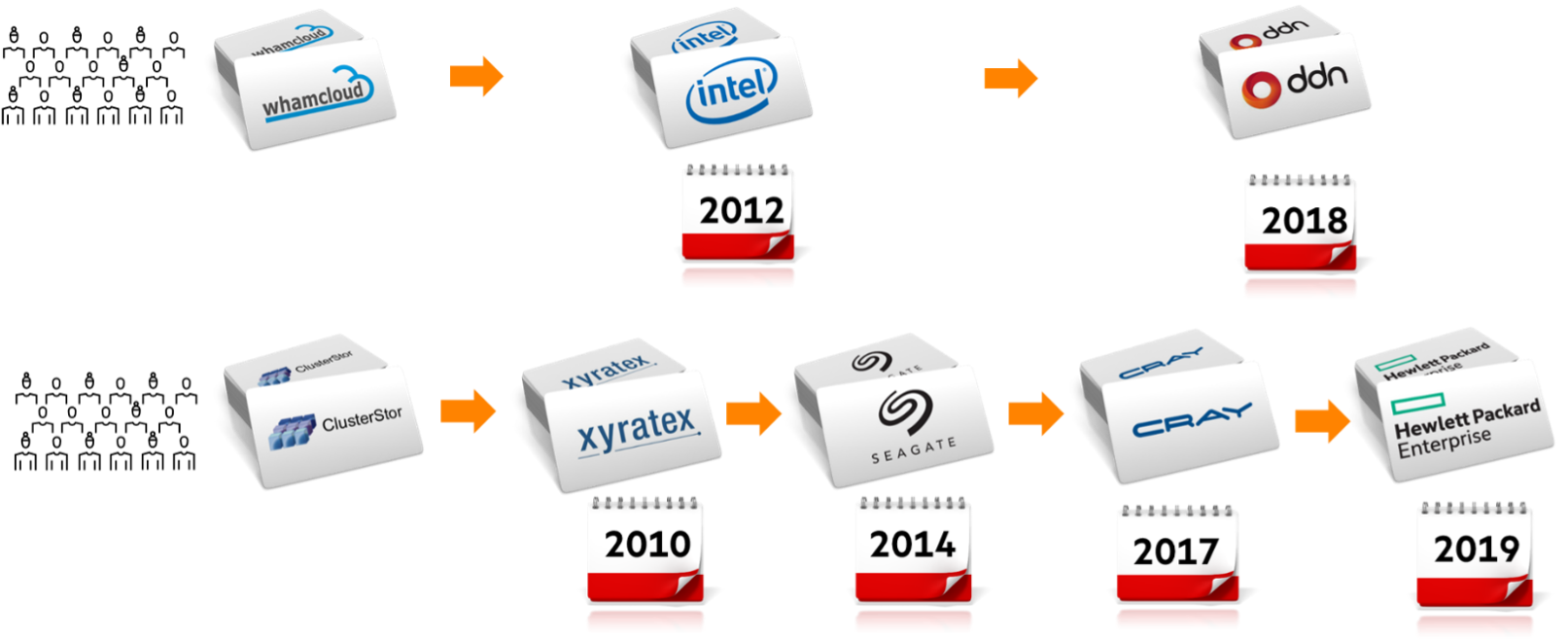

The graphic below shows the history of the two Lustre developer teams that, alongside contributing user organizations, have done most of the development and testing since Lustre was launched in 2003. Over time, the logos on the business cards have changed. The mission has not.

Second, together with our customers, we push Lustre to unprecedented scale. Here are two specific examples of what we mean by “Lustre at scale”:

- The largest All Flash Lustre file system at NERSC

With 35 petabytes of usable All Flash storage capacity, the Cray ClusterStor E1000-based file system is moving the goal posts when it comes to All Flash (NVMe SSD) Lustre systems.

- The largest Lustre file system at Oak Ridge National Laboratory

With 690 petabytes of usable storage capacity, the Cray ClusterStor E1000-based file system is moving the goal posts when it comes to hybrid (NVMe SSD and HDD) Lustre systems.

The real heroes in this groundbreaking work are our customers, who work hand in hand with HPE’s Lustre R&D team during the acceptance and early production phases to push the capabilities of HPC storage in general, and Lustre in particular, beyond what was previously thought possible.

Third, HPE sponsors the annual events of the Lustre community and shares best practices with the community during those events.

There are two key community events each year:

- The Lustre User Group (LUG) in spring in the USA

- The Lustre Administrators and Developers Workshop (LAD) in fall in France

At the Lustre User Group 2021 (LUG 2021), sponsored by both HPE and DDN, the Argonne Leadership Computing Facility gave a presentation titled “Lustre at scale,” sharing lessons learned from the deployment and acceptance of two identical Lustre file systems, “Grand” and “Eagle.” Each is built on Cray ClusterStor E1000 Storage Systems and provides 100 petabytes of usable storage capacity in ten racks. If you want to learn more about Lustre at scale, you can view the recording of the presentation here.

At LAD 2021, HPE contributed the following two talks sharing best practices:

- Troubleshooting LNet Multi-Rail Networks – link to presentation recording here

- An Aged Cluster File System: Problems and Solutions – link to presentation recording here

Both annual community events are a great opportunity for worldwide Lustre users, administrators, and developers to gather and exchange their experiences, developments, tools, best practices and more.

Lustre leadership and positioning demonstrated

When it comes to designing, deploying, and supporting Lustre-based storage systems at scale, nobody has more experience and expertise than HPE. The 1 terabyte per second Cray ClusterStor system of the Blue Waters supercomputer at the National Center for Supercomputing Applications is one example. In production for over eight years now, it has served high-speed data for over 40 billion core hours of jobs submitted by thousands of researchers and scientists across the United States. And it is still counting!

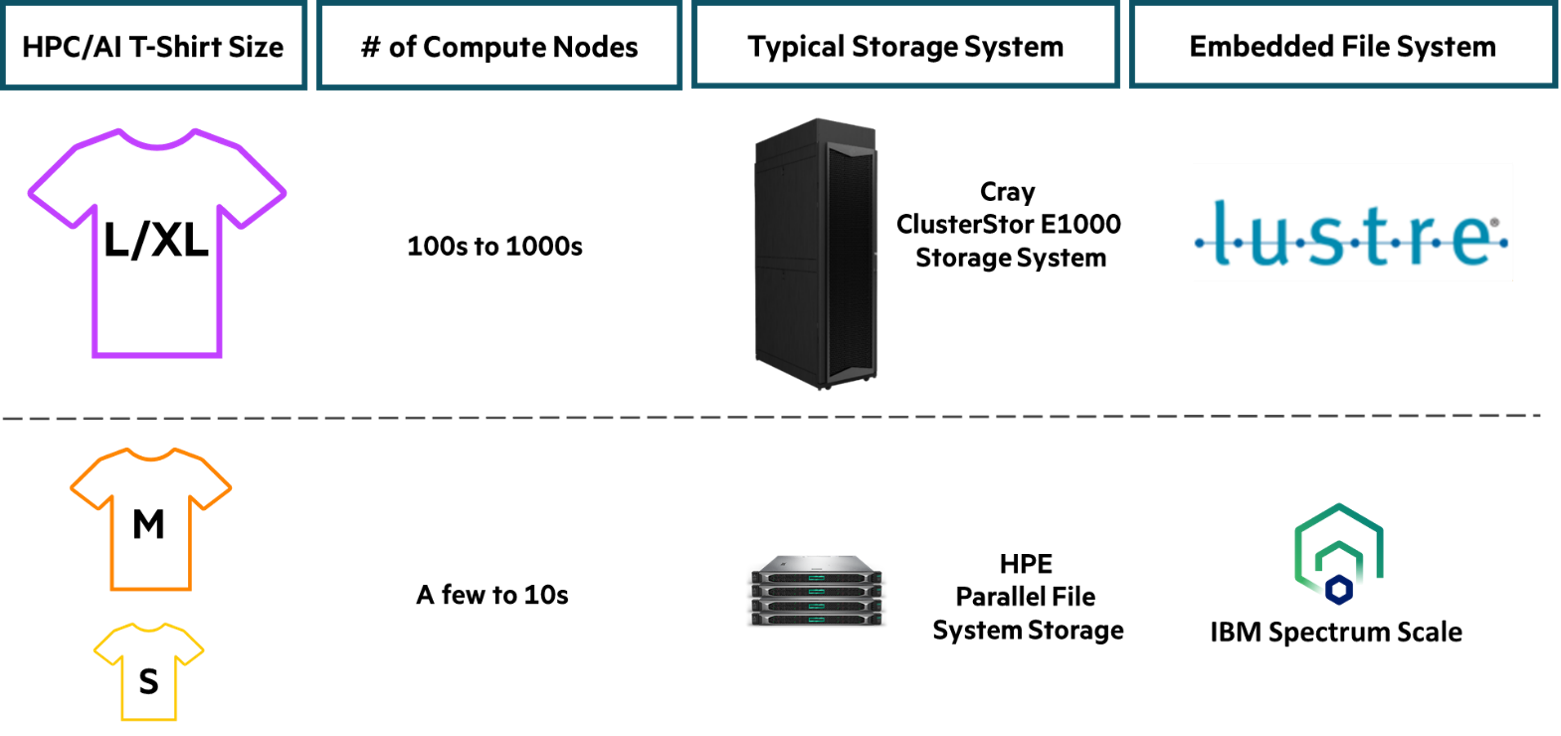

We sometimes get the question: Do you have a simple at-a-glance view of the positioning of your Lustre-based storage systems vs. your IBM Spectrum Scale-based storage systems? We’re using this graphic to answer that question.

Do any of these HPC and AI storage challenges sound familiar? Take action sooner rather than later

We can provide the right HPC/AI storage solution for organizations of every size, industry, and mission area. If you are currently facing one or more of the following HPC/AI storage challenges, do not wait any longer.

- Job pipeline congestion due to input/output (IO) bottlenecks, leading to missed deadlines and attrition of top talent

- High operational cost of multiple “storage islands” due to scalability limitations of current NFS-based file storage

- Exploding costs for fast file storage at the expense of GPU and/or CPU compute nodes or of other critical business initiatives

Contact your HPE representative today for more information.

Learn more now

Business paper: Spend less on HPC/AI storage and more on CPU/GPU compute

Web: New HPC storage for a new HPC era

Uli Plechschmidt

Hewlett Packard Enterprise

twitter.com/hpe_hpc

linkedin.com/showcase/hpe-ai/

hpe.com/us/en/solutions/hpc


Uli leads the product marketing function for high performance computing (HPC) storage. He joined HPE in January 2020 as part of the Cray acquisition. Prior to Cray, Uli held leadership roles in marketing, sales enablement, and sales at Seagate, Brocade Communications, and IBM.
