- Re: Cache Fluctuation

10-11-2014 08:45 PM

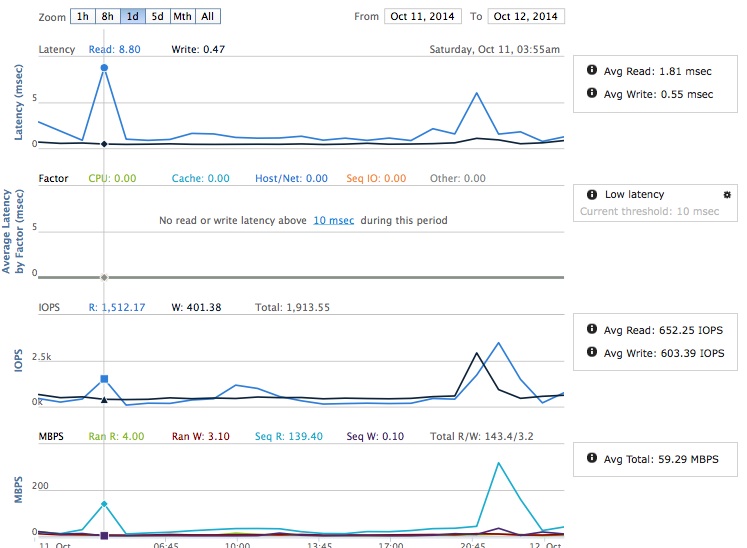

I'm slightly baffled by the extreme peaks and dips in cache usage. There is no major variation in our workload, but look at the following:

Currently this array is supporting a mixed load of 122 VMs, including 20 SQL servers and Exchange, plus the usual web, file and Citrix servers.

Anything I should be looking at?

Regards,

Mark.

10-13-2014 01:17 PM

Solution

Hey Mark,

This is quite a generic view that presents long-term trends. It rolls up and summarises the sample points, which is why I find it less useful.

I tend to drill down to the Month view and then the 5D and 1D views to get an idea of the seasonality of the workload. What you often find is that latencies increase during the night because of a backup workload (you'd expect this from a sequential workload). Sometimes this backup activity can be quite random, depending on the backup application and how it tracks and reads its deltas, so it can cause a spike in random workloads and cache pressure.

In a 5D view this looks very seasonal (often peaking in the early hours of the morning; hovering over the chart shows you the timestamps).

This also can be viewed on the 1Day view:

Note: the latency here is a sequential large block workload so it can be disregarded.

During the production day cache and latencies are <1ms.

In essence, I always take a high-level view with a pinch of salt as it's highly summarised. InfoSight will automatically generate alerts for any long-term latencies due to cache and CPU pressure, so our customers know exactly when it's appropriate to think about scaling systems or to hold off provisioning new workloads to a highly utilised platform.

Ping me a Direct Message tomorrow if you'd like me to look into this in more detail.

Cheers

Rich

10-17-2014 10:28 AM

Thanks Rich,

I'm not concerned with the sequential I/O either, and the reality is that we are almost a third of the way through moving data and VMs off the NetApp. I'm not sure whether to increase the cache on the array or to look at getting an All Flash Shelf, partially populated but with expansion possibilities.

Regards,

Mark.

10-21-2014 03:58 AM

Hi Mark,

I would wait until all your data is migrated from the NetApp before committing to a cache upgrade. It could be that the backups or the migration process are having this effect on the cache, as it is very up and down. Keep a close eye on InfoSight and see whether you are getting a trend of higher latency or not. InfoSight will be very handy in this case as it will tell you accurately what the situation is! Let us know how you get on!

Also, what version of NOS are you on?

Regards,

Ezat

10-21-2014 04:34 AM

We are running 2.1.4.

10-21-2014 10:35 AM

Hi Mark,

What array are you running, and how much used capacity are you expecting once the data has been completely migrated from the NetApp? Also, how much cache do you have in your array today?

Thanks,

Ezat

10-22-2014 12:58 AM

We expect the uncompressed data to be in the region of 65-70 TB when complete. It is a mixed load of App, SQL, Oracle (some on Solaris), an Exchange system (already on Nimble) and general utility file/print servers.

10-22-2014 03:51 AM

Thanks for the info, Mark! I suspect you will need a cache upgrade at some point in the future, purely because you will be migrating a fair amount of data over to the Nimble array, but this will be subject to many factors. I still stick to my original recommendation of keeping an eye on InfoSight as you continue to migrate data, as I don't want you to invest in something unless you need it! I will get your account team to get in contact with you to monitor how things progress over the coming weeks and months.

On a side note, using my own very rough rule of thumb: 70 TB of data at a compression rate of 1.5x (you are getting higher system compression, and even better on your backup data!) will consume approx. 46.7 TB of space. Taking 5% of this as the cache needed to hold your working set gives about 2.3 TB, which sounds about right on average.
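For anyone who wants to replay that arithmetic with their own numbers, here is a minimal Python sketch. The function name and parameter names are made up for illustration, and the 1.5x compression and 5% working-set figures are just the rough rule-of-thumb values from above, not an official sizing formula.

```python
from typing import Tuple


def estimate_cache_tb(
    raw_tb: float,
    compression_ratio: float = 1.5,      # assumed rule-of-thumb value
    working_set_fraction: float = 0.05,  # assumed rule-of-thumb value
) -> Tuple[float, float]:
    """Return (stored_tb, cache_tb) for a given amount of uncompressed data."""
    if compression_ratio <= 0:
        raise ValueError("compression_ratio must be positive")
    # Space consumed on the array after compression.
    stored_tb = raw_tb / compression_ratio
    # Cache needed to hold the assumed working set.
    cache_tb = stored_tb * working_set_fraction
    return stored_tb, cache_tb


# Both ends of the 65-70 TB range mentioned earlier in the thread.
for raw in (65, 70):
    stored, cache = estimate_cache_tb(raw)
    print(f"{raw} TB raw -> {stored:.1f} TB stored, {cache:.1f} TB cache")
```

If the real compression ratio turns out higher than 1.5x, pass it in as `compression_ratio` and the cache estimate drops accordingly.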

The other thing you may want to look at in the interim is ensuring your performance policies are set up correctly for your data types to ensure that cache is not being polluted with data that shouldn't be in there. Your account team can help you look at this.

Regards,

Ezat