Hyper-V 2012R2 Cluster Through SMB 3.0 Shares

10-07-2014 04:43 AM

Is anyone running a Hyper-V 2012 R2 environment in which they front-end their Nimble group with a highly available 2012 R2 file server cluster and use SMB 3.0 shares, rather than mapping iSCSI storage to each clustered host and using CSVs? If so, I am curious how this is working for you.

I am asking because, like an NFS setup with ESXi for example, such a scenario could go a long way towards simplifying an environment and making it considerably easier to scale, both from a host perspective and from a storage management perspective. I am enjoying the simplicity of SMB 3.0 in our lab environment (4-node HV 2012 R2 cluster with 90 or so dev/QA VMs). I have also been finding that the performance of SMB 3.0 over 10Gb is on par with or better than CSVs.

Curious to hear from others who may be running or have experimented with such a solution.

And to add to this quickly: is anyone doing something similar for ESXi, creating a number of larger volumes on their arrays and front-ending their Nimble with an NFS gateway instead of managing iSCSI on each host? I am curious how performance would hold up if the NFS gateway could be kept from becoming a bottleneck.

10-07-2014 10:40 AM

Re: Hyper-V 2012R2 Cluster Through SMB 3.0 Shares

Weston Fraser and Patrick Cimprich are doing exactly that. They are using front-end scale-out file servers with SMB 3.0 for Hyper-V 2012 (with Nimble on the back end). I'm sure they would be willing to share their experiences so far with you.

10-07-2014 03:57 PM

Solution

Yep - Ben's right. We've been doing that very thing for close to a year now with Nimble (and 2 years with other storage). We run a Hyper-V private cloud, and everything is currently running Windows 2012 R2. Our SOFS servers connect to the Nimble via 10Gb/s iSCSI and then serve SMB 3.02 out the front end to our Hyper-V servers.

We started this architecture back with Windows 2012 and went through some major pain while the continuously available file shares feature was maturing in Windows. We ended up logging many of the major bugs that got fixed via patches, update rollups, and ultimately Windows 2012 R2.

We made the switch for the very reasons you cited initially above - i.e. ease of use, a simplified connectivity story, better VM portability, etc.

We're currently running about 500 VMs on a single SOFS + Nimble CS460G-X2 with 1 expansion shelf and an SSD expansion shelf. Perf and stability are both excellent.

Happy to talk with anyone about this if they're interested.

Pat

10-08-2014 07:58 AM

Re: Hyper-V 2012R2 Cluster Through SMB 3.0 Shares

I'm really interested in hearing about your deployment, specifically what RAM/CPU/NIC combination you're using, if you can or are willing to share. If you can't get specific, then generalizations would be fine too; I can extrapolate. We're discussing this internally now, but we don't want to run the risk of buying our own SAS storage and potentially having disk failures etc. handled entirely by Windows (yet). We run multiple Hyper-V clusters and are interested in the idea of having a single OS to manage the storage, because we have multiple storage vendors (we just started standardizing on Nimble). Any pointers you'd be willing to share would be awesome. We love our Nimble storage, but SMB 3.x's continuous availability is something that we're really interested in.

10-08-2014 08:09 AM

Re: Hyper-V 2012R2 Cluster Through SMB 3.0 Shares

Thank you for this information!

I am late getting back to this thread and will follow up in more detail later.

But I think this is a very interesting topic, and I am looking forward to further discussion.

10-08-2014 08:54 AM

Re: Hyper-V 2012R2 Cluster Through SMB 3.0 Shares

Happy to share.

Our SOFS servers are a pair of clustered Dell R520 machines with 2 Intel E5-2440 (2.4GHz) CPUs and 24GB RAM. The spec of the machine isn't very high-end, but it doesn't need to be. With that config supporting 500 VMs, we average around 4000 IOPS to the Nimble, and the CPU averages about 6% with some occasional spikes to 15 or 20%. We have CSV caching enabled, and that helps out a bit, typically saving a decent amount of read IOPS to the array. At 24GB of RAM with CSV cache enabled, we run at around 12GB used (and 12GB free) RAM.
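As a rough back-of-the-envelope check on the figures quoted above, the averages work out as follows. This is only a sketch: the variable names are illustrative, and the inputs are the approximate numbers from the post, not measured values.

```python
# Approximate figures quoted in the post above; all names are illustrative.
vm_count = 500          # VMs served by the SOFS pair
avg_array_iops = 4000   # average IOPS reaching the Nimble array
total_ram_gb = 24       # RAM per SOFS node
used_ram_gb = 12        # RAM in use with CSV cache enabled

# Average back-end IOPS per VM (after the CSV cache has absorbed some reads).
iops_per_vm = avg_array_iops / vm_count

# Fraction of RAM in use on each SOFS node.
ram_used_fraction = used_ram_gb / total_ram_gb

print(f"{iops_per_vm:.1f} IOPS per VM on average")
print(f"{ram_used_fraction:.0%} of RAM in use")
```

An average of this kind says nothing about peaks, which matters for the sporadic dev/test load described in this thread.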

We have dual 10Gb/s NICs for iSCSI back to the Nimble and dual 10Gb/s NICs out the front end for SMB. All Hyper-V hosts also have dual 10Gb/s NICs that carry SMB traffic as well as host and guest traffic, and we use Windows QoS policies to prioritize and throttle certain types of traffic (e.g. live migration traffic). For the record, we've never, ever been anywhere close to network-bound with this network config on our Hyper-V servers. We do not run RDMA NICs or anything like that - just standard Intel 10Gb/s. We have a new config coming in that's got RDMA on the SOFS servers and Hyper-V servers (with additional NICs for guest traffic). We'll be testing on that soon to see if there is any appreciable difference using RDMA vs. non-RDMA.

One important bit - we run dev/test workloads in this environment. We're a consulting company where doing development = generating revenue, so this is far from any sort of second-class workload (it's higher priority than nearly all other systems). That said, the load from these VMs tends to be more sporadic and less consistent than, say, a typical production workload... just mentioning that to give you more context. We also run this same config with other storage for our production workloads.

We too are multi-vendor for storage, and we've standardized on SMB3 as a way to 'equalize' the storage and simplify day-to-day management of the arrays (there basically is none). We just carve off a few large LUNs (~12TB is our current favorite size), present those to SOFS, and run them as continuously available CSVs.

10-08-2014 10:18 AM

Re: Hyper-V 2012R2 Cluster Through SMB 3.0 Shares

Thanks for the info overload! That's hugely helpful. Do you see any reason to increase the RAM in those boxes in order to get more CSV caching? I ask because we have a couple of servers coming off production use that have 96GB installed, and I was thinking of using those for our SOFS boxes.

10-09-2014 01:14 AM

Re: Hyper-V 2012R2 Cluster Through SMB 3.0 Shares

Hi!

We are doing the exact same thing, and we have some thoughts regarding write performance. We have CS240G-X2 systems with 1 shelf on each.

Running an SQLIO write test, we can get very decent results. (See the end of this post.)

But when we do normal Windows Explorer copy jobs or Robocopy jobs from a local disk to a volume on the arrays, the throughput ranges from really bad to OK, say 100MB/s to 360MB/s, sometimes with high latency. Compared to SQLIO's sequential writes this is not very good.

Does anyone else see the same poor performance with copy jobs?

Has anyone enabled aggressive caching if only the SOFS accesses the volumes?

Kind regards,

Dave

---

C:\Temp\nimble>echo "SQLIO sequential write test - increase run times"

C:\Temp\nimble>sqlio -kW -fsequential -t4 -s240 -b64 -o8 -LS -BH -Fparam.txt

sqlio v1.5.SG

using system counter for latency timings, 1948431 counts per second

parameter file used: param.txt

file E:\file1.dat with 2 threads (0-1) using mask 0x0 (0)

file E:\file2.dat with 2 threads (2-3) using mask 0x0 (0)

4 threads writing for 240 secs to files E:\file1.dat and E:\file2.dat

using 64KB sequential IOs

enabling multiple I/Os per thread with 8 outstanding

buffering set to use hardware disk cache (but not file cache)

using specified size: 30000 MB for file: E:\file1.dat

using specified size: 30000 MB for file: E:\file2.dat

initialization done

CUMULATIVE DATA:

throughput metrics:

IOs/sec: 16227.12

MBs/sec: 1014.19

latency metrics:

Min_Latency(ms): 0

Avg_Latency(ms): 1

Max_Latency(ms): 78

histogram:

ms: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24+

%: 3 55 39 3 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

C:\Temp\nimble>timeout /T 120

"---- 8 min ---------"

C:\Temp\nimble>sqlio -kW -fsequential -t4 -s480 -b64 -o8 -LS -BH -Fparam.txt

sqlio v1.5.SG

using system counter for latency timings, 1948431 counts per second

parameter file used: param.txt

file E:\file1.dat with 2 threads (0-1) using mask 0x0 (0)

file E:\file2.dat with 2 threads (2-3) using mask 0x0 (0)

4 threads writing for 480 secs to files E:\file1.dat and E:\file2.dat

using 64KB sequential IOs

enabling multiple I/Os per thread with 8 outstanding

buffering set to use hardware disk cache (but not file cache)

using specified size: 30000 MB for file: E:\file1.dat

using specified size: 30000 MB for file: E:\file2.dat

initialization done

CUMULATIVE DATA:

throughput metrics:

IOs/sec: 17693.71

MBs/sec: 1105.85

latency metrics:

Min_Latency(ms): 0

Avg_Latency(ms): 1

Max_Latency(ms): 79

histogram:

ms: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24+

%: 4 70 25 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

C:\Temp\nimble>timeout /T 120

"---- 15 min ---------"

C:\Temp\nimble>sqlio -kW -fsequential -t4 -s900 -b64 -o8 -LS -BH -Fparam.txt

sqlio v1.5.SG

using system counter for latency timings, 1948431 counts per second

parameter file used: param.txt

file E:\file1.dat with 2 threads (0-1) using mask 0x0 (0)

file E:\file2.dat with 2 threads (2-3) using mask 0x0 (0)

4 threads writing for 900 secs to files E:\file1.dat and E:\file2.dat

using 64KB sequential IOs

enabling multiple I/Os per thread with 8 outstanding

buffering set to use hardware disk cache (but not file cache)

using specified size: 30000 MB for file: E:\file1.dat

using specified size: 30000 MB for file: E:\file2.dat

initialization done

CUMULATIVE DATA:

throughput metrics:

IOs/sec: 17274.21

MBs/sec: 1079.63

latency metrics:

Min_Latency(ms): 0

Avg_Latency(ms): 1

Max_Latency(ms): 56

histogram:

ms: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24+

%: 3 66 29 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

---
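The IOs/sec and MBs/sec figures in the output above are related through the 64KB block size. A minimal sketch of that arithmetic follows; the numbers are copied from the three runs, while the function and variable names are illustrative and the 1 MB = 1024 KB convention is an assumption that happens to match the reported values.

```python
BLOCK_SIZE_KB = 64  # from the -b64 flag in the sqlio command lines above

# (run length in seconds, reported IOs/sec, reported MBs/sec), copied from the output above
runs = [
    (240, 16227.12, 1014.19),
    (480, 17693.71, 1105.85),
    (900, 17274.21, 1079.63),
]

def throughput_mb_per_s(iops, block_kb=BLOCK_SIZE_KB):
    """Throughput in MB/s (assuming 1 MB = 1024 KB) for a given IOPS at a fixed block size."""
    return iops * block_kb / 1024

for seconds, iops, reported in runs:
    computed = throughput_mb_per_s(iops)
    print(f"{seconds:>3}s run: {computed:.2f} MB/s computed vs {reported} MB/s reported")
```

The computed and reported values agree to within 0.01 MB/s, so either column can be used when comparing these runs against the copy-job throughput.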

10-09-2014 09:55 AM

Re: Hyper-V 2012R2 Cluster Through SMB 3.0 Shares

David Jay wrote:

But when we do normal Windows Explorer copy jobs or Robocopy jobs from a local disk to a volume on the arrays, the throughput ranges from really bad to OK, say 100MB/s to 360MB/s, sometimes with high latency. Compared to SQLIO's sequential writes this is not very good.

Copy jobs (and Robocopy without the correct flags) are single-threaded processes, whereas SQLIO generates load from multiple threads (4 threads with 8 outstanding I/Os each in your test). This is the most likely reason why you're not seeing high throughput.
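One way to see why concurrency matters here is Little's law, which ties the number of I/Os in flight to the completion rate and average latency. The sketch below applies it to the first SQLIO run. The function names are illustrative, and the single-I/O case is a purely hypothetical comparison, not a measurement of how Explorer or Robocopy actually issue I/O.

```python
def implied_latency_ms(outstanding_ios, iops):
    """Little's law: average latency = I/Os in flight / completion rate."""
    return outstanding_ios / iops * 1000

def throughput_mb_per_s(outstanding_ios, latency_ms, block_kb):
    """Throughput sustained by a fixed number of in-flight I/Os at a given latency."""
    iops = outstanding_ios / (latency_ms / 1000)
    return iops * block_kb / 1024

# From the sqlio command line: -t4 (threads) and -o8 (outstanding I/Os per thread).
in_flight = 4 * 8
latency = implied_latency_ms(in_flight, 16227.12)  # IOs/sec from the 240-second run
print(f"implied average latency: {latency:.2f} ms")

# Hypothetical comparison: one 64KB I/O in flight at the same latency.
single = throughput_mb_per_s(1, latency, 64)
print(f"one I/O in flight: {single:.1f} MB/s")
```

The implied latency comes out at roughly 2 ms, in line with the SQLIO histogram, which reports whole milliseconds. Real copy tools buffer and use their own I/O sizes, so the single-I/O figure only illustrates the direction of the effect.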

Have you opened a case for Support to take a look at your setup?

twitter: @nick_dyer_

10-09-2014 01:07 PM

Re: Hyper-V 2012R2 Cluster Through SMB 3.0 Shares

Hi!

Yes, we know this. We run Robocopy like this:

robocopy /mir /mt:6 c:\somedir f:\somedir

Still, performance is not great... I just find it quite strange.

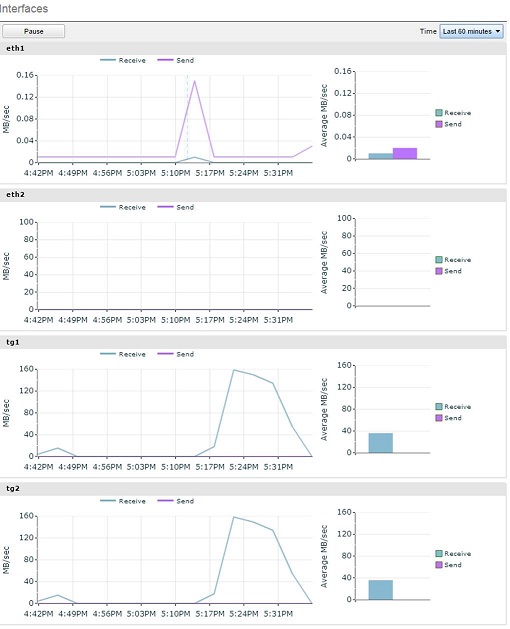

This is the graph from our array during one of these copy job tests.

Yeah, support are having a go at it... During these tests we see latencies of up to 100ms, which is not so good.

We will try file copies from a Linux server to the volumes on the Nimble array tomorrow morning, to see whether this is just an issue with Windows (2012 R2) or not.

/Dave