02-08-2013 02:19 PM

02-12-2013 10:46 PM


Re: What is the best way to prepare my network for my Nimble Storage array?

Some high level best practices:

1) Separate management traffic from data traffic (use a dedicated VLAN for each type of traffic).

2) Configure flow control on the network ports.

3) If you use jumbo frames, make sure they are enabled end-to-end (from the host NIC, through the access switch ports, to the Nimble data interface ports).

4) K.I.S.S. (keep it simple, st*pid): stay with the default settings in ESX and/or on physical hosts (unless tech support explicitly tells you otherwise).
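One common way to check the end-to-end jumbo frame point above is a ping that is not allowed to fragment, sized to fill the MTU exactly. The sketch below only does the arithmetic and builds the command line; it assumes IPv4, a 9000-byte MTU, and the Linux iputils `ping` flags (`-M do`, `-s`, `-c`). The target address is a placeholder, not a real array IP.

```python
IPV4_HEADER_BYTES = 20  # IPv4 header without options
ICMP_HEADER_BYTES = 8   # ICMP echo header


def max_ping_payload(mtu):
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet."""
    payload = mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES
    if payload < 0:
        raise ValueError(f"MTU {mtu} is too small for an IPv4 ICMP echo")
    return payload


def linux_ping_command(target, mtu=9000):
    """Build a Linux ping command that forbids fragmentation (-M do)."""
    size = str(max_ping_payload(mtu))
    return ["ping", "-M", "do", "-c", "3", "-s", size, target]


if __name__ == "__main__":
    # 192.0.2.10 is a documentation address standing in for a data interface.
    print(" ".join(linux_ping_command("192.0.2.10")))
```

If a ping of that size fails while a standard-size ping succeeds, some hop between the host NIC and the array data port is probably not passing jumbo frames.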

02-21-2013 05:47 AM



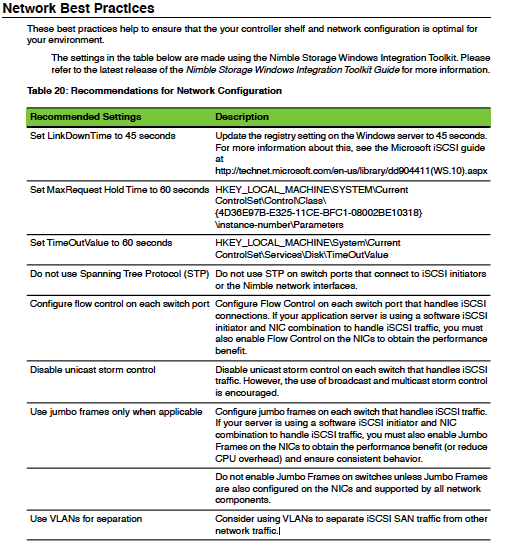

A few more recommendations (current as of 1.4.4.0)

02-28-2013 02:02 PM



Got the following set of tips from Eddie Tang (thanks Eddie):

- A single subnet cannot be used if the switches are not connected or stacked. Otherwise, the number of connections shown in the Admin GUI -> Connections list will not match what is expected. Ping tests between ports will also appear inconsistent (for example, pings from host port 1 to tg1 may fail even though the diagram shows them as connected).

- If iSCSI discovery is placed on the management subnet, every host that uses iSCSI discovery to connect to Nimble volumes must also be routable to the management subnet. This may not always be possible in larger environments, where the management network may be secured and not accessible to all hosts. (A quick note on this one: larger/enterprise shops will almost always have the management network on a separate subnet, so it is best to put iSCSI discovery on the data interface. The network admins will throw a fit if they are asked to route between the management network and the data/private subnets!)

- iSCSI discovery can be assigned to one of the data subnets, with the tradeoff that discovery is lost if that data subnet becomes unavailable (i.e. a switch goes down or a host port is lost).
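To make the routing point concrete, here is a minimal sketch that lists which host iSCSI addresses fall outside the subnet holding the discovery IP, and would therefore need a route to reach it. The function name and all addresses are hypothetical examples; only the standard-library `ipaddress` module is used.

```python
import ipaddress


def hosts_needing_route(discovery_ip, prefix_len, host_ips):
    """Return the host IPs that are outside the discovery IP's subnet."""
    subnet = ipaddress.ip_network(f"{discovery_ip}/{prefix_len}", strict=False)
    return [ip for ip in host_ips if ipaddress.ip_address(ip) not in subnet]


if __name__ == "__main__":
    host_iscsi_nics = ["10.10.1.11", "10.10.1.12"]  # example data-subnet NICs

    # Discovery IP on a separate management subnet: every host needs a route.
    print(hosts_needing_route("10.0.0.50", 24, host_iscsi_nics))

    # Discovery IP on the data subnet: no routing required.
    print(hosts_needing_route("10.10.1.50", 24, host_iscsi_nics))
```

An empty result means every host can reach discovery at layer 2, which is the situation the tips above recommend.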

02-28-2013 03:21 PM



Another point, from Adam Herbert, on ISL vs. stacking switches. On this very same subject, here's my opinion: most enterprise-class switches are stackable, and yes, it is best to stack the switches. If the customer is using Cisco Nexus switches, recommend that they configure vPC (virtual port channel).

From Adam:

Be cautious about just simply adding a couple of ISL links between switches that also uplink into a core. Spanning tree will elect the ports that are closest to the root node for uplinks. The ISL links will have a higher cost and will be disabled unless configuration is done to override this. In this scenario iSCSI traffic will traverse the core rather than the ISL links. This cannot be fixed by simply disabling spanning tree on the uplinks due to a risk of a layer 2 loop. This is why a stacked switch design is better because the stack will share a MAC bridging table.
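Adam's spanning tree point can be illustrated with a simplified path-cost comparison. The sketch assumes the core is the root bridge, both access switches uplink directly to it, and the classic IEEE 802.1D short path costs apply. It ignores bridge ID and port ID tie-breakers and the long cost values used by RSTP/MST, so treat it as a toy model rather than a description of any particular switch.

```python
# Classic IEEE 802.1D short path costs, keyed by link speed in Mbps.
STP_COST = {10: 100, 100: 19, 1000: 4, 10000: 2}


def root_port_choice(uplink_mbps, isl_mbps, neighbor_uplink_mbps):
    """Pick the cheaper path to the root: own uplink, or ISL plus neighbor uplink."""
    direct = STP_COST[uplink_mbps]
    via_isl = STP_COST[isl_mbps] + STP_COST[neighbor_uplink_mbps]
    if direct <= via_isl:
        return ("uplink", direct)
    return ("isl", via_isl)


if __name__ == "__main__":
    # 1G uplinks on both switches, 10G ISL between them.
    print(root_port_choice(1000, 10000, 1000))
```

Even with a faster ISL, the path through the ISL adds the neighbor's uplink cost on top, so each switch keeps its own uplink as the root port. Under default costs the ISL therefore ends up blocked on one side, and switch-to-switch iSCSI traffic goes through the core.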

03-04-2013 09:51 PM


Solution

Here are some best practices to follow when configuring your network. If you use stacked switches, also remember to map your ports and to understand fully what a firmware upgrade does to your network stack. Most stacked switches don't allow rolling updates and require the entire stack to be rebooted. If the reboot takes longer than your timeouts allow, you will need to plan accordingly.

iSCSI Switching best practices

- Disable spanning tree for ports connected to storage devices and storage HBAs/NICs.

- You can use jumbo frames, but expect only a 5-6% improvement on 1G and a 1-2% improvement on 10G networking. The added complexity can make troubleshooting your network harder.

- It is best to get a test environment set up without jumbo frames before converting to jumbo frames. This confirms that everything works before you enable them.

- Enable flow control (FC) on the switch and the connected hosts. The Nimble array will automatically respect your flow control settings.

- Storm control

  - Unicast storm control should be disabled, because iSCSI traffic is unicast and can easily use the entire link in a 1G network configuration.

  - The use of broadcast and multicast storm control is still encouraged.

- Unbind File and Print Sharing from iSCSI NICs.

- If your switch supports it, enable cut-through switching. This reduces forwarding delay in iSCSI switch fabrics. You may only want to do this if you have a dedicated iSCSI network.

- MPIO

  - When using MPIO, pay attention to the MPIO policy type.

  - If Round Robin (RR) is the only type available, you will need to use that.

    - RR is the method VMware uses at this time. Be careful if you mix 1G and 10G iSCSI links in your VMware stack, because you will never benefit from the additional bandwidth of the 10G link. When using VMware in this configuration, it is best to change the network configuration so that the 10G link is active and the 1G link is standby.

  - If least queue depth (LQD) is available, it is the preferable method.

    - LQD allows a host to balance based on disk queue size, so if one link is running slow for some reason, more work shifts to the other link in the MPIO set.

    - LQD is also the preferable method when mixing 1G and 10G networking. With LQD, the MPIO stack can max out the 1G link and continue to use the 10G link for additional bandwidth.

- The preferred configuration is an isolated switch infrastructure for your iSCSI network. If this isn't possible, use VLANs on your network to isolate and prioritize iSCSI traffic.

- You would then need to set up QoS on your network to give iSCSI traffic a higher priority. If you are running VoIP traffic on the same network, make sure to give your VoIP traffic a higher priority than iSCSI so that you don't drop VoIP traffic.
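The difference between Round Robin and least queue depth on mixed 1G and 10G links, described in the MPIO notes above, can be seen in a toy simulation. This is only a sketch under stated assumptions: paths complete a fixed number of equal-sized I/Os per tick, the host keeps a fixed window of outstanding I/Os, and Round Robin alternates on every I/O. It is not how any specific MPIO stack is implemented, and the function and parameter names are hypothetical.

```python
def simulate(policy, rates, total_ios, window):
    """Return (ticks, ios_sent_per_path) for a toy MPIO model.

    rates[i] is how many queued I/Os path i completes per tick;
    window is the most I/Os the host keeps outstanding at once.
    """
    queues = [0] * len(rates)  # outstanding I/Os per path
    sent = [0] * len(rates)    # total I/Os issued per path
    issued = done = ticks = 0
    next_rr = 0
    while done < total_ios:
        # Issue new I/Os until the outstanding window is full.
        while issued < total_ios and sum(queues) < window:
            if policy == "round_robin":
                path = next_rr
                next_rr = (next_rr + 1) % len(rates)
            elif policy == "least_queue_depth":
                path = min(range(len(rates)), key=lambda i: queues[i])
            else:
                raise ValueError(f"unknown policy: {policy}")
            queues[path] += 1
            sent[path] += 1
            issued += 1
        # Each path drains up to its per-tick rate.
        for i, rate in enumerate(rates):
            completed = min(queues[i], rate)
            queues[i] -= completed
            done += completed
        ticks += 1
    return ticks, sent


if __name__ == "__main__":
    links = [1, 10]  # relative speeds of a 1G and a 10G path
    for policy in ("round_robin", "least_queue_depth"):
        ticks, sent = simulate(policy, links, total_ios=1100, window=22)
        print(policy, "ticks:", ticks, "I/Os per path:", sent)
```

In this model Round Robin sends half of the I/Os down the slow path, so the run is bound by the 1G link, while least queue depth shifts most I/Os to the faster path and finishes in substantially fewer ticks.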