03-30-2015 02:07 PM


Virtual Connect Flex-10 problem?

A Virtual Connect question from Zdravko:

**************

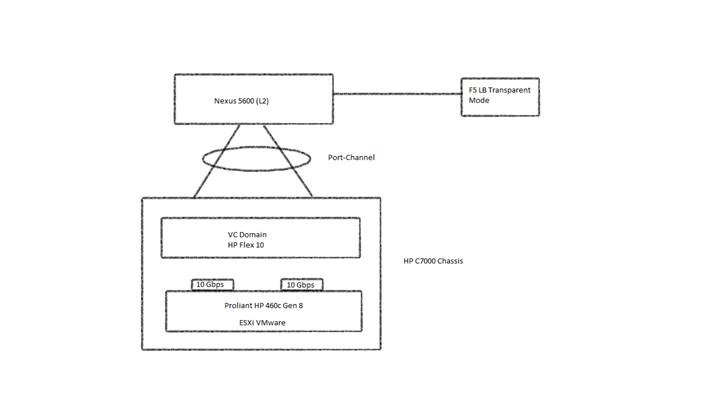

The picture shows a diagram of the deployment we are trying to implement, using an HP c7000 chassis with two HP Flex-10 modules and HP ProLiant BL460c Gen8 blades running VMware ESXi.

On VMware we have approximately 126 VLANs, with a Nexus 1000v as the DVS. We have tried two scenarios to work this out.

Scenario 1:

We configured the Virtual Connect (VC) domain with a vNet in tunnel mode. In this scenario, the upstream network switch is configured to pass multiple VLANs to two ports on each VC module. The upstream switch ports are configured as “trunk” ports (using LACP) for several VLANs. However, in this scenario we experienced partial or total loss of connectivity for servers in VLANs that the F5 load balancer handles in “bridged-transparent” mode.

We believe we have encountered the scenario described in: http://h20565.www2.hp.com/hpsc/doc/public/display?calledBy=&ac.admitted=1410467226755.876444892.492883150&docId=emr_na-c02684783-2&docLocale=

Scenario 2:

Following the recommendation, we disabled tunneling of VLAN tags and mapped the VLAN tags instead, using a Shared Uplink Set. This solved the loss-of-connectivity problem.

However, we then ran into another limit on scalability, the number of VLANs: 162 unique server mapped VLANs, of which we are already using 126 (http://h20565.www2.hp.com/hpsc/doc/public/display?docId=emr_na-c03278211, page 4). This is unacceptable for us, since we plan to nearly triple in size over the next 4 years and could hit the 162-VLAN limit very soon. We use this hardware for private/custom cloud solutions, and every customer is a separate VLAN. Is there a possible tweak or workaround for Scenario 1 so that we don’t encounter those problems?

Or is there another recommended/supported deployment for our case using HP Flex-10 that does not have the limitations and problems above?

************

Input from Dan:

**************

First off, you cannot use LACP to aggregate 2 uplinks from different Flex-10 modules, as their picture and text describe. LACP requires that the ports on each side terminate in the same switch, and each VC module is considered a different switch. So if they only have 1 uplink from VC Bay 1 and 1 uplink from VC Bay 2, they need to delete the Port Channel interface on the Cisco side.

As far as the Customer Advisory, you need to confirm with them if they are using 2 VLANs or just 1 for their Load Balancer.

There were some VLAN enhancements in 4.30, but if they are truly USING 126 VLANs on each server, then these enhancements won’t help them break the 162 barrier.

The enhancements are such that you can plumb more than 1000 VLANs into VC Mapped Mode, as long as the servers are using fewer than 162 per port and the total in use per VC Domain is fewer than 1000.
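To make those two limits concrete, here is a rough Python sketch that checks a VLAN plan against the numbers quoted in this thread (162 per server port, 1000 in use per VC domain). The function and constant names are made up for illustration, and treating the limits as inclusive is an assumption; confirm the exact boundaries against the release notes for your VC firmware.

```python
# Limits as quoted in this thread. Whether they are inclusive is an
# assumption here; verify against the VC firmware documentation.
MAX_VLANS_PER_SERVER_PORT = 162
MAX_VLANS_IN_USE_PER_DOMAIN = 1000


def check_mapped_mode_plan(vlans_per_port, vlans_in_use_in_domain):
    """Return a list of problems; an empty list means the plan fits."""
    problems = []
    if vlans_per_port > MAX_VLANS_PER_SERVER_PORT:
        problems.append(
            f"{vlans_per_port} VLANs per server port exceeds "
            f"{MAX_VLANS_PER_SERVER_PORT}"
        )
    if vlans_in_use_in_domain > MAX_VLANS_IN_USE_PER_DOMAIN:
        problems.append(
            f"{vlans_in_use_in_domain} VLANs in use in the domain exceeds "
            f"{MAX_VLANS_IN_USE_PER_DOMAIN}"
        )
    return problems


current = 126            # VLANs carried to every ESXi host today
projected = current * 3  # "nearly triple" growth from the question

print(check_mapped_mode_plan(current, current))      # fits today
print(check_mapped_mode_plan(projected, projected))  # per-port limit exceeded
```

With every host carrying every VLAN, the projected 378 VLANs stays under the domain-wide total but is well over the per-port number, which is why the 4.30 enhancements alone do not help here.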

In a pinch, an alternative might be Broadcom NPAR with a 6125XLG instead of Flex-10.

I would have them test that though before they make the decision to switch.

***********

Other comments?