
Analytic model deployment too slow? Accelerate data science with self-service.

While organizations are eager to adopt and reap the benefits of AI, they are facing challenges that make developing and deploying analytic models painfully slow.

Lack of team coordination

Data science is a team sport, requiring coordination and cooperation among multiple players and disciplines (data engineers, operations staff, analysts, and data scientists) across a distributed organization. Unfortunately, this team sport is cumbersome. Players use different tools, and many steps and processes throughout the analytic lifecycle are performed manually, with dependencies on groups that have different responsibilities and priorities. As a result, delays occur at numerous handoff points, and a lot of time is wasted waiting for things to happen. For example, before data scientists can build and train their models, they must wait for operations to provision new infrastructure and tools. This one step can take months! Then they must wait for the data itself, along with the permissions and approvals to use it, which can take weeks.

Imagine these bottlenecks happening in a triathlon, where your overall time is the sum of your swim, bike, and run segments plus the time spent transitioning between them. As you exit the water and enter the transition area, imagine waiting for someone to provision your bicycle and cycling gear. Then, as you finish the cycling segment and enter the transition area again, you wait for someone to order and fit your running shoes. Clearly, something must be done about the delays at these transition (handoff) points, or you won't beat the competition.

Resource underutilization

Another challenge organizations face is that ML workloads have unique infrastructure requirements, such as GPUs. GPUs are specialized microprocessors that work behind the scenes to deliver the high-performance computing (HPC) power needed for the data- and compute-intensive workloads of enterprise AI and ML. GPUs are expensive and, for most analytics teams, in short supply. Many analytics teams try to solve this by requesting additional GPU purchases, but shrinking IT budgets and lengthy approval cycles make that either impossible or a source of further delay. As a result, data scientists often wait in a queue for their turn to run jobs on dedicated servers.

In our conversations with customers, we have found that the problem is NOT a short supply of GPUs. The problem is that available GPU resources are not shared across the organization, which means that in many parts of the organization, GPUs are actually underutilized! That's because most infrastructure environments today are set up as functional silos, with dedicated GPU servers provisioned for individual applications, teams, and geographies. Analytics Team A might be training models on every GPU provisioned to it and must wait until that process finishes before starting its next project. Meanwhile, Analytics Team B might be running an experiment that uses only half of its dedicated GPUs; the other half sits idle.

What if there were a way to pool the GPUs within the organization so that teams could share them, provisioning and de-provisioning GPU resources as needed? Such a system would allow infrastructure to be provisioned more efficiently, speed up analytic model development and experimentation, and make everyone involved far more productive.
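To make the Team A / Team B scenario concrete, here is a minimal sketch that compares a siloed allocation rule with a pooled one. The team names and GPU counts are hypothetical illustrations, and the functions are our own toy model, not part of any HPE product. Under silos, a team may only draw on its own dedicated GPUs. Under pooling, any idle GPU in the organization can be allocated to any team.

```python
from dataclasses import dataclass


@dataclass
class Team:
    name: str
    dedicated_gpus: int  # GPUs provisioned to this team (hypothetical numbers)
    gpus_in_use: int     # GPUs currently busy with this team's jobs


def siloed_can_run(team: Team, gpus_needed: int) -> bool:
    """Silo model: a team can only use its own idle GPUs."""
    return team.dedicated_gpus - team.gpus_in_use >= gpus_needed


def pooled_can_run(teams: list[Team], gpus_needed: int) -> bool:
    """Pool model: idle GPUs anywhere in the organization are allocatable."""
    idle = sum(t.dedicated_gpus - t.gpus_in_use for t in teams)
    return idle >= gpus_needed


def utilization(teams: list[Team]) -> float:
    """Fraction of all owned GPUs that are currently busy."""
    total = sum(t.dedicated_gpus for t in teams)
    used = sum(t.gpus_in_use for t in teams)
    return used / total


# Team A is using all 8 of its GPUs; Team B is using only 4 of its 8.
team_a = Team("Team A", dedicated_gpus=8, gpus_in_use=8)
team_b = Team("Team B", dedicated_gpus=8, gpus_in_use=4)
teams = [team_a, team_b]

print(siloed_can_run(team_a, 4))  # False: Team A must wait
print(pooled_can_run(teams, 4))   # True: Team B's idle GPUs can be lent out
print(utilization(teams))         # 0.75 of owned GPUs busy
```

The point of the toy model is that the organization owns enough idle capacity (4 of 16 GPUs) for Team A's next job. Only the silo boundary forces Team A to wait.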

Containers to the rescue

Containers provide such a solution. Containerization has become widely popular because it helps data science teams use existing infrastructure to spin up flexible environments quickly, whether on premises, in multiple public clouds, in a hybrid model, or at the edge. Containerized environments can also tap into existing data lake sources with minimal IT involvement and speed up the process of training, deploying, and running AI models. Given these advantages, Gartner predicts that by 2022, containers will become integral to 85% of enterprise AI pipelines.

Designed to accelerate the deployment of containerized applications at scale on any infrastructure, HPE Ezmeral Container Platform is a hybrid cloud platform that brings speed, agility, and ease to data science. Because the platform is built on 100% open source Kubernetes, users have the flexibility to build and deploy Kubernetes clusters across the hybrid IT landscape and manage them from a unified control plane. The challenges described earlier, namely long infrastructure provisioning times and misallocated resources like GPUs, can therefore be solved by managing all infrastructure and resources from one unified control plane that provides visibility and self-service management across the organization.

Self-service application and infrastructure provisioning

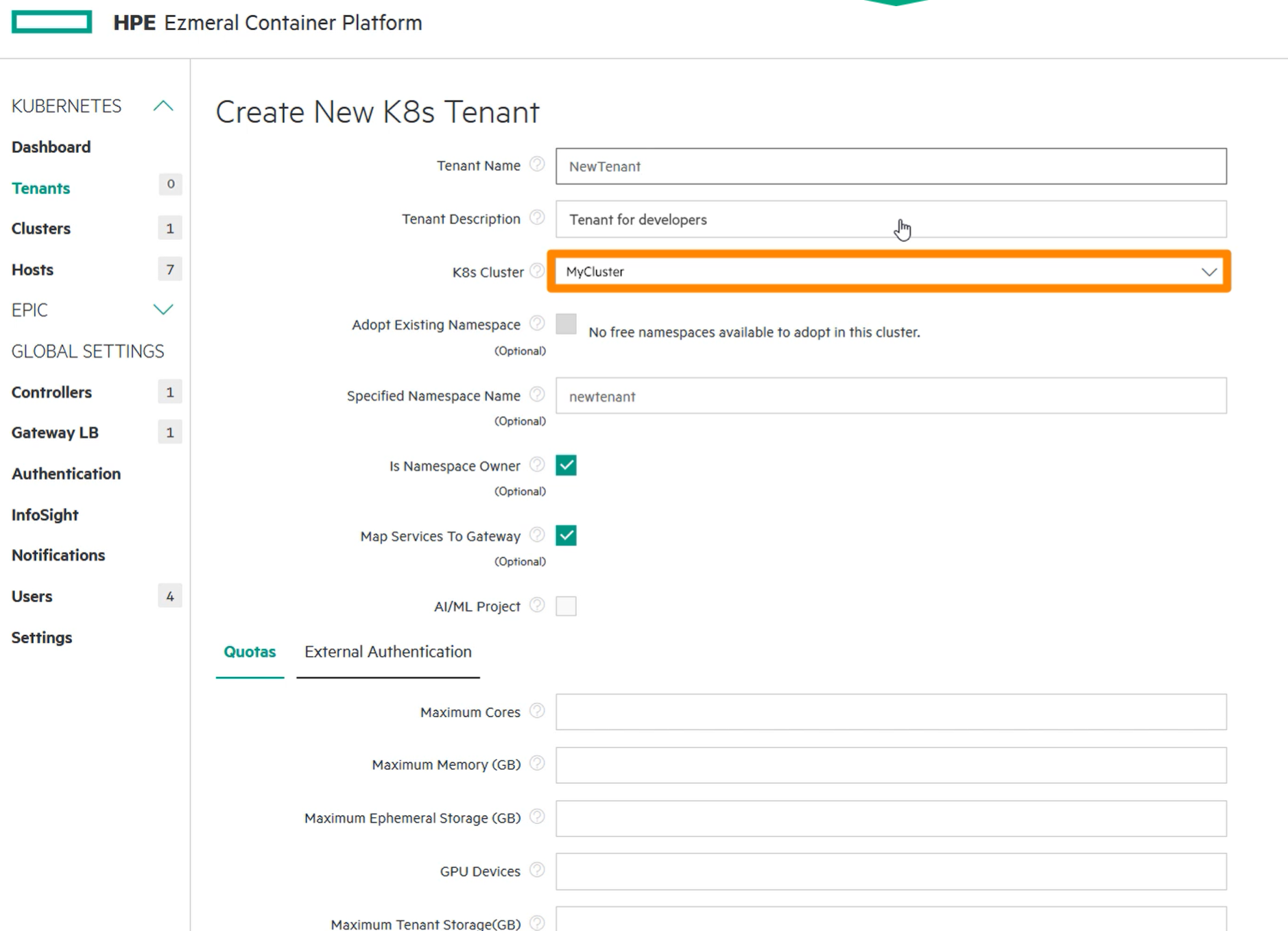

With HPE Ezmeral Container Platform, data scientists can enjoy the ease and agility of self-service. From the unified control plane (Figure 1), users can choose their own tools, applications, and storage for the job. They can also spin up GPU-accelerated clusters to provision their environments in minutes instead of months, eliminating the delays and wait times at handoff points throughout the ML lifecycle.

GPU optimization

Organizations can now solve the problem of GPU underutilization by taking the GPU resources they already own, consolidating them into a logical pool, and allocating them as needed to deliver on-demand GPU-accelerated applications with cloud-like ease and agility.
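On any Kubernetes cluster, pooled GPUs are typically consumed by declaring how many a workload needs and letting the scheduler place it on a node with free GPUs. The sketch below builds such a Pod manifest as a Python dict. It uses plain upstream Kubernetes conventions and assumes the NVIDIA device plugin is installed, so that GPUs are advertised as the extended resource `nvidia.com/gpu`. It is not HPE Ezmeral's own interface. The pod name and image are hypothetical placeholders.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int) -> dict:
    """Build a Pod manifest requesting `gpus` GPUs from the cluster's pool.

    Assumes the NVIDIA device plugin exposes GPUs as `nvidia.com/gpu`.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",
                    "image": image,
                    # GPUs are an extended resource and are requested via limits;
                    # the scheduler picks any node with enough free GPUs.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


# Hypothetical job name and image, for illustration only.
manifest = gpu_pod_manifest("train-job", "example.registry/train:latest", 2)
print(json.dumps(manifest, indent=2))
```

Because the request names a GPU count rather than a specific server, the same manifest can land on whichever node in the shared pool has capacity. kubectl accepts JSON manifests, so the printed output could be saved to a file and applied with `kubectl apply -f`.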

For enterprises that want to deploy virtualized GPU resources on demand, HPE offers a GPU optimization solution that increases utilization and maximizes productivity. This solution delivers the unique capability to pause containers (where GPU, CPU, and memory resources are released while the overall application state is persisted), allowing data science teams to run multiple different ML/DL applications on shared GPU infrastructure without recreating or reinstalling their applications and libraries. Plus, GPU usage across the organization can now be tracked and chargebacks can be administered easily and quickly.
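The pause-and-chargeback idea can be illustrated with a small in-memory model. In the following sketch, the `GpuPool` class and its methods are hypothetical and are not HPE's implementation. Pausing an application returns its GPUs to the shared pool while keeping its saved state. Resuming reacquires the GPUs and hands the state back. GPU-hours are accumulated per team for chargeback. Run time is passed in explicitly rather than measured from a clock, to keep the example deterministic.

```python
from collections import defaultdict


class GpuPool:
    """Hypothetical shared GPU pool with pause/resume and per-team chargeback."""

    def __init__(self, total_gpus: int):
        self.free = total_gpus
        self.running = {}  # app -> (team, gpus)
        self.paused = {}   # app -> (team, gpus, saved_state)
        self.gpu_hours = defaultdict(float)  # team -> GPU-hours consumed

    def start(self, app: str, team: str, gpus: int) -> None:
        if gpus > self.free:
            raise RuntimeError(f"only {self.free} GPUs free")
        self.free -= gpus
        self.running[app] = (team, gpus)

    def run_for(self, app: str, hours: float) -> None:
        # Charge the owning team for GPUs held while the app runs.
        team, gpus = self.running[app]
        self.gpu_hours[team] += gpus * hours

    def pause(self, app: str, state: dict) -> None:
        # Release the GPUs but keep the application state for later.
        team, gpus = self.running.pop(app)
        self.free += gpus
        self.paused[app] = (team, gpus, state)

    def resume(self, app: str) -> dict:
        team, gpus, state = self.paused[app]
        if gpus > self.free:
            raise RuntimeError(f"only {self.free} GPUs free")
        del self.paused[app]
        self.free -= gpus
        self.running[app] = (team, gpus)
        return state  # the app continues where it left off

    def stop(self, app: str) -> None:
        _team, gpus = self.running.pop(app)
        self.free += gpus


pool = GpuPool(total_gpus=4)
pool.start("notebook-a", "Team A", 4)
pool.run_for("notebook-a", 2.0)             # 4 GPUs x 2 h = 8 GPU-hours
pool.pause("notebook-a", {"epoch": 3})      # GPUs go back to the pool
pool.start("train-b", "Team B", 4)          # Team B reuses the same GPUs
pool.run_for("train-b", 1.5)                # 4 GPUs x 1.5 h = 6 GPU-hours
pool.stop("train-b")
state = pool.resume("notebook-a")
print(state)                 # {'epoch': 3}
print(dict(pool.gpu_hours))  # {'Team A': 8.0, 'Team B': 6.0}
```

Team A never reinstalls anything: its state survives the pause, while Team B gets full use of the four GPUs in the meantime. The per-team GPU-hour totals are the kind of figure a chargeback report would be built from.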

Watch this demo to see how the unified control plane within the HPE Ezmeral Container Platform simplifies resource allocation, user authentication, workload deployment, and monitoring for any Kubernetes cluster, on premises or in the cloud.

App store

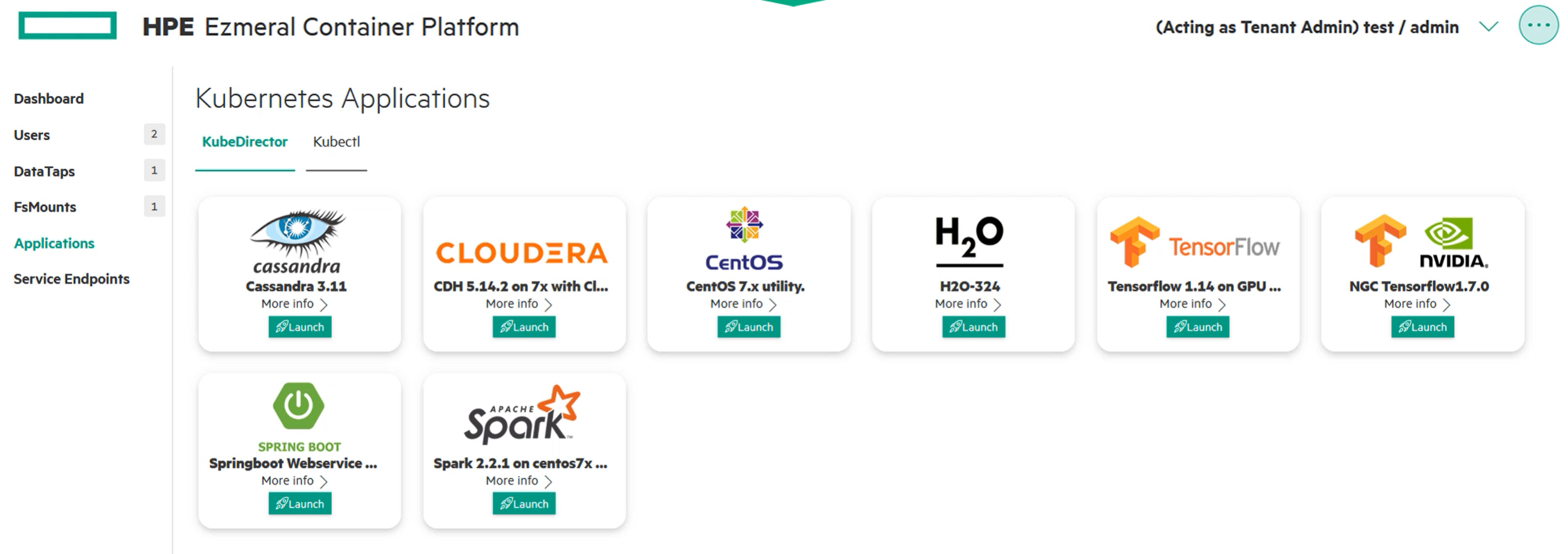

Designed to promote easy and convenient access to tools and applications, the HPE Ezmeral Container Platform app store (Figure 2) comes out of the box with over 20 curated, pre-built, one-click application images for a variety of use cases, plus a self-service process to download, configure, install, and add the latest open source images to the app store. Data scientists can now spin up a cluster on-premises or in the cloud in minutes.

Accelerating AI across industries

By enabling self-service in the provisioning of infrastructure and resources like GPUs, organizations benefit from improved compute performance, lower infrastructure costs through the efficient use of resources, and increased productivity of their data scientists. They can now unleash the power of AI to uncover insights into customer behavior and what is happening in the world around us. Businesses, government agencies, and organizations across industries – from healthcare, financial services, and manufacturing to retail – can respond to customer needs, diagnose diseases, discover drug treatments, detect fraud, and prevent security breaches – quickly and accurately.

For more information on how HPE Ezmeral simplifies infrastructure management and modernizes data science, register in advance to attend our upcoming World Watch webinar on February 17th, Self-Service Data Science and GPU Optimization.

[1] ML is a branch of AI that uses algorithms and statistical models to analyze data and make predictions from patterns in data.

[2] DL is an advanced AI technique that utilizes neural networks to analyze a variety of data types, including audio, video, speech, and text.

Lola Tam

Follow us on social:

HPE Ezmeral on LinkedIn | @HPE_Ezmeral on Twitter

@HPE_DevCom on Twitter | @HPE_Storage on Twitter


Lola Tam is a senior product marketing manager, focused on content creation to support go-to-market efforts for the HPE Enterprise Software Business Unit. Areas of interest include application modernization, AI / ML, and data science, and the benefits these solutions bring to customers.
