Data pipelines: here, there, and everywhere from edge to cloud

Deploy dynamic data pipelines across multiple locations with a unified data fabric and a dynamic, containerized compute environment

For an enterprise, gaining insights from data should have similar odds. Data pipelines are central to keeping an enterprise winning with data. Enterprises face the added obstacle of having deployed data pipelines across multiple locations. Wherever a data pipeline exists, enterprises need to manage the risk of creating data silos and keep each location connected. But no matter how intricate the data, with a correctly built data pipeline, enterprises should always win.

Challenges in building data pipelines

Enterprises build data pipelines to get valuable information out of their data, yet this process can be complex and fragile. The initial construction of a data pipeline requires excessive manual work to access and transform various data sources. And what happens if you need to change something? Changing or fixing an existing data pipeline is often time-consuming and error-prone. Nevertheless, flexibility is a necessity for connecting new data sources or getting answers to ever-changing business requirements.

Traditionally, enterprises have focused on the data inside their data center, establishing their data pipelines there first. Now, less than 40% of data is in the data center. Data is at the edge, in data lakes, in warehouses, and in the cloud. This added dimension of complexity comes into play when expanding data pipelines to the multiple locations where data exists to support global operations. Different data silos and technologies across multiple locations make this a difficult undertaking.

The mission remains the same: to always solve analytical business questions, and these are not limited to a single location. To really get value out of a pipeline, it must extend and tie into existing environments independent of location, whether the pipeline is in the data center, a remote location, or somewhere in the cloud. When extending or tying into environments, these data pipelines need frictionless access to their data sources, access that doesn’t require copying data from one silo to another or fragmenting data before use.

Stacking the odds with better data pipelines

A well-built data pipeline keeps the enterprise winning by enabling insightful decisions. An enterprise can stack the odds by using the HPE Intelligent Data Pipeline (IDP), which addresses data pipeline intricacies by providing a flexible framework based on microservices. IDP uses containerization and Kubernetes as building blocks. This platform supports every stage of the data pipeline, including data ingestion, data processing, data persistence, data visualization, and data store, along with ML development for implementing various analytic use cases. (For a deeper look at HPE’s Intelligent Data Pipeline, read Bhuvaneshwari Guddad’s blog, Intelligent data pipeline implementation for predictive maintenance.)

Go further with the HPE Ezmeral Data Fabric

If an enterprise has frictionless access to data, the intelligent data pipeline can be deployed as needed in multiple locations. The HPE Ezmeral Data Fabric delivers a consistent user experience and frictionless access to trusted data for legacy and modern users and applications. It provides a unified approach to data management and overcomes the data access and management hurdles that impede successful analytic and machine learning projects. It also makes it possible to expand to the edge and the cloud and harness the benefits of both. Frictionless access to data makes the intelligent data pipeline easy to use at multiple sites, smoothing the journey to data insights.

See it in action

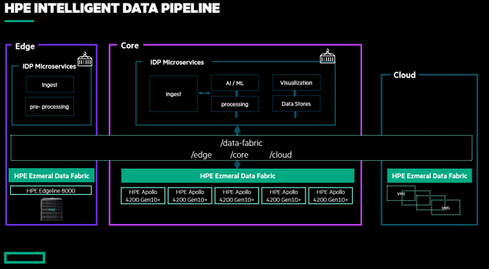

We created an example solution stack to demonstrate the capabilities of the intelligent data pipeline and the HPE Ezmeral Data Fabric. This stack consists of core, edge, and cloud components. At the core, we are using HPE Apollo 4200 Gen10+ systems, as they are designed for higher data storage capacity and density as well as performance-demanding analytics and AI/ML workloads. The HPE Edgeline 8000 is used to validate edge use cases, such as in a telco environment, a mobile vehicle, or a manufacturing floor. In addition, we include public cloud resources to handle peak workloads.

This scenario depicts a holistic solution stack deploying IDP across different locations, as illustrated in Figure 1 above. Using the HPE Ezmeral Data Fabric as a foundation framework allows businesses to create a global namespace across different sites. Applications can access data from anywhere, using multiple methods and APIs. All data can be accessed as if it were under the same roof or location.
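The idea of a global namespace can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it supposes the data fabric is exposed to applications as an ordinary file-system mount, and the mount root `/mnt/datafabric`, the cluster name, and the helper names are all hypothetical, not taken from HPE documentation. The point is that the edge writer and the core reader use the same path and plain file I/O.

```python
from pathlib import Path
from typing import List

# Hypothetical mount root; the real mount point depends on the deployment.
DEFAULT_ROOT = Path("/mnt/datafabric")


def fabric_path(cluster: str, relative: str, root: Path = DEFAULT_ROOT) -> Path:
    """Build one namespace path for data, whichever site it lives at."""
    return root / cluster / relative.lstrip("/")


def write_reading(path: Path, line: str) -> None:
    """Append one record, as an edge application might."""
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("a", encoding="utf-8") as handle:
        handle.write(line + "\n")


def read_readings(path: Path) -> List[str]:
    """Read the records back, as a core or cloud job might, via the same path."""
    return path.read_text(encoding="utf-8").splitlines()
```

To try the sketch without a data fabric, pass a temporary directory as `root`; nothing else in the code changes, which is the property the global namespace is meant to provide.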

Read more about HPE Ezmeral Data Fabric: HPE EZMERAL DATA FABRIC MULTI-SITE ON-PREMISES AND CLOUD INTEGRATION

Now that a data strategy is implemented, the HPE Intelligent Data Pipeline, composed of interchangeable microservices, can be customized based on need. The microservices are delivered pre-integrated as Helm charts and can be used to analyze data at the edge, core, and cloud.

At the edge, data ingestion and preprocessing can occur. Using the global namespace, data can be accessed at the core, where deeper analyses can take place. CI/CD frameworks can be used to build, manage, and deploy data models and push them out to remote locations. Thanks to containerization and microservices, new pipelines can be spun up quickly and applied in parallel, using the latest cloud-native tools and frameworks.
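The split between edge preprocessing and core analysis, built from interchangeable stages, can be illustrated with a minimal Python sketch. It is not IDP code: the `Reading` type, the stage functions, and `run_stages` are hypothetical names chosen for illustration, and each function stands in for what would be a containerized microservice in a real deployment.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Dict, List, Optional


@dataclass(frozen=True)
class Reading:
    sensor: str
    value: Optional[float]


# A stage takes a batch of readings and returns a new batch.
Stage = Callable[[List[Reading]], List[Reading]]


def drop_missing(readings: List[Reading]) -> List[Reading]:
    """Edge preprocessing: discard readings that carry no value."""
    return [r for r in readings if r.value is not None]


def clip_negative(readings: List[Reading]) -> List[Reading]:
    """Edge preprocessing: raise negative values to zero, leave the rest as is."""
    return [
        r if r.value is None else Reading(r.sensor, max(r.value, 0.0))
        for r in readings
    ]


def run_stages(readings: List[Reading], stages: List[Stage]) -> List[Reading]:
    """Apply the stages in order; swapping a stage means editing the list."""
    for stage in stages:
        readings = stage(readings)
    return readings


def mean_by_sensor(readings: List[Reading]) -> Dict[str, float]:
    """Core analysis: average value per sensor, ignoring missing values."""
    grouped: Dict[str, List[float]] = {}
    for r in readings:
        if r.value is not None:
            grouped.setdefault(r.sensor, []).append(r.value)
    return {sensor: mean(values) for sensor, values in grouped.items()}
```

A run would call `run_stages(raw, [drop_missing, clip_negative])` at the edge and `mean_by_sensor` on the result at the core. Because every stage shares one signature, a stage can be added, removed, or replaced without touching the others.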

Enabling the future

Whether you start at the core, edge, or cloud, you should be able to expand your system without having to rearchitect. With the wide use of Kubernetes and microservices, the intelligent data pipeline answers the question of which compute strategy to use for deriving value from data. And by implementing the HPE Ezmeral Data Fabric, you will be able to expand your system as business needs change.

To build an efficient system, it’s best not to leave data strategy to a pieced-together process. Instead, develop a comprehensive data strategy. The HPE Ezmeral Data Fabric provides a single data platform layer on top of which the compute strategy can run. Together, the HPE Intelligent Data Pipeline and the HPE Ezmeral Data Fabric solve the challenges of expanding data pipelines to multiple locations, wherever your enterprise needs analytic capabilities.

View more about HPE Intelligent Data Pipeline, learn more about HPE Ezmeral Data Fabric, and contact us for more information!

Denise Ochoa

Hewlett Packard Enterprise

twitter.com/HPE_Ezmeral

linkedin.com/showcase/hpe-ezmeral

hpe.com/software
