
The cost of data recovery: Does your disaster recovery plan really work?

Disasters happen. The question is, what happens next?

No matter how well you design your large data system to protect against catastrophic occurrences, some events are beyond your control. Flooding from hurricanes, fire damage, and the destruction of a data center building by an unlikely and unlucky tornado strike are all natural disasters for which you must prepare long in advance.

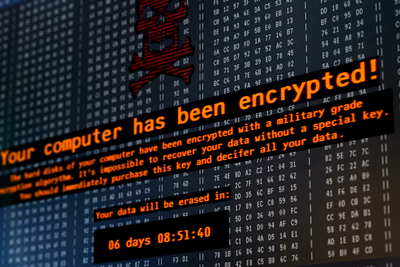

And natural disasters aren’t the only events that call for a recovery plan. An equally serious and more pervasive threat is human-driven: cyberattacks, particularly ransomware. Evil actors constantly launch attacks against valuable data, and you have no guarantee they won’t breach your walls, even if you are careful.

That’s why a practical plan for disaster recovery (DR) is a key part of dealing with Day 2 issues. You make a plan for recovery and hope you never need to use it. But does your DR plan really work? And at what cost?

Efficient recovery from ransomware attack

Consider the following example of a ransomware attack. It illustrates how you can optimize your disaster recovery process to reduce the costs incurred by downtime. It also shows how to reduce the IT burden of setting up and maintaining an effective DR cluster.

Suppose your system is breached, and attackers encrypt your data and demand a ransom. Ideally, you don’t have to give in to their demands, because you built a backup cluster at another location in advance as part of a disaster recovery plan. But how long will it take to restore your system? With critical business processes interrupted, downtime is expensive. In a large-scale system, it could take days or weeks (or more) to restore data and restart processes if your data infrastructure isn’t engineered for efficient data recovery.

Restore time is part of the cost of data recovery, and your recovery time objective (RTO) sets the maximum downtime you can tolerate, so it’s important to reinstate the system quickly. Ideally, your data infrastructure should get you up and running in a matter of hours rather than days or weeks, even for a very large-scale system. In some cases, you may even need an RTO of minutes or less. This consideration applies whether the catastrophic event is a ransomware attack or a natural disaster such as a flood or tornado.

In addition to the expense of downtime, setting up a DR data center has its own cost. It takes effort to implement a secondary data center in the first place, and it places an ongoing burden on IT to update the DR cluster as data changes.
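To make the trade-off concrete, here is a back-of-the-envelope sketch. It assumes, as a simplification, that one disaster costs restore time multiplied by an hourly downtime cost, and that the DR site costs a one-time setup amount plus monthly upkeep. The function names and every number in the usage lines are hypothetical placeholders, not figures from any real deployment.

```python
def meets_rto(restore_hours: float, rto_hours: float) -> bool:
    """True if the actual restore time is within the recovery time objective."""
    return restore_hours <= rto_hours


def dr_cost(restore_hours: float, downtime_cost_per_hour: float,
            setup_cost: float, upkeep_per_month: float, months: int) -> float:
    """Simplified cost of a DR strategy over `months`, assuming one disaster."""
    downtime = restore_hours * downtime_cost_per_hour          # cost of being offline
    infrastructure = setup_cost + upkeep_per_month * months    # building and updating the DR site
    return downtime + infrastructure


# Hypothetical comparison: a slow restore (3 days) vs. a fast one (4 hours),
# where the faster setup costs more to build and maintain.
slow = dr_cost(72, 10_000, 50_000, 2_000, 12)
fast = dr_cost(4, 10_000, 80_000, 3_000, 12)
print(slow, fast, meets_rto(72, 8), meets_rto(4, 8))  # 794000 156000 False True
```

With these made-up inputs, the more expensive but faster DR setup still wins because downtime dominates; with your own numbers the balance may differ.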

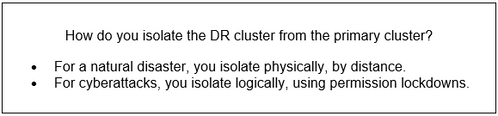

These costs apply to any type of DR, but in the case of ransomware, there’s a special challenge. With ransomware, what’s to prevent the attackers, once inside, from reaching and encrypting data in the secondary cluster as it communicates with the primary data center? It’s as though a tornado hits and damages your primary cluster, but you also have another tornado hiding in your system, ready to destroy the DR cluster as well at any moment.

You need a way to set up and update a secondary data center that is efficient and cost-effective, while allowing a small set of users or administrators to tightly control the ability to modify data in the secondary cluster. In other words, you must isolate the primary and recovery data centers in a way that’s appropriate for the disaster in question.

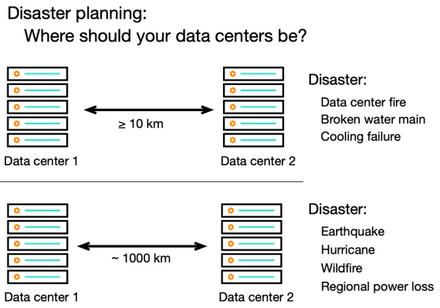

Location strategy depends on the disaster

The basic idea underlying a DR plan is to have a secondary data center isolated from the primary one in such a way that it is likely to survive whatever catastrophic event damages or disables the primary center. In the case of ransomware, your system should provide a way for you to isolate the clusters logically, via strict data access control. For natural disasters, the DR strategy uses distance to isolate the primary and secondary clusters physically. Choices about how much distance or what is an appropriate location depend in part on the type of natural disaster your system needs to survive, as illustrated in Figure 1.

Figure 1. Strategy for data center isolation varies for different types of disaster

For disasters that have limited scope beyond the data center building itself, a secondary data center at a nearby location may be appropriate, as suggested by the upper part of the diagram. But for disasters that may affect an entire region, the best DR plan is to locate the recovery cluster at a considerable distance, usually in another city unlikely to experience the same catastrophic event at the same time as the primary center (lower part of the figure). When Hurricane Sandy hit New York, companies with secondary facilities in New Jersey learned this lesson the hard way.
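The location logic above can be summarized as a small decision table. This is a sketch of the reasoning only: the threat categories, the requirement strings, and the `plan_isolation` helper are hypothetical, and a real plan would weigh many more factors (distance, latency, cost, regulation).

```python
from enum import Enum


class Threat(Enum):
    LOCAL = "local"            # fire, building flood, a single tornado strike
    REGIONAL = "regional"      # hurricane, region-wide flooding
    RANSOMWARE = "ransomware"  # attacker already inside the network


# Hypothetical mapping from each threat to the isolation it calls for (after Figure 1).
REQUIRED_ISOLATION = {
    Threat.LOCAL: "physical: separate building (a nearby site is enough)",
    Threat.REGIONAL: "physical: distant city outside the threat's region",
    Threat.RANSOMWARE: "logical: strict access control on the DR cluster",
}


def plan_isolation(threats: set[Threat]) -> list[str]:
    """Return the isolation requirements a DR plan must meet to survive all threats."""
    effective = set(threats)
    # A distant site also survives a local event, so the weaker requirement is redundant.
    if Threat.REGIONAL in effective:
        effective.discard(Threat.LOCAL)
    return sorted(REQUIRED_ISOLATION[t] for t in effective)


for requirement in plan_isolation({Threat.LOCAL, Threat.REGIONAL, Threat.RANSOMWARE}):
    print(requirement)
```

Note that physical and logical isolation are complementary: a distant site does nothing against ransomware that can reach it over the network, which is why the ransomware requirement is never dropped.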

What capabilities must your data infrastructure have to meet these requirements in a practical and cost-effective way while providing the performance needed for a very short recovery time?

Fast disaster recovery using data fabric

One data technology engineered to meet the needs of effective disaster recovery is the HPE Ezmeral Data Fabric File and Object Store, a highly scalable, software-defined solution that spans your enterprise from edge to data center, on premises, or in the cloud. HPE Ezmeral Data Fabric stores, manages, and moves data. For the major data motion required to set up a secondary data center, data fabric provides reliable and efficient data mirroring, as shown in Figure 2. In this diagram, triangles represent a data management construct known as a data fabric volume. Volumes act like directories with superpowers, and both snapshots and mirrors are implemented at the volume level.

For more on data fabric volumes, read “What’s your superpower for data management?”

Figure 2. Mirroring of data fabric volumes in HPE Ezmeral Data Fabric File and Object Store

To establish a secondary data fabric cluster at a remote location, data is mirrored from a source volume (green triangle at the New York data center in the figure) to a destination volume (orange triangles in the figure) on a remote data fabric cluster on premises or in the cloud. All data motion is handled by the data fabric itself.

HPE Ezmeral Data Fabric File and Object Store reduces the cost of disaster recovery by making it easy to set up and maintain a DR data center and, most importantly, by speeding up the process of restoring operations after a disaster, thus minimizing downtime. Here’s how:

- Mirroring can be scheduled to occur automatically, scripted, or initiated manually.

- Keeping the mirrored volumes up to date is fast and efficient. Updates are incremental, and only data that has changed is copied to the destination volume.

- If a disaster happens, you have several options to restore operation in the primary data center or to switch operations to the secondary data center:

  - You can read data from the mirrored volumes on the DR cluster instantly.

  - You can modify data on the DR cluster by promoting the mirrored volumes to read/write in minutes.

  - You can restore a primary cluster or set up a new cluster by copying data via mirroring from the DR cluster in a matter of hours.
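The mirroring behavior described in the list above (incremental updates, read-only mirrors readable at once, promotion to read/write) can be illustrated with a toy model. This is a simplified sketch under stated assumptions, not how HPE Ezmeral Data Fabric implements mirrors: the `SourceVolume` and `MirrorVolume` classes, the per-block write counter used to find changes, and the method names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SourceVolume:
    """Hypothetical source volume: blocks plus a write counter per block."""
    blocks: dict[int, bytes] = field(default_factory=dict)
    versions: dict[int, int] = field(default_factory=dict)  # block id -> clock at last write
    clock: int = 0

    def write(self, block_id: int, data: bytes) -> None:
        self.clock += 1
        self.blocks[block_id] = data
        self.versions[block_id] = self.clock

    def delete(self, block_id: int) -> None:
        self.blocks.pop(block_id, None)
        self.versions.pop(block_id, None)


@dataclass
class MirrorVolume:
    """Hypothetical destination volume: read-only until promoted."""
    blocks: dict[int, bytes] = field(default_factory=dict)
    synced_at: int = 0  # source clock value at the last completed sync
    read_only: bool = True

    def sync(self, src: SourceVolume) -> int:
        """Copy only blocks written since the last sync; return how many were copied."""
        if not self.read_only:
            raise RuntimeError("promoted volume no longer accepts mirror updates")
        changed = [b for b, v in src.versions.items() if v > self.synced_at]
        for b in changed:
            self.blocks[b] = src.blocks[b]
        for b in set(self.blocks) - set(src.blocks):  # propagate deletions
            del self.blocks[b]
        self.synced_at = src.clock
        return len(changed)

    def read(self, block_id: int) -> bytes:
        return self.blocks[block_id]  # reads work immediately, even while read-only

    def write(self, block_id: int, data: bytes) -> None:
        if self.read_only:
            raise PermissionError("mirror is read-only; promote it first")
        self.blocks[block_id] = data

    def promote(self) -> None:
        """Switch the mirror to read/write, e.g. after failing over to the DR site."""
        self.read_only = False


src, dr = SourceVolume(), MirrorVolume()
for i in range(1000):
    src.write(i, b"x")
print(dr.sync(src))  # initial mirror copies all 1000 blocks
src.write(7, b"y")
print(dr.sync(src))  # incremental update copies only the 1 changed block
```

The key design point the sketch shows is that the cost of each update scales with the amount of changed data, not the size of the volume.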

Data fabric’s versatility and flexible data access mean you can use a single system in your DR strategy for a variety of data types. Data fabric handles files, tables, event streams, and objects together, within the same system, in the same volumes, and under the same management.

One extreme real-world example of the convenience, speed, and efficiency of using HPE Ezmeral Data Fabric for disaster recovery comes from a large European retailer. Because they are part of their country’s critical infrastructure, they are required to validate their disaster recovery plan every few weeks by performing a full data center failover with no business impact. They take seriously the idea that disaster recovery without practice leads to disasters without recovery.

Logical isolation via data fabric to deal with ransomware attacks

All these aspects of disaster recovery apply in the case of a ransomware attack, but data fabric also provides capabilities to deal with the special challenges imposed by this type of disaster. Fine-grained control of data access via data fabric’s Access Control Expressions (ACEs), Access Control Lists (ACLs), and policy-based security features all make it possible to set up logical isolation of a DR cluster even while data is automatically mirrored from a primary data center. This isolation can protect the backup data even against compromised administrative accounts.
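To illustrate how a boolean access expression can keep even a compromised primary-cluster administrator away from DR data, here is a minimal evaluator. It is a simplified model of the idea, not the product’s ACE syntax or API: the nested-tuple representation, the `allows` function, and the group names `dr-admins` and `primary-admins` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Identity:
    user: str
    groups: frozenset[str]


# Hypothetical expression nodes:
#   ("u", name) | ("g", name) | ("not", e) | ("and", e1, e2, ...) | ("or", e1, e2, ...)
def allows(expr: tuple, who: Identity) -> bool:
    """Evaluate an access expression for one identity."""
    op = expr[0]
    if op == "u":
        return who.user == expr[1]
    if op == "g":
        return expr[1] in who.groups
    if op == "not":
        return not allows(expr[1], who)
    if op == "and":
        return all(allows(e, who) for e in expr[1:])
    if op == "or":
        return any(allows(e, who) for e in expr[1:])
    raise ValueError(f"unknown operator: {op!r}")


# Writes on the DR volume: only DR admins, and never anyone who also administers the primary.
WRITE_DR = ("and", ("g", "dr-admins"), ("not", ("g", "primary-admins")))

dana = Identity("dana", frozenset({"dr-admins"}))
pat = Identity("pat", frozenset({"primary-admins"}))  # e.g. a compromised account
print(allows(WRITE_DR, dana), allows(WRITE_DR, pat))  # True False
```

Excluding the primary administrators explicitly, rather than relying on them not being granted access, is what keeps the DR copy isolated if one of those accounts is taken over.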

Read “Security that scales: 3 ways policy-based security improves data governance”

Watch the video demo “HPE Ezmeral Data Fabric 6.2 policy-based security”

Data fabric also provides a bonus in the case of ransomware: a subtle way to detect encryption by attackers through an unexpected increase in storage use. Snapshots in HPE Ezmeral Data Fabric use redirect-on-write, so all changed data is written to new blocks on disk. If you completely overwrite the data in a volume, as ransomware might do, the space required effectively doubles because the snapshots still retain the original data. Because you can set quotas on data fabric volumes, snapshots, and mirrored volumes, you can detect sudden, unexplained increases in volume size as unauthorized encryption gets underway and flag a possible attack. Faster detection means faster protection and gives you the chance to minimize the impact of an attack, possibly even by restoring snapshots in the primary data center.
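The space-doubling argument can be checked with a toy redirect-on-write model. This sketch assumes a volume is a map from logical to physical blocks and a snapshot is a frozen copy of that map; `RowVolume`, `growth_alert`, and the 1.5× growth threshold are hypothetical choices for illustration, not data fabric internals or defaults.

```python
class RowVolume:
    """Toy redirect-on-write volume: every write lands on a newly allocated block."""

    def __init__(self) -> None:
        self.live: dict[int, int] = {}             # logical block -> physical block
        self.snapshots: list[dict[int, int]] = []  # frozen copies of the block map
        self._next_physical = 0

    def write(self, logical: int) -> None:
        # Redirect: never overwrite in place, so snapshots keep the old block.
        self.live[logical] = self._next_physical
        self._next_physical += 1

    def snapshot(self) -> None:
        self.snapshots.append(dict(self.live))  # copies the map, not the data

    def used_blocks(self) -> int:
        """Physical blocks still referenced by the live volume or any snapshot."""
        referenced = set(self.live.values())
        for snap in self.snapshots:
            referenced.update(snap.values())
        return len(referenced)


def growth_alert(previous: int, current: int, quota: int, max_ratio: float = 1.5) -> bool:
    """Flag a possible attack if usage exceeds the quota or jumps sharply."""
    return current > quota or (previous > 0 and current / previous > max_ratio)


vol = RowVolume()
for i in range(100):
    vol.write(i)
vol.snapshot()
before = vol.used_blocks()
for i in range(100):  # simulate ransomware rewriting every block with ciphertext
    vol.write(i)
after = vol.used_blocks()
print(before, after, growth_alert(before, after, quota=150))  # 100 200 True
```

Without the snapshot, the old physical blocks would no longer be referenced and usage would stay at 100, so the snapshot is what turns a silent overwrite into a visible jump in space.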

Read “Business continuity at large scale: data fabric snapshots are surprisingly efficient”

Making the least of a disaster

Being prepared to deal with catastrophic events in an efficient and cost-effective way won’t prevent a disaster, but it can prevent it from being a disaster to your business. Having a data platform that provides the right capabilities is a key tool to make this possible.

To find out more about business continuity with data fabric volumes, watch the video replay of the workshop “Data Fabric 101”

For more real-world use cases built on HPE Ezmeral Data Fabric, download a free PDF of the ebook AI and Analytics at Scale: Lessons from Real-World Production Systems by Ted Dunning and Ellen Friedman.

For an overview of data fabric, read the technical paper “HPE Ezmeral Data Fabric: Modern infrastructure for data storage and management”

Ellen Friedman

Hewlett Packard Enterprise


Ellen Friedman is a principal technologist at HPE focused on large-scale data analytics and machine learning. Ellen worked at MapR Technologies for seven years prior to her current role at HPE, where she was a committer for the Apache Drill and Apache Mahout open source projects. She is a co-author of multiple books published by O’Reilly Media, including AI & Analytics in Production, Machine Learning Logistics, and the Practical Machine Learning series.
