HPE Nimble Storage dHCI and Peer Persistence

Fred_Gagne

03-12-2020

11:01 AM

I have been pretty quiet over the last two months, mainly because I was working on validating our HPE CSI driver against Google Cloud’s Anthos (yes, a blog post is coming). In the meantime, one of the most frequently asked questions has been about Peer Persistence: do you support Peer Persistence with HPE Nimble Storage dHCI? If so, what are the requirements? We do support Peer Persistence with dHCI, and in this blog post I will share the requirements and provide a high-level overview of the deployment.

Requirements

HPE Nimble Storage dHCI support for Peer Persistence has the following requirements:

- Two HPE Nimble Storage dHCI arrays are required.
- The arrays must be of the same model (for example, AF40 and AF40).
- The arrays must be running the same version of NimbleOS (5.1.2 minimum).
- No more than 32 servers can be used for dHCI. (This maximum is the total for both sites together, not 32 per site.)
- A VMware vSphere cluster can only spread across two sites.
- Only one VMware vCenter server is required.
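If you keep an inventory of your environment, the requirements above are easy to check before you start. The following is only a minimal sketch in Python: the `Array` class, the `check_requirements` function, and the idea of passing server counts per site are my own illustrative assumptions, not part of any HPE tool, and it assumes version strings are plain dotted numbers such as `5.1.2`.

```python
from dataclasses import dataclass

MIN_NIMBLEOS = (5, 1, 2)  # minimum NimbleOS version listed in the requirements
MAX_SERVERS = 32          # total for both sites together, not per site


@dataclass
class Array:
    """Hypothetical inventory record for one dHCI array."""
    name: str
    model: str
    nimbleos: str  # assumed to be plain dotted numbers, e.g. "5.1.2"


def parse_version(text):
    """Turn '5.1.2' into (5, 1, 2) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def check_requirements(arrays, servers_per_site):
    """Return a list of human-readable problems; an empty list means OK.

    arrays: list of Array records.
    servers_per_site: dict mapping a site name to its number of dHCI servers.
    """
    problems = []
    if len(arrays) != 2:
        problems.append(f"expected 2 arrays, found {len(arrays)}")
    if len({a.model for a in arrays}) > 1:
        problems.append("arrays are not the same model")
    versions = {parse_version(a.nimbleos) for a in arrays}
    if len(versions) > 1:
        problems.append("arrays run different NimbleOS versions")
    if any(v < MIN_NIMBLEOS for v in versions):
        problems.append("NimbleOS is older than 5.1.2")
    if len(servers_per_site) > 2:
        problems.append("cluster spans more than two sites")
    total = sum(servers_per_site.values())
    if total > MAX_SERVERS:
        problems.append(f"{total} servers exceeds the limit of {MAX_SERVERS}")
    return problems
```

For example, `check_requirements([Array("a", "AF40", "5.1.2"), Array("b", "AF40", "5.1.2")], {"site1": 16, "site2": 16})` returns an empty list, while two arrays of different models would be reported as a problem.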

Deployment

Planning your network and dHCI deployment is a critical piece of the puzzle. I can’t say it enough: before starting your deployment, please read our deployment guide and make sure that you have your worksheet ready. The worksheet is available at the end of the deployment guide. You will need a lot of data during the deployment, so it will be useful, believe me!

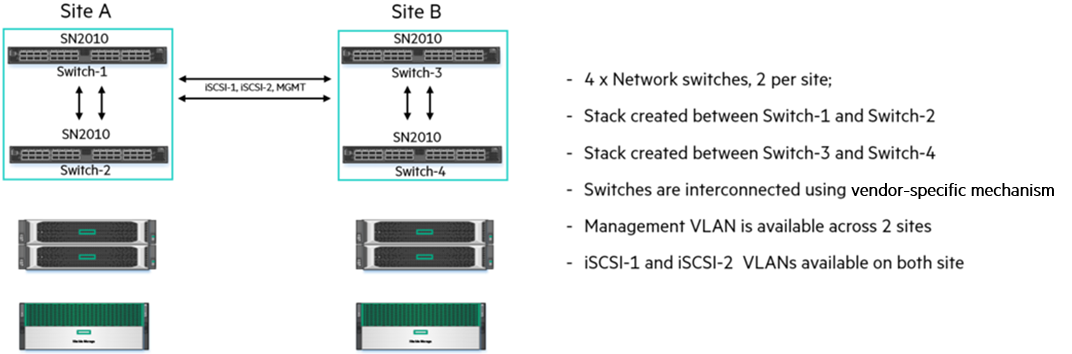

Before moving forward with the deployment, you must complete the network configuration between your sites. One specific requirement for Peer Persistence with HPE Nimble Storage dHCI is the VLAN configuration: the management subnet and both iSCSI subnets must be available at both sites.

Your networking configuration should be similar to the one shown below.

To summarize, you need to make sure that your management VLAN and your two iSCSI VLANs are available on both sites. As you can see, I have used HPE M-Series switches to configure the solution. To avoid any loops in my environment, I have created an MLAG port-channel between my sites so that all my VLANs are available on both sites. An MLAG port-channel can also be used to interconnect your HPE M-Series switches with the rest of your network, whether M-Series to M-Series or M-Series to another vendor’s switch, so it’s a useful concept and CLI command!
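As a quick sanity check of the VLAN requirement, you could compare the VLANs you planned in your worksheet with the VLANs actually trunked at each site. This is only a sketch: the `missing_vlans` function, the role names, and the VLAN IDs in the example are hypothetical, and you would have to collect the per-site VLAN lists from your switches yourself.

```python
def missing_vlans(required, site_vlans):
    """Report which required VLANs are not available at each site.

    required: dict mapping a role (e.g. "management") to its VLAN ID.
    site_vlans: dict mapping a site name to the set of VLAN IDs present there.
    Returns a dict of site name -> sorted list of missing roles.
    Sites that have every required VLAN are left out of the result.
    """
    missing = {}
    for site, present in site_vlans.items():
        absent = sorted(
            role for role, vlan_id in required.items() if vlan_id not in present
        )
        if absent:
            missing[site] = absent
    return missing


# Hypothetical worksheet values: one management VLAN and two iSCSI VLANs.
required = {"management": 100, "iscsi1": 201, "iscsi2": 202}
site_vlans = {
    "site1": {100, 201, 202},
    "site2": {100, 201},  # the second iSCSI VLAN was not stretched to site 2
}
print(missing_vlans(required, site_vlans))
```

An empty result means that the management VLAN and both iSCSI VLANs are present on both sites, which is what Peer Persistence with dHCI needs.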

Now that you have your network in place, you can start the deployment! But did you fill out your worksheet? If yes, you are awesome. If no, I still believe that you’re awesome, but please take a couple of minutes to fill it out :)

Let’s go over the deployment steps for Peer Persistence at a high level.

-

Perform the steps from the section Deploying the HPE Nimble Storage dHCI solution from our deployment guide on the first dHCI system. Make sure that you select all the servers from both sites during the deployment. This step is really important, as it will assure a successful deployment.

-

On the second dHCI system, perform only the task Discover the array from the section Deploying the HPE Nimble Storage dHCI solution.

-

When the Setup Complete message appears, you can close the window. Yes, you read it correctly, after the Nimble array configuration, we close the browser and you can move back to the first dHCI solution.

-

Log in to the HPE Nimble Storage web UI on the first dHCI solution.

-

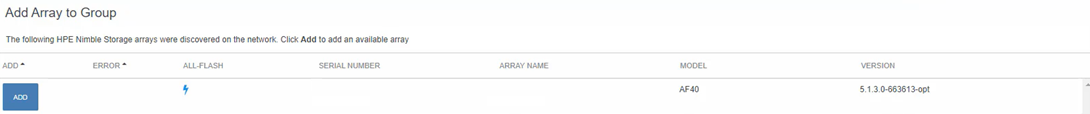

Click Hardware, Action, Add Array to Group, and look for your second array in the list.

-

Click Add and provide the login and password of your second array.

-

Click Finish.

At this point, the relationship between the two arrays is created. You can use the HPE Nimble Storage dHCI vCenter plugin to create a volume collection and datastore.

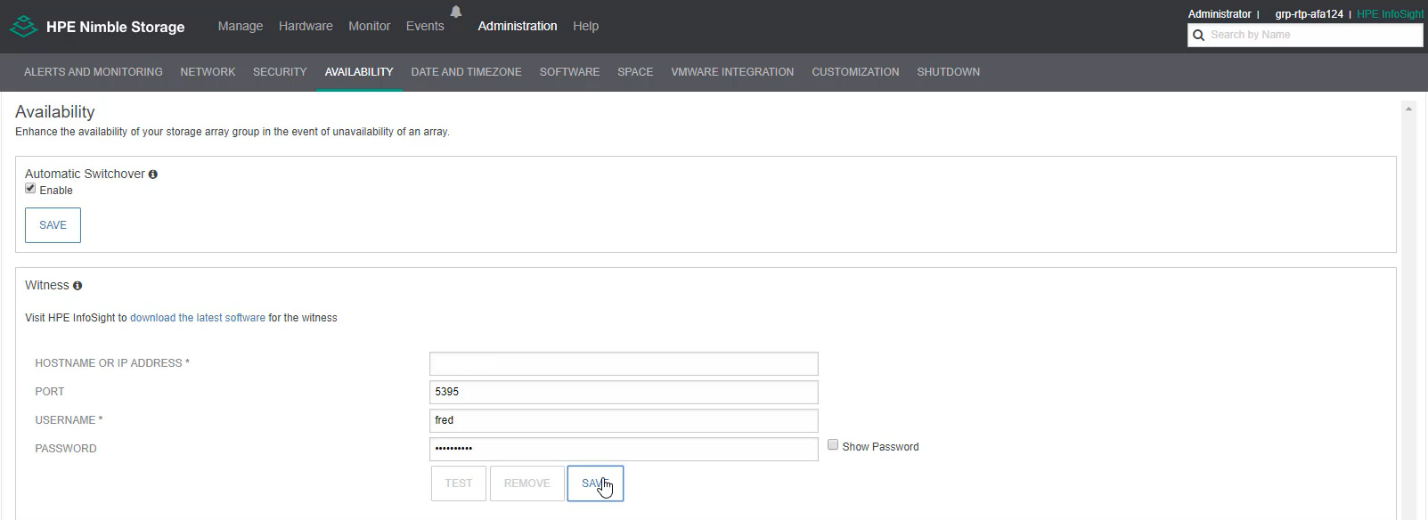

Isn’t that simple? In a few steps, you have two HPE Nimble Storage dHCI systems deployed with Peer Persistence on top. Now you can decide whether to use Automatic Switch Over (a.k.a. ASO) or only synchronous replication. If you plan to use ASO, you need a third site where you can deploy a witness VM. If so, please refer to the deployment guide available on InfoSight.

In my lab, I have deployed a witness VM and have also enabled ASO.

Management:

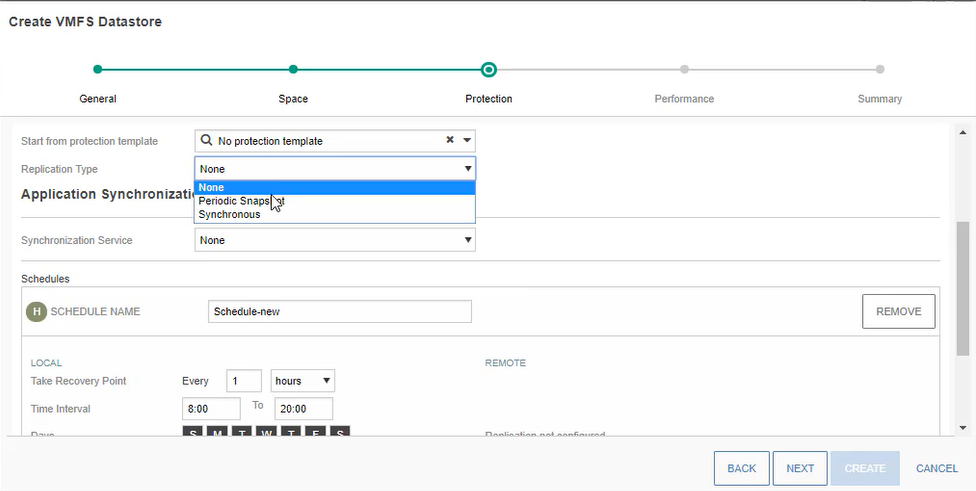

Let’s create a VMFS datastore that uses Peer Persistence.

-

Open a web browser and connect to vCenter (HTML5).

-

Click Menu and select HPE Nimble Storage.

-

Click Nimble Groups and select your group.

-

Click Datastores and select VMFS.

-

Click the plus sign (+) to add a new datastore.

-

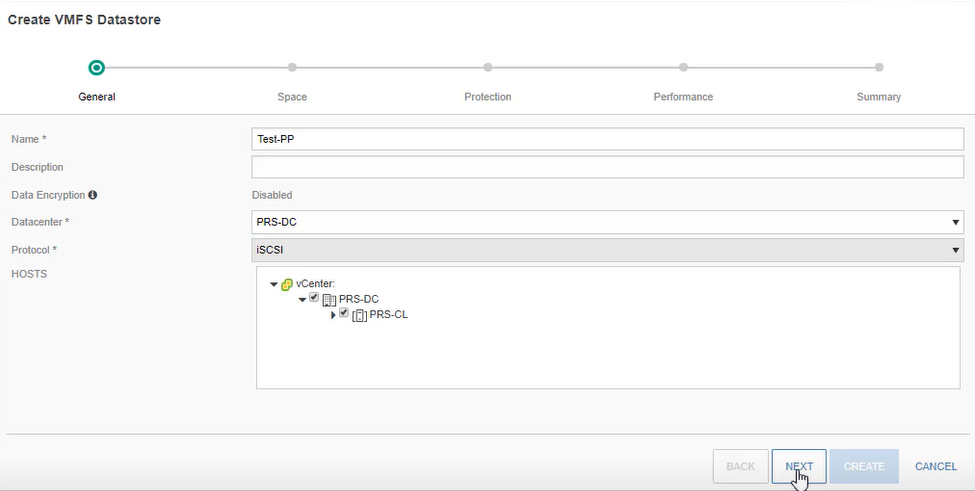

In the Datastore dialog box, provide the following information and then click Next:

-

Specify a name for the datastore.

-

Optionally, you can provide a short description of the datastore.

-

Select the datacenter where you want the VMFS datastore to be created.

-

Under Protocol, select iSCSI.

-

Under Host, select your HPE Nimble Storage dHCI cluster.

-

-

Specify a size for the VMFS datastore.

-

Click Location to specify the array on which want to create the volume. As you can see, I have two different pools, which is exactly the behavior expected here. You can select on which pool you would like to create the volume

-

Select Create a new volume collection to use with this datastore and then click Next. The dialog box expands to enable you to create a volume collection and a schedule for it. You must provide a name for the volume collection. You can then use that volume collection with another datastore, if you choose. Next, complete the information in the Create Volume Collection section of the dialog box. You might need to use the scroll bar to see all the options. The same volume collection can be used with multiple volumes.

-

Replication type. Select Synchronous

-

Replication partner. The replication partner should be auto selected.

-

-

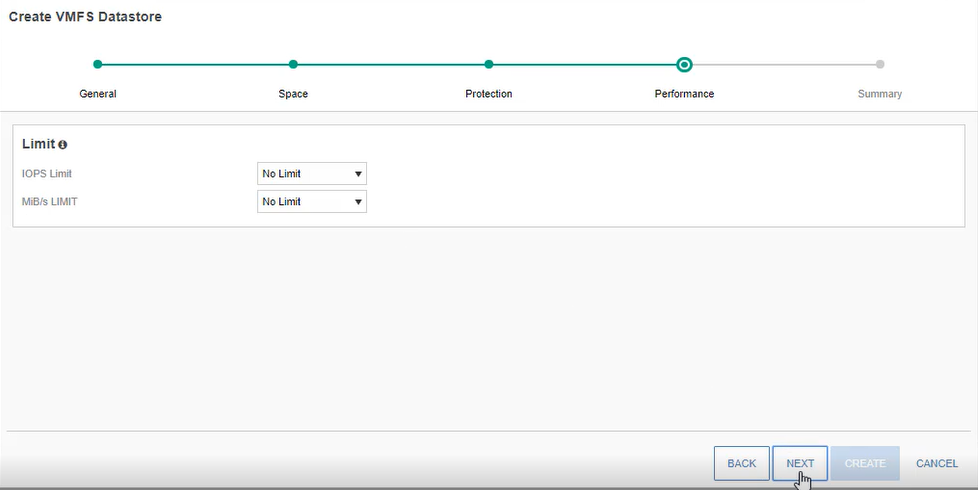

Optionally set limits for IOPS and throughput and click Next. You can select either No Limit or Set Limit, which allows you to enter a value for that option.

-

View the settings summary and click Finish.

-

The new datastore is now created and automatically replicated synchronously on your second array.

You can create a datastore on array 1 or array 2 at your convenience. One best practice I can share: make sure that the VMs running on site 1 use datastores from the array located on site 1, and vice versa. This keeps latency lower, since the arrays write their data locally on their own site.
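If you want to audit the site-affinity best practice above, a small script over an exported inventory is enough. The sketch below is purely illustrative: `misplaced_vms` and the three dictionaries are hypothetical, and how you export the VM-to-site and datastore-to-array mappings from vCenter is up to you.

```python
def misplaced_vms(vm_site, vm_datastore, datastore_site):
    """List VMs whose datastore lives on the array at the other site.

    vm_site: dict mapping a VM name to the site where it runs.
    vm_datastore: dict mapping a VM name to its datastore name.
    datastore_site: dict mapping a datastore name to the site of the
        array that owns it.
    Returns (vm, vm_site, datastore, datastore_site) tuples, sorted by VM name.
    """
    result = []
    for vm, site in sorted(vm_site.items()):
        datastore = vm_datastore[vm]
        owner_site = datastore_site[datastore]
        if owner_site != site:
            result.append((vm, site, datastore, owner_site))
    return result


# Hypothetical inventory: web01 runs on site 1 but uses a site 2 datastore.
vm_site = {"web01": "site1", "db01": "site2"}
vm_datastore = {"web01": "ds-site2", "db01": "ds-site2"}
datastore_site = {"ds-site1": "site1", "ds-site2": "site2"}

for vm, site, datastore, owner_site in misplaced_vms(vm_site, vm_datastore, datastore_site):
    print(f"{vm} runs on {site} but uses {datastore} from the array on {owner_site}")
```

Any VM that shows up in the output is a candidate for a Storage vMotion to a datastore on its local array, or for a move to the other site.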

At this point, you have a fully configured HPE Nimble Storage dHCI solution using Peer Persistence!

Stay tuned, more to come!

