Tech Preview: Docker Volume plugin for HPE Cloud Volumes

You can’t go to DockerCon without something new and exciting to talk about. This year is no different in that regard, but I’d say our engineering teams have been busier than ever. Since the inception of HPE Cloud Volumes (formerly Nimble Cloud Volumes), we’ve talked about how we would support containerized workloads. From day one we’ve been able to provide storage to instances running Docker to serve the local registry and local volumes. Publishing the REST APIs made it fairly trivial to provision Docker Swarm clusters running on HPE Cloud Volumes using Ansible. Today I’m pleased to share the next milestone as we preview the Docker Volume plugin for HPE Cloud Volumes on AWS!

The plugin runs like any other Docker Volume plugin on each of the cluster members and allows users to provision persistent storage for containerized workloads seamlessly from HPE Cloud Volumes. For our current customer base, it means that you don’t have to compromise on storage capabilities when moving workloads to the public cloud: we can replicate your on-premises data from any HPE Nimble Storage array onto HPE Cloud Volumes and make it instantly available to the Docker Volume plugin. For net new customers and prospects, think of this as EBS with many times the reliability and with unmatched data services such as instant snapshots, clones and the ability to instantly restore a volume to a known state. No transfer to S3 is involved, which seems to be a popular pattern for some of the software-defined storage solutions you can run on top of EBS in a bring-your-own fashion for container-native storage. This also highlights an important point: HPE Cloud Volumes is a true cloud-native storage-as-a-service.

Using the ‘hpecv’ Docker Volume plugin

Without further ado, let’s dig into the details. Please bear in mind that what you see below is tech preview software and may change without notice before release. The Docker Volume plugin installs on your Linux EC2 instances and registers a volume driver named ‘hpecv’. You need to generate an access key and a secret key in HPE Cloud Volumes and store them on the host itself to allow seamless interaction with our APIs. There are also many other details about your cloud environment (region, VPC, subnet and initiator IP, for example) that we need to know when we provision resources. Luckily, all of this can be preconfigured and will be used as defaults when creating new volumes.

A default ‘docker-driver.json’ file may contain something similar to this:

```
# cat /opt/hpe-storage/etc/docker-driver.json
{
  "global": {
    "volumeDir": "/opt/nimble/",
    "nameSuffix": ".docker",
    "snapPrefix": "BaseFor",
    "initiatorIP": "172.31.32.89",
    "automatedConnection": true,
    "existingCloudSubnet": "172.31.32.0/24",
    "region": "us-west-1",
    "privateCloud": "vpc-34070850",
    "cloudComputeProvider": "Amazon AWS"
  },
  "defaults": {
    "sizeInGiB": 1,
    "limitIOPS": 300,
    "fsOwner": "root:root",
    "fsMode": "600",
    "perfPolicy": "Other",
    "protectionTemplate": "twicedaily:4",
    "encryption": true,
    "volumeType": "GPF",
    "destroyOnRm": true,
    "mountConflictDelay": 10
  },
  "overrides": {
  }
}
```
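The file is split into `global` settings, `defaults` and `overrides`. To illustrate how the last two could interact with the options a user passes with `-o`, here is a minimal Python sketch, assuming the precedence defaults < user options < overrides (defaults fill in whatever the user leaves out, and overrides cannot be changed by the user). The helper `effective_options` and the `limitIOPS` override are hypothetical and not part of the plugin; user options are assumed to be already converted to the types used in the file.

```python
import json

# Trimmed copy of the docker-driver.json shown above. The "overrides" entry
# for limitIOPS is a hypothetical example; the original file has none.
CONFIG_TEXT = """
{
  "global": {"nameSuffix": ".docker", "region": "us-west-1"},
  "defaults": {"sizeInGiB": 1, "limitIOPS": 300, "volumeType": "GPF",
               "protectionTemplate": "twicedaily:4", "destroyOnRm": true},
  "overrides": {"limitIOPS": 1000}
}
"""


def effective_options(config: dict, user_opts: dict) -> dict:
    """Hypothetical helper: merge options with the assumed precedence
    defaults < user-supplied -o options < administrator overrides."""
    merged = dict(config.get("defaults", {}))   # start from the defaults
    merged.update(user_opts)                     # user options replace defaults
    merged.update(config.get("overrides", {}))   # overrides always win
    return merged


if __name__ == "__main__":
    cfg = json.loads(CONFIG_TEXT)
    print(effective_options(cfg, {"sizeInGiB": 10, "limitIOPS": 5000}))
```

Under these assumptions, a user asking for 5000 IOPS still gets the administrator's 1000 IOPS cap, while the requested 10 GiB size is honored.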

With all these defaults in place, we can now create and inspect a volume with minimal effort:

```
$ docker volume create -d hpecv myvol1
myvol1
$ docker volume inspect myvol1
[
    {
        "CreatedAt": "0001-01-01T00:00:00Z",
        "Driver": "hpecv",
        "Labels": {},
        "Mountpoint": "",
        "Name": "myvol1",
        "Options": {},
        "Scope": "global",
        "Status": {
            "block_size": 4096,
            "connection_provisioning": "automated",
            "cv_region": "us-test",
            "destroy_on_detach": false,
            "destroy_on_rm": true,
            "encryption": true,
            "limit_iops": 300,
            "limit_mbps": 3,
            "marked_for_deletion": false,
            "mount_conflict_delay": 10,
            "name": "myvol1.docker",
            "perf_policy": "Other Workloads",
            "protection_schedule": "snap:twicedaily:4:retention",
            "serial": "404b8c2140965e206c9ce90060514147",
            "size_in_mib": 1024,
            "volume_type": "GPF"
        }
    }
]
```

Attaching this volume to a stateful workload is quite trivial:

```
$ docker run --rm -d -e MYSQL_RANDOM_ROOT_PASSWORD=yes -v myvol1:/var/lib/mysql --name mariadb mariadb
09a10d6ee8ac8ad2c8720e2af238e0bd6130e2471f8a5d1732322d7631e8cb46
$ docker exec mariadb df -h /var/lib/mysql
Filesystem          Size  Used Avail Use% Mounted on
/dev/mapper/mpatha 1014M  162M  853M  16% /var/lib/mysql
```

So, what we have here is a fairly modest 1 GiB, 300 IOPS volume served from our General Purpose Flash (GPF) tier, with automatic snapshots taken twice daily and a revolving retention of 4 snapshots.
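The inspect output can be reconciled with the configuration using simple arithmetic: `sizeInGiB` 1 becomes `size_in_mib` 1024, the `nameSuffix` ".docker" produces the backend name `myvol1.docker`, and a `twicedaily:4` template keeps four snapshots, so the oldest one is never much more than about two days old. A minimal Python sketch of that arithmetic; the helper names are hypothetical, and the snapshot intervals (in particular 12 hours for "twicedaily") are assumptions for illustration.

```python
# Hypothetical helpers reconciling docker-driver.json defaults with the
# "docker volume inspect" output shown above.

# Assumed snapshot intervals in hours; "twicedaily" is taken to mean every 12 hours.
INTERVAL_HOURS = {"hourly": 1, "twicedaily": 12, "daily": 24, "weekly": 168}


def backend_name(volume: str, name_suffix: str = ".docker") -> str:
    """Cloud Volume name as reported in Status.name (e.g. myvol1.docker)."""
    return volume + name_suffix


def size_in_mib(size_in_gib: int) -> int:
    """1 GiB = 1024 MiB, matching size_in_mib in the inspect output."""
    return size_in_gib * 1024


def retention_window_hours(template: str) -> int:
    """Upper bound, in hours, on the age of the oldest retained snapshot
    for a schedule:retention template: retention count times the interval."""
    schedule, retention = template.split(":")
    return INTERVAL_HOURS[schedule] * int(retention)


if __name__ == "__main__":
    print(backend_name("myvol1"))                  # myvol1.docker
    print(size_in_mib(1))                          # 1024
    print(retention_window_hours("twicedaily:4"))  # 48
```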

We pride ourselves on making things as simple as possible, but we don’t want to limit the end user. The system administrator sets up a set of default parameters and may also provide overrides for parameters that the end user can’t manipulate, such as protection schedules, IOPS caps and volume types. The parameters available for volume creation are always listed by the help option:

```
$ docker volume create -d hpecv -o help
HPE Cloud Volumes Docker Volume Driver: Create Help
Create or Clone a HPE Cloud Volumes backed Docker Volume or Import an existing Volume or Clone of a Snapshot into Docker.

Universal options:
  -o mountConflictDelay=X  X is the number of seconds to delay a mount request when there is a conflict (default is 0)

Create options:
  -o sizeInGiB=X           X is the size of volume specified in GiB
  -o size=X                X is the size of volume specified in GiB (short form of sizeInGiB)
  -o fsOwner=X             X is the user id and group id that should own the root directory of the filesystem, in the form of [userId:groupId]
  -o fsMode=X              X is 1 to 4 octal digits that represent the file mode to be applied to the root directory of the filesystem
  -o perfPolicy=X          X is the name of the performance policy (optional)
                           Performance Policies: Other, Exchange, Oracle, SharePoint, SQL, Windows File Server
  -o protectionTemplate=X  X is the protection template in the form of schedule:retention
                           Protection Templates: daily:3, daily:7, daily:14, hourly:6, hourly:12, hourly:24
                           twicedaily:4, twicedaily:8, twicedaily:14, weekly:2, weekly:4, weekly:8, monthly:3, monthly:6, monthly:12 or none
  -o encryption            indicates that the volume should be encrypted (optional)
  -o volumeType=X          X indicates the cloud volume type(optional)
                           Volume Types: PF, GPF
  -o limitIOPS=X           X is the IOPS limit of the volume. IOPS limit should be in range [300, 50000]
  -o destroyOnRm           indicates that the cloud volume (including snapshots) backing this volume should be destroyed when this volume is deleted

Clone options:
  -o cloneOf=X             X is the name of Docker Volume to create a clone of
  -o snapshot=X            X is the name of the snapshot to base the clone on (optional, if missing, a new snapshot is created)
  -o createSnapshot        indicates that a new snapshot of the volume should be taken and used for the clone (optional)
  -o destroyOnDetach       indicates volume to be destroyed on last unmount request
  -o destroyOnRm           indicates that the cloud volume (including snapshots) backing this volume should be destroyed when this volume is deleted

Import Volume options:
  -o importVol=X           X is the name of the existing Cloud Volume to import
  -o forceImport           forces the import of the volume. Note that overwrites application metadata (optional)

Import Clone of Snapshot options:
  -o importVolAsClone=X    X is the name of the Cloud Volume and Snapshot to clone and import
  -o snapshot=X            X is the name of the snapshot to clone and import (optional, if missing, will use the most recent snapshot)
  -o createSnapshot        indicates that a new snapshot of the volume should be taken and used for the clone (optional)
  -o destroyOnRm           indicates that the Cloud Volume (including snapshots) backing this volume should be destroyed when this volume is deleted
  -o destroyOnDetach       indicates that the Cloud Volume (including snapshots) backing this volume should be destroyed when this volume is unmounted or detached
```
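The constraints in the help output above are simple enough to check on the client before calling the driver, for instance in a wrapper script. A minimal Python sketch of such a check; `validate_create_options` is hypothetical and not part of the plugin, and the allowed values are transcribed from the tech preview help text, so they may change before release.

```python
import re

# Allowed values transcribed from the "docker volume create -d hpecv -o help"
# output (tech preview; these may change before release).
PROTECTION_TEMPLATES = {
    "daily:3", "daily:7", "daily:14", "hourly:6", "hourly:12", "hourly:24",
    "twicedaily:4", "twicedaily:8", "twicedaily:14", "weekly:2", "weekly:4",
    "weekly:8", "monthly:3", "monthly:6", "monthly:12", "none",
}
VOLUME_TYPES = {"PF", "GPF"}
PERF_POLICIES = {"Other", "Exchange", "Oracle", "SharePoint", "SQL",
                 "Windows File Server"}


def validate_create_options(opts: dict) -> list:
    """Hypothetical client-side check of -o options; returns a list of error
    strings, empty when every supplied option is within the documented limits."""
    errors = []
    iops = opts.get("limitIOPS")
    if iops is not None and not (str(iops).isdigit() and 300 <= int(iops) <= 50000):
        errors.append("limitIOPS must be in range [300, 50000]")
    if "volumeType" in opts and opts["volumeType"] not in VOLUME_TYPES:
        errors.append("volumeType must be PF or GPF")
    if "perfPolicy" in opts and opts["perfPolicy"] not in PERF_POLICIES:
        errors.append("unknown perfPolicy: " + str(opts["perfPolicy"]))
    if ("protectionTemplate" in opts
            and opts["protectionTemplate"] not in PROTECTION_TEMPLATES):
        errors.append("unknown protectionTemplate: " + str(opts["protectionTemplate"]))
    # fsMode: 1 to 4 octal digits, e.g. "600"
    if "fsMode" in opts and not re.fullmatch(r"[0-7]{1,4}", str(opts["fsMode"])):
        errors.append("fsMode must be 1 to 4 octal digits")
    # fsOwner: user:group; the sample config uses names ("root:root"), so accept both
    if "fsOwner" in opts and not re.fullmatch(r"[^:\s]+:[^:\s]+", str(opts["fsOwner"])):
        errors.append("fsOwner must be in the form userId:groupId")
    return errors


if __name__ == "__main__":
    print(validate_create_options({"limitIOPS": "5000", "volumeType": "PF"}))  # []
    print(validate_create_options({"limitIOPS": "100", "fsMode": "999"}))
```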

As you can see, we have not held back on functionality: all the lifecycle controls and the snapshot, clone and import operations implemented in our HPE Nimble Storage Docker Volume plugin for on-premises arrays are available here as well.

Use cases

We see a number of trends in the DevOps space where our integration can provide tremendous value across a broad spectrum of use cases:

- Lift & shift workloads to the cloud: replicate your data from on-premises and import it into a Docker Volume.
- Cloud bursting: again, replicate your data to the cloud, then run long-running reporting jobs and analysis using cloud compute as a complement to on-premises compute infrastructure.
- Enterprise applications in the public cloud: run them in containers without sacrificing the reliability, availability and serviceability traditionally found on Enterprise-grade storage arrays, while consuming storage in a cloud-native model.
- Dev and test: use the public cloud for development and testing, and present your datasets to ETL and CI/CD pipelines running in containers.
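For the lift & shift and cloud bursting cases, replicated data reaches Docker through the import options listed in the help output above. A minimal Python sketch that only assembles the corresponding `docker volume create` command line without running it; the function name and the volume names `sales-db` and `sales-db-replica` are hypothetical.

```python
import shlex
from typing import List, Optional


def import_clone_cmd(docker_volume: str, cloud_volume: str,
                     snapshot: Optional[str] = None,
                     destroy_on_rm: bool = False) -> List[str]:
    """Build (but do not run) a command that imports a clone of a snapshot of
    an existing Cloud Volume as a Docker volume, using the hpecv options
    documented in the help output."""
    cmd = ["docker", "volume", "create", "-d", "hpecv",
           "-o", f"importVolAsClone={cloud_volume}"]
    if snapshot:
        # Without snapshot=, the help text says the most recent snapshot is used.
        cmd += ["-o", f"snapshot={snapshot}"]
    if destroy_on_rm:
        # Destroy the backing Cloud Volume when the Docker volume is removed.
        cmd += ["-o", "destroyOnRm"]
    cmd.append(docker_volume)
    return cmd


if __name__ == "__main__":
    print(shlex.join(import_clone_cmd("sales-db", "sales-db-replica")))
    # docker volume create -d hpecv -o importVolAsClone=sales-db-replica sales-db
```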

One of the demos we’re bringing to DockerCon leverages our snapshot, cloning and automatic lifecycle controls to build, ship, test and deploy an application that relies on an 800GB database with about 10 million rows. The CI/CD pipeline is set up in Jenkins, and a build job, from a push to GitHub to the application being deployed and running in production, completes in less than 2 minutes. In those two minutes, the staging environment is torn down and re-provisioned with the new image running off a fresh clone; after successful tests, the image is pushed to production. Obviously, the tests aren’t that sophisticated, but it proves the point that you no longer have to copy data around to do reliable testing with production data, which in turn boosts quality and developer productivity.
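A staging refresh like the one in that demo boils down to a handful of Docker CLI calls. A minimal Python sketch that only assembles those commands, assuming a MariaDB-style data path and hypothetical names (`proddb`, `stagingdb`, `myapp:latest`); it is not the actual Jenkins job from the demo. It relies on two behaviors from the help output: `cloneOf` without `snapshot` takes a new snapshot, and `destroyOnRm` destroys the backing Cloud Volume when the Docker volume is deleted.

```python
import shlex
from typing import List


def staging_refresh_cmds(prod_volume: str, staging_volume: str, image: str,
                         container: str = "staging") -> List[List[str]]:
    """Hypothetical pipeline step: tear down staging, clone production data
    and start the new image on the clone. Commands are returned, not run."""
    return [
        # Force-remove the previous staging container so its volume is released.
        ["docker", "rm", "-f", container],
        # Remove the old clone; with destroyOnRm the backing Cloud Volume goes too.
        ["docker", "volume", "rm", staging_volume],
        # Clone production; omitting snapshot= makes the driver take a new snapshot.
        ["docker", "volume", "create", "-d", "hpecv",
         "-o", f"cloneOf={prod_volume}", "-o", "destroyOnRm", staging_volume],
        # Start the freshly built image against the cloned data.
        ["docker", "run", "-d", "--name", container,
         "-v", f"{staging_volume}:/var/lib/mysql", image],
    ]


if __name__ == "__main__":
    for cmd in staging_refresh_cmds("proddb", "stagingdb", "myapp:latest"):
        print(shlex.join(cmd))
```

On a first run the two removal steps would fail because nothing exists yet, so a real pipeline would need to tolerate those errors. Passing `destroyOnRm` explicitly is redundant with the sample defaults but makes the intent visible.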

What about Kubernetes?

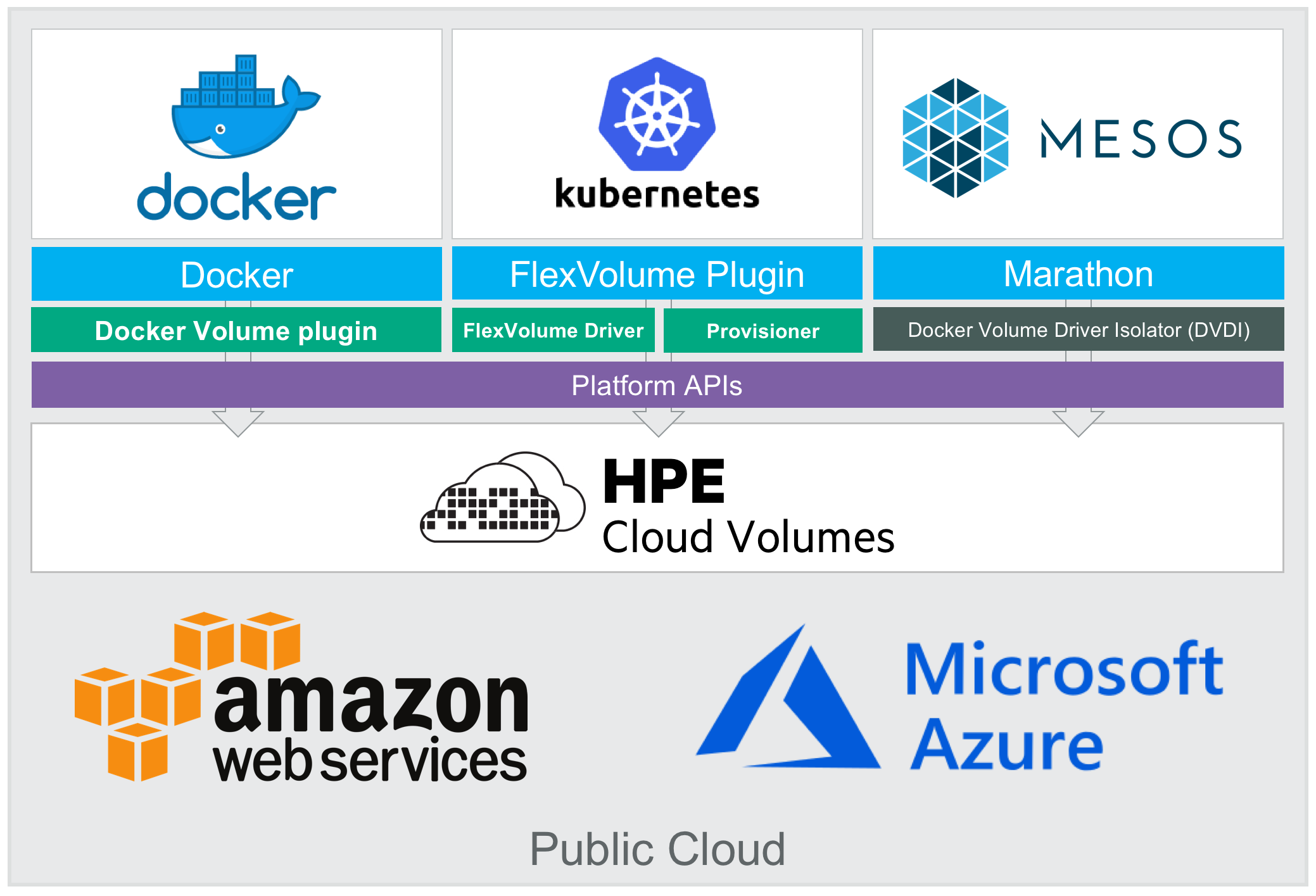

The Docker Volume plugin for HPE Cloud Volumes is fully compatible with the HPE Nimble Kube Storage Controller and FlexVolume driver. It’s therefore possible out of the gate to leverage Kubernetes, Red Hat OpenShift and Docker Enterprise Edition 2.0 as the container orchestrator. All the parameters available to the Docker Volume plugin may be used with the FlexVolume driver directly or leveraged by StorageClasses in Kubernetes.

Here’s an example StorageClass crafted to serve transactional workloads on Kubernetes using the HPE Nimble Kube Storage Controller for HPE Cloud Volumes:

```
---
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: transactionaldb
provisioner: hpe.com/cv
parameters:
  description: "Volume provisioned by HPE Nimble Storage Kube Storage Controller from transactionaldb StorageClass"
  perfPolicy: "SQL"
  limitIOPS: "5000"
  volumeType: "PF"
```
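Workloads consume a StorageClass through a PersistentVolumeClaim that names it in `storageClassName`. A minimal sketch that builds such a claim in Python and serializes it to JSON, which `kubectl` accepts just like YAML; the claim name `mariadb-data` and the 100Gi size are hypothetical.

```python
import json


def pvc_manifest(name: str, storage_class: str, size: str) -> dict:
    """Build a PersistentVolumeClaim requesting storage from a StorageClass."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # A block-backed volume is typically mounted by a single node.
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # Save the output as pvc.json and apply it with "kubectl create -f pvc.json".
    print(json.dumps(pvc_manifest("mariadb-data", "transactionaldb", "100Gi"), indent=2))
```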

This is nothing new for users familiar with the NLT-2.3 release we announced some time ago. The Docker Volume plugin for HPE Cloud Volumes will offer interoperability across container orchestrators very similar to that of the HPE Nimble Storage array integration.

Docker Enterprise Edition 2.0

We are going to DockerCon, and I want to congratulate our partner Docker on their flagship product release, Docker Enterprise Edition 2.0! Innovation never stops, and Docker has essentially reinvented itself by introducing Kubernetes on Docker as a complement to Docker Swarm. We’ve been working closely with Docker over the last few months to ensure that our integrations with Docker Swarm and Kubernetes can co-exist in the same cluster, so users can enjoy persistent storage for both Swarm and Kubernetes, not only with on-premises HPE Nimble Storage arrays but also with HPE Cloud Volumes. Yes, that’s right: all the tech preview demos we’ve prepared for DockerCon around HPE Cloud Volumes run on the officially supported Docker Enterprise Edition for AWS! Unfortunately, we don’t have a firm release date yet for the HPE Cloud Volumes integration, but it will be coming out later this year.

We recently published the HPE Nimble Storage Integration Guide for Docker Enterprise Edition for customers on HPE InfoSight. It contains all the steps needed to deploy a supported persistent storage solution for both Docker Swarm and Kubernetes on Docker EE 2.0 with HPE Nimble Storage.

I hope to see you at DockerCon!
