Memory-Driven Computing designed for Next-Gen In-Memory Analytics Solutions

Discover the advantages of Memory-Driven Computing for Next-Gen In-Memory Analytics. Learn about

Current enterprise challenges around handling growing data volume

Businesses are experiencing an exponential increase in data coming from an explosion of sources, and they have a vanishingly small window of time to turn that data into meaningful action. In the past, processing capability kept pace thanks to Moore’s law, the observation that the number of transistors on an integrated circuit doubles roughly every two years. That pace can no longer be taken for granted: data growth and data analytics requirements are now outpacing the compute and storage technologies that have provided the foundation of processor-driven architectures for the last five decades.

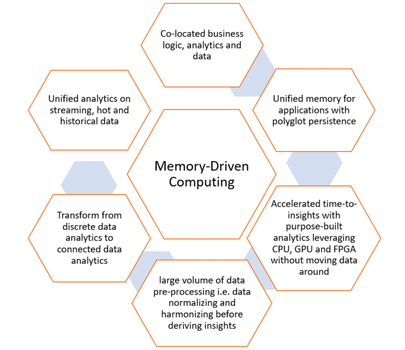

Deriving such time-critical insights requires an architectural shift from compute-driven analytics to memory-driven analytics. This architectural redesign is being driven by the challenges shown here.

Memory-Driven Computing is an almost infinitely flexible and scalable architecture that can complete computing tasks much faster, while using much less energy, than conventional systems. This performance is possible because any combination of computing elements can be composed and can communicate at the fastest possible speed: the speed of memory.

Only through a new architecture like Memory-Driven Computing will it be possible to work simultaneously with every digital health record of every person on earth, every trip of Google’s autonomous vehicles, and every data set from space exploration, getting to answers and uncovering new opportunities at unprecedented speeds.

In this blog, I’m highlighting key Next-Gen Analytics workloads that take advantage of Memory-Driven Computing architecture and scale to meet real-time analytics requirements. Here are a few industry use cases that take advantage of the architectural principles of memory-driven analytics.

Use case 1: Cybersecurity analytics

Cybersecurity analytics involves identifying cyber intrusion behaviors in a deployed infrastructure comprising a complex network of servers, routers, gateways, and storage.

Developing such cybersecurity analytics involves analyzing a massive volume of infrastructure network traffic information from network connection logs, HTTP logs, DHCP logs, SMTP logs, and netflow information, and using it to establish a network of infrastructure entities and their relationships. This is achieved by building a network graph that enables detection of network anomaly patterns, leading to the identification of threats such as zombie reboots or RDP hacking.
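To make the graph-building step more concrete, here is a minimal Python sketch under stated assumptions. The `Connection` record schema, the `build_graph` and `rdp_fanout_suspects` helpers, and the fan-out threshold are all hypothetical, and the RDP fan-out rule is only a simple illustrative heuristic, not the detection logic used for zombie reboots or RDP hacking in practice. It builds a directed host graph from connection records and flags hosts that open RDP sessions to unusually many distinct machines.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable, NamedTuple

RDP_PORT = 3389  # well-known Remote Desktop Protocol port


class Connection(NamedTuple):
    """One simplified connection-log / netflow record (hypothetical schema)."""
    src: str
    dst: str
    dst_port: int


def build_graph(records: Iterable[Connection]) -> dict[str, dict[str, set[int]]]:
    """Build a directed host graph: src host -> {dst host -> set of destination ports}."""
    graph: dict[str, dict[str, set[int]]] = defaultdict(lambda: defaultdict(set))
    for r in records:
        graph[r.src][r.dst].add(r.dst_port)
    return graph


def rdp_fanout_suspects(graph: dict[str, dict[str, set[int]]],
                        max_rdp_targets: int = 3) -> list[str]:
    """Flag hosts that open RDP connections to more distinct hosts than the threshold."""
    suspects = []
    for src, neighbours in graph.items():
        rdp_targets = sum(1 for ports in neighbours.values() if RDP_PORT in ports)
        if rdp_targets > max_rdp_targets:
            suspects.append(src)
    return sorted(suspects)


# Usage: one host sweeps RDP across ten machines; another behaves normally.
records = [Connection("10.0.0.5", f"10.0.1.{i}", RDP_PORT) for i in range(10)]
records += [Connection("10.0.0.7", "10.0.1.1", 443),
            Connection("10.0.0.7", "10.0.1.2", RDP_PORT)]
print(rdp_fanout_suspects(build_graph(records)))  # ['10.0.0.5']
```

At production scale the same adjacency structure holds billions of edges, which is why the whole graph needs to fit in memory for real-time traversal.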

A typical network graph of this kind contains billions of nodes, together with their properties and the relationships between them. Deriving anomaly patterns across these billions of nodes in real time requires holding the entire graph in memory, on a large-memory infrastructure with terabytes of capacity.

Use case 2: Real-time recommendation engine

The need to build scalable, real-time recommendation engines is growing day by day. With more internet users making their purchases on e-commerce sites, these sites have realized the potential of understanding users’ purchase behavior patterns to improve their business and to serve their customers on a very personalized level. A system that caters to a huge user base and generates recommendations in real time has to be modern, fast, and scalable.

Deploying a real-time recommendation engine involves In-Memory data processing that unifies user data from social media sources, existing customer management solutions, and historical data in existing data warehouses.

Such a unified analytics platform can be built by combining Spark-based In-Memory data processing with a graph-model representation of the customer data. Analytics built this way with Spark apply massive numbers of transformations and actions, and these phases trigger massive data shuffle operations during pre-processing, which are very costly in cluster operation.
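Why shuffles are expensive can be seen in a toy, single-process Python model of a shuffle-based join. This is an illustrative sketch, not Spark code: `partition_for`, `shuffle`, and `shuffle_join` are hypothetical helpers, and CRC32 is used as a stable stand-in for Spark’s own hash partitioning. Every record must be routed to the partition that owns its key before the join can run, which in a real cluster means writing shuffle files and moving data across the network.

```python
import zlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Stable hash partitioner: records with the same key always map to the same partition."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def shuffle(records, num_partitions: int):
    """Route each (key, value) record to the partition that owns its key.

    In a cluster, this routing step is what moves data between executors.
    """
    partitions = [defaultdict(list) for _ in range(num_partitions)]
    for key, value in records:
        partitions[partition_for(key, num_partitions)][key].append(value)
    return partitions


def shuffle_join(left, right, num_partitions: int = 4):
    """Shuffle both inputs by key, then join each pair of co-located partitions locally."""
    left_parts = shuffle(left, num_partitions)
    right_parts = shuffle(right, num_partitions)
    joined = []
    for lp, rp in zip(left_parts, right_parts):
        for key, left_values in lp.items():
            for lv in left_values:
                for rv in rp.get(key, []):  # .get avoids inserting missing keys
                    joined.append((key, lv, rv))
    return sorted(joined)


users = [("u1", "alice"), ("u2", "bob")]
orders = [("u1", "book"), ("u1", "pen"), ("u3", "lamp")]
print(shuffle_join(users, orders))  # [('u1', 'alice', 'book'), ('u1', 'alice', 'pen')]
```

The join itself is cheap once matching keys are co-located; the cost is in the routing, which grows with the full data volume rather than with the size of the result.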

A typical shuffle spans terabytes of data undergoing Repartition, GroupBy, and Join operations, which are very expensive owing to frequent I/O operations. Making these terabytes of data available In-Memory on a large-memory infrastructure enables Spark to perform these data pre-processing operations faster and meet the requirements of fast data analytics.
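On a large-memory host, one way to act on this is through Spark configuration. The sketch below is a hedged example, not an HPE-validated setting: the property names (`spark.memory.offHeap.enabled`, `spark.memory.offHeap.size`, `spark.executor.memory`, `spark.sql.shuffle.partitions`, `spark.local.dir`) are standard Spark properties, but every size, the partition count, and the RAM-backed `/dev/shm/spark` scratch directory are placeholder assumptions, and the helper names are hypothetical.

```python
def large_memory_spark_conf(off_heap_gb: int, executor_heap_gb: int,
                            shuffle_partitions: int,
                            scratch_dir: str = "/dev/shm/spark") -> dict:
    """Return Spark properties for a large-memory host (all sizes are placeholders)."""
    if off_heap_gb <= 0:
        raise ValueError("spark.memory.offHeap.size must be positive when off-heap is enabled")
    return {
        # Let Spark's execution and storage memory use memory outside the JVM heap.
        "spark.memory.offHeap.enabled": "true",
        "spark.memory.offHeap.size": f"{off_heap_gb}g",
        # Regular JVM heap per executor.
        "spark.executor.memory": f"{executor_heap_gb}g",
        # Number of partitions used by DataFrame/SQL shuffles (joins, aggregations).
        "spark.sql.shuffle.partitions": str(shuffle_partitions),
        # Scratch space for shuffle map output; /dev/shm is RAM-backed tmpfs on many Linux hosts.
        "spark.local.dir": scratch_dir,
    }


def to_submit_args(conf: dict) -> list:
    """Render the properties as spark-submit --conf arguments."""
    args = []
    for key, value in sorted(conf.items()):
        args += ["--conf", f"{key}={value}"]
    return args


print(" ".join(to_submit_args(large_memory_spark_conf(512, 64, 2000))))
```

With PySpark, the same pairs can be passed one by one to `SparkSession.builder.config(key, value)` before `getOrCreate()`. Note that cluster managers can override `spark.local.dir` through environment variables such as `SPARK_LOCAL_DIRS` (standalone) or `LOCAL_DIRS` (YARN).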

Use case 3: Fraud detection

Fraud management is known to be a very painful problem for banking and finance firms, and card-related fraud has proven especially difficult to combat. Technologies such as chip and PIN are available and already used by major card networks such as Visa and Mastercard. However, the available technology cannot eliminate credit card fraud entirely.

Building a fraud prevention solution requires analyzing each credit card transaction within a sub-millisecond time frame and detecting outliers, that is, transactions that deviate from expected patterns and may indicate fraud. With the rise of artificial intelligence (AI), machine learning (ML), and deep learning (DL), it has become feasible to analyze the massive volume of transactions flowing through an enterprise credit card network. These machine learning models are first trained on historical transactions, and live inference is achieved by building a machine learning pipeline for data acquisition, feature engineering, and model serving.
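A much simpler relative of the outlier detection described above can be sketched in Python to show the streaming, per-transaction shape of the problem. This is a stand-in, not the ML approach the post describes: it keeps a running mean and variance per card with Welford’s online algorithm and flags amounts whose z-score exceeds a threshold. The class names, the threshold of 4, and the five-transaction warm-up are hypothetical choices.

```python
import math
from collections import defaultdict


class RunningStats:
    """Welford's online algorithm: mean and sample variance in O(1) per update."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def std(self) -> float:
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


class CardOutlierDetector:
    """Flag transactions whose amount is far from the card's own history."""

    def __init__(self, z_threshold: float = 4.0, min_history: int = 5):
        self.stats = defaultdict(RunningStats)
        self.z_threshold = z_threshold
        self.min_history = min_history

    def score(self, card_id: str, amount: float) -> bool:
        s = self.stats[card_id]
        is_outlier = False
        if s.n >= self.min_history:
            std = s.std()
            if std > 0 and abs(amount - s.mean) / std > self.z_threshold:
                is_outlier = True
        # Only learn from non-outliers so a suspected fraud does not shift the baseline.
        if not is_outlier:
            s.update(amount)
        return is_outlier


detector = CardOutlierDetector()
for amount in [20, 22, 19, 21, 20, 23]:
    detector.score("card-1", amount)
print(detector.score("card-1", 500.0))  # True
print(detector.score("card-1", 21.5))   # False
```

Because each update touches only one card’s three numbers, the per-card state for millions of cards can stay resident in memory and be checked in constant time per transaction.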

Achieving real-time fraud detection against streaming credit card transactions requires building the machine learning pipeline in-memory to perform data collection and transformation, feature engineering, hyper-parameter optimization, and model serving to make high-precision predictions. This can be achieved by implementing Spark and TensorFlow with TensorFrames and taking advantage of co-located GPUs for faster model training.
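The pipeline stages named above (feature engineering, training on historical transactions, and serving) can be sketched end to end in plain Python. This is a deliberately small stand-in: it uses logistic regression trained with batch gradient descent instead of TensorFlow/TensorFrames on GPUs, it omits hyper-parameter optimization, and the transaction fields `amount`, `hour`, and `foreign`, the feature choices, and the helper names are all hypothetical.

```python
import math


def features(txn: dict) -> list:
    """Feature engineering on a hypothetical transaction record."""
    return [
        1.0,                                # bias term
        math.log1p(txn["amount"]),          # compress the heavy-tailed amount scale
        1.0 if txn["hour"] < 6 else 0.0,    # night-time transaction flag
        1.0 if txn["foreign"] else 0.0,     # cross-border transaction flag
    ]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train(txns, labels, lr: float = 0.1, epochs: int = 5000) -> list:
    """Train logistic regression on historical, labeled transactions (full-batch gradient descent)."""
    xs = [features(t) for t in txns]
    w = [0.0] * len(xs[0])
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in zip(xs, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x))) - y
            for j, xj in enumerate(x):
                grad[j] += err * xj
        w = [wi - lr * g / len(xs) for wi, g in zip(w, grad)]
    return w


def predict_proba(w: list, txn: dict) -> float:
    """Model serving: score one live transaction with the trained weights."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, features(txn))))


history = [
    {"amount": 20, "hour": 10, "foreign": False},
    {"amount": 35, "hour": 14, "foreign": False},
    {"amount": 48, "hour": 9, "foreign": False},
    {"amount": 60, "hour": 18, "foreign": False},
    {"amount": 900, "hour": 2, "foreign": True},
    {"amount": 1200, "hour": 3, "foreign": True},
    {"amount": 1600, "hour": 1, "foreign": True},
    {"amount": 2000, "hour": 4, "foreign": True},
]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

weights = train(history, labels)
print(predict_proba(weights, {"amount": 1500, "hour": 3, "foreign": True}))
print(predict_proba(weights, {"amount": 35, "hour": 14, "foreign": False}))
```

Keeping the feature function shared between training and serving is the main design point: the live path computes exactly the same features the model was trained on.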

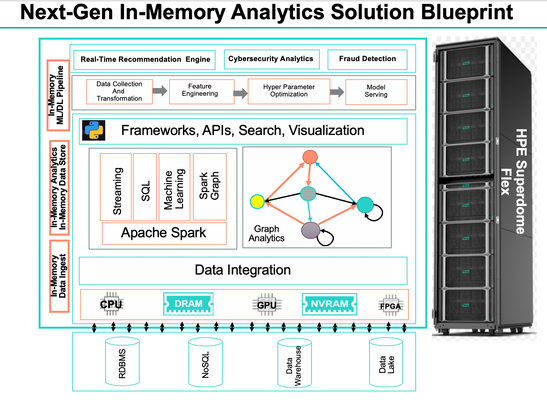

Building on these use cases, here is the high-level architecture of a Next-Gen In-Memory Analytics Solution designed to take advantage of scale-up Memory-Driven Computing infrastructure with HPE Superdome Flex servers.

Key features of In-Memory Analytics solutions

- Build large-scale In-Memory data pipelines with Spark, taking advantage of a large off-heap memory cache for the shuffle operations involved in data preparation

- Accelerate machine learning training and inference against massive volumes of training and test data, and achieve high-precision predictive analytics

- Accelerate connected-data analytics on graph databases using graph algorithms such as PageRank or Single Source Shortest Path (SSSP), taking advantage of the large-memory Superdome Flex infrastructure
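The two graph algorithms named in the last bullet can be sketched in plain Python to show what a graph engine computes over an in-memory graph. This is an illustrative single-machine sketch, not the API of any graph database: `pagerank` uses power iteration with damping 0.85 and spreads the rank of nodes without outgoing edges evenly, `sssp` is Dijkstra’s algorithm and assumes non-negative edge weights, and the adjacency-dict representation is an assumption.

```python
from __future__ import annotations

import heapq


def pagerank(graph: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """PageRank by power iteration over an adjacency list (node -> out-neighbours)."""
    nodes = set(graph) | {v for outs in graph.values() for v in outs}
    n = len(nodes)
    rank = {v: 1.0 / n for v in nodes}
    for _ in range(iterations):
        new = {v: (1.0 - damping) / n for v in nodes}
        # Rank held by nodes with no out-edges is redistributed evenly.
        dangling = sum(rank[v] for v in nodes if not graph.get(v))
        for v in nodes:
            new[v] += damping * dangling / n
        for u, outs in graph.items():
            if outs:
                share = damping * rank[u] / len(outs)
                for v in outs:
                    new[v] += share
        rank = new
    return rank


def sssp(graph: dict[str, list[tuple[str, float]]], source: str) -> dict[str, float]:
    """Single Source Shortest Path (Dijkstra); edge weights must be non-negative."""
    dist = {source: 0.0}
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist.get(u, float("inf")):
            continue  # stale heap entry
        for v, w in graph.get(u, []):
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return dist


links = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}
ranks = pagerank(links)
print(max(ranks, key=ranks.get))  # c

weighted = {"a": [("b", 1.0), ("c", 4.0)], "b": [("c", 1.0), ("d", 5.0)], "c": [("d", 1.0)]}
print(sssp(weighted, "a"))  # {'a': 0.0, 'b': 1.0, 'c': 2.0, 'd': 3.0}
```

Both algorithms repeatedly follow edges from arbitrary nodes, so their speed depends heavily on random access to the whole edge set, which is the access pattern that benefits from keeping the graph in one large memory space.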

How real customer implementations achieve In-Memory Analytics with Memory-Driven Computing

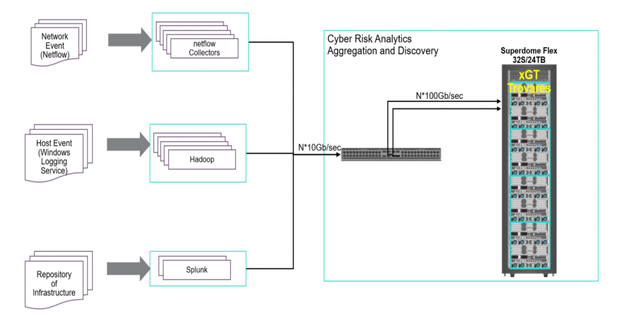

A large financial services organization implemented cybersecurity analytics to identify cyber threats in their operational network by detecting network hacks, zombie reboots, or RDP hacking.

To build these cybersecurity analytics, network-related data was captured over a 90-day period. The datasets included network connection logs, HTTP logs, DHCP logs, SMTP logs, netflow information, and packet capture (pcap) data from the operational network. Historical infrastructure operational data was also available in the existing Hadoop-based data lake.

Challenges addressed during the deployment

Several challenges had to be addressed while implementing this deployment.

The first challenge was to integrate all of this data. This was achieved by aggregating the data from the multiple sources into a large-memory infrastructure hosting terabytes of data.

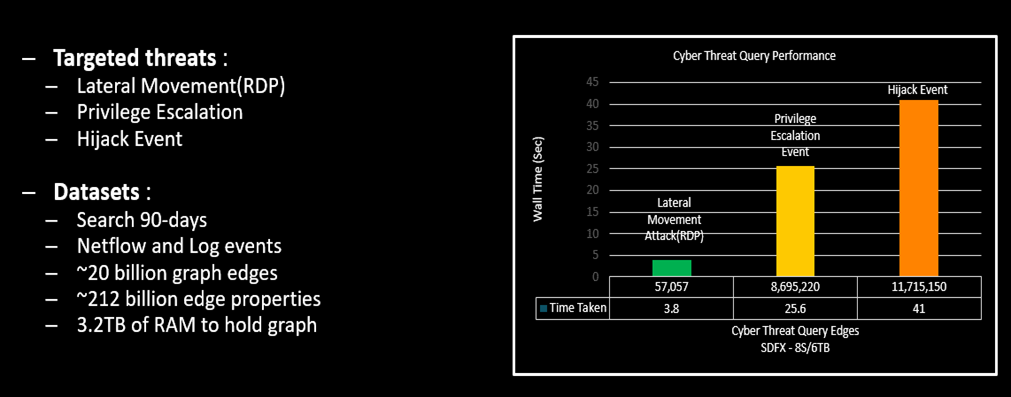

Next, the aggregated data was transformed into a graph data model, and a network graph was built to represent the network entities. The resulting 3 TB in-memory graph contained roughly 20 billion graph edges (17.9 billion netflow edges and 1.5 billion log edges) and 212 billion edge properties. To build this graph, an enterprise software product was deployed in an HPE Superdome Flex large-memory environment to read the input data from the multiple data sources listed above. The tool generated a network graph with billions of edges and edge properties.
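As a rough back-of-envelope check on these figures, dividing the 3 TB graph by its roughly 20 billion edges gives the average memory footprint per edge, and dividing the 212 billion edge properties by the same edge count gives the average number of properties per edge. This assumes decimal terabytes (1 TB = 10^12 bytes) and charges all memory to edges, ignoring node storage and indexes, so the results are averages rather than a description of the storage layout.

$$
\frac{3 \times 10^{12}\ \text{bytes}}{2 \times 10^{10}\ \text{edges}} = 150\ \text{bytes per edge},
\qquad
\frac{2.12 \times 10^{11}\ \text{properties}}{2 \times 10^{10}\ \text{edges}} = 10.6\ \text{properties per edge}
$$

Under the further assumption that the footprint grows linearly with edge count, a graph with twice as many edges at the same property density would need on the order of 6 TB.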

Finally, pattern matching was performed to detect anomalies, using complex pattern matching queries developed for this purpose. Query response times were then measured against the deployed in-memory graph.
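As an illustration of what such a pattern matching query looks for, the sketch below matches a hypothetical two-hop lateral-movement pattern: host a opens an RDP session to host b, and b then opens an RDP session to a third host c within a time window. The `RdpEvent` schema, the 600-second window, and the pattern itself are assumptions for illustration; the actual queries used in the deployment are not described in the source. A graph engine would express this declaratively and use indexes rather than the nested loops shown here.

```python
from collections import defaultdict
from typing import NamedTuple


class RdpEvent(NamedTuple):
    """One RDP connection edge (hypothetical schema)."""
    src: str
    dst: str
    ts: int  # seconds since epoch


def lateral_movement_chains(events, window_s: int = 600):
    """Match (a)-[RDP]->(b)-[RDP]->(c) where hop 2 starts within window_s after hop 1 and c != a."""
    by_src = defaultdict(list)  # index: source host -> outgoing RDP events
    for e in events:
        by_src[e.src].append(e)
    matches = set()
    for first in events:
        for second in by_src.get(first.dst, []):
            if second.dst != first.src and 0 <= second.ts - first.ts <= window_s:
                matches.add((first.src, first.dst, second.dst))
    return sorted(matches)


events = [
    RdpEvent("h1", "h2", 100),
    RdpEvent("h2", "h3", 400),   # within 10 minutes of h1 -> h2: matches
    RdpEvent("h2", "h4", 5000),  # too late to be part of the same chain
    RdpEvent("h2", "h1", 200),   # goes back to the origin: excluded
]
print(lateral_movement_chains(events))  # [('h1', 'h2', 'h3')]
```

The `by_src` index plays the role of a graph adjacency list: each candidate first hop only needs to inspect the outgoing edges of its destination, which is fast when the whole edge set is resident in memory.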

Achieving this level of performance for complex pattern matching operations leaves Superdome Flex well positioned to grow in new areas such as next-generation data analytics, in-memory databases, and AI inferencing, and equally well suited for expansion into the high-performance computing (HPC) space. These next-gen analytics solutions are increasing market demand for this type of platform in time-critical use cases such as real-time recommendation engines, security analytics, and fraud detection across multiple industries.

If you would like to learn more, please contact your HPE representative.

More information and resources

- 6-cybersecurity Mega Trends

- Scale Up Graph Analytics with Trovares

- Scale Up Unsupervised Machine Learning with Reservoir Lab

- Memory-Driven Computing in Superdome Flex

- Mission-Critical Infrastructure for the Data-Driven Enterprise

Server Experts

Hewlett Packard Enterprise

twitter.com/HPE_Servers

linkedin.com/showcase/hpe-servers-and-systems/

hpe.com/servers
