Data Protection with HPE SimpliVity, Part 2: The best disaster is one that never happens

You cannot prevent disaster, but you can prepare

Backups capture data at a single point in time for later recovery, but keeping that data available also requires the hardware that stores and serves it to be as resilient to failure as possible. The HPE SimpliVity 380 and 2600 platforms are built on the HPE ProLiant DL380 and HPE Apollo 2000 hardware platforms respectively, and those platforms are designed and tested for resiliency. HPE hardware features such as certified RAM, redundant power and cooling, and hardware-level security that ensures the integrity of BIOS and firmware form the foundation of a highly resilient hyperconverged node.

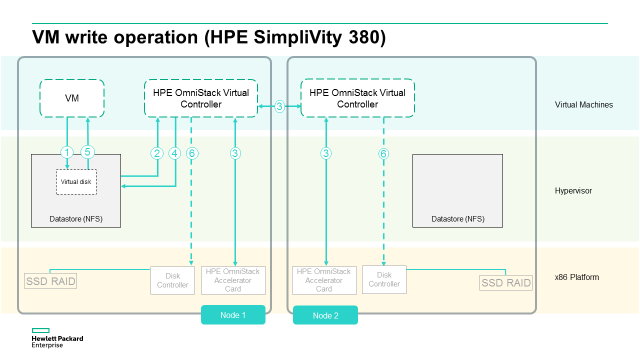

Data integrity is improved by acknowledging writes only once the data is properly persisted, and by performing regular integrity checks of the data after it is written to disk. Data can be persisted directly to disk on the HPE SimpliVity 2600, or to the flash- and supercapacitor-protected RAM on the HPE OmniStack Accelerator Card in the HPE SimpliVity 380. Once the data is persisted to local storage, hardware RAID protects it from disk loss.

HCI technology built to withstand data center disasters

Despite the careful planning and engineering that goes into resilient systems, hyperconverged infrastructure (HCI) nodes do fail. To protect against this eventuality, every VM and backup within an HPE SimpliVity cluster has two complete copies stored on two nodes within the cluster. As the illustration shows, HPE SimpliVity mirrors every write from the primary node to the secondary node immediately upon accepting the write from the VM. This mirroring occurs inline, before deduplication. All data processing then occurs independently on each node, and the write is acknowledged to the VM only after both nodes have successfully persisted it.
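The acknowledgment rule described above can be illustrated with a small toy model. This is only a sketch of the idea, not HPE SimpliVity code: the `Node` class, the `mirrored_write` function, and the node names are hypothetical, and the model says nothing about how the product handles writes while one copy is unavailable. Here the toy simply withholds the acknowledgment.

```python
class Node:
    """Hypothetical stand-in for one HCI node's local storage."""

    def __init__(self, name):
        self.name = name
        self.healthy = True
        self.blocks = {}  # offset -> data persisted on this node

    def persist(self, offset, data):
        """Store a block; return True only if it was actually persisted."""
        if not self.healthy:
            return False
        self.blocks[offset] = data
        return True


def mirrored_write(primary, secondary, offset, data):
    """Send the write to both nodes; acknowledge only if both persisted it."""
    results = [node.persist(offset, data) for node in (primary, secondary)]
    return all(results)


primary, secondary = Node("node-a"), Node("node-b")

# Healthy path: both nodes persist the block, so the write is acknowledged.
acked = mirrored_write(primary, secondary, 0, b"hello")

# Toy failure path: the secondary cannot persist, so no acknowledgment.
secondary.healthy = False
acked_degraded = mirrored_write(primary, secondary, 1, b"world")
```

After the first call, both `primary.blocks` and `secondary.blocks` hold the same data at offset 0, which is the property that lets either node serve the VM's storage on its own.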

If the primary storage node for a VM fails, all reads and writes simply fail over to the secondary node for the VM’s storage. If the VM itself was not affected by the failure (for example, because it was running on a compute node), the failover occurs transparently and the applications running inside the VM will not notice. If the VM failed along with the storage, the hypervisor restarts the VM on a healthy node, provided this is properly configured within vSphere. In most cases, an HPE SimpliVity subsystem on a node (the OmniStack Virtual Controller or Accelerator Card) can fail without affecting the ability to run VMs on that node, in which case the applications running inside the VMs experience no downtime.
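The cases in the paragraph above can be summarized as a small decision function. This is a hedged sketch of the reasoning only: `failover_outcome`, its parameters, and the returned strings are hypothetical names chosen for illustration, and the restart case assumes the hypervisor has been configured to restart VMs on a healthy node.

```python
def failover_outcome(vm_host, primary, secondary, failed):
    """Classify what happens to one VM when the nodes in `failed` go down.

    vm_host   -- node where the VM is running (may be a compute-only node)
    primary   -- node holding the primary copy of the VM's storage
    secondary -- node holding the secondary copy
    failed    -- set of node names that have failed
    """
    if primary in failed and secondary in failed:
        return "data unavailable"
    # Storage is served by whichever copy is still reachable.
    storage = secondary if primary in failed else primary
    if vm_host in failed:
        # The VM went down with its host; assumes hypervisor restart is configured.
        return f"restart VM on a healthy node, storage served by {storage}"
    if primary in failed:
        # The VM keeps running; its I/O is redirected to the secondary copy.
        return f"transparent failover, storage served by {storage}"
    return "no failover needed"


# VM runs on a compute node, and its primary storage node fails.
print(failover_outcome("compute-1", "node-a", "node-b", {"node-a"}))

# VM runs on the same node that holds its primary storage, and that node fails.
print(failover_outcome("node-a", "node-a", "node-b", {"node-a"}))
```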

Putting resiliency to the test

All of this data protection is built into the core functionality of the HPE SimpliVity Data Virtualization Platform and requires no configuration or additional licensing on the part of customers. It was designed from the beginning to be highly tolerant of failures. Prospects performing a proof of concept are often encouraged to put a full load on all nodes, then pull one disk in each node at the same time. It does not matter which disk, because the use of RAID means that the storage in each node tolerates a disk failure.

Customers can then fail an entire node while the disks are still removed. VMs on that node will go down, but, assuming the hypervisor is properly configured, they will restart on another node with full access to all their data. This is possible because the full storage for the VM is also stored on a second node, and the hypervisor management can automatically fail the VM over to a healthy node. This combination minimizes the outage experienced when a node fails.
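The proof-of-concept sequence above can be walked through with a toy availability model. This is a sketch under stated assumptions, not a description of the product's internals: the `StorageNode` class, the disk count, and a RAID tolerance of one failed disk per node are all illustrative values that the article does not specify.

```python
from dataclasses import dataclass


@dataclass
class StorageNode:
    name: str
    disks: int = 6           # assumed disk count, illustrative only
    failed_disks: int = 0
    online: bool = True
    raid_tolerance: int = 1  # assumed: the RAID set survives one disk loss

    def serves_data(self):
        """A node serves data if it is up and its RAID set is within tolerance."""
        return self.online and self.failed_disks <= self.raid_tolerance


def vm_data_available(copies):
    """A VM's data is reachable if any node holding a copy still serves data."""
    return any(node.serves_data() for node in copies)


nodes = [StorageNode("node-a"), StorageNode("node-b"), StorageNode("node-c")]
vm_copies = nodes[:2]  # the VM's two copies live on node-a and node-b

# Step 1: pull one disk in every node at the same time.
for node in nodes:
    node.failed_disks += 1
after_disk_pull = vm_data_available(vm_copies)

# Step 2: fail an entire node while the disks are still removed.
nodes[0].online = False
after_node_failure = vm_data_available(vm_copies)

print(after_disk_pull, after_node_failure)
```

In this model the VM's data stays reachable after both steps, because the surviving copy sits on a node whose RAID set is still within its assumed tolerance.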

The HPE SimpliVity hyperconverged platform even has built-in features that can resurrect lost data when something truly disastrous occurs. More on that in a future post. Until then, the Data Protection on HPE SimpliVity Platforms white paper provides a deeper view of the technology.

Data Protection with HPE SimpliVity blog series

- Part 1: Hyperconvergence designed to protect data

- Part 2: The best disaster is one that never happens

- Part 3: When it comes to data recovery, time is critical

Guest blogger Rakesh Vugranam, HPE SimpliVity Product Manager, Software-Defined and Cloud Group
