Defining hyperconverged infrastructure Part 3: The importance of data locality

The introduction of hyperconverged infrastructure caused a major shift in the way IT operates. Reducing technology silos, capital expenditures, and time dedicated to the IT equipment refresh cycle are all common outcomes seen by HPE SimpliVity 380 customers. These customers also see improved data protection and operational efficiency in the IT environment. While the simplification of the infrastructure creates many obvious advantages, bringing the data closer to the virtual machines (VMs) can create a performance advantage. How data locality, or the proximity of the VM storage to the VM compute, is managed can make a big difference to the performance of business-critical applications.

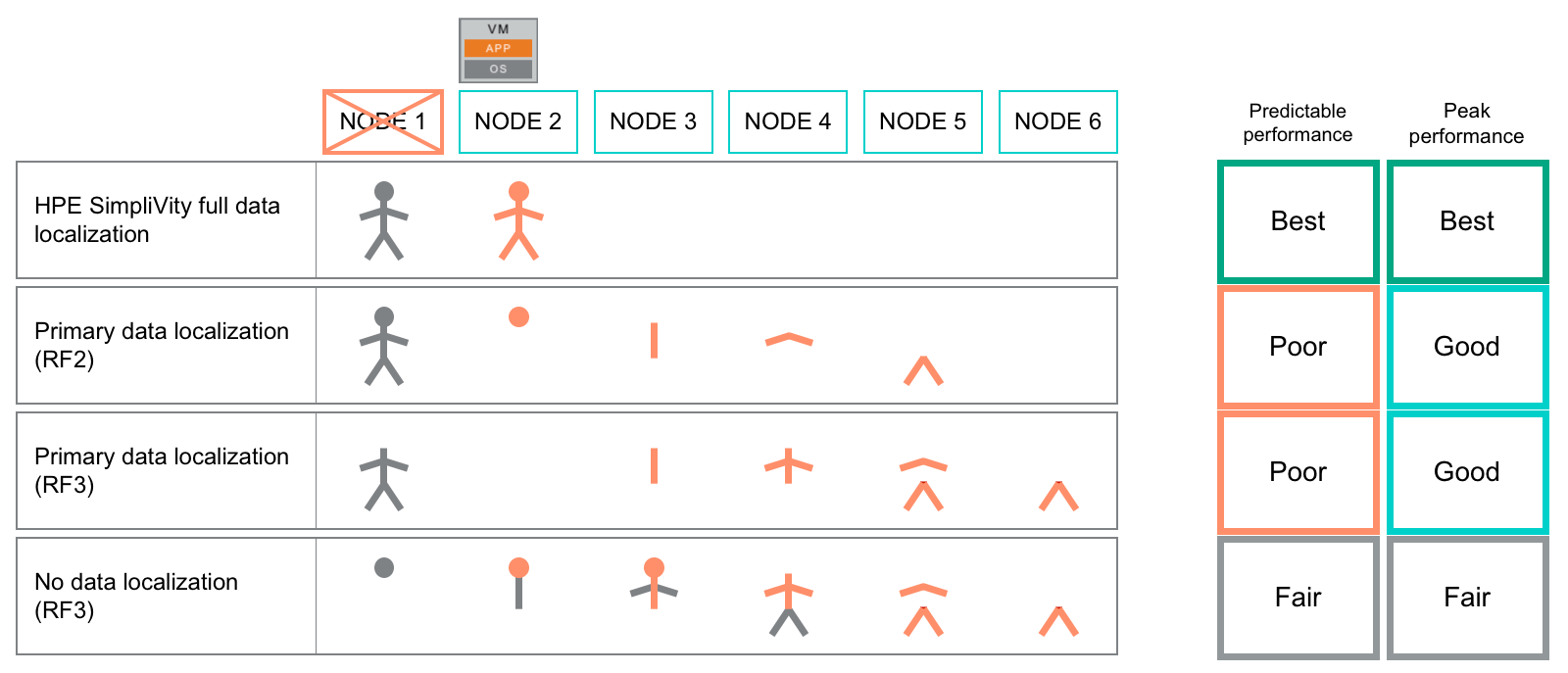

Data locality is an architectural challenge unique to hyperconverged infrastructures, because current hypervisor resource balancing features don’t have full awareness of how data is laid out across the nodes. Locating the data and compute resources of a VM on the same host can be a distinct advantage since it will minimize latency, thus providing peak performance of the storage layer. There are several ways hyperconverged infrastructure vendors are choosing to address this challenge.

Some choose to avoid the problem altogether and instead stripe all data across all nodes. This has the advantage of distributing the data processing across all nodes when serving data for every VM. It also provides consistent performance no matter where the compute resources for the VM exist, because some percentage of the data will always come across the network, depending on the number of nodes in the cluster and the number of blocks being accessed. The downside is that this performance is bound by the performance of the network, and every VM is susceptible to cluster-wide performance or availability impacts caused by issues on a subset of the cluster nodes.
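To make the dependence on cluster size concrete, here is a minimal sketch. It assumes blocks are striped uniformly across all nodes with a single copy of each block, which is a simplification for illustration and not a description of any particular vendor's layout. The function name `remote_fraction` is hypothetical.

```python
def remote_fraction(num_nodes: int) -> float:
    """Expected share of a VM's blocks held on other nodes.

    Assumption: blocks are spread uniformly over all nodes and each block
    has one copy, so the local node holds 1/num_nodes of the data.
    """
    if num_nodes < 1:
        raise ValueError("num_nodes must be at least 1")
    return (num_nodes - 1) / num_nodes


for n in (2, 4, 8, 16):
    print(f"{n:>2} nodes: {remote_fraction(n):.0%} of blocks are remote")
```

Under these assumptions the remote share grows with every node added, which is why the network becomes the limiting factor for this design.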

Others have chosen to allow VMware Distributed Resource Scheduler (DRS) to do its thing and fix the data locality problem under the covers. While this will certainly optimize the CPU and memory resources of the applications in the VMs, it does so with complete ignorance of the location of the data. This leaves the vendor to choose between:

- Leaving the data and compute where they are and simply accessing data across the network. This will impact storage performance since all data will be accessed across the network.

- Migrating the data to “follow the VM.” This is no small task since all data must be read from the original node, copied across the network, and written to the new node.

Either choice can cause unpredictable performance impacts. Both consume extra CPU, network I/O, and storage I/O on both nodes, particularly the “follow the VM” option, given all the overhead incurred with every VM migration. These resources aren’t available to the business applications during this process, and the storage subsystem of the hyperconverged node could become a noisy neighbor.
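A rough back-of-the-envelope sketch of the “follow the VM” cost is shown below. The function `migration_cost` and the example figures (500 GB of VM data, a 10 Gb/s link) are hypothetical, and the estimate ignores deduplication, compression, protocol overhead, and competing traffic, so it is only a lower bound on transfer time.

```python
def migration_cost(vm_data_gb: float, network_gbps: float) -> dict:
    """Estimate the I/O generated by moving a VM's data to another node.

    Assumption: every byte is read once on the source node, sent once over
    the network, and written once on the destination node.
    """
    if vm_data_gb < 0 or network_gbps <= 0:
        raise ValueError("size must be non-negative and bandwidth positive")
    return {
        "source_read_gb": vm_data_gb,
        "network_transfer_gb": vm_data_gb,
        "destination_write_gb": vm_data_gb,
        # 1 GB = 8 Gb, so seconds = gigabits / (gigabits per second)
        "min_transfer_seconds": vm_data_gb * 8 / network_gbps,
    }


cost = migration_cost(vm_data_gb=500, network_gbps=10)
print(cost)
```

Even in this idealized case the same data is touched three times (read, transfer, write), and that load lands on nodes that are also serving other VMs.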

On the other hand, the HPE SimpliVity Intelligent Workload Optimizer lets customers use the power of DRS to maximize CPU and memory resources while giving DRS full awareness of the location of the data within the cluster. Within an HPE SimpliVity 380 infrastructure, the data for every VM is stored as a complete unit on two separate nodes. To maintain data locality, DRS groups and rules are created to identify the relationship between the VM and the placement of data across the hosts. These rules are defined as “suggested affinity” rules, so DRS will keep VMs on these hosts unless CPU and memory demands cannot be properly satisfied. The two nodes that will house the data are selected intelligently at the moment the VM is created, based on current capacity and a history of the I/O performance on each node. Once this placement is determined, the proper DRS groups are updated. A regularly scheduled task ensures that these rules and group memberships are maintained in case of user-initiated changes.
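The placement-then-rule flow described above can be sketched as a toy model. This is not HPE's actual selection algorithm or the vSphere API: the `Node` fields, the ranking heuristic (lowest historical latency first, then most free capacity), and the rule dictionary are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_capacity_gb: float
    avg_io_latency_ms: float  # hypothetical stand-in for "I/O performance history"


def pick_two_nodes(nodes: list[Node], vm_size_gb: float) -> list[Node]:
    """Choose two nodes to hold the VM's data (assumed heuristic)."""
    candidates = [n for n in nodes if n.free_capacity_gb >= vm_size_gb]
    if len(candidates) < 2:
        raise ValueError("need at least two nodes with enough free capacity")
    # Prefer lower historical latency; break ties with more free capacity.
    ranked = sorted(candidates, key=lambda n: (n.avg_io_latency_ms, -n.free_capacity_gb))
    return ranked[:2]


def soft_affinity_rule(vm_name: str, hosts: list[Node]) -> dict:
    """Describe a non-mandatory VM-to-host affinity rule as plain data."""
    return {
        "name": f"{vm_name}-data-locality",
        "vm_group": [vm_name],
        "host_group": [h.name for h in hosts],
        "mandatory": False,  # "suggested": DRS may override under CPU/memory pressure
    }


cluster = [
    Node("node-a", 1000, 2.0),
    Node("node-b", 200, 1.0),   # fast, but too full for a 500 GB VM
    Node("node-c", 800, 1.5),
    Node("node-d", 900, 3.0),
]
chosen = pick_two_nodes(cluster, vm_size_gb=500)
print(soft_affinity_rule("vm-01", chosen))
```

Modeling the rule as non-mandatory mirrors the “suggested affinity” behavior: the scheduler prefers the two data-holding hosts but can still move the VM elsewhere when compute demands require it.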

This approach allows DRS to work as it was intended, while minimizing the separation of VM compute and storage resources. By providing VMware’s existing tools this level of awareness, HPE SimpliVity not only integrates better with the vSphere environment with no additional administrative complexity, but also allows DRS to load balance VMs to maximize storage performance along with CPU and memory performance. By choosing a lower impact approach to data locality, HPE SimpliVity powered by Intel® minimizes the impact to the virtual machine resources in the customers’ cluster.

Curious about the kind of performance levels the HPE SimpliVity 380 can achieve? Download ESG Technical Validation: All-Flash HPE SimpliVity 380 delivers simplicity, performance, and resiliency.

Make sure to read the other articles in this series:

- Defining hyperconverged infrastructure Part 1: The anatomy of the HPE SimpliVity 380

- Defining hyperconverged infrastructure Part 2: Lifecycle of a write I/O on HPE SimpliVity 380

- Defining hyperconverged infrastructure Part 4: HPE SimpliVity data storage built for resiliency

Brian Knudtson

Sr. Technical Marketing Manager

Hewlett Packard Enterprise

brianknudtson

A former administrator, implementation engineer, and solutions architect focusing on virtual infrastructures, I now find myself learning about all aspects of enterprise infrastructure and communicating that to coworkers, prospects, customers, influencers, and analysts. Particular focus on HPE SimpliVity today.
