How VM data is managed within an HPE SimpliVity cluster, Part 2: Automatic management

This blog is the second in a 5-part series on VM data storage and management within HPE SimpliVity powered by Intel®.

A customer recently asked how virtual machine data is stored within HPE SimpliVity hyperconverged infrastructure, and how that data affects the overall cluster capacity. This 5-part blog series dives into HPE SimpliVity architecture, explaining how VM data is stored and managed within a cluster.

In the first blog of this series, I explained how data that makes up the contents of a virtual machine is stored within an HPE SimpliVity Cluster.

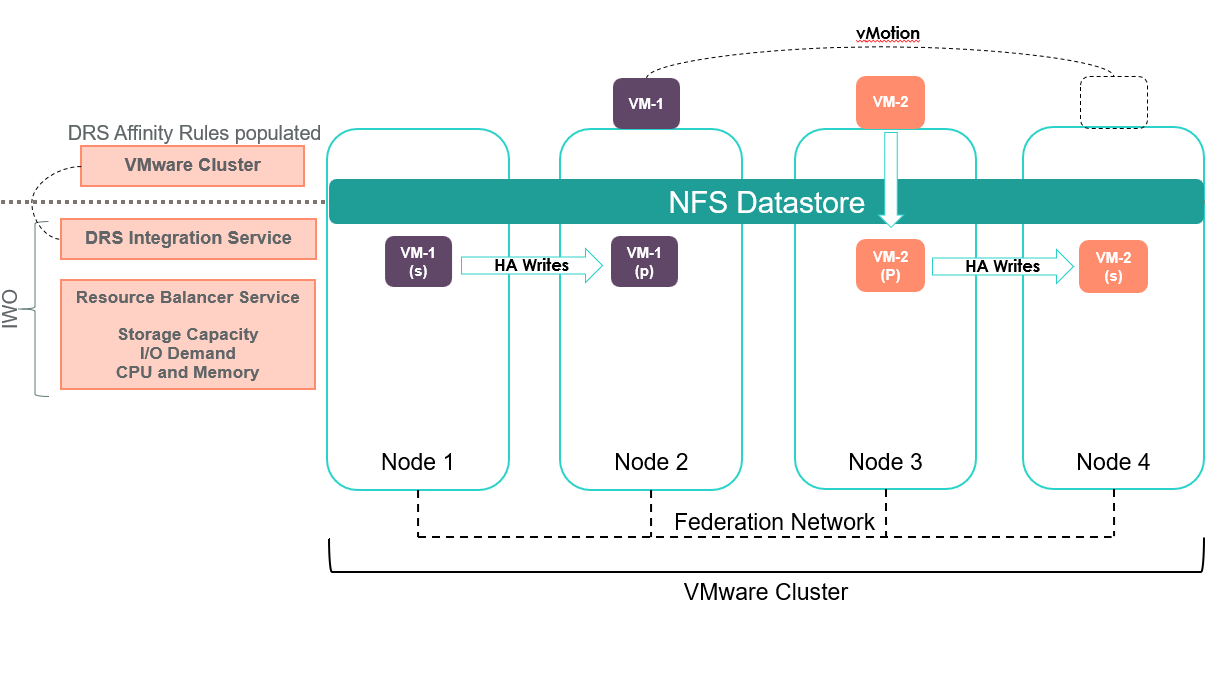

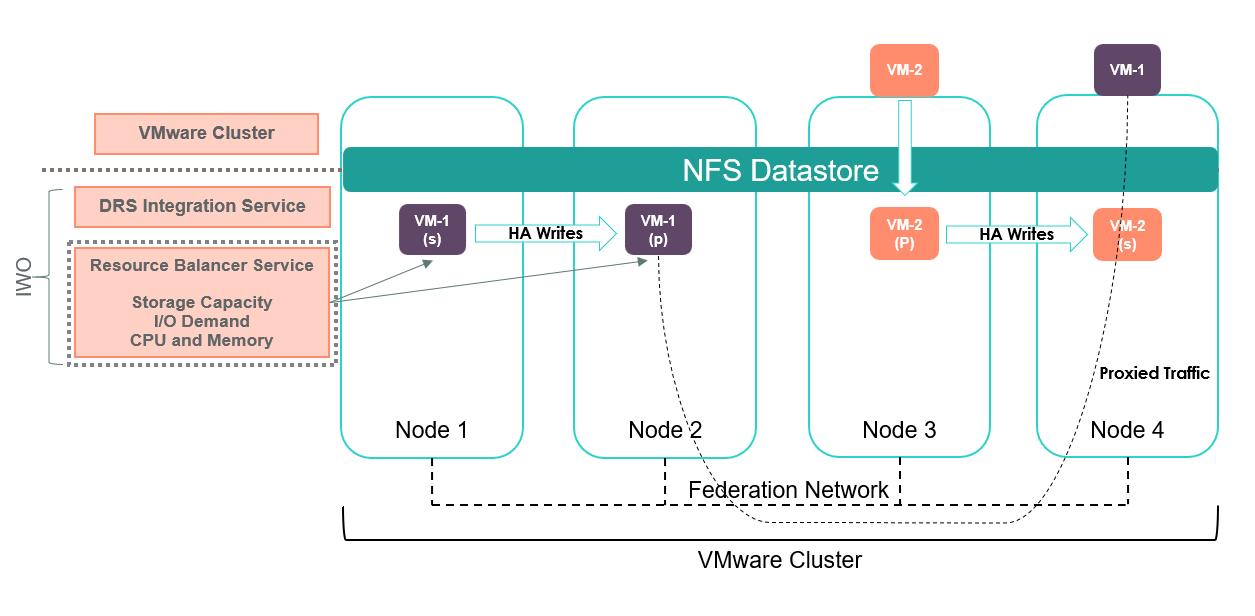

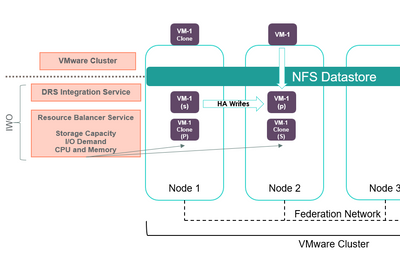

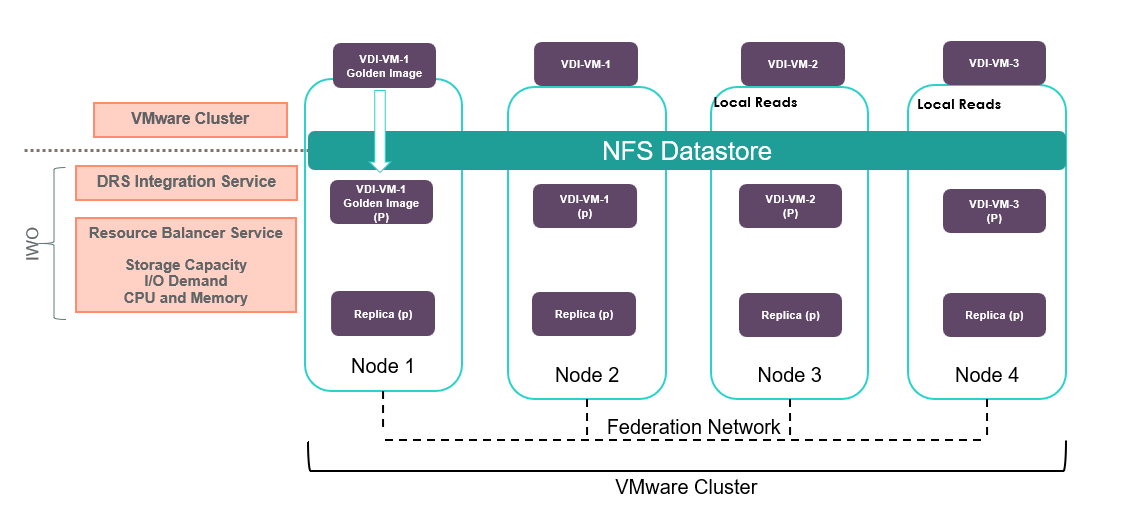

In this article, I describe in detail how the HPE SimpliVity DVP (Data Virtualization Platform) automatically manages this data, and how administrators can manage it as the environment grows. In particular, I will focus on the Intelligent Workload Optimizer (IWO), a core feature of the HPE SimpliVity DVP. IWO is responsible for the automatic management of data containers, intelligently placing VMs across HPE SimpliVity nodes.

By bringing awareness of the location of the data to the VMware Distributed Resource Scheduler (DRS), IWO can boost the performance of the infrastructure’s storage, compute and networking resources.

A closer look at IWO

IWO comprises two sub-components, the Resource Balancer Service and the VMware DRS integration service. IWO's aim is twofold:

- Ensure all resources of a cluster are properly utilized.

This process is handled by the Resource Balancer Service, both at initial virtual machine creation and proactively throughout the life cycle of the VM and its associated backups. This is all achieved without end-user intervention.

- Enforce data locality.

This process is handled by the VMware DRS integration service, which pins a virtual machine (through VMware DRS affinity rules) to nodes that contain a copy of its data. Again, this is all achieved without end-user intervention.

Note: Resource balancing is an always-on service and does not rely on VMware DRS being active and enabled on a VMware cluster. The service works independently of the DRS integration service.

Ensuring all resources of a cluster are properly utilized – VM & Data Placement scenarios

The primary goal of IWO, through its underlying Resource Balancer Service, is to ensure that no single node within an HPE SimpliVity cluster is over-burdened in terms of CPU, memory, storage capacity, or I/O.

The objective is not to ensure that each node experiences the same utilization across all resources or dimensions (that may be impossible), but instead to ensure that each node has sufficient headroom to operate. In other words, each node must have enough physical storage capacity to handle expected future demand, and no single node should handle a much larger number of I/Os than its cluster peers.
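The headroom objective can be illustrated with a small sketch. The node fields, the 80% capacity ceiling, and the 1.5x I/O skew factor are hypothetical values chosen for illustration; the actual thresholds used by the Resource Balancer Service are not described in this post.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Node:
    name: str
    capacity_used_pct: float  # physical storage consumed, 0-100
    iops: float               # recent I/O demand served by this node


# Hypothetical limits, for illustration only.
MAX_CAPACITY_PCT = 80.0
MAX_IOPS_SKEW = 1.5  # a node may serve at most 1.5x the cluster average


def nodes_lacking_headroom(nodes):
    """Return the names of nodes that are over-burdened on any dimension."""
    avg_iops = mean(n.iops for n in nodes)
    flagged = []
    for n in nodes:
        too_full = n.capacity_used_pct > MAX_CAPACITY_PCT
        too_busy = avg_iops > 0 and n.iops > MAX_IOPS_SKEW * avg_iops
        if too_full or too_busy:
            flagged.append(n.name)
    return flagged


cluster = [
    Node("node1", 55.0, 1000),
    Node("node2", 85.0, 1200),  # short on storage capacity
    Node("node3", 40.0, 4000),  # far busier than its peers
    Node("node4", 60.0, 900),
]
print(nodes_lacking_headroom(cluster))  # ['node2', 'node3']
```

Note that the check does not try to equalize utilization: node1 and node4 differ on both dimensions, yet neither is flagged because both still have room to operate.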

The Resource Balancer Service uses different optimization criteria for different scenarios: initial VM placement on a new cluster (e.g., migration of existing VMs from a legacy system), best placement of a newly created VM within an existing system, Rapid Clone of an existing VM, and VDI-specific optimizations for handling linked clones.

Let’s explore the different scenarios.

Scenario #1: New VM Creation – VMware DRS enabled and set to Fully Automated

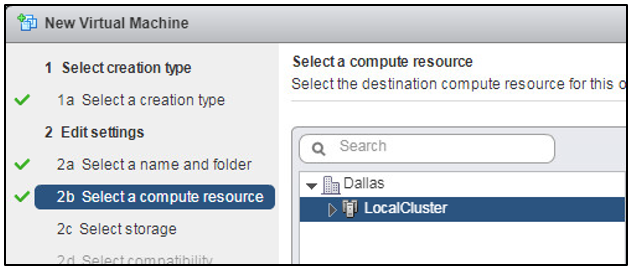

When creating or storage vMotioning a virtual machine to an HPE SimpliVity host or datastore, vSphere DRS will automatically place the VM on a node with relatively low CPU & memory utilization according to its own algorithms (default DRS algorithms). No manual selection of a node is necessary.

In the diagram below, VMware DRS has chosen Nodes 3 and 4 to house VM-1 and VM-2. Independently, for each VM, the DVP has chosen a pair of least-utilized nodes within the cluster, according to storage capacity and I/O demand, on which to place that VM's data containers.

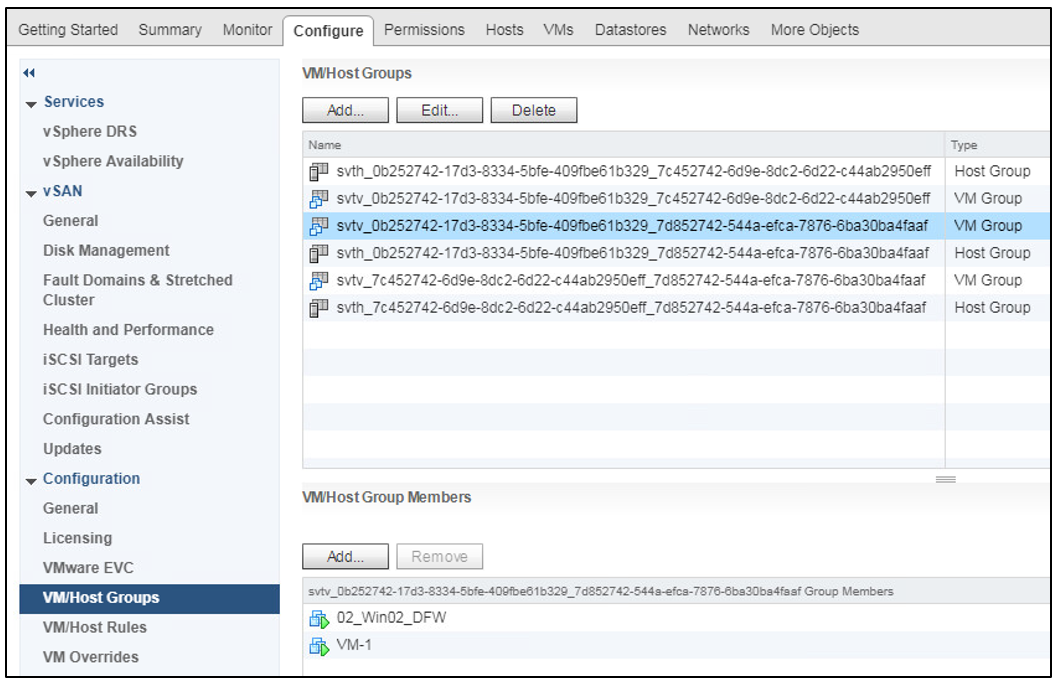

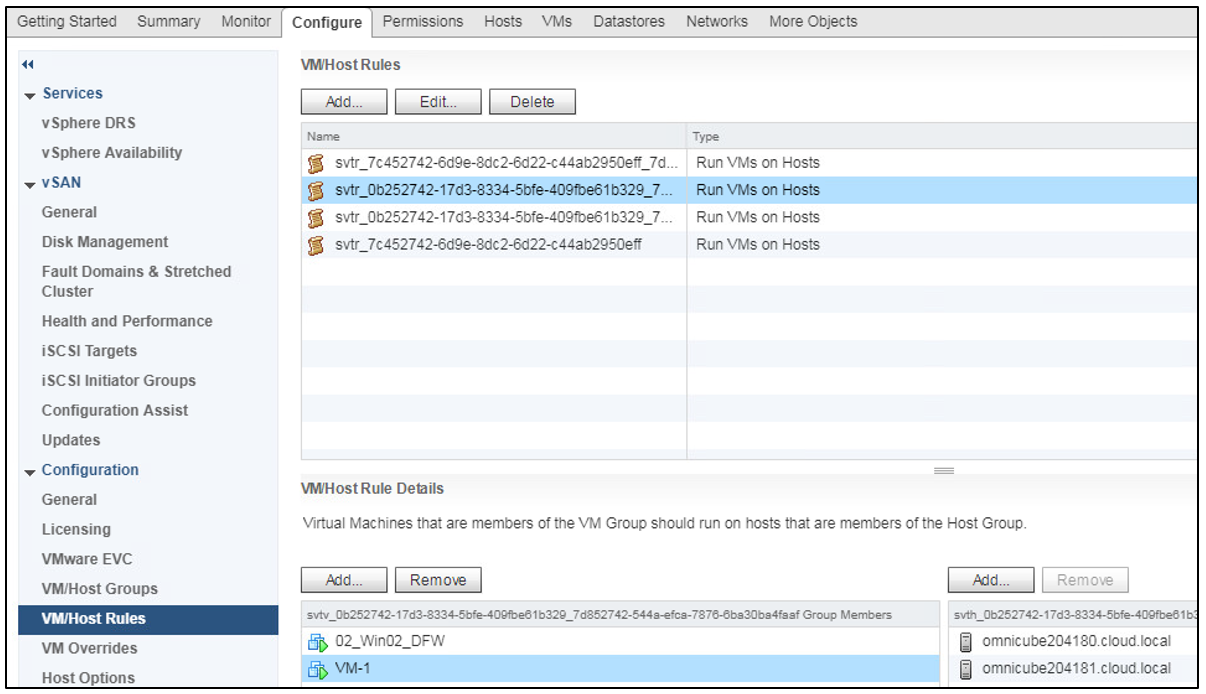
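The storage-side choice described above can be sketched as a simple ranking. This is only an illustration: the dictionary fields and the equal weighting of capacity and I/O load are assumptions, not the DVP's actual scoring.

```python
def pick_data_nodes(nodes, copies=2):
    """Pick the nodes for a VM's data containers, least utilized first.

    The score (capacity plus I/O load, equally weighted) is a hypothetical
    stand-in for whatever criteria the DVP really applies.
    """
    def score(node):
        return node["capacity_used_pct"] + node["io_load_pct"]

    ranked = sorted(nodes, key=score)
    return [node["name"] for node in ranked[:copies]]


cluster = [
    {"name": "node1", "capacity_used_pct": 30, "io_load_pct": 20},
    {"name": "node2", "capacity_used_pct": 35, "io_load_pct": 25},
    {"name": "node3", "capacity_used_pct": 70, "io_load_pct": 60},
    {"name": "node4", "capacity_used_pct": 65, "io_load_pct": 50},
]
print(pick_data_nodes(cluster))  # ['node1', 'node2']
```

The point of the sketch is that this decision ignores where DRS placed the VM for compute, which is why a VM can initially run on a node that holds none of its data.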

Through the DRS integration service, IWO will pin VM-1 to Nodes 1 & 2 and VM-2 to Nodes 3 & 4 by automatically creating DRS rules and populating them into vCenter. First, let’s look at how DRS rules are created.

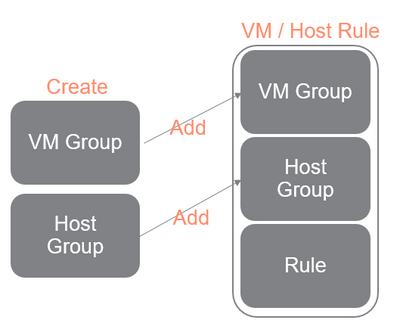

Each DRS rule consists of the following components:

- A Virtual Machine DRS Group.

- A Host DRS Group.

- A Virtual Machines to Hosts rule. This is one of “must run on these hosts,” “should run on these hosts,” “must not run on these hosts,” or “should not run on these hosts.” HPE SimpliVity uses the "should run on these hosts" rule.

In this example, it is not optimal for VM-1 to be running on Node 4 as all of the I/O for the VM must be forwarded to either Node 1 or Node 2 in order to be processed. If the VM can be moved automatically to those nodes, then one hop is eliminated from the I/O path.

First, a Virtual Machine DRS Group is created to hold the virtual machines that will run optimally on the same two nodes. In this case, the name of the Virtual Machine DRS Group will be SVTV_<hostID2>_<hostID3>.

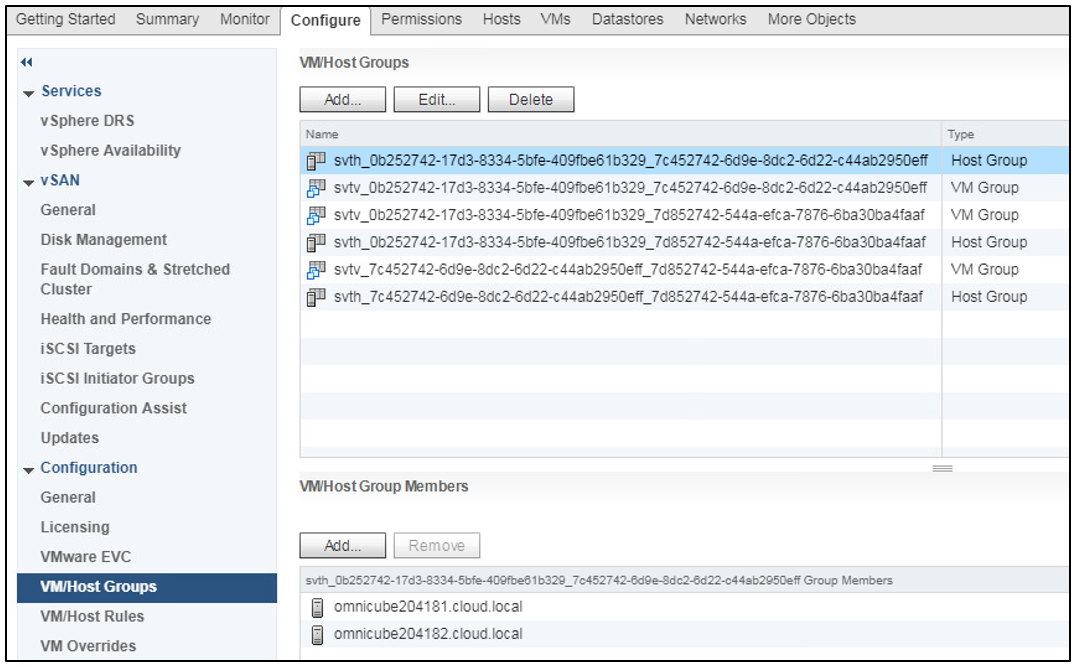

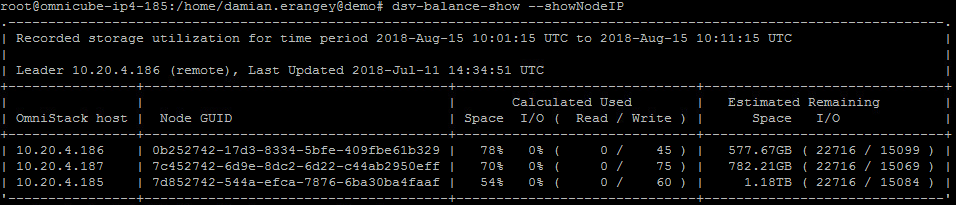

Below, you can see VM-1 assigned to this VM Group. VM-1 will share this group with other virtual machines that have their data located on the same nodes. Note that the Host ID is a GUID, not the IP address or hostname of the node. While this may appear confusing to the end user, it is the actual GUID of the HPE SimpliVity node. Mapping the GUID to the hostname or IP address of the node is not possible through the GUI. If you wish to identify the node IP, you can use the command "dsv-balance-show --showNodeIP".

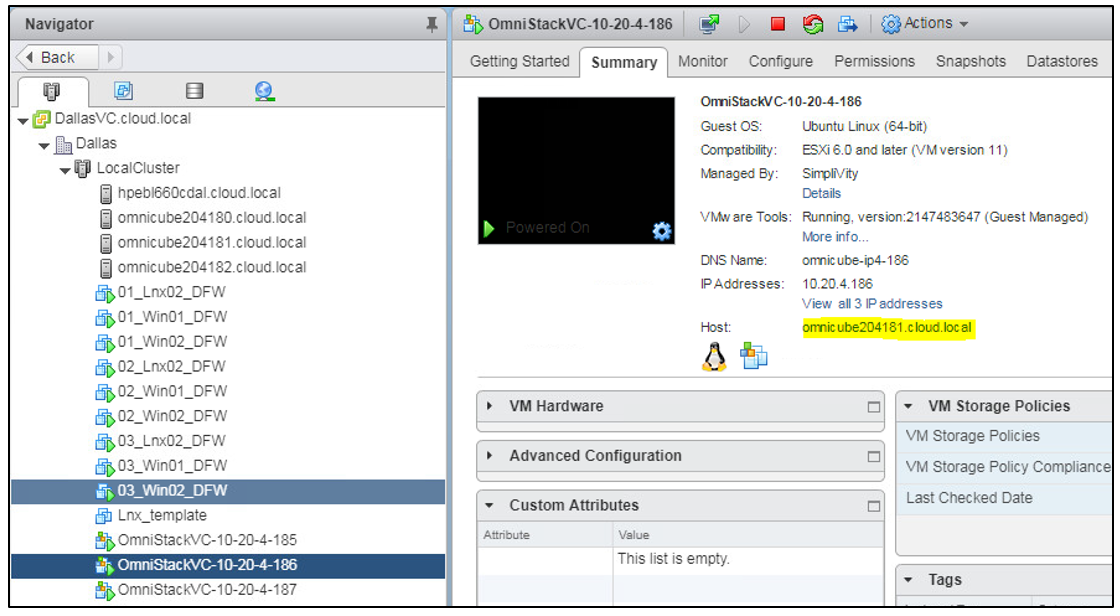

Looking at the VM Group for VM-1, you can deduce that the data is stored on nodes ending in "aaf" and "329," which in turn equate to OVC .185 and .186 on ESXi nodes ending in 81 and 82 respectively, as shown below.

Again, all of this is handled automatically for you. However, for this blog to make sense, it is important for me to let you know where these values come from.

You can also deduce that the Host DRS Groups, as they are created, are named SVTH_<hostID2>_<hostID3>. A host group will only ever contain two nodes, because a virtual machine's data is stored on only two nodes. Several host groups are created depending on how many hosts there are in the cluster: one host group for each pair of nodes. Here I have highlighted the host group for Hosts 81 and 82, to which VM-1 will be tied.
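To see how quickly the number of host groups grows, here is a minimal sketch that enumerates one group per pair of hosts. The host IDs are placeholders (real IDs are GUIDs), and the ordering of the two IDs within a name is an assumption.

```python
from itertools import combinations


def host_group_names(host_ids):
    """Return one SVTH_ group name per unordered pair of hosts."""
    return [f"SVTH_{a}_{b}" for a, b in combinations(sorted(host_ids), 2)]


hosts = ["host-a", "host-b", "host-c", "host-d"]  # placeholder IDs
groups = host_group_names(hosts)
print(len(groups))  # 6
print(groups[0])    # SVTH_host-a_host-b
```

A cluster of n hosts yields n(n-1)/2 host groups, so four hosts give six groups and eight hosts give 28.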

Lastly, a “Virtual Machines to Hosts” rule is made. This HPE SimpliVity rule consists of an HPE SimpliVity host group and an HPE SimpliVity VM group, and it directs DRS that VM-1 “should run on” Hosts 81 and 82.

NOTE: The DRS affinity rules are should rules and not must rules.

If set to Fully Automated, VMware DRS will vMotion any VMs violating these rules to one of the nodes holding their data containers, thus aligning each VM with its data. In this case, VM-1 was violating the affinity rules by being placed on Node 4 and is automatically vMotioned to Node 2 via DRS.
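The effect of a "should run on" rule can be sketched as follows. The class, the function, and the idea of picking the less-loaded of the two hosts are illustrative assumptions; DRS applies its own algorithms when choosing the target.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ShouldRunOnRule:
    vm_group: frozenset    # VMs in the SVTV_ group
    host_group: frozenset  # the two hosts in the SVTH_ group


def planned_moves(rules, current_host, host_load):
    """Return {vm: target_host} for VMs running outside their host group."""
    moves = {}
    for rule in rules:
        for vm in sorted(rule.vm_group):
            if current_host[vm] not in rule.host_group:
                # Hypothetical tie-break: prefer the less-loaded data node.
                moves[vm] = min(rule.host_group, key=lambda h: host_load[h])
    return moves


rules = [
    ShouldRunOnRule(frozenset({"VM-1"}), frozenset({"node1", "node2"})),
    ShouldRunOnRule(frozenset({"VM-2"}), frozenset({"node3", "node4"})),
]
current_host = {"VM-1": "node4", "VM-2": "node3"}
host_load = {"node1": 0.6, "node2": 0.3, "node3": 0.4, "node4": 0.5}
print(planned_moves(rules, current_host, host_load))  # {'VM-1': 'node2'}
```

VM-2 already runs on a node that holds its data, so only VM-1 is moved. Because these are "should" rules rather than "must" rules, DRS remains free to run a VM elsewhere when it cannot honor the preference.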

Scenario #2: New VM Creation – VMware DRS Disabled

As stated previously, if VMware DRS is not enabled, the Resource Balancer Service continues to function and initial placement decisions continue to operate. However, DRS affinity rules will not be populated into vCenter.

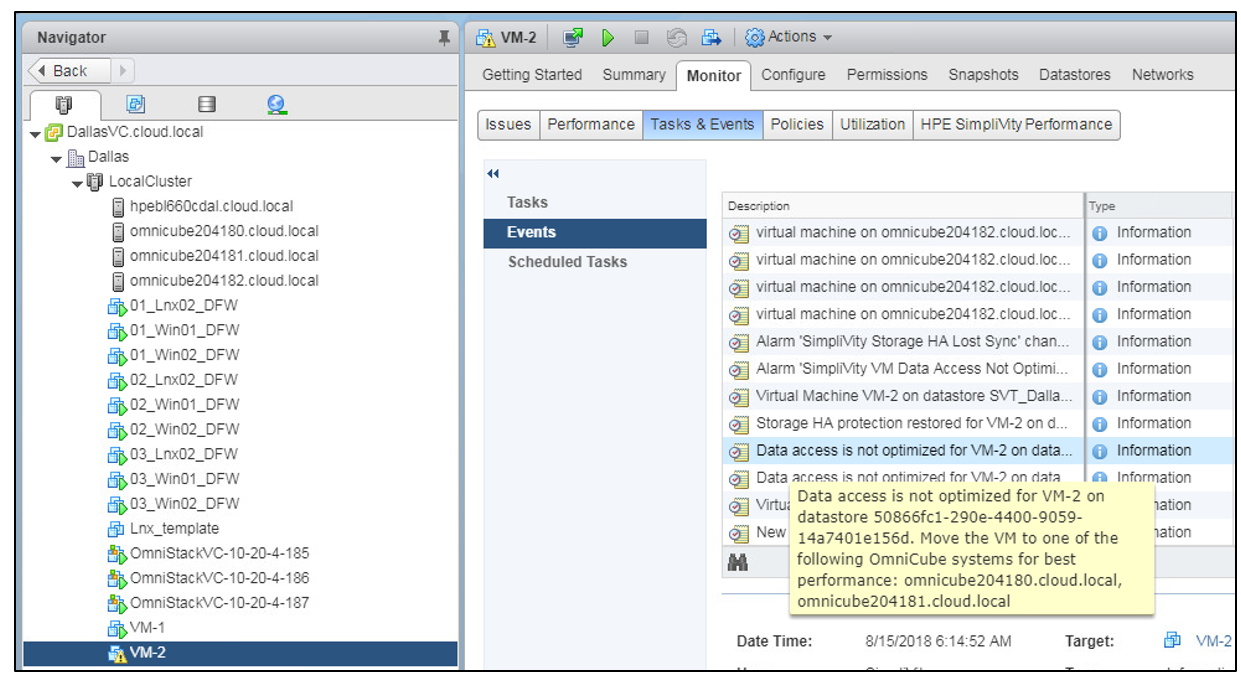

In this scenario, when virtual machines are provisioned, they may reside on a node where there is no data locality. To help users remediate this issue, an HPE SimpliVity alert "VM Data Access Not Optimized" will be generated at the VM object layer within vCenter.

For remediation, the HPE SimpliVity platform will generate an event through vCenter Tasks and Events, directing the user to nodes that contain a copy of the virtual machine data. In the below diagram, I have highlighted the "Data access is not optimized" event that directs the user to vMotion the VM to the outlined hosts.

Scenario #3: Rapid clone of an existing VM

I have shown how the Resource Balancer Service behaves in regard to new VM creation. The service takes a different approach for HPE SimpliVity clones and VMware clones of virtual machines (VMware clones can also be handled by HPE SimpliVity via the VAAI plugin for faster operation).

In this scenario, the Resource Balancer Service will leave cloned VM data on the same nodes as the parent because this achieves the best possible cluster-wide storage utilization & de-duplication ratios.

If I/O demand exceeds node capabilities, the DVP will live-migrate data containers in the background to less-loaded node(s).

NOTE: Live migration of a data container does not refer to VMware Storage vMotion. It refers to the active migration of a VM data container to another node.
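The clone behaviour described in this scenario can be sketched in two steps: keep the clone's data with its parent, then move a data container only if a node is overloaded. The function names, the I/O figures, and the single `io_limit` threshold are hypothetical; the post does not describe how the DVP measures node capability.

```python
def place_clone(parent_nodes):
    """A clone keeps its data on the parent's nodes to maximize deduplication."""
    return list(parent_nodes)


def rebalance(container_nodes, node_io, io_limit):
    """Move a data container off any node whose I/O demand exceeds io_limit."""
    placed = []
    for node in container_nodes:
        target = node
        if node_io[node] > io_limit:
            spare = [
                n for n in node_io
                if n not in container_nodes
                and n not in placed
                and node_io[n] <= io_limit
            ]
            if spare:  # otherwise leave the container where it is
                target = min(spare, key=node_io.get)
        placed.append(target)
    return placed


node_io = {"node1": 9000, "node2": 3000, "node3": 2000, "node4": 2500}
clone_nodes = place_clone(["node1", "node2"])
print(clone_nodes)                                     # ['node1', 'node2']
print(rebalance(clone_nodes, node_io, io_limit=8000))  # ['node3', 'node2']
```

Only the container on the overloaded node moves; the copy on node2 stays put, so the clone keeps sharing deduplicated data with its parent on at least one node.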

Scenario #4: VDI-specific optimizations for handling linked clones

The scope of VDI and VDI best practices is beyond this post; however, I did want to mention how the HPE SimpliVity platform handles this scenario. More information on this topic can be found at www.hpe.com/simplivity or in this technical whitepaper: HPE Reference Architecture for VMware Horizon on HPE SimpliVity 380 Gen10.

A single datastore per HPE SimpliVity node within a cluster is required to ensure even storage distribution across cluster members. This is less important in a two node HPE SimpliVity server configuration; however, following this best practice guideline will ensure a smooth transition to a three (or greater) node HPE SimpliVity environment, should the environment grow over time. This best practice has been proven to deliver better storage performance and is highly encouraged for management workloads. It should be noted that this is a requirement for desktop-supporting infrastructure. VDI environments typically clone a VM, or golden image, many times. These clones (replicas) essentially become read-only templates for new desktops.

As VDI desktops are deployed, linked clones are created on random hosts. Linked clones mostly read from the read-only templates and write locally, which causes proxying and adds extra load to the nodes that host the read-only templates.

To mitigate this uneven load distribution, the Resource Balancer Service automatically distributes replica images evenly across all nodes and aligns linked clones with their parents to ensure node-local access.
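A minimal sketch of the two steps, assuming a simple round-robin spread and, for brevity, a single node per replica (in practice each data container lives on two nodes). The function names and the round-robin policy are illustrative, not the service's documented algorithm.

```python
from itertools import cycle


def distribute_replicas(replicas, nodes):
    """Spread replica images across nodes in round-robin order."""
    return dict(zip(replicas, cycle(nodes)))


def align_clones(clone_parent, replica_node):
    """Place each linked clone on the node that holds its parent replica."""
    return {clone: replica_node[parent] for clone, parent in clone_parent.items()}


replica_node = distribute_replicas(["r1", "r2", "r3", "r4"], ["node1", "node2"])
print(replica_node)
# {'r1': 'node1', 'r2': 'node2', 'r3': 'node1', 'r4': 'node2'}

clone_node = align_clones({"d1": "r1", "d2": "r2", "d3": "r3"}, replica_node)
print(clone_node)
# {'d1': 'node1', 'd2': 'node2', 'd3': 'node1'}
```

With replicas spread evenly and each desktop placed next to its parent, reads from the template stay node-local instead of being proxied to whichever node happened to hold the golden image.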

More information about the HPE SimpliVity hyperconverged platform is available online:

- HPE SimpliVity Data Virtualization Platform provides a high level overview of the architecture

- You’ll find a detailed description of HPE SimpliVity features such as IWO in this technical whitepaper: HPE SimpliVity Hyperconverged Infrastructure for VMware vSphere

In the next post, I will dig a little deeper into the IWO mechanism to help you understand how you can manage the IWO service, if required.

Damian

Guest blogger Damian Erangey is a Senior Systems/Software Engineer for HPE Software-Defined and Cloud Group.

How VM Data Is Managed within an HPE SimpliVity Cluster

- Part 1: Data Creation and Storage

- Part 2: Automatic Management

- Part 3: Virtual Machine Provisioning Options

- Part 4: Automatic Capacity Management

- Part 5: Analyzing Resource Utilization
