07-25-2013 04:50 AM

HP P4300 SAN cluster seems slow. Am I expecting too much?

Hi,

We have 4x P4300 G2 nodes running SAN/iQ 10.0 connected to a two-node Hyper-V cluster, with P4300 1 & 3 at the 'HQ' site and P4300 2 & 4 at the 'HA' site. There are two volumes set up: a 1GB fully provisioned 'Witness' volume (RAID5, Network RAID10) for the Hyper-V cluster it's attached to, and a 5.47TB thinly provisioned volume (RAID5, Network RAID10) for the Hyper-V VHDs.

Each cluster node has 4x dedicated 1Gb NICs and the 10.0 DSM installed.

I have seen this thread, in which the last post shows that it's possible to saturate a 1Gb connection at 125MB/s:

In the StoreVirtual console I'm seeing a Queue Depth Total peaking at about 85, maximum IOPS of about 2k, and a Throughput Total maximum of around 150MB/s.

The DSM is obviously doing its job, as I'm seeing over 125MB/s, but theoretically I should be able to reach 500MB/s. Performance Monitor on the cluster server is showing a high Average Disk Queue Length, so it appears to be waiting on the disk.

Am I setting my expectations too high by hoping to get 250MB/s from four nodes?
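The figures in this post come from simple line-rate arithmetic. A minimal Python sketch, assuming 1Gb NICs, decimal units (1MB = 10^6 bytes) and no iSCSI/TCP overhead, so the results are theoretical ceilings rather than achievable numbers:

```python
# Line-rate arithmetic for the figures in the post.
# Assumptions: 1Gb/s NICs, decimal units (1 MB = 10**6 bytes), no protocol overhead.

BITS_PER_BYTE = 8


def line_rate_mb_per_s(gbit_per_s: float) -> float:
    """Convert a link speed in Gb/s into a theoretical ceiling in MB/s."""
    return gbit_per_s * 1000 / BITS_PER_BYTE


def aggregate_ceiling(nic_count: int, gbit_per_nic: float = 1.0) -> float:
    """Best case if every NIC ran flat out at the same time."""
    return nic_count * line_rate_mb_per_s(gbit_per_nic)


if __name__ == "__main__":
    per_nic = line_rate_mb_per_s(1.0)   # 125.0 MB/s for one 1Gb link
    ceiling = aggregate_ceiling(4)      # 500.0 MB/s for four 1Gb links
    for label, observed in (("observed peak", 150.0), ("hoped-for", 250.0)):
        print(f"{label}: {observed} MB/s = {observed / ceiling:.0%} of {ceiling} MB/s")
```

On these assumptions the observed ~150MB/s is about 30% of the four-NIC ceiling, and the hoped-for 250MB/s would be 50%.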

Thanks,

James.

07-25-2013 05:57 AM

You don't mention how you are testing this. File size and drive cluster size play a big role in determining throughput, as you may be limited by IOPS, which could cause the issue you are seeing. Beyond that, I'm not aware of any link aggregation method (DSM, LACP, ALB, whatever...) that is 100% effective, so 1Gb + 1Gb does not equal exactly 2Gb of throughput and might be as low as 1.5Gb, depending on what is going on.
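To illustrate the IOPS point: throughput is roughly IOPS multiplied by the average I/O size, so at a fixed IOPS ceiling, small I/Os cap throughput well below wire speed. A sketch using the ~2k IOPS and ~150MB/s peaks from the first post; it assumes those two peaks happened at the same moment, which the CMC graphs may not confirm:

```python
# Throughput is bounded by IOPS x average I/O size.
# Assumptions: decimal units (1 KB = 1000 bytes); the 2k IOPS and 150 MB/s
# peaks from the first post are treated as simultaneous.


def throughput_mb_s(iops: float, io_size_kb: float) -> float:
    """MB/s delivered at a given IOPS rate and average I/O size in KB."""
    return iops * io_size_kb / 1000


def implied_io_size_kb(iops: float, mb_per_s: float) -> float:
    """Average I/O size (KB) implied by an observed IOPS/throughput pair."""
    return mb_per_s * 1000 / iops


if __name__ == "__main__":
    # Same 2,000 IOPS ceiling, different average I/O sizes.
    for size_kb in (4, 8, 64):
        print(f"{size_kb:>3} KB I/Os at 2000 IOPS -> {throughput_mb_s(2000, size_kb):.0f} MB/s")
    print(f"implied average I/O size: {implied_io_size_kb(2000, 150):.0f} KB")
```

At 2k IOPS, 64KB I/Os would give 128MB/s while 4KB I/Os would give only 8MB/s, which is why the workload's I/O size matters as much as the number of NICs.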

If you are really curious, just make sure that you are getting balanced throughput on each NIC on your cluster servers and that each NIC is talking to each node. Beyond that, you can play with cluster sizes and watch IOPS and latency in the CMC to maximize whichever stat you care about most (for most people it's IOPS, while some care more about throughput).

07-25-2013 07:28 AM

I can answer your question on queue depth.

For P4300 G2 SAS drives, a queue depth of about 4 x 8 x 2 = 64 would be healthy.
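Multiplying out the rule of thumb (4 x 8 x 2 gives 64) and comparing it with the Queue Depth Total peak of about 85 from the first post. What the three factors stand for (storage nodes, drives per node, outstanding I/Os per drive) is an assumption; the reply does not spell them out:

```python
# Rule-of-thumb "healthy" queue depth for the cluster.
# Assumption: the factors are storage nodes, drives per node and a target
# number of outstanding I/Os per SAS drive; the reply does not name them.


def healthy_queue_depth(nodes: int, drives_per_node: int, per_drive: int) -> int:
    """Total outstanding I/Os the cluster should absorb comfortably."""
    return nodes * drives_per_node * per_drive


if __name__ == "__main__":
    target = healthy_queue_depth(nodes=4, drives_per_node=8, per_drive=2)
    observed_peak = 85  # Queue Depth Total peak reported in the first post
    verdict = "above" if observed_peak > target else "within"
    print(f"target {target}, observed peak {observed_peak}: {verdict} the rule of thumb")
```

Under these assumptions the reported peak sits somewhat above the rule-of-thumb figure, so brief queueing on the nodes would not be surprising.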

How do your applications seem to be responding?

Make sure you have the DSM set up properly and are seeing traffic on those NICs.

07-26-2013 02:48 AM

We've been struggling with laggy VMs for some time and thought the server might be at fault. I downloaded the Microsoft PAL tool, and it reports that the Average Disk Queue Length to the Hyper-V volume is too high. So there's no real testing as such; I'm just monitoring real-world performance.

I'm not expecting to see the full 2Gb wire speed to a single unit, because I understand there are overheads involved, but I have 4x 1Gb server NICs and 8x 1Gb NICs across the 4 nodes. I was expecting to see the 4 server NICs running flat out in Task Manager (1Gb to each node), but I've not seen them go above about 35% (they are balanced, though). The reason I expected this is that we have another vendor's SAN, and that frequently hits 90% on both server iSCSI NICs to the 2-NIC ALB SAN when it's under heavy load.
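Task Manager percentages can be converted to MB/s to compare them with the CMC figures. A sketch assuming 125MB/s per 1Gb NIC (decimal units, no overhead) and equal load on every NIC, since the post says they are balanced:

```python
# Convert Task Manager NIC utilization into MB/s.
# Assumptions: 1Gb/s NICs = 125 MB/s each (decimal units, no overhead),
# and all NICs carry the same load.

LINE_RATE_MB_S = 125.0


def nic_mb_s(utilization: float, line_rate: float = LINE_RATE_MB_S) -> float:
    """MB/s on one NIC at a utilization given as a fraction (0.35 = 35%)."""
    return utilization * line_rate


def total_mb_s(nic_count: int, utilization: float) -> float:
    """Combined MB/s across equally loaded NICs."""
    return nic_count * nic_mb_s(utilization)


if __name__ == "__main__":
    print(f"P4300 path, 4 NICs at 35%: {total_mb_s(4, 0.35):.0f} MB/s")
    print(f"other SAN, 2 NICs at 90%: {total_mb_s(2, 0.90):.0f} MB/s")
```

Four NICs at 35% works out to roughly 175MB/s, in the same range as the ~150MB/s peak shown in the CMC, while the other SAN's two NICs at 90% would be about 225MB/s.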

I've started to wonder if this could be due to the setup of our LUNs. We have one LUN spread over a pair of nodes, which is Network RAIDed with another pair of nodes. Should I chop this up into smaller LUNs, preferably ones that don't span two nodes?

07-26-2013 02:13 PM - edited 07-26-2013 02:14 PM

Queue depth is about I/O... have you set up your drives correctly for cluster size? I would watch the CMC to see what read and write I/O your LUNs and your nodes are seeing, as well as the queue depth for each. Maybe there is a performance issue, or maybe the configuration you have is causing excessive I/O requests to the SAN.

07-29-2013 02:58 AM

Hi,

This was initially installed by a 3rd party, and I had assumed they would have set everything up in line with best practices (you know what they say about assumptions, though). What would be the best way of checking?

I made sure that the LUNs presented to the server were formatted with 512-byte sectors, if that's what you mean?

07-29-2013 06:19 AM

So if we have a 4-node cluster, we should create at least 4 volumes, so each node can act as the gateway for a volume.

Ideally, create 2 or 3 times as many volumes as nodes, so each node acts as the gateway for 2-3 volumes.

This helps distribute the load from one or a few volumes across many volumes, which are handled by many nodes.
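A toy illustration of this suggestion: with twice as many volumes as nodes, each node fronts two volumes. The round-robin assignment and the volume/node names are hypothetical; this thread does not describe how StoreVirtual actually chooses a gateway node (Python 3.9+):

```python
# Toy model of spreading volume gateways across storage nodes.
# Assumption: simple round-robin assignment; names are hypothetical and this
# is not how StoreVirtual is documented to pick gateways.

from collections import defaultdict


def assign_gateways(volumes: list[str], nodes: list[str]) -> dict[str, list[str]]:
    """Map each node to the volumes it would front under round-robin assignment."""
    by_node: dict[str, list[str]] = defaultdict(list)
    for i, volume in enumerate(volumes):
        by_node[nodes[i % len(nodes)]].append(volume)
    return dict(by_node)


if __name__ == "__main__":
    nodes = ["node1", "node2", "node3", "node4"]
    volumes = [f"vol{n}" for n in range(1, 9)]  # 2x as many volumes as nodes
    for node, vols in assign_gateways(volumes, nodes).items():
        print(node, vols)
```

With a single large volume, the same function puts all of it behind one node, which is the imbalance the suggestion is trying to avoid.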

I'm not 100% sure, but isn't 512 bytes quite small?

07-29-2013 06:49 AM

Jitun, he is using Hyper-V, not ESX, so I don't think one LUN per node is needed, as all servers talk to all nodes, assuming the HP DSM is set up.

Jay, you can check indirectly in the CMC whether the DSM was set up and is working: open each (or any) LUN and go to the iSCSI Sessions tab. That tab shows all connections to the LUN, and you should see every server and every NIC on those servers represented. There should be one connection per NIC that just says gateway connection, and then one connection per NIC per SAN node that says gateway connection + DSM. If you don't see this, then it wasn't set up correctly.
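The expected number of rows on the iSCSI Sessions tab follows directly from this description: one gateway connection per NIC plus one DSM connection per NIC per storage node. A sketch assuming every node is a valid target (no multi-site restriction):

```python
# Expected iSCSI sessions per server per LUN, following the description:
# one plain gateway connection per NIC, plus one DSM connection per NIC per node.
# Assumption: every storage node is an eligible target (no multi-site limits).


def expected_sessions(nics: int, nodes: int) -> dict[str, int]:
    gateway = nics       # one gateway connection per NIC
    dsm = nics * nodes   # one DSM connection per NIC per storage node
    return {"gateway": gateway, "dsm": dsm, "total": gateway + dsm}


if __name__ == "__main__":
    print("4 NICs, 4 nodes:", expected_sessions(nics=4, nodes=4))
    print("2 NICs, 2 nodes:", expected_sessions(nics=2, nodes=2))
```

For a server with 4 NICs against the 4-node cluster that is 4 gateway + 16 DSM = 20 sessions; fewer rows than that for a server points at a NIC that is not fully multipathed.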

As for the cluster size, 512 bytes is REALLY small and incorrect for Hyper-V; it should be 64K for CSV drives. I forget the stripe size defined by LeftHand, but I'm sure the Network RAID stripe is NOT 512 bytes, so doing random I/O in 512-byte clusters ends up causing significantly more I/O on the storage nodes: a request might be spread across up to 64K worth of 512-byte clusters, which means you could end up doing something like 120+ I/Os per actual I/O request. One easy way to check this is to watch node I/O and LUN I/O in the CMC, watch drive I/O on your servers, and compare them. I bet your nodes are doing way more I/O than your drives, and it's because of the cluster format size... that, and if you aren't actually using the HP DSM, that can slow things down as well.
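The "120+" figure corresponds to the ratio of 64K to 512 bytes. A sketch of that arithmetic, assuming a 64KiB request and the worst case described in the reply, where every cluster a request touches becomes a separate back-end I/O; it illustrates the claim rather than measuring it:

```python
# How many clusters a single request spans for a given cluster size.
# Assumptions: the request starts on a cluster boundary, and (worst case, per
# the reply) each cluster touched turns into its own back-end I/O.


def clusters_per_request(request_bytes: int, cluster_bytes: int) -> int:
    """Number of clusters a request spans, using integer ceiling division."""
    return (request_bytes + cluster_bytes - 1) // cluster_bytes


if __name__ == "__main__":
    request = 64 * 1024
    for cluster in (512, 4 * 1024, 64 * 1024):
        count = clusters_per_request(request, cluster)
        print(f"{cluster:>6}-byte clusters: {count} per 64 KiB request")
```

64KiB / 512B = 128 clusters, versus 16 at 4KiB and 1 at 64KiB.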

07-31-2013 04:54 AM - edited 07-31-2013 05:04 AM

Hi,

Sorry for not getting back sooner. I thought I'd subscribed to the thread, but hadn't...

I'm definitely going to chop up the LUN. I've been reading that only one controller can access a LUN at a time. This would mean that all traffic has to go through a single node, drastically reducing performance (if this is true). Also, from a backup perspective, it's a lot better to have several smaller LUNs, as in the event of a failure I can pick the order in which to restore them (or the VMs on them).

One of the other things I read was that the LUN's cluster size should be 512 bytes because that is the sector size of the physical disks. However, looking at this thread:

it would suggest that 64K easily outperforms 4K in all tests. I'll make sure my LUNs are formatted the same.

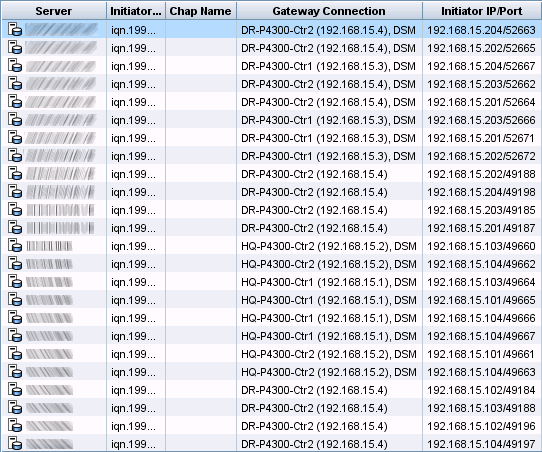

Here's what I'm seeing on the iSCSI sessions tab:

The top server (DR server) seems to be configured nicely: all 4 NICs have a gateway connection (no DSM) to 192.168.15.4, then a DSM connection from each NIC to .15.3 and .15.4. But shouldn't each NIC also have a DSM connection to .15.1 and .15.2?

The bottom server (HQ server), however, seems to be missing a DSM connection from 192.168.15.102 to .15.1 and .15.2, and also appears to be missing a gateway connection (no DSM) from .15.101 to .15.4, which strikes me as a little odd.

I'm wondering why only node 4 is showing a gateway connection (no DSM). Is this because, as Jitun implies, only one node can act as the gateway for a LUN, and in my case it happens to be .15.4?

07-31-2013 02:36 PM

Can you re-sort that picture by initiator IP? My eyes are crossing trying to match everything up.

You should have one non-DSM connection to one node per NIC, as you see there, and then one DSM connection from every NIC to every node in the cluster. In this case I see four NICs (ending 201, 202, 203, 204) and four node connections (ending 1, 2, 3, 4), so you should have 4 DSM connections per NIC. If you see some NICs missing DSM connections, it generally means one of three things:

1) that iSCSI session was not configured for MPIO, and/or the load-balancing setting was left at Vendor Specific and not changed to Round Robin;
2) it's temporary and the DSM is still discovering its connections;
3) you are using the HP DSM with multi-site, which would then only connect to the local site's nodes unless there was a local path failure.
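The check described here (every NIC should have a DSM session to every node) can be scripted once the iSCSI Sessions tab has been transcribed by hand. A sketch assuming a hypothetical (initiator IP, target IP, is_dsm) tuple format, which is not a CMC export format; the sample rows mirror the HQ-server description earlier in the thread. With a multi-site setup (reason 3), missing sessions to the remote site may be expected rather than a fault (Python 3.9+):

```python
# Report NICs that lack a DSM session to one or more storage nodes.
# Assumption: rows are transcribed by hand from the CMC iSCSI Sessions tab
# into a hypothetical (initiator IP, target IP, is_dsm) tuple format.

from typing import Iterable

Session = tuple[str, str, bool]


def missing_dsm(sessions: Iterable[Session], nodes: set[str]) -> dict[str, set[str]]:
    """Return {initiator IP: nodes it has no DSM session to}, skipping complete NICs."""
    dsm_targets: dict[str, set[str]] = {}
    for initiator, target, is_dsm in sessions:
        targets = dsm_targets.setdefault(initiator, set())
        if is_dsm:
            targets.add(target)
    return {nic: nodes - found for nic, found in dsm_targets.items() if nodes - found}


if __name__ == "__main__":
    nodes = {f"192.168.15.{n}" for n in range(1, 5)}
    sessions = [  # hypothetical rows shaped like the HQ-server description
        ("192.168.15.101", "192.168.15.1", True),
        ("192.168.15.101", "192.168.15.2", True),
        ("192.168.15.101", "192.168.15.3", True),
        ("192.168.15.101", "192.168.15.4", True),
        ("192.168.15.102", "192.168.15.4", False),  # gateway (non-DSM) connection
        ("192.168.15.102", "192.168.15.3", True),
        ("192.168.15.102", "192.168.15.4", True),
    ]
    for nic, gaps in missing_dsm(sessions, nodes).items():
        print(nic, "has no DSM session to", sorted(gaps))
```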

I attached a fully correct set of connections for two VSA nodes with two servers (two NICs each) connected to them. As you can see, each NIC has two DSM connections and one primary connection. In your case you should see 1 primary and 4 DSM connections per NIC.

As for cluster size, it all depends. If you want maximum IOPS, 512 bytes may work for you, but it will be very limiting for throughput. 64K seems to be getting more and more popular because it's required for CSV or ReFS drives, so I've moved most of my LUNs to 64K, but there are so many combinations that you will go mad trying to figure out the best option; you just need to understand what favoring one option over another means for your performance.