How HPE Data Fabric (formerly MapR) maximizes the value of data

Read highlights from a new IDC report concluding that the MapR Data Platform (now HPE Data Fabric, a solution for AI and analytics from edge to core to cloud) has a substantial positive impact on aspects of large-scale data usage and related business processes for the organizations interviewed. Then register to download the complete report.

Simplicity is a strength. That’s often true, but especially so in the increasingly complicated landscape of new data sources, advanced analytics applications, and a burgeoning interest in machine learning and artificial intelligence (AI) in businesses across a wide range of sectors. While simplicity is not always a given, with the right choices, it can be a practical reality.

Simplicity is particularly useful in large-scale data collection, curation, and feature extraction for modern data-driven applications that multiple teams develop and manage simultaneously. Without it, you can drown in all the froth that doesn't actually involve getting something useful done.

This simplicity comes from understanding and conquering the complexity. As Steve Jobs once said, “It takes a lot of hard work to make something simple, to truly understand the underlying challenges and come up with elegant solutions.”

Fortunately, HPE provides technology that meets the need for simplicity in data-driven applications. MapR, recently acquired by Hewlett Packard Enterprise, built a data platform engineered from the ground up to address these needs in a simple, robust, and cost-effective way that avoids the complexity of other distributed solutions. MapR has been integrated within the broader HPE business, with continued focus and investment in the MapR product roadmap, engineering talent, and customer success. The MapR Data Platform, now renamed HPE Data Fabric, provides a way to access or move data and applications across the entire landscape from edge to core to cloud. These capabilities also make it ideal to serve as the persistent storage layer of the recently announced HPE Container Platform. The impact of the data fabric for persistence and management of large-scale data, including interactions with containerized applications, has been demonstrated in a variety of real-world use cases.

To see exactly how HPE Data Fabric (MapR) maximizes the value that businesses can extract from data, let’s start with a new IDC study. Analysts at IDC interviewed a group of organizations that are long-time users of MapR to discover the impact of this technology on business outcomes across a range of sectors, including financial services, manufacturing, pharmaceuticals, and other industries. The enterprise organizations they interviewed are substantial businesses, and the majority have multi-billion-dollar revenues.

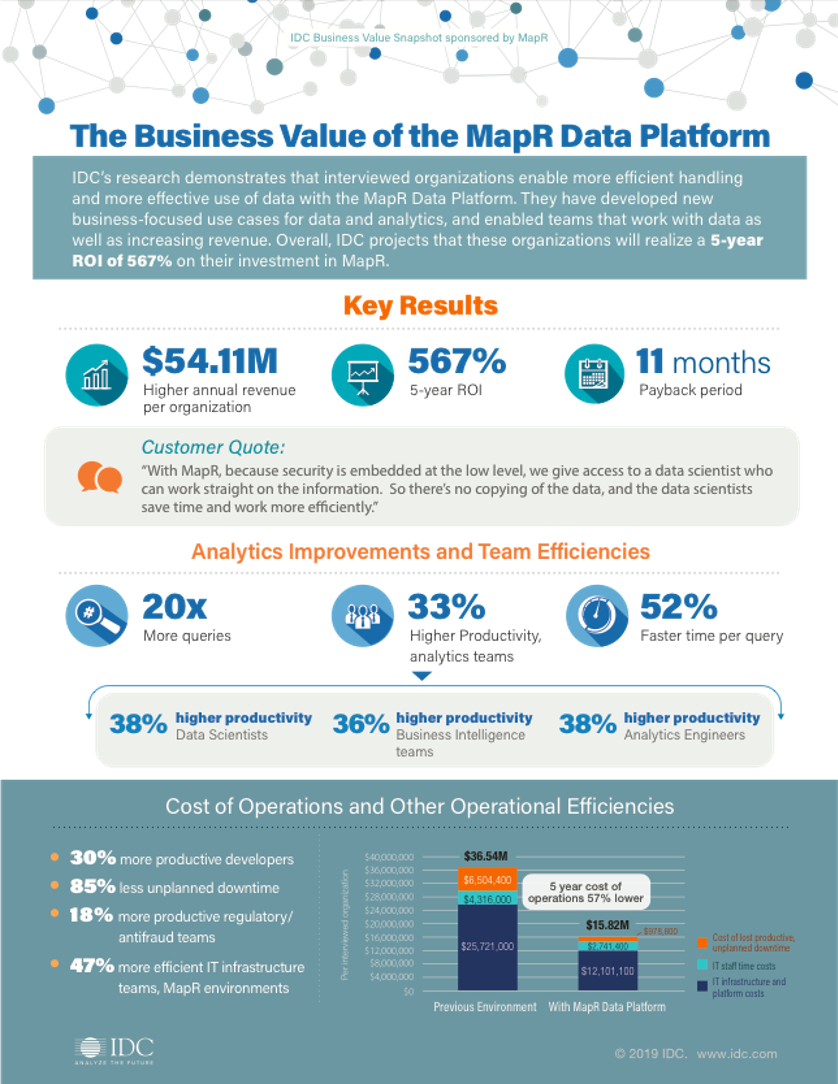

The IDC findings, published in a detailed report, were clear-cut and significant: MapR had a substantial positive impact on a number of aspects of large-scale data usage and on related business processes for the organizations interviewed. (As you refer to the report, keep in mind that it describes this technology by the original name, MapR Data Platform, but the capabilities and their impact still apply to the HPE Data Fabric.) Among a group of positive benefits, the report states an estimated 567% five-year ROI with an eleven-month payback period.

This is an impressive conclusion. How then does this technology produce these benefits?

For the past seven years, I’ve been in contact with both the users and the teams that engineered the MapR Data Platform, so while I’m gratified by the IDC study, I’m not at all surprised by the results. Let's examine some of those results in light of what I’ve observed about this technology.

Benefits for machine learning and AI

Thanks to the high value that machine learning and AI potentially can deliver, these approaches are rapidly being adopted across a wide range of businesses. HPE Data Fabric is uniquely suited to accelerate the development and management of large-scale AI and machine learning systems. One dramatic customer example that springs to mind is that of internationally known car manufacturers. A number of these car companies run key aspects of their autonomous car projects on this technology.

These projects involve petabytes of data per day from geo-distributed sources (i.e., autonomous cars in the field), and that data is used by a variety of sophisticated AI applications. The technology originally developed by MapR helps make this feasible by providing fast, reliable, convenient mirroring of data from edge clusters back to core data centers for aggregation and analysis. It also supports machine learning directly by allowing high-speed access to archived data during the training process.

Autonomous car projects provide a dramatic example of how customers are leveraging HPE software for their AI and machine learning systems, but they aren’t the only ones. This technology also helps with machine learning projects being developed by many types of businesses. How? The distributed file system capability of the MapR Data Platform is unusual because systems that actually produce data usually can write directly to MapR without intervening transformations or additional storage systems. The ability to collect data directly improves efficiency and productivity. Having large data sets collected together on the same platform and even on the same cluster also provides data scientists with a comprehensive view of data, a big advantage for building effective AI and machine learning systems, as shown in the results reported for the IDC study.

Keep in mind that the effort needed for data logistics is a major part of the overall effort needed to build and maintain an AI or machine learning system, so doing data logistics efficiently provides a huge advantage. MapR (HPE Data Fabric) makes it possible for a wide variety of machine learning tools to directly access the same data using whatever method is easiest.

Other distributed data storage platforms (such as HDFS-based platforms) generally require copying data out to specialized systems for analytics and machine learning; this is an unnecessary data movement that wastes effort. In contrast, the MapR technology simplifies and speeds up development by eliminating the need for time-consuming data copying into or out of analytics or machine learning environments. A data scientist, for instance, can use Python for feature extraction or data processing on smaller scale data sets and can also use Apache Spark for data processing of large-scale data, all on the same platform. It helps to use the tool you want for each situation, without having to change platforms.
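As a rough illustration of the small-scale Python case, here is a minimal sketch that extracts summary features from CSV files using only standard file APIs. It assumes the cluster's file system is exposed to the data scientist's machine as an ordinary directory; the mount path, the `value` column name, and the `extract_features` helper are all hypothetical and chosen only for this example.

```python
import csv
import statistics
from pathlib import Path

# Hypothetical mount point, assumed for illustration only.
DATA_DIR = Path("/mapr/my.cluster.com/projects/sensors/raw")


def extract_features(data_dir):
    """Return simple per-file summary features for CSV files in data_dir.

    Each CSV file is assumed to have a numeric column named "value"
    (a made-up schema for this sketch). Files with no rows are skipped.
    """
    features = {}
    for csv_path in sorted(Path(data_dir).glob("*.csv")):
        # Plain file I/O: no copy step into a separate analytics system.
        with csv_path.open(newline="") as f:
            values = [float(row["value"]) for row in csv.DictReader(f)]
        if values:
            features[csv_path.name] = {
                "count": len(values),
                "mean": statistics.fmean(values),
                "max": max(values),
            }
    return features


if __name__ == "__main__":
    for name, feats in extract_features(DATA_DIR).items():
        print(name, feats)
```

Under the same assumption, a Spark job could be pointed at the same directory when the data outgrows a single machine. How that path is expressed in Spark depends on how the cluster is configured.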

This convenience, flexibility and efficiency with data handling all contribute to improved development time and productivity, as observed in the IDC study. The IDC report cites 33% to 38% improved productivity for analytics teams and data scientists using MapR.

Use cases showcase the surprising benefits of reliability and availability

Having a system that is reliable and highly available is a good thing, but how do reliability and availability actually pay off?

Take the example of a Fortune 500 U.S. retail company. This customer has said that business teams using MapR handle their workloads with fewer machines, fewer administrators, and less downtime as compared with teams using other data systems. This contrast is particularly evident during the crush of holiday seasons, a crucial but potentially stressful period in their business cycle. Other teams set up a so-called “war room” to deal with reliability issues that the holiday crush causes with their other data clusters, but administrators of the MapR cluster had no such need: it handled increased demand easily.

It’s not just during the holiday season that the reliability and robustness of this technology make life better for IT teams. Companies that have used MapR for a long time have mentioned that many support teams no longer do on-call rotations because system problems are so rare. Putting everybody on 24-hour call duty is fine when nobody gets a call.

An example of the extreme reliability and availability of MapR-based systems is the authentication process of the Aadhaar service, run by the Unique Identification Authority of India (UIDAI). This government-supported service requires low-latency biometric authentication against a unique 12-digit identification number, and it must be available to individual users 24 hours a day, 7 days a week, from anywhere in India. Authentication is widely used in banking, employment, and the delivery of government services, so the uptime requirements are particularly stringent. After experiencing difficulties meeting requirements for availability and reliability using other distributed data platforms, the Aadhaar project switched the authentication process to MapR back in 2014. It’s been functioning without scheduled or unscheduled downtime ever since. That's good because Aadhaar is critical infrastructure for much of India's economy. Being able to meet this level of reliability opens the way to new projects you might otherwise never have thought to do.

These real-world stories about the reliability and availability of the MapR Data Platform fit with observations in the IDC study, which reported 85% less unplanned downtime for the MapR users interviewed. Better reliability reduces the need for workarounds, such as multiple clusters set up to try to cover gaps. This, in turn, avoids cluster sprawl. Simplifying architecture and reducing cluster sprawl not only save on hardware and software costs but also reduce the risk of human error.

Is multi-tenancy practical?

Less cluster sprawl, lower administrative and IT costs, and less downtime all contribute to better ROI. These benefits and more now also extend to the HPE Container Platform, which embeds HPE Data Fabric (MapR) as its data plane.

Another advantage is the ability to support high levels of multi-tenant usage, even on production clusters. The design of the MapR Data Platform is unique: rather than a collection of components integrated via connectors, MapR was engineered as a single system that includes fully distributed metadata for files, database tables, and event streams. All these capabilities are built into one technology that accepts diverse data types from a wide variety of data sources. This design avoids traffic jams even with complex workloads involving many applications from multiple teams. Distributed metadata means a cluster can hold many more files and applications can perform more operations simultaneously and reliably. The MapR design also protects against data loss. These capabilities, combined with core security, sophisticated load-balancing, and efficient data management, all contribute to making multi-tenancy practical and desirable.

We’ve seen the impact: almost all customers with a MapR production cluster have multiple applications running. The IDC report mentions that, for the organizations interviewed, the average number of business applications running on MapR is 16. We’ve also seen that both the total number of applications and the number running on the same cluster increase over time.

Customers usually start with one application but soon find other opportunities to extract value from the same data, taking advantage of sunk costs from initial projects. Subsequent applications have a lower hurdle in terms of payback (after all, the cluster is already paid for). At the extreme end is one large financial institution, a long-time MapR customer, that runs hundreds of applications on a single, multi-tenant cluster, taking advantage of the MapR technology to safely mix development and production applications.

Complete results of the IDC report

This infographic highlights some of the key results of the IDC study.

Register to download the complete IDC report. We recently co-presented a webinar with IDC to review the results and implications of the report findings. You can watch the full on-demand replay.

Here you have seen the unique MapR Data Platform track record for providing competitive advantages in a variety of business sectors. Now find out how, as HPE Data Fabric, it can address the scale, reliability, and mobility data needs of your business—from edge to core to cloud. Watch this short lightboard video about HPE Data Fabric to find out more.

Ellen Friedman

Hewlett Packard Enterprise

twitter.com/Ellen_Friedman

linkedin.com/showcase/hpe-ai

hpe.com/info/data-fabric

Ellen Friedman is a principal technologist at HPE focused on large-scale data analytics and machine learning. Ellen worked at MapR Technologies for seven years prior to her current role at HPE, where she was a committer for the Apache Drill and Apache Mahout open source projects. She is a co-author of multiple books published by O’Reilly Media, including AI & Analytics in Production, Machine Learning Logistics, and the Practical Machine Learning series.
