Industrialized efficiency in the data science discovery process

Explore the data science discovery process and examine some of the challenges faced when attempting to scale up the process.

In my previous post, The benefits of industrializing data science, I took a look at the overall production flow through the system and discussed some critical points where time is wasted either handing off work between groups or waiting for things to happen (such as infrastructure provisioning). I also identified a number of key questions that you will need to have answers to if you are to have a hope of closing the insight and execution gaps I identified in Mind the analytics gap—a tale of two graphs. In this fourth blog in my series, I’m digging into the data science discovery process to understand more about the challenges and look at how they can be addressed.

The first thing to point out about the data science discovery process is that it contains a fair amount of “craft” as well as the science element. Although scientific principles of hypothesis generation and testing will be common, each data scientist will tend to approach the problem differently, may prefer different tools, code differently, and will end up with different results. And to put it bluntly, some are just naturally quicker or better at it than others.

Secondly, production line flexibility is another key aspect to consider. If the mission is to leverage data science to optimize every business process, it stands to reason that you will need to have an adaptable production line that allows you to tackle every kind of business problem, not just the simple ones. This will require flexible infrastructure, tools and people—and the flexibility needs to be a design principle as opposed to an optional feature.

Method in the madness

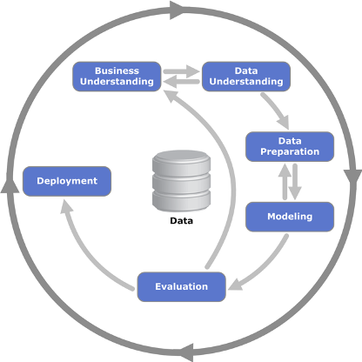

If you are a practicing data scientist, I’m sure you will have adopted or adapted one of the many methods that are out there “in the wild.” For others, and just as a guide to the kind of steps involved in the process, I’m going to use the cross-industry standard process for data mining (CRISP-DM).

The first two steps of business and data understanding are key, of course. It’s the data science equivalent of “reading the exam question twice and answering it just once.” It’s important to note that often a business problem may need to be broken down into multiple parts in order to answer it effectively. For example, when presented with a customer churn problem in a telecoms company, you would need to find out whether the problem is related to business or retail customers, whether it is voluntary or involuntary churn, pre-pay or post-pay (contract) churn, etc. Each of these different types of churn may have a distinct “signature” and will need to be thought about differently.

The planned action will also have a bearing on the data that’s available to address the challenge. If you were planning on sending a direct mail to each customer at (high) risk of churn, you would most probably have all the data you would need available. But if instead you wanted to deploy the model to your inbound call center, you would only have access to all the data once the customer’s identity was verified. More recent data such as problems with dropped calls or “bill shock” may only be known to the call center operator during the call, so any churn prediction would need to be updated and acted upon in real time.
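To make the call center case concrete, here is a minimal sketch of re-scoring a customer as new information arrives during the call. It assumes a simple logistic scoring model; the feature names, weights, and bias are hypothetical values chosen for illustration and do not come from a real churn model.

```python
import math

# Hypothetical weights for illustration only; a real model would be trained on historical data.
WEIGHTS = {
    "months_to_contract_end": -0.15,
    "dropped_calls_last_7d": 0.40,
    "bill_shock": 1.20,
}
BIAS = -1.0


def churn_probability(features):
    """Return a logistic churn score for the features supplied.

    Features that are not yet known are simply left out of the dict,
    so they contribute nothing to the score.
    """
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


# Data available once the caller's identity has been verified.
known_before_call = {"months_to_contract_end": 2}

# Data that only surfaces while the operator is talking to the customer.
during_call = {**known_before_call, "dropped_calls_last_7d": 5, "bill_shock": 1}

p_before = churn_probability(known_before_call)
p_during = churn_probability(during_call)
print(f"before call: {p_before:.2f}, during call: {p_during:.2f}")
```

The point of the sketch is that the same scoring function has to be callable with whatever data is available at that moment, and cheap enough to run again while the customer is still on the line.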

The data challenge

Most large organizations have put in place a data governance and/or business glossary of some kind to help better manage data and comply with regulatory demands. This is often a good place for a data scientist to start in order to survey the potential data that supports the analysis. In many cases, other data is required in order for the value of analysis to be surfaced. Access to “other” data, whether internal or external, can be a real issue because of regulatory and/or security concerns, and production data rules. These are problems that need to be overcome at an organizational level, or the same problem will be revisited every time. This frustrates all concerned and can impact what data science can achieve. Essentially the data science process has to be seen as critical and of a "production" nature with access approvals managed through automation rather than a manual request each time.

If this is data your data scientist hasn’t seen before, then even at this early stage they are likely to need a modest environment with access to the data so it can be quickly assessed to avoid nasty surprises. Does it look like the data you were expecting? Are there outliers? Missing values? Can I easily integrate it with my other data? What does the data distribution look like?
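As a rough illustration of that first look at unfamiliar data, the sketch below profiles a single numeric column using only the Python standard library. The column name and values are made up, and the 1.5 × IQR rule is just one common convention for flagging outliers, not a recommendation from this series.

```python
import statistics


def quick_profile(values):
    """Summarize a numeric column in which None marks a missing entry."""
    present = [v for v in values if v is not None]
    missing = len(values) - len(present)

    # Quartiles give a first impression of the distribution.
    q1, median, q3 = statistics.quantiles(present, n=4)
    iqr = q3 - q1

    # Flag values far outside the interquartile range (1.5 * IQR convention).
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    outliers = [v for v in present if v < low or v > high]

    return {
        "count": len(values),
        "missing": missing,
        "min": min(present),
        "median": median,
        "max": max(present),
        "outliers": outliers,
    }


# Hypothetical sample: monthly spend per customer, with gaps and one suspicious value.
monthly_spend = [21.0, 19.5, None, 22.0, 20.5, 250.0, 18.0, None, 23.5, 20.0]
profile = quick_profile(monthly_spend)
print(profile)
```

Even a check this small answers several of the questions above (missing values, outliers, rough shape of the distribution) before any real modeling effort is spent.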

A data science discovery assembly line

The next three steps of data preparation, modeling, and evaluation are what you might think about as data science discovery. Here, the data scientist will repeatedly iterate through these steps, perhaps modifying model parameters, the data

Even though there will be many similarities, the discovery part of the data science process is essentially a personal one. As this is the case, to maximize productivity and support innovation, it makes sense to give your data scientist everything they need. If one prefers to use Jupyter notebook and another Zeppelin, does it really matter if you can accommodate both? Imposing a standard set of tools (and versions) or standard-sized infrastructure will only serve to frustrate people, which is the last thing you want if you’re trying to grow the discipline in your business. I’m not advocating you give your data scientists endless resources to burn as that would be wasteful, but you need to strike the right balance.

The way to address this conundrum is through containerization of the data science environment—allowing each user to work in isolation from each other, especially if you can do so within an enterprise framework that might allow you to facilitate the adoption of newer tools while also ensuring integrity, compliance, and security.

Data management costs and delays can also be a real challenge because of the way data science infrastructure has been added onto existing data lakes based on direct attached storage (DAS) and 3-way replication, as is common in traditional Hadoop-based platforms. This is sub-optimal to say the least, as the data is typically replicated to provide a writable copy before the data scientist can begin work. This task is typically performed by another group, introducing delays in scheduling and replication as well as excess storage costs. Worse, each time the data scientist wants to revise the data included, they may need to go back “cap in hand” to request the new data also be copied. More cost and more delay! These delays are further exacerbated by the need for the data governance and/or data security teams to also be involved in the process.

How do you scale data science?

I’ve already talked about the craft involved in data science, but perhaps a better way of thinking about it is as a team sport but with individual, highly skilled players. To get the most out of them, they need to play in the right position, with space to “do their thing.” This includes the training facilities and backroom staff that support them.

Relying on a single, simple, and standardized environment causes several issues that will inevitably hamper innovation and productivity. One speed and length for the production line and one set of tools for everyone is going to limit what can be built, however good the team huddled around it is. You also need to avoid the situation where one data scientist has to stop the production line because of an issue they are having, as this will impact all the other projects underway. Data science can be messy, so it’s not unexpected for experiments to result in runaway activities that impact others, especially if the infrastructure is underprovisioned.

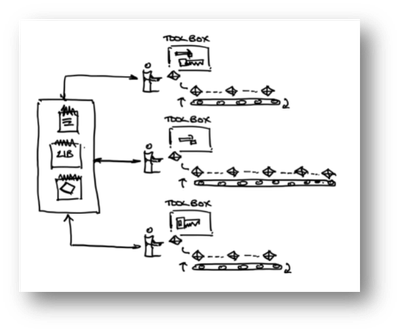

A better approach is to provision each data scientist with an independent and isolated environment for each new project they need to tackle. In this way, you can ensure the production line length, speed, and tooling is designed to meet their

Despite the data scientists working independently, it’s still important that they operate as a team through mentoring and sharing of best practices and discoveries. Through their work they will find new features in data, interesting results or write important blocks of code that could help others. This represents the organizational learning that should be preserved through code repositories and model libraries as well as wikis and other sharing tools.

In many cases, having found a model and feature combination that looks promising, the data scientist will go through a second phase to optimize the model hyperparameters or, in the case of a neural network, determine the optimal architecture. As this phase is computationally heavy, the data scientist will typically need a much larger environment that is often GPU based to iterate through each possible combination in a reasonable chunk of time, as well as the hyperparameter optimization or neural architecture search tooling to manage the process. This second provisioning process for the environment, tooling, code, and data can be another point of delay in the process which needs to be avoided if you are to keep your data scientists as productive as possible.
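To show why this phase gets computationally heavy, here is a minimal sketch of an exhaustive grid search written with the standard library. The parameter names, the candidate values, and the `toy_score` function are all hypothetical stand-ins; in practice the scoring step would train and validate a real model, and dedicated tooling would manage the search.

```python
import itertools


def grid_search(param_grid, score_fn):
    """Try every combination in param_grid and return the best one found."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    trials = 0
    for combo in itertools.product(*(param_grid[name] for name in names)):
        params = dict(zip(names, combo))
        score = score_fn(params)  # in real use: train and evaluate a model
        trials += 1
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score, trials


def toy_score(params):
    """Stand-in for a validation score; peaks at learning_rate=0.1, max_depth=6."""
    lr_penalty = (params["learning_rate"] - 0.1) ** 2
    depth_penalty = (params["max_depth"] - 6) ** 2 / 100
    return -lr_penalty - depth_penalty


grid = {
    "learning_rate": [0.01, 0.1, 0.3],
    "max_depth": [3, 6, 9],
    "n_estimators": [100, 300],
}
best_params, best_score, trials = grid_search(grid, toy_score)
print(f"{trials} trials, best parameters: {best_params}")
```

The number of trials is the product of the list lengths (3 × 3 × 2 = 18 here), so adding one more hyperparameter or a few more candidate values multiplies the work. When each trial means training a model, that growth is what drives the need for a larger, often GPU-based, environment.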

And in most cases, the discovery process will be time-boxed at the beginning of the project. A model can always be improved through more iterations and diligence, but development investment needs to be balanced with the need to apply the model in production and the data science backlog (getting other models into production). Fixing the project duration in this fashion also significantly simplifies the coordination and scheduling of the downstream deployment step.

A quick recap

In this blog, I unpacked the data science discovery process to take a look at some of the challenges faced when attempting to scale up the process. In particular, I discussed the long delays that are typical—because of the often manual, multi-step, and human workflows involved in providing the data and physical environment needed for the data scientist to even start their work.

By adopting a more modern containerized approach to these workloads, you can gain significant flexibility over the tools and versions on offer to data scientists. You can also have varying sizes of environment and benefit from being able to optimize complementary workloads and timings, and even deploy workloads across a number of environments, including on-premises and cloud.

Through automation within an enterprise framework, you can then provision within minutes the environments needed by data scientists and others, delivering the throughput required and improving quality. More importantly, by automating in this fashion, you get the chance to embed the robust auditing and check procedures required to ensure access to data and systems complies with corporate standards. That is genuinely hard to achieve when data is fractured and replicated hundreds or even thousands of times across the enterprise.

Catch up on my previous blog post—and watch for the next blog post where I will take a look downstream to understand how the outputs from the data science discovery process are deployed and operationalized to create value for the business. In particular, the focus will be on how this process can also be transformed so it can keep pace with the new discovery process.

This blog is part of a series of blogs on industrializing data science. The best place to start is the first blog on the topic:

Other blogs in the series include:

- Industrializing the operationalization of machine learning models

- Transforming to the information factory of the future

You might also like to take a look at two earlier blogs exploring how IT budgets and focus need to shift from business intelligence and data warehouse systems to data science and intelligent applications.

Doug Cackett

Hewlett Packard Enterprise

twitter.com/HPE_AI

linkedin.com/showcase/hpe-ai/

hpe.com/us/en/solutions/artificial-intelligence.html

Doug has more than 25 years of experience in the Information Management and Data Science arena, working in a variety of businesses across Europe, Middle East, and Africa, particularly those who deal with ultra-high volume data or are seeking to consolidate complex information delivery capabilities linked to AI/ML solutions to create unprecedented commercial value.
