
Need for speed and efficiency from high performance networks for Artificial Intelligence

With an increase in the number of mission-critical workloads, such as AI/ML, running on denser and faster data center infrastructure, there is a greater need for speed and efficiency from high-performance networks.

The arrival of faster storage in the form of solid-state devices such as Flash and NVMe is having a similar effect. We see the need for more bandwidth all around us as our lives increasingly intersect with technology. Leading this charge are Artificial Intelligence (AI) workloads, which involve complex computations that depend on fast, efficient delivery of very large data sets. Lightweight protocols such as Remote Direct Memory Access (RDMA) can further speed up data exchange between computing nodes and streamline the communication and delivery process.

It was only a few years ago that most data centers started deploying 10GbE in volume, reducing the required training times. Today, we see a shift toward 25 and 100GbE, and adoption of 200/400GbE, to address emerging bandwidth demands. Overall, the Ethernet switch market is undergoing a transformation: where growth in Ethernet switching infrastructure was previously led by 10/40Gb, the tide is turning in favor of 25 and 100Gb.

Analysts agree that 25 and 100Gb, along with emerging 400Gb Ethernet speeds, are expected soon to surpass all other Ethernet solutions as the most deployed Ethernet bandwidth. This trend is driven by mounting demand for host-side bandwidth as data center densities increase, putting pressure on switching capacities to keep pace. Beyond bandwidth, 25 and 100GbE technology helps drive better cost efficiency in capital and operating expenses compared with legacy 10/40Gb, while improving reliability and power efficiency for optimal data center efficiency and scalability.
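To put these link speeds in perspective, the short Python sketch below estimates how long it takes to move a data set at each nominal Ethernet rate. It assumes a hypothetical 10 TB data set and an idealized link running at full line rate with no protocol overhead, congestion, or storage bottleneck, so real transfers will take longer than these figures.

```python
# Idealized time to move a data set at nominal Ethernet line rate.
# Assumption: the link runs at full rate with no protocol overhead,
# congestion, or storage bottleneck; real throughput will be lower.

LINK_SPEEDS_GBPS = [10, 25, 40, 100, 200, 400]  # nominal Ethernet speeds


def transfer_seconds(dataset_bytes: int, link_gbps: float) -> float:
    """Return the seconds needed to send dataset_bytes over a link_gbps link."""
    bits = dataset_bytes * 8
    return bits / (link_gbps * 1e9)


if __name__ == "__main__":
    dataset = 10 * 10**12  # hypothetical 10 TB training data set
    for speed in LINK_SPEEDS_GBPS:
        minutes = transfer_seconds(dataset, speed) / 60
        print(f"{speed:>3} GbE: {minutes:7.1f} min")
```

Because the time scales inversely with link speed, each step from 10 to 25 to 100GbE cuts the idealized transfer time by the same factor as the speed increase.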

The emergence of data processing and analysis at the Edge

Edge computing allows data from devices to be analyzed at the edge before being sent to the data center. Intelligent Edge technology can help maximize a business’s efficiency: instead of sending data out to a central data center, analysis is performed at the location where the data is generated. Micro data centers at the Edge, which integrate storage, compute and networking, deliver the speed and agility needed to process data closer to where it’s created.

According to Gartner, an estimated 75% of data will be processed outside traditional centralized data centers by 2025. Edge computing has transformed the way data from millions of devices around the world is handled, processed, and delivered. Now, faster networking is enabling intelligent edge computing systems to accelerate the creation and support of real-time applications such as video processing and analytics, factory automation, artificial intelligence and robotics.

Faster servers and storage systems connected by high-speed Ethernet networking allow this data to be processed efficiently and intelligently at the edge. This is where AI, applied at the Edge and enabled by high-speed networking, can transform how we collect, transport, process and analyze data with speed and agility. As data becomes more distributed, with billions of devices at the Edge generating it, real-time processing will be required to analyze it effectively and draw actionable insights.

There is a paradigm shift from collect-transport-store-analyze to collect-analyze-transport-store, in real time. The Intelligent Edge brings processing power closer to where the data is created, which also reduces latency for real-time applications. Streaming analytics can provide the intelligence needed at the edge, where data can be cleansed, normalized, and streamlined before being transported to the central data center or cloud for storage, post-processing, analytics, and archiving. Once data from multiple edge locations reaches the central data center, it can be further combined and correlated for trend analysis, anomaly detection, projections, predictions, and insights using machine learning and AI.
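The collect-analyze-transport-store pattern can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not an HPE or streaming-framework API: the `Reading` and `Summary` types, the 0 to 100 plausible value range, and the sensor names are all hypothetical. Each window of raw readings is cleansed (missing or out-of-range samples dropped), normalized to [0, 1], and collapsed into one summary per sensor, so only the summaries need to travel to the central data center.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, Iterable, List, Optional


@dataclass
class Reading:
    sensor_id: str
    value: Optional[float]  # None stands in for a dropped or corrupt sample


@dataclass
class Summary:
    sensor_id: str
    count: int
    mean_value: float
    maximum: float


def cleanse(readings: Iterable[Reading], lo: float, hi: float) -> List[Reading]:
    """Drop missing samples and values outside the plausible range [lo, hi]."""
    return [r for r in readings if r.value is not None and lo <= r.value <= hi]


def normalize(readings: List[Reading], lo: float, hi: float) -> List[Reading]:
    """Rescale values to [0, 1] so data from different sites is comparable."""
    span = hi - lo
    return [Reading(r.sensor_id, (r.value - lo) / span) for r in readings]


def summarize(readings: List[Reading]) -> List[Summary]:
    """Collapse each sensor's window into a single record for transport."""
    by_sensor: Dict[str, List[float]] = {}
    for r in readings:
        by_sensor.setdefault(r.sensor_id, []).append(r.value)
    return [
        Summary(sensor, len(vals), mean(vals), max(vals))
        for sensor, vals in sorted(by_sensor.items())
    ]


def process_at_edge(window: List[Reading], lo: float, hi: float) -> List[Summary]:
    """Collect, then analyze at the edge; only the summaries are transported."""
    return summarize(normalize(cleanse(window, lo, hi), lo, hi))


if __name__ == "__main__":
    window = [
        Reading("sensor-1", 20.0),
        Reading("sensor-1", None),   # dropped sample
        Reading("sensor-1", 30.0),
        Reading("sensor-2", 500.0),  # out of range, discarded
        Reading("sensor-2", 25.0),
    ]
    summaries = process_at_edge(window, lo=0.0, hi=100.0)
    print(f"{len(window)} raw readings -> {len(summaries)} records sent upstream")
    for s in summaries:
        print(s)
```

In this toy window, five raw readings become two upstream records. With real sensor rates, the reduction (and the bandwidth saved) depends on the window size and on which summary statistics you choose to keep.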

GPUs improve the performance required for parallel processing

The rapid increase in the performance of graphics hardware, coupled with recent improvements in its programmability, has made graphics accelerators a compelling platform for computationally demanding tasks in a wide variety of application domains. GPU-based clusters are used to perform compute-intensive tasks such as finite element computations and computational fluid dynamics [1]. Because GPUs provide high core counts and high floating-point throughput, high-speed networking is required to connect GPU platforms, providing the throughput and the lowest latency that GPU-to-GPU communication requires.

Using AI, organizations are developing, and putting into production, process and industry applications that automatically learn, discover, and make recommendations or predictions that can be used to set strategic goals and provide a competitive advantage. To accomplish these strategic goals, organizations require data scientists and analysts who are skilled in developing models and performing analytics on enterprise data. These data analysts also require specialized tools, applications, and compute resources to create complex models and analyze massive amounts of data.

Deploying and managing these compute-intensive applications can place a burden on already taxed IT organizations. Red Hat OpenShift Container Platform, running on HPE ProLiant DL servers with NVIDIA® T4 GPUs, can provide an IT organization with a powerful, highly available DevOps platform that allows these data analytics tools and applications to be rapidly deployed to end users through a self-service OpenShift registry and catalog. In addition, OpenShift compute nodes equipped with NVIDIA T4 GPUs provide the compute resources needed to meet the performance requirements of these compute-intensive applications and queries.

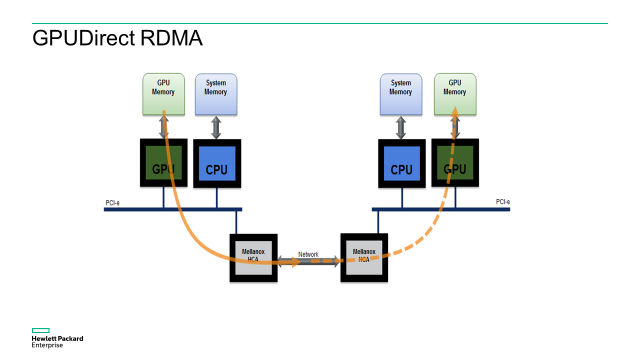

GPUDirect for GPU-to-GPU communication

The main performance issue when deploying clusters of multi-GPU nodes is the interaction between GPUs, that is, the GPU-to-GPU communication model. Before GPUDirect technology, any communication between GPUs had to involve the host CPU and required buffer copies. CPU involvement in GPU communication and the extra buffer copies created bottlenecks that slowed data delivery between GPUs. GPUDirect enables GPUs to communicate faster by removing the CPU from the communication loop and eliminating buffer copies. The result is higher overall system performance and efficiency, reducing GPU-to-GPU communication time by 30%. This allows scaling out to tens, hundreds, or even thousands of GPUs across hundreds of nodes. For this kind of scaling to work flawlessly, the network must offer little to no packet loss, the lowest possible latency, and the best congestion management.
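Why removing staging copies matters can be illustrated with a deliberately simple Python model of the sending side of one GPU-to-GPU transfer. Everything here is an assumption for illustration: the 64 MiB message size and the per-stage bandwidths are hypothetical round numbers, and the stages are treated as running one after another, whereas real communication stacks pipeline them. The model does not reproduce the 30% figure above; it only shows that each extra pass through host memory adds its own transfer time.

```python
from typing import List, Tuple

# Hypothetical figures chosen for illustration only, not measurements.
MESSAGE_BYTES = 64 * 2**20  # a 64 MiB buffer bound for a GPU on another node

Stage = Tuple[str, float]  # (description, bandwidth in GB/s)

# Sender side before GPUDirect: data is staged through host memory.
STAGED_PATH: List[Stage] = [
    ("GPU memory -> host pinned buffer over PCIe", 16.0),
    ("CPU memcpy into the NIC's registered buffer", 10.0),
    ("NIC sends from host memory onto 100GbE", 12.5),
]

# Sender side with GPUDirect RDMA: the NIC reads GPU memory directly.
DIRECT_PATH: List[Stage] = [
    ("NIC reads GPU memory and sends onto 100GbE", 12.5),
]


def path_time_ms(stages: List[Stage], nbytes: int) -> float:
    """Sum the time of each stage, assuming stages run one after another."""
    seconds = sum(nbytes / (gb_per_s * 1e9) for _desc, gb_per_s in stages)
    return seconds * 1e3


if __name__ == "__main__":
    staged = path_time_ms(STAGED_PATH, MESSAGE_BYTES)
    direct = path_time_ms(DIRECT_PATH, MESSAGE_BYTES)
    print(f"staged through host: {staged:.2f} ms ({len(STAGED_PATH)} stages)")
    print(f"direct (GPUDirect):  {direct:.2f} ms ({len(DIRECT_PATH)} stage)")
```

The serialized assumption exaggerates the gap; pipelining narrows it, but the staged path still consumes CPU cycles and host memory bandwidth that the direct path does not.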

Artificial intelligence, machine learning, data analytics and other demanding data-driven workloads require enormous computational capability from both servers and the network. Today’s very fast multi-core processors address this by accelerating computational processing, which in turn places a huge demand on the network infrastructure.

Here is a checklist you can reference for the requirements of high-performance networking infrastructure in data centers that move AI/ML data sets:

- Highest bandwidth. 25/100/200GbE and upcoming 400GbE speeds

- ZERO packet loss. Ensures reliable and predictable performance

- Highest efficiency. Built-in accelerators for storage, virtualized, and containerized environments

- Ultra-low latency. True cut-through latency

- Lowest power consumption. Improves operational efficiency

- Dynamically shared, flexible buffering. Flexibility to dynamically adapt and absorb micro-bursts and avoid congestion

- Advanced load balancing. Improves scale and availability

- Predictable performance. High Tb/s switching capacity offering wire-speed performance

- Low cost. Superior value
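Two items on this checklist, flexible buffering and cut-through latency, come down to simple arithmetic, sketched below in Python. The incast scenario (three 25GbE senders bursting at one 25GbE receiver for 10 µs) and the frame sizes are hypothetical examples, not M-series specifications. The first function estimates how much buffer a switch needs to absorb a micro-burst without dropping packets; the second computes the per-hop serialization delay a store-and-forward switch adds, which a cut-through switch largely avoids by forwarding once it has read the frame header.

```python
def burst_buffer_bytes(ingress_ports: int, port_gbps: float,
                       egress_gbps: float, burst_us: float) -> float:
    """Bytes a switch must buffer when several ports burst toward one egress port.

    Traffic arriving faster than the egress port can drain it accumulates
    in the buffer for the length of the burst; without enough buffer the
    excess is dropped.
    """
    excess_gbps = max(ingress_ports * port_gbps - egress_gbps, 0.0)
    return excess_gbps * 1e9 * (burst_us / 1e6) / 8


def store_and_forward_delay_us(frame_bytes: int, port_gbps: float) -> float:
    """Serialization delay a store-and-forward hop adds before forwarding.

    A store-and-forward switch waits for the whole frame to arrive; a
    cut-through switch starts forwarding after reading the header, so it
    avoids most of this per-hop delay.
    """
    return frame_bytes * 8 / (port_gbps * 1e9) * 1e6


if __name__ == "__main__":
    # Hypothetical incast: three 25GbE senders burst at one 25GbE receiver for 10 us.
    print(f"{burst_buffer_bytes(3, 25, 25, 10):,.0f} bytes to absorb")
    # A 1500-byte frame vs. a 9000-byte jumbo frame at 25GbE.
    for size in (1500, 9000):
        print(f"{size} B frame: {store_and_forward_delay_us(size, 25):.2f} us per hop")
```

A shared buffer helps here because the excess lands on one egress port at a time, so memory pooled across ports can absorb bursts that a small fixed per-port buffer could not.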

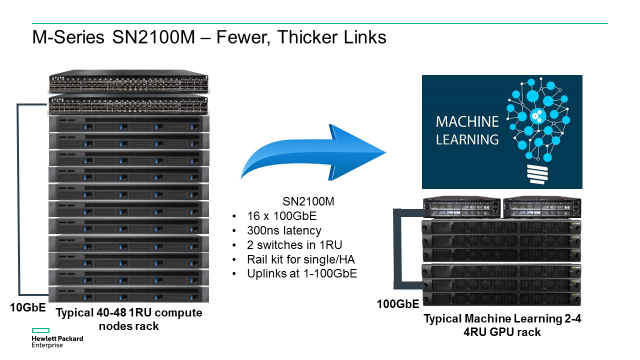

Value delivered by HPE M-series

The M-series’ unique half-width switch configuration and break-out (splitter) cables further extend its feature set and functionality. A breakout cable can split a single 200Gb port into two 100Gb links, or any 100Gb switch port into four 25Gb ports. This lets one switch port connect to up to four adapter cards in servers, storage, or other subsystems. With a reach of 3 meters, these cables can accommodate connections within any rack.

Combined with the half-width form factor, this provides an ideal mix of performance, rack efficiency, and flexibility for today’s storage, hyperconverged, and machine learning environments, as well as for processing at the Edge. Many of these environments don’t require 48 ports in a single rack, especially at 25/100GbE speeds. Typically, for redundancy, two 48+4-port switches are installed at the top of rack (TOR), leaving more than half of each switch’s ports unused and taking up two rack units of space. The half-width design of the M-series SN2100M delivers high availability and increased density by allowing two switches to sit side by side in a single U slot of a 19-inch rack. Breakout cables can split ports at up to a 1-to-4 ratio, for a port density of up to 64 10/25GbE ports in a single rack U. This provides high port density along with higher capacity and efficiency, simplifies scale-out environments, supports intelligent processing of data at the edge, and saves on total cost of ownership. It also offers an easy migration path to next-generation networking.
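The breakout arithmetic can be checked with a small Python sketch. It encodes only the two splits described in the text (200Gb to 2 x 100Gb, 100Gb to 4 x 25Gb); the 16-port starting configuration is an assumption, inferred from the fact that 64 ports at a 1-to-4 ratio implies 16 physical ports being broken out. `break_out` is a hypothetical helper for illustration, not part of any switch management API.

```python
from typing import Dict, List, Tuple

# Breakout options described in the text: 200Gb -> 2 x 100Gb, 100Gb -> 4 x 25Gb.
# Port speeds not listed here are treated as unsplit single links.
BREAKOUT: Dict[int, Tuple[int, int]] = {200: (2, 100), 100: (4, 25)}


def break_out(port_speeds_gbps: List[int]) -> List[int]:
    """Return the logical link speeds after applying breakout cables."""
    links: List[int] = []
    for speed in port_speeds_gbps:
        count, lane_gbps = BREAKOUT.get(speed, (1, speed))
        links.extend([lane_gbps] * count)
    return links


if __name__ == "__main__":
    # Assumption: 16 x 100Gb physical ports, which is what
    # "up to 64 10/25GbE ports" at a 1-to-4 ratio implies (64 / 4 = 16).
    ports = [100] * 16
    links = break_out(ports)
    print(f"{len(ports)} x 100Gb ports -> {len(links)} x 25Gb links")
    # Splitting redistributes bandwidth across more endpoints; it adds none.
    print(f"aggregate: {sum(ports)} Gb/s before, {sum(links)} Gb/s after")
```

The aggregate line is the design point worth noting: breakout cables trade per-link speed for endpoint count, which suits racks where many servers each need 25Gb rather than a few needing 100Gb.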

Summary

Whether or not you are supporting AI/ML or data analytics (DA) workloads today, most modern data centers are highly virtualized and run on solid-state storage. NVMe storage is just around the corner and is slated to push performance standards further. For both fast NVMe storage and all computational inferencing workloads, predictable and reliable performance depends on fast and accurate data delivery, and that starts at the network.

Rapid improvements in data center computing and low-latency storage solutions have shifted data center performance bottlenecks to the network. Today’s data centers should be designed to handle this anticipated bandwidth with a low-latency, lossless Ethernet fabric. Beyond the performance advantages, the economic benefits of running NVMe and AI workloads over Ethernet are substantial. The HPE M-series Ethernet switch family delivers the highest levels of performance at all the relevant speeds, including 1, 10, 25, 40, 100 and 200Gb/s. These switches have the lowest latency of any mainstream switch, feature zero packet loss (no avoidable packet loss, for example due to traffic microbursts), and deliver that performance fairly and consistently across any packet size, mix of port speeds, or combination of ports.

The M-series Ethernet switch meets and exceeds the most demanding criteria for wire-speed performance, so that even the most robust cognitive computing applications can make and act on real-time decisions.

Find out why HPE M-Series Ethernet Switch Family and HPE Alletra are a perfect match.

[1] Computational fluid dynamics is a branch of fluid mechanics that uses numerical analysis and data structures to analyze and solve problems that involve fluid flows.

Meet Around the Storage Block blogger Faisal Hanif, Product Manager, HPE Storage and Big Data. Faisal leads Product Management and Marketing for next-generation products and solutions for storage connectivity and network automation and orchestration. Follow Faisal on Twitter @ffhanif.

Storage Experts

Hewlett Packard Enterprise

twitter.com/HPE_Storage

linkedin.com/showcase/hpestorage/

hpe.com/storage
