
For sale: Memory-Driven Computing

By Curt Hopkins, Staff Writer, Enterprise.nxt

In November 2014, Hewlett Packard Labs announced The Machine project, which gave rise to the company’s vision for a new computer architecture called Memory-Driven Computing. In 2017, Hewlett Packard Enterprise demonstrated that the architecture works by unveiling a prototype, also called The Machine. With 160 terabytes of shared memory, it remains the largest single-memory computer ever built.

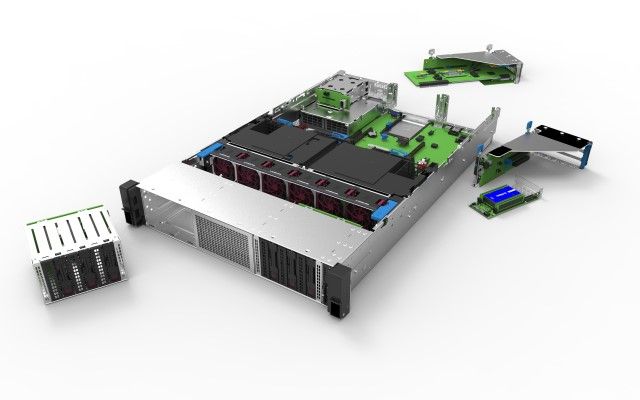

This June, HPE CEO Antonio Neri announced the next big step toward a Memory-Driven future: the company will soon begin taking orders for Memory-Driven Computing development kits, with delivery early next year. The kits will combine prototype hardware and software tools and are built on the company’s legendary ProLiant platform.

“This is the latest in a series of successive approximations,” says Kirk Bresniker, Chief Architect, Hewlett Packard Labs. “It's not an end state because we're never done improving.”

The dev kit does not exist in a vacuum. It is part of the Memory-Driven Computing continuum, which scales from tiny IoT devices all the way to exascale supercomputers that will dwarf today’s systems.

At the most basic level, the dev kits are ProLiant servers built on the Memory-Driven Computing architecture. That architecture’s modular, high-performance fabric will let users, in Bresniker’s words, “build for purpose with precision.”

As modules become available, users will be able to plug in many different kinds of microprocessors, accelerators, and memory technologies. The Memory-Driven Computing software tools will let customers’ advanced development teams combine these diverse elements in novel ways, because all of the modules communicate at the fastest possible speed: the speed of memory.

With such a prototype, a user could, for instance, build an AI-driven intelligent factory controller and then scale it seamlessly without changing a line of code. Users will also be able to build a smart-grid controller for millions of smart edge devices, or synthesize live and historical medical imaging into a haptic augmented reality (AR) experience.

“We've always said that Memory-Driven Computing is going to go from embedded to exascale,” says Bresniker. “We started out at the rack, and we have now worked from there to aisle and to data center. Now we're starting to drive the technology in the other direction, to drive out instead of up. Out towards the edge, out towards where all that data is going to live.”


Photo by Rebecca Lewington
