
Memory-driven computing: Dealing with today’s exponentially-increasing data

In late 2017, I wrote an article for the British Computer Society on the subject of Memory-Driven Computing. Given the pace of development of the architecture and its components, product announcements and documented case studies, I thought it would be appropriate to refresh the article with some of these latest milestone achievements.

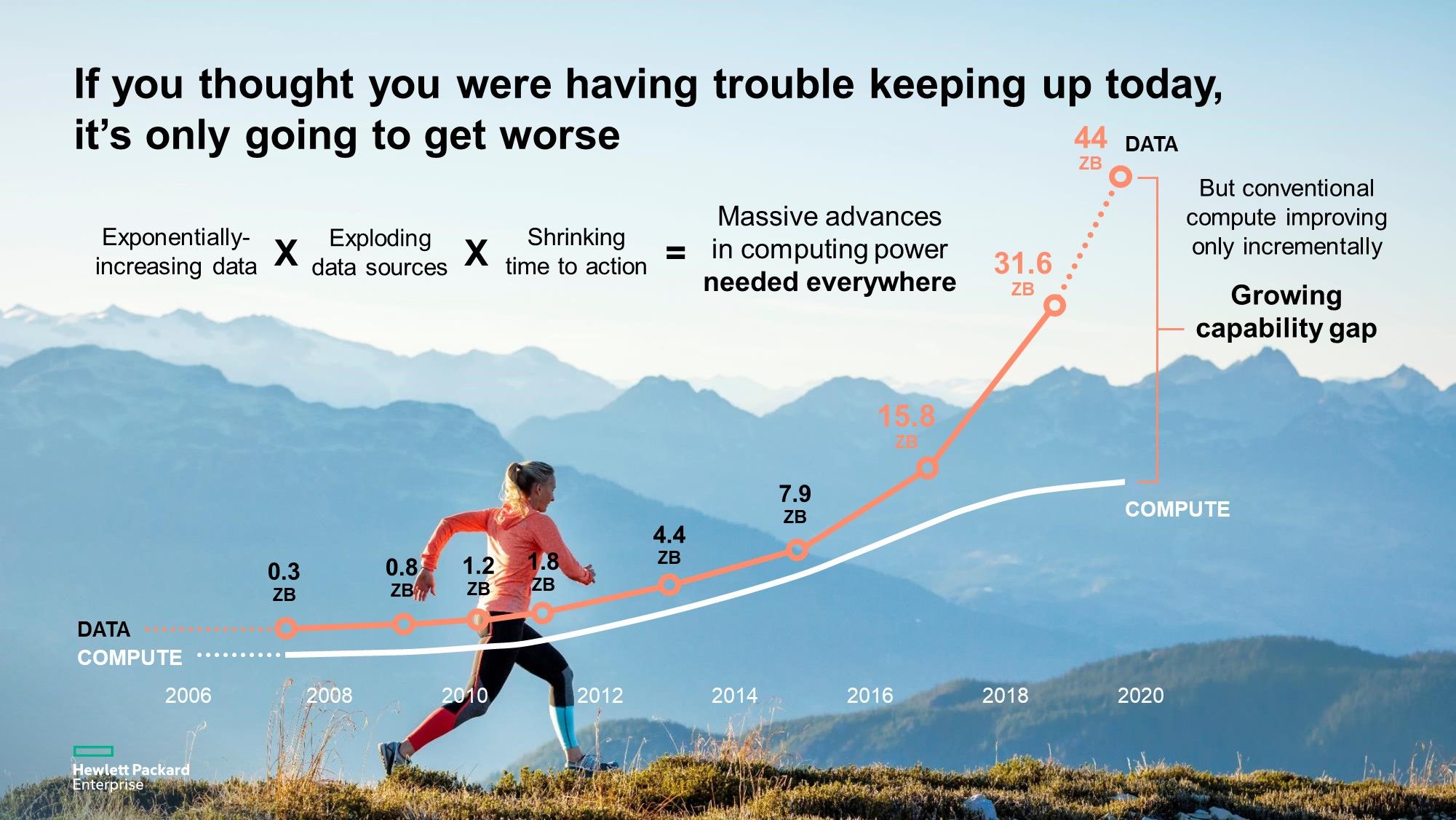

By 2020, around one hundred billion connected devices will generate data at a rate that far outpaces our ability to process, store, manage and secure it with today’s technologies. Simply put, our legacy systems won’t be able to keep up because the fundamental architecture of computing hasn’t changed in more than sixty years and will soon reach its physical and computational limits.

As much as 80% of the work done by today’s computers is devoted to shuffling information between separate tiers of memory and storage. Computers typically depend on several different data storage technologies simultaneously, each tier compensating for the limitations of the others.

And, as we move from data generated by “systems of record” such as bookkeeping, through “systems of engagement” such as social media, to the approaching and much bigger “systems of action” such as device-generated data from the internet of things (IoT), we face a reality that in many cases we cannot afford to bring the data back to the data center for analysis. By the time we have processed it, its value has expired. We may need to consider processing the data where it is created and bringing back the results of the analysis rather than the data itself, i.e. the actual created data lives and dies at the edge.
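As a rough illustration of bringing back results rather than raw data, here is a minimal Python sketch. The function name `summarise_at_edge` and the sample readings are hypothetical and chosen only for this example; a real edge deployment would use whatever analysis suits the workload.

```python
from statistics import mean


def summarise_at_edge(readings):
    """Reduce a batch of raw readings to a small summary record."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }


# Hypothetical raw sensor readings; these never leave the edge device.
raw_readings = [20.0, 21.0, 22.0, 21.0]

# Only this small summary would be sent back to the core or cloud.
summary = summarise_at_edge(raw_readings)
print(summary)
```

The raw batch can then be discarded locally, so the volume sent over the network depends on the size of the summary rather than on the number of readings.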

Memory-Driven Computing: more data, less time

There is still a need for large amounts of data to be processed in a timely manner, whether at the edge, at the core or in the cloud, and current technology and architecture are unable to achieve this.

Hewlett Packard Enterprise (HPE) believes that only by redesigning the computer from the ground up, with memory at the centre, will we be able to overcome existing performance limitations. HPE calls this architecture 'Memory-Driven Computing'. Instead of one general central processor reaching out to multiple data stores, data is held in large non-volatile memory pools at the centre, to be accessed by multiple task-specific processors.

Key features of Memory-Driven Computing are:

- Scalable, non-volatile memory pools for fast, persistent and virtually unlimited memory

- Photonics for greater throughput

- A memory-semantic fabric

- Energy efficiency, and

- High security built in

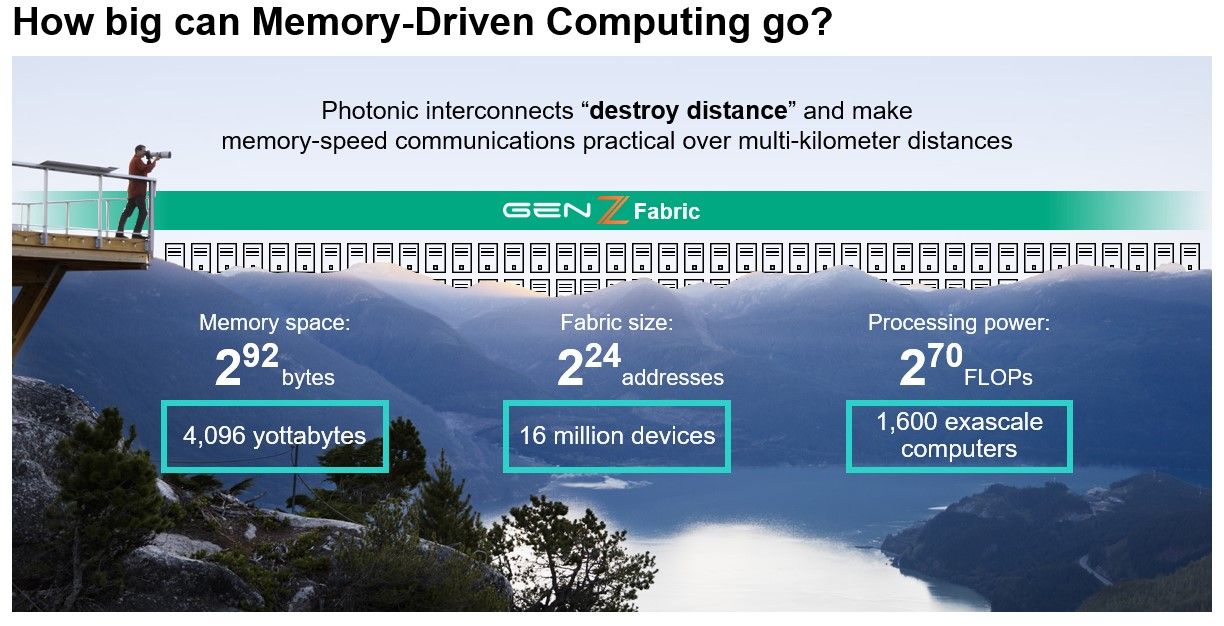

So how large can these non-volatile memory pools be?

Very large! … along with a correspondingly large memory fabric to enable interconnection with many microprocessors and accelerator types.

Memory-Driven Computing: memory-speed communications

A consortium was formed to solve the challenges associated with processing and analysing huge amounts of data in real time. The result is Gen-Z, a universal interconnect standard. Its core specification enables microchip designers and fabricators to begin developing products that use Gen-Z technology.

Simulations predict that this architecture would improve on current computing speeds by multiple orders of magnitude. New software programming tools have demonstrated execution speed improvements of up to 8,000 times on a variety of workloads. HPE expects to achieve similar results as it expands the capacity of the prototype with more nodes and memory.

Components such as Photonics, Fabric and SoC are already being integrated into the development paths of current products to provide a step change in their capabilities.

HPE has now introduced the HPE Superdome Flex (a result of our acquisition of SGI), which offers up to 48TB of in-memory capacity for production environments. For example, it has enabled advances in the field of genomics to achieve important results in Alzheimer’s research.

HPE has also made several acquisitions that will support and enhance the architecture further. And, at our recent June 2019 HPE Discover event, we showed that the architecture is picking up pace, and announced the forthcoming availability of a Memory-Driven Computing development kit, with which developers can find new ways of re-architecting their large data workloads and solve problems that cannot be solved within today’s limitations. The development kit will leverage many different kinds of microprocessors, accelerators and memory technologies.

The real gains, though, will come from understanding the full possibilities of the new architecture and changing the way you program to maximise its capabilities. To support and simplify this, we provide a developer toolkit that exposes familiar programming environments such as Linux and Portable Operating System Interface (POSIX) APIs, with programming languages such as C/C++ and Java.
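To give a flavour of what programming against memory rather than storage can look like through a familiar POSIX-style interface, here is a minimal sketch. It is not the HPE toolkit's API. It uses Python's standard `mmap` module, which wraps the operating system's memory-mapping call, and an ordinary temporary file stands in for a persistent memory region. The function `increment_counter` and the file name `counter.bin` are assumptions made for this example.

```python
import mmap
import os
import struct
import tempfile


def increment_counter(path):
    """Map a file into memory and update an 8-byte counter in place."""
    with open(path, "r+b") as f:
        # Map the first 8 bytes; reads and writes below are memory
        # accesses, not explicit read()/write() calls on the file.
        with mmap.mmap(f.fileno(), 8) as region:
            (value,) = struct.unpack_from("<Q", region, 0)
            struct.pack_into("<Q", region, 0, value + 1)
            region.flush()  # ask the OS to write the change back
            return value + 1


# An ordinary file stands in for a persistent memory region (assumption).
path = os.path.join(tempfile.mkdtemp(), "counter.bin")
with open(path, "wb") as f:
    f.write(struct.pack("<Q", 0))

first = increment_counter(path)
second = increment_counter(path)  # the earlier update has persisted
print(first, second)
```

The point of the sketch is the access pattern: the data structure is updated in place with loads and stores, and it is still there the next time the region is mapped, with no separate serialise-and-save step.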

So, in summary, if you’re concerned about how to handle and process ever-larger datasets, now or in the future, I would recommend you explore further how Memory-Driven Computing might provide a more powerful and cost-effective way to gain insights faster.

Dave Davies

Hewlett Packard Enterprise

twitter.com/HPE_UKI

linkedin.com/company/hewlett-packard-enterprise

hpe.com/uk
