
HPE Ezmeral simplifies data processing and analysis

Hardware-in-the-loop Simulation with HPE Ezmeral Data Fabric and MoSMB

Automotive manufacturers and suppliers generate and process mountains of data every day. These businesses use that data to improve the safety, performance, and user experience of their vehicles. They apply artificial intelligence/machine learning (AI/ML) techniques to process, manage, and analyze data from a multitude of sources. Ultimately, that data is leveraged to improve vehicle design, manufacturing processes, the buyer experience at the car dealership, the driver/passenger experience, and so on.

Beyond processing and analyzing all of this data for the purposes listed above, the realization of autonomous driving presents numerous additional challenges for automotive manufacturers and suppliers. It is imperative to quickly develop new and sophisticated concepts to meet consumer needs, address safety concerns, and remain relevant. Considering how rapidly research and development must happen, the technology powering those innovations is critical.

Millions of physical miles have to be driven. It is essential that all telemetry and sensor data is recorded and transferred to the data center. That data then needs to be indexed, processed, and labeled to use it for simulation, ML, and AI. Additionally, all data is required to be retained due to regulations and compliance.

Problem Statement/Current Situation

The requirements that the IT environment must handle for these types of businesses are massive. Not only do IT administrators need to figure out how to store the tremendous amounts of real-world data captured in the logs from the sensors mounted to the cars, they must also ensure that all that data can be uploaded and easily accessed across diverse environments. The environment must not only scale to petabytes, but it also must be capable of running data-intensive applications for processing and analyzing the test data. IT administrators need a platform that creates a single space to connect all their data and tools so they can process, analyze, and understand that data.

Customers are using data platforms, such as Hadoop implementations or scale-out file storage concepts (e.g., data lakes), to process, manage, and analyze their engineering data. The engineering teams need to be able to execute any type of analytic workflow on that data, wherever it is located, without also being burdened with how to access or manage the data.

In the engineering world, in-the-loop testing and process simulation are common procedures. For example, hardware-in-the-loop testing can be used to simulate sensors, actuators, and mechanical components to meet tight development schedules and to improve the effectiveness of testing. While process simulation software can help guide and minimize experimental research by using models that introduce approximations and assumptions that might not be covered by real data, these devices and software need to be calibrated or validated using existing sensor or engineering data.

Also, many customer environments are diverse. For example, the systems accessing the data could be Linux-based, while the in-the-loop stations are Windows-based. Parallel access to the engineering data is essential so that the various teams can collaborate on processing and understanding the data without creating multiple copies of the same data or data silos. Clearly, a solution is needed that will allow parallel access to the engineering data in these types of diverse environments.

Solution Components and Validation

In the HPE Fort Collins test lab, the HPE Solutions Engineering team built a data platform consisting of an HPE Ezmeral Data Fabric (formerly known as the MapR Data Platform) environment connected to an HPE Ezmeral Container Platform environment. (Both of these products are part of the HPE Ezmeral Software Portfolio, a collection of solutions that allow you to run, manage, control, and secure the apps, data, and IT that run your business—from edge to cloud.) The HPE Ezmeral Data Fabric is a scale-out, persistent storage layer that supports multiple use cases. The HPE Ezmeral Data Fabric simplifies sharing data generated from the edge using multiple protocols and APIs.

The HPE Ezmeral Container Platform was used to spin up containerized environments that deployed data-intensive applications for analyzing the data. The HPE Ezmeral Data Fabric in combination with the HPE Ezmeral Container Platform provides the foundation to support various automotive ML/AI use cases. This environment showed that HPE has a solution that addresses the customer’s need to access a globally distributed data store via multiple protocols, such as HDFS, NFS, Amazon S3, and SMB.

The in-the-loop stations were Windows clients that accessed the data platform using either the Amazon S3 or the SMB3 protocols. Data was ingested from the HPE Ezmeral Data Fabric to the testing stations by mounting network drives or using a web browser to access S3 buckets containing the data to be shared. The HPE Ezmeral Data Fabric was secured (option selected during install), and all hosts were joined to the same domain.

The Windows-based testing stations accessed the HPE Ezmeral Data Fabric using either Windows Explorer or a web browser. Different components were required for each type of connectivity, but having multiple options for sharing and accessing the data ensured that all authorized team members would be able to run simulations or re-process the data without troubling themselves with how to access it. The scenarios described demonstrate how the engineering data could easily be shared between colleagues in different cities or countries—with no additional software required on any Windows client.

The prerequisites for accessing data in the HPE Ezmeral Data Fabric using a web browser via the Amazon S3 protocol were:

- The HPE Ezmeral Data Fabric Object Store + HPE Ezmeral Data Fabric POSIX client were installed on a node in the HPE Ezmeral Data Fabric environment.

- Two JSON files were configured to be used by the object store (tenants.json and minio.json).

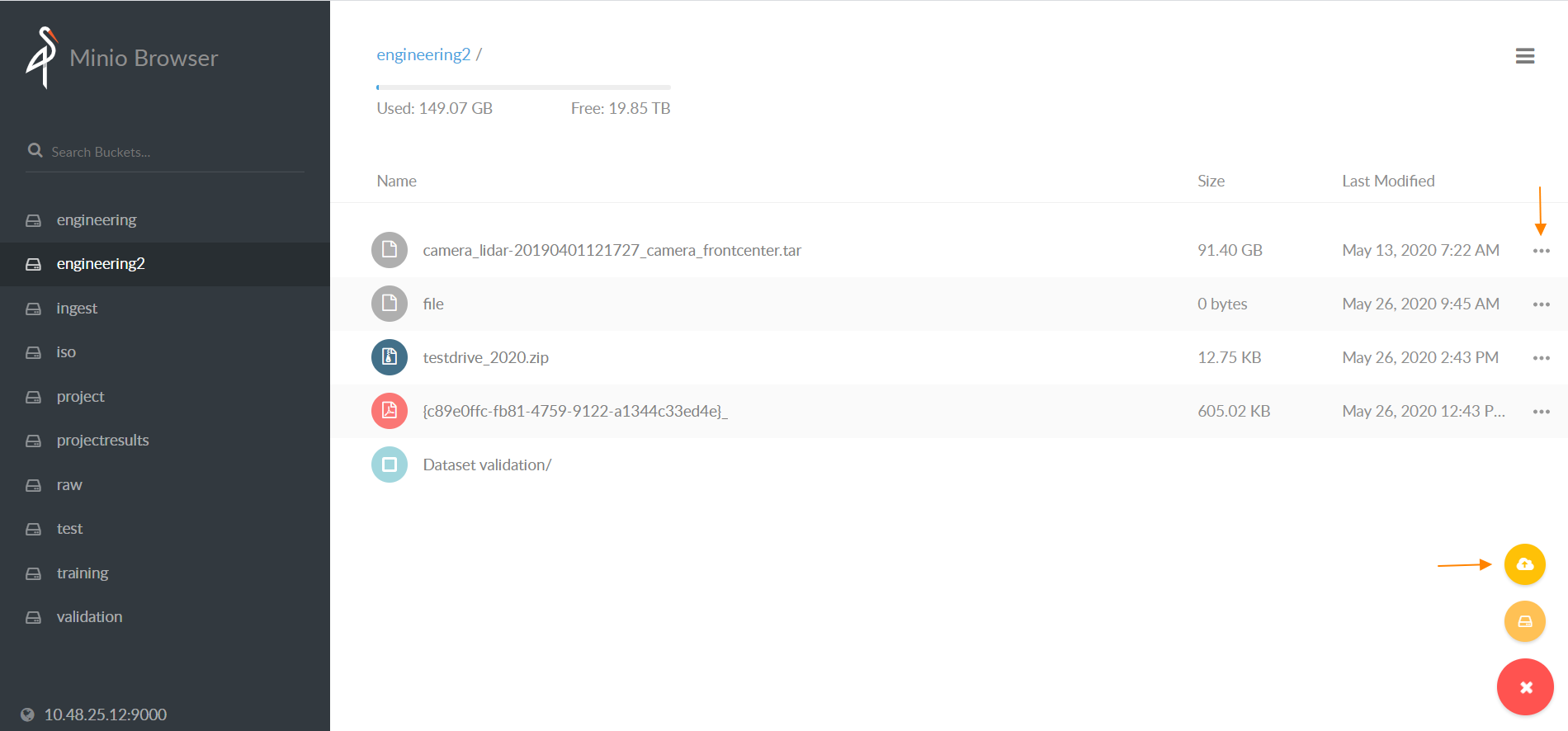

With the prerequisites completed, you could log in to a Windows testing station, launch your preferred web browser, enter the URL of the Minio Browser (provided when starting the HPE Ezmeral Data Fabric object store service), and log in using the credentials that were entered in the tenants.json file. You could then access the S3 buckets that you were authorized for and download or upload data. No additional software had to be installed on the Windows testing station; a web browser was all that was needed to access the data. This method of sharing the engineering data was best for quick access or sharing of smaller files.

Here’s what the Minio browser looks like:

To access data in the HPE Ezmeral Data Fabric using Windows Explorer via the SMB3 protocol, the prerequisites were:

- The HPE Ezmeral Data Fabric POSIX client was installed on two additional nodes (three total).

- MoSMB software (3rd-party product) was installed on the three nodes with the HPE Ezmeral Data Fabric POSIX client now installed.

- Multiple entries for the HPE Ezmeral Data Fabric fuse.conf file were updated.

- Round-robin DNS was configured for the three nodes.

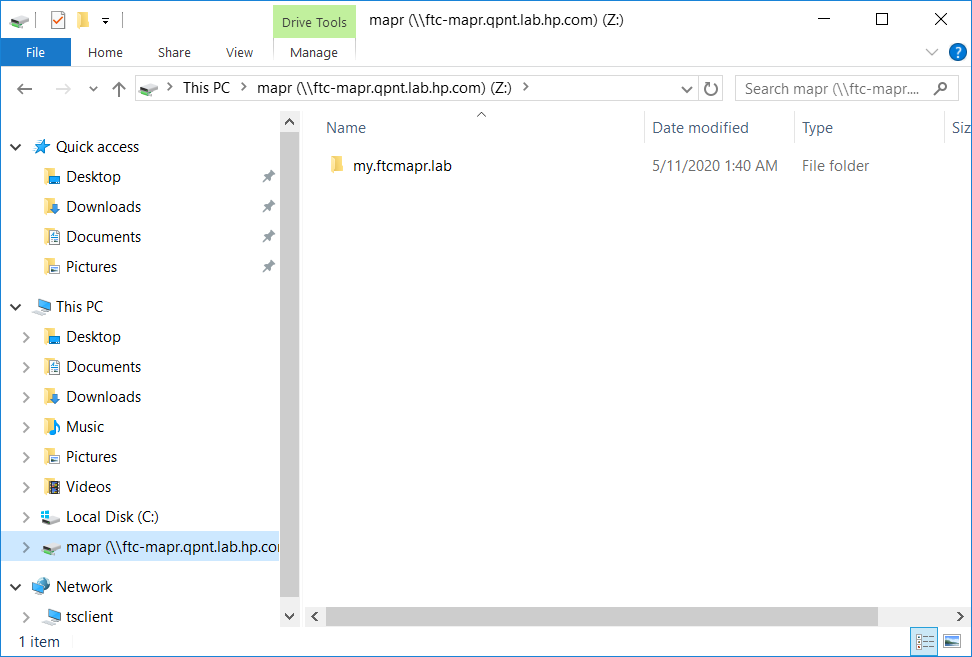

With all the prerequisites completed, a network drive could be mapped for the Windows test station using the round-robin DNS name. This was done to provide high availability: if one node wasn’t accessible, another node could handle requests from clients. Windows Explorer could then be used to access the HPE Ezmeral Data Fabric and download or upload data. As with the Minio browser, no additional software had to be installed on the Windows client. This method of sharing the engineering data is particularly well suited to larger files because the transfer is faster than with the S3 protocol.
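To illustrate why a round-robin DNS name helps with availability, here is a small sketch of what a client-side check could look like: resolve the shared name to all of its addresses, then pick the first node that accepts a TCP connection on the standard SMB port (445). The function names are made up for this illustration, and the probe is a plain TCP connect, not an SMB handshake.

```python
import socket


def resolve_all(hostname, port=445):
    """Return every distinct IP address the DNS name resolves to, in the order returned."""
    infos = socket.getaddrinfo(hostname, port, type=socket.SOCK_STREAM)
    addresses = []
    for _family, _type, _proto, _canonname, sockaddr in infos:
        ip = sockaddr[0]
        if ip not in addresses:
            addresses.append(ip)
    return addresses


def _tcp_probe(ip, port, timeout):
    """Return True if a TCP connection to (ip, port) succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((ip, port), timeout=timeout):
            return True
    except OSError:
        return False


def first_reachable(addresses, port=445, timeout=2.0, probe=_tcp_probe):
    """Return the first address that accepts a connection, or None if none do."""
    for ip in addresses:
        if probe(ip, port, timeout):
            return ip
    return None
```

With three nodes behind one name, `first_reachable(resolve_all("smb.example.com"))` (a hypothetical DNS name) would skip a node that is down and return the next one that responds.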

While there are multiple possibilities for connecting Windows-based testing stations to the HPE Ezmeral Data Fabric, how can we connect the Linux world with the Windows-based appliance?

We already had the HPE Ezmeral Data Fabric POSIX client installed on multiple nodes to enable using MoSMB software so that the Windows testing stations could connect to the HPE Ezmeral Data Fabric using the SMB3 protocol. By installing the HPE Ezmeral Data Fabric POSIX client on a Linux-based node, the Linux client was able to read and write data directly and securely to the HPE Ezmeral Data Fabric, similar to a Linux file system.

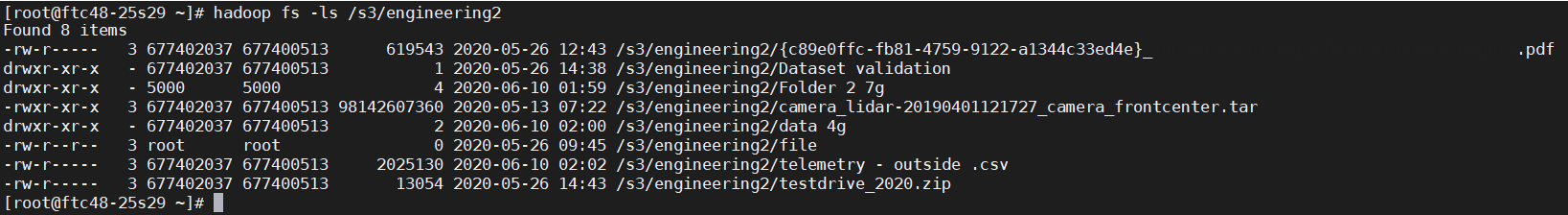

Hadoop API commands were used to interact with the same volumes that the Windows testing stations had accessed:

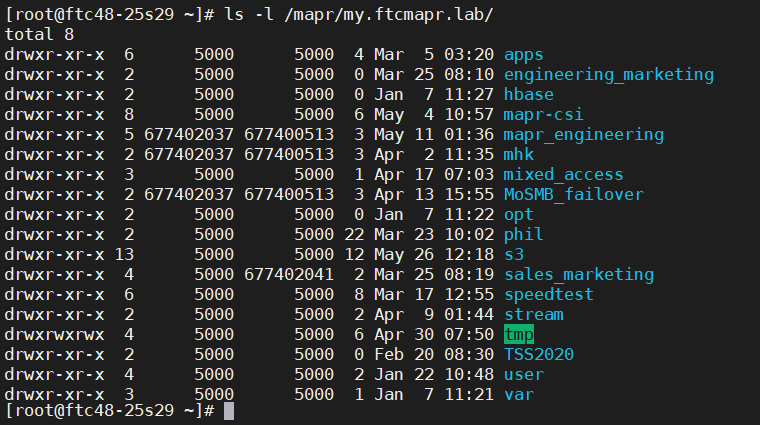
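Commands of this kind can also be scripted. The sketch below builds `hadoop fs` command lines in Python and runs them with `subprocess`; it assumes the `hadoop` client is on the PATH of the Linux node. Only the widely used `-ls` and `-put` subcommands are shown, and the paths in the usage note are hypothetical, not the volumes from the test lab.

```python
import subprocess


def hadoop_fs(*args):
    """Build the argument list for a `hadoop fs` command."""
    return ["hadoop", "fs", *args]


def list_cmd(path):
    """Command that lists the contents of a directory in the cluster file system."""
    return hadoop_fs("-ls", path)


def upload_cmd(local_path, remote_dir):
    """Command that copies a local file into a directory in the cluster file system."""
    return hadoop_fs("-put", local_path, remote_dir)


def run(argv):
    """Run a command and return its standard output; raises CalledProcessError on failure."""
    return subprocess.run(argv, check=True, capture_output=True, text=True).stdout
```

For example, `print(run(list_cmd("/testdata")))` would print the listing of a hypothetical `/testdata` volume.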

Again, because the HPE Ezmeral Data Fabric POSIX client was installed, volumes from the HPE Ezmeral Data Fabric were viewed as though they were directly mounted on this Linux client:

Now that members of the various teams could access the test data from either Windows-based or Linux-based clients, we can assume the analysts would then want to work on that data. We used the HPE Ezmeral Container Platform to deploy analytics tools for that purpose.

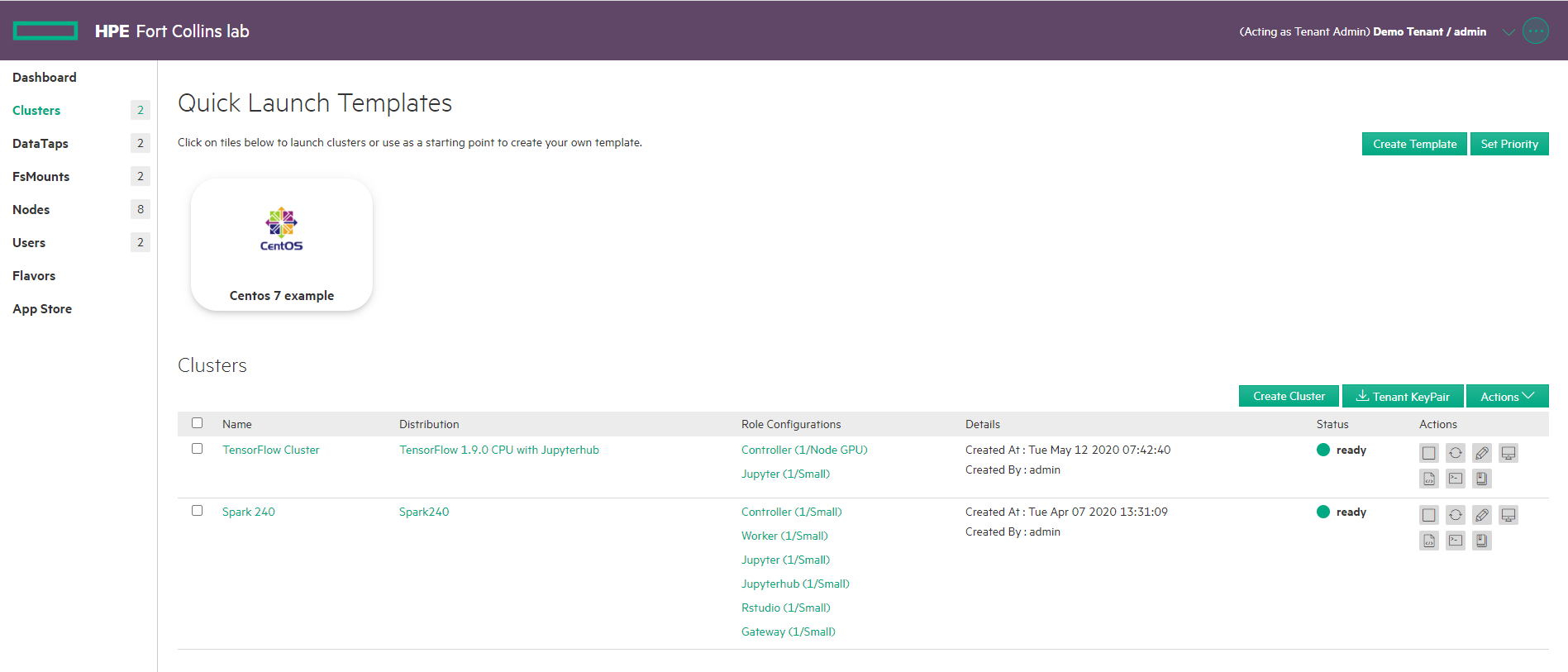

The process for deploying different clusters only required providing the login credentials for every host. Kubernetes hosts also required confirming the default storage selections (ephemeral and persistent) that the HPE Ezmeral Container Platform had made. The selected software was then installed, and the EPIC App Store was used to select what analytic tools would be deployed:

TensorFlow and Spark clusters were deployed for analyzing the test data:

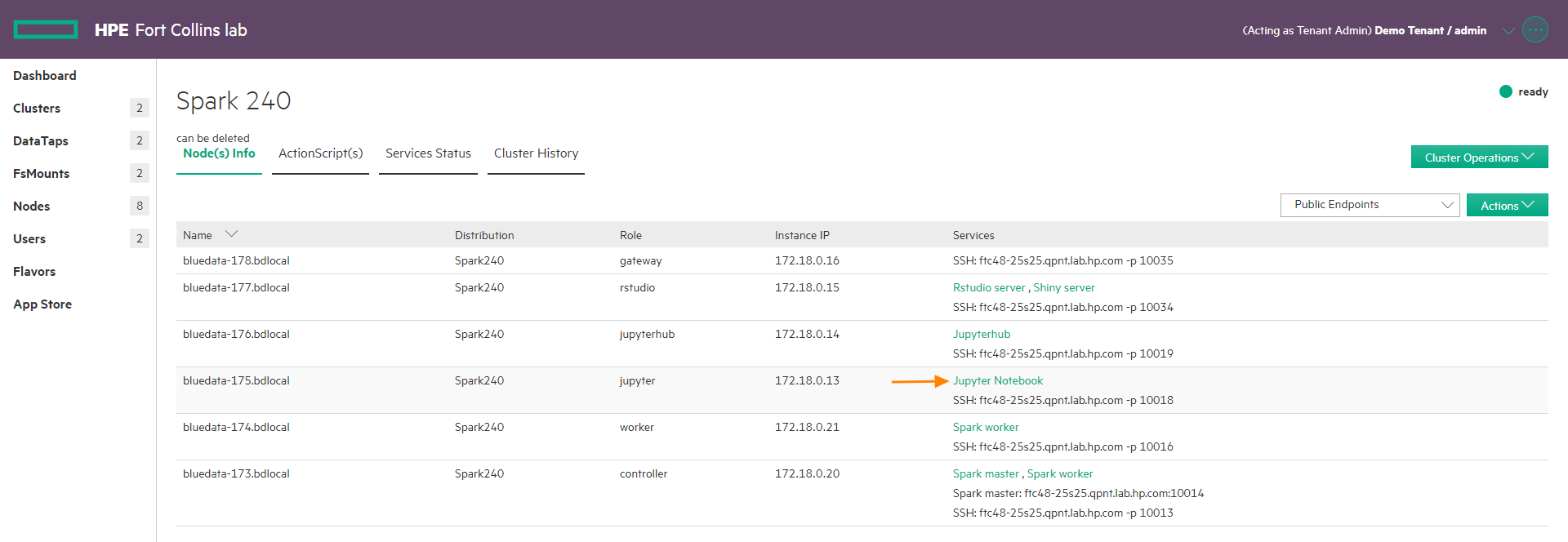

When viewing the Spark cluster, the Jupyter Notebook interface was launched using the highlighted link below:

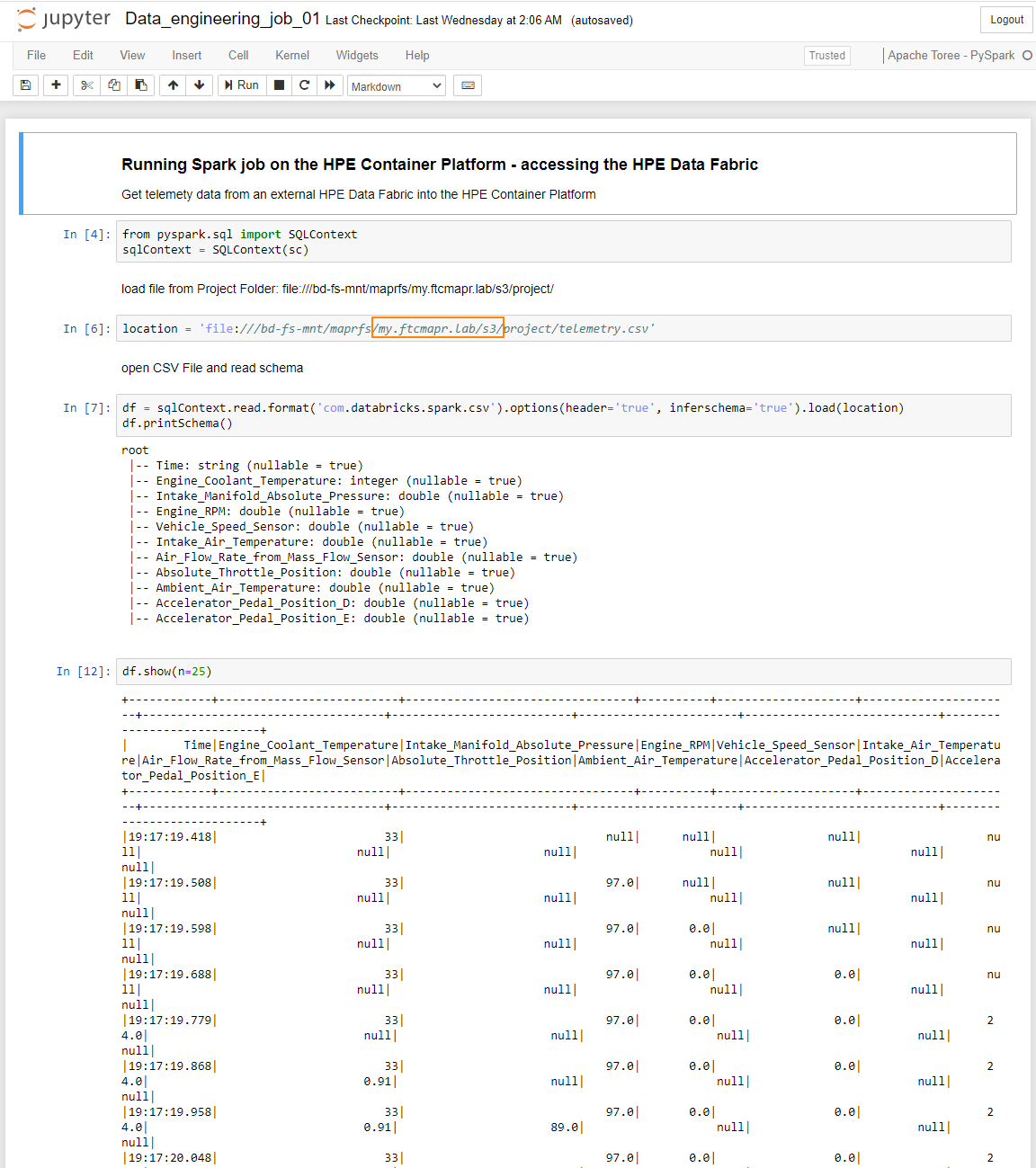

Data from the HPE Ezmeral Data Fabric—that was previously accessed by both Windows-based and Linux-based clients (path highlighted by the orange rectangle)—was processed using Jupyter Notebook:

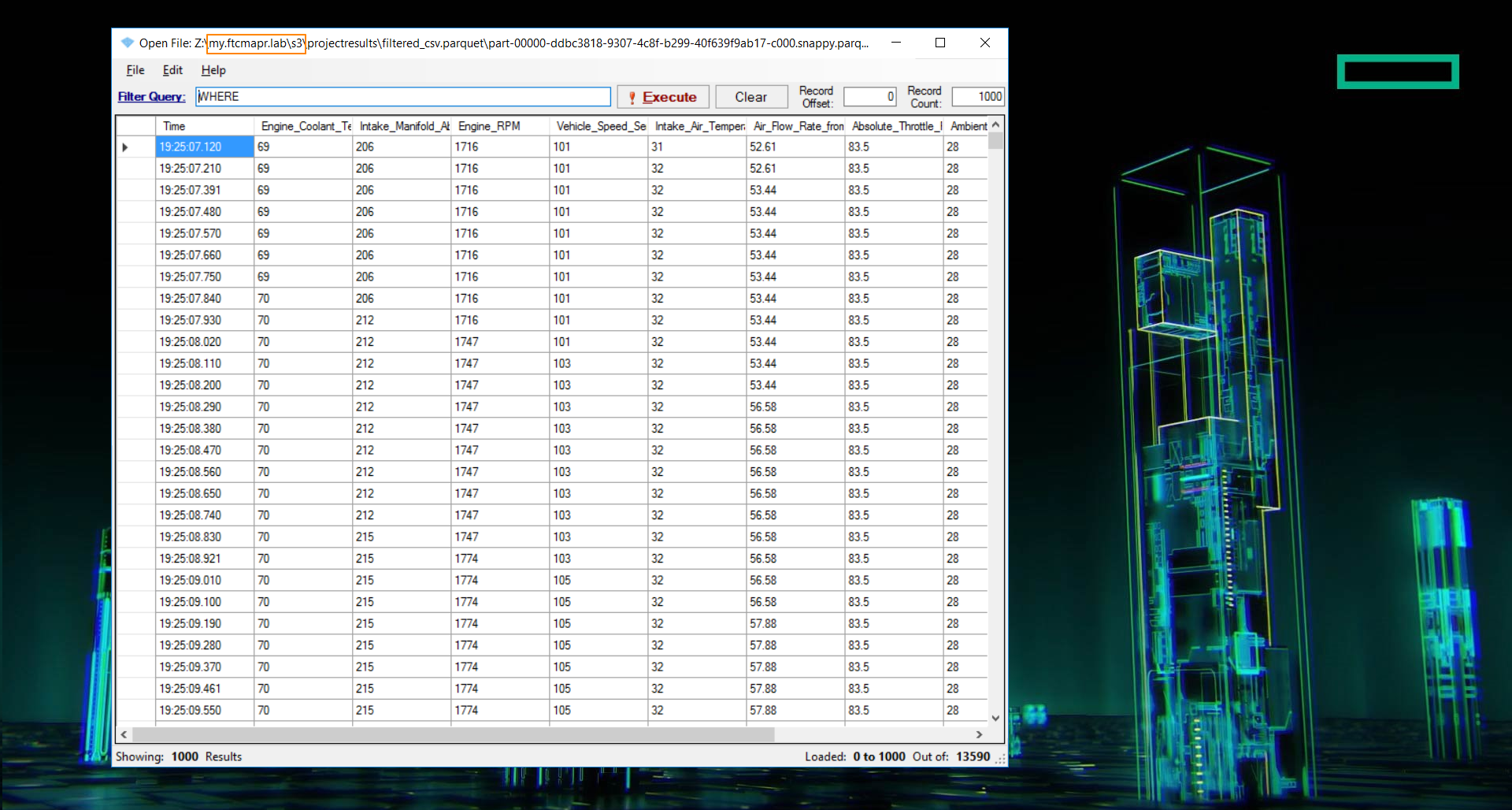

The filtered data, saved as a Parquet file, was viewed on a Windows testing station using a Parquet file viewer (note the path highlighted by the orange rectangle). The use case is that one team had analyzed the data and saved the results to a Parquet file, and another team then needed to analyze those results using a Windows-based application.

This demonstrates how the HPE Ezmeral Data Fabric was used to simplify sharing data generated from the edge with various teams in diverse environments using multiple protocols and APIs. The data could be shared with any user, at any location using their preferred method of accessing the data.

To analyze the data, the HPE Ezmeral Container Platform was used to spin up TensorFlow and Spark clusters. Data uploaded from a client was processed using a Jupyter Notebook deployed in the HPE Ezmeral Container Platform. A Parquet file viewer on another client was used to examine the output from the Jupyter Notebook.

Using the HPE Ezmeral Data Fabric with the HPE Ezmeral Container Platform enables various teams to process, analyze, manage, and store data—demonstrating that all types of analytics workflows can run on the data wherever it's located. The HPE Ezmeral Data Fabric in combination with the HPE Ezmeral Container Platform simplifies big data environments by creating a single space that connects all the data plus tools for processing, analyzing, and understanding your data.

To learn more, visit the HPE Ezmeral Software website or read more HPE Community Blogs for HPE Ezmeral Software.

Eric

Eric_J_Brown

Eric Brown is a Solutions Engineer for Hewlett Packard Enterprise (HPE) with more than 20 years of experience installing and configuring HPE Storage products. Eric has produced a variety of technical documentation to enable field engineers, customers, and pre-sales. These include product best practices and guidance for performing complex operations.
