Kubernetes Inter-Cluster Workload Transitions with Google Anthos on HPE Nimble Storage dHCI

In modern Kubernetes deployments it’s very common to treat clusters like cattle. This makes it easy to refresh the cluster, from both a software and a hardware standpoint. As the tools to provision and decommission clusters mature, this pattern is clearly becoming mainstream. One outcome of this paradigm is that we’re becoming more workload focused. The workload becomes the new pet, and we need intricate knowledge of how it’s deployed and managed throughout its lifecycle. The immediate challenge becomes how to transition workloads between clusters across refresh lifecycles. This blog post discusses how we can address inter-cluster workload transitions using the data services available in the HPE Volume Driver for Kubernetes FlexVolume Plugin.

The Data Gravity Challenge

Kubernetes inherently has the ability to declare workloads from a set of specifications. These specifications describe how to run a particular container image and its dependencies. Since the workloads are containerized, we know that a particular workload will run anywhere: in your private cloud, in the public cloud and on your laptop. Workloads can be considered mobile and portable until you start thinking about stateful workloads. Data has gravity. When you attach what is called a Persistent Volume (PV) in Kubernetes to your workload, your stateful application is anchored in that cluster. Depending on the persistent storage solution employed by the cluster, your data may even be trapped on the cluster nodes themselves. Ensuring data is stored outside of the cluster nodes is imperative when attempting to recover an application during a cluster outage.

HPE Nimble Storage Core Features

A highly differentiated feature of our HPE Nimble Storage arrays is the ability to transfer ownership of volumes between two discrete array groups using asynchronous replication. This allows higher-level constructs to employ these APIs to perform workload transitions. We’ve demonstrated numerous times in the past how to leverage the asynchronous replication between HPE Nimble Storage and HPE Cloud Volumes to enable Hybrid Cloud data services. Those capabilities are still in Tech Preview. What became Generally Available in version 3.0 of the HPE Volume Driver for Kubernetes FlexVolume Plugin is the ability to perform seamless inter-cluster workload transitions of stateful applications.

We’ve recorded a demo on how to perform the transition but let’s walk through what’s going to happen.

Transitioning workloads between HPE Nimble Storage dHCI systems

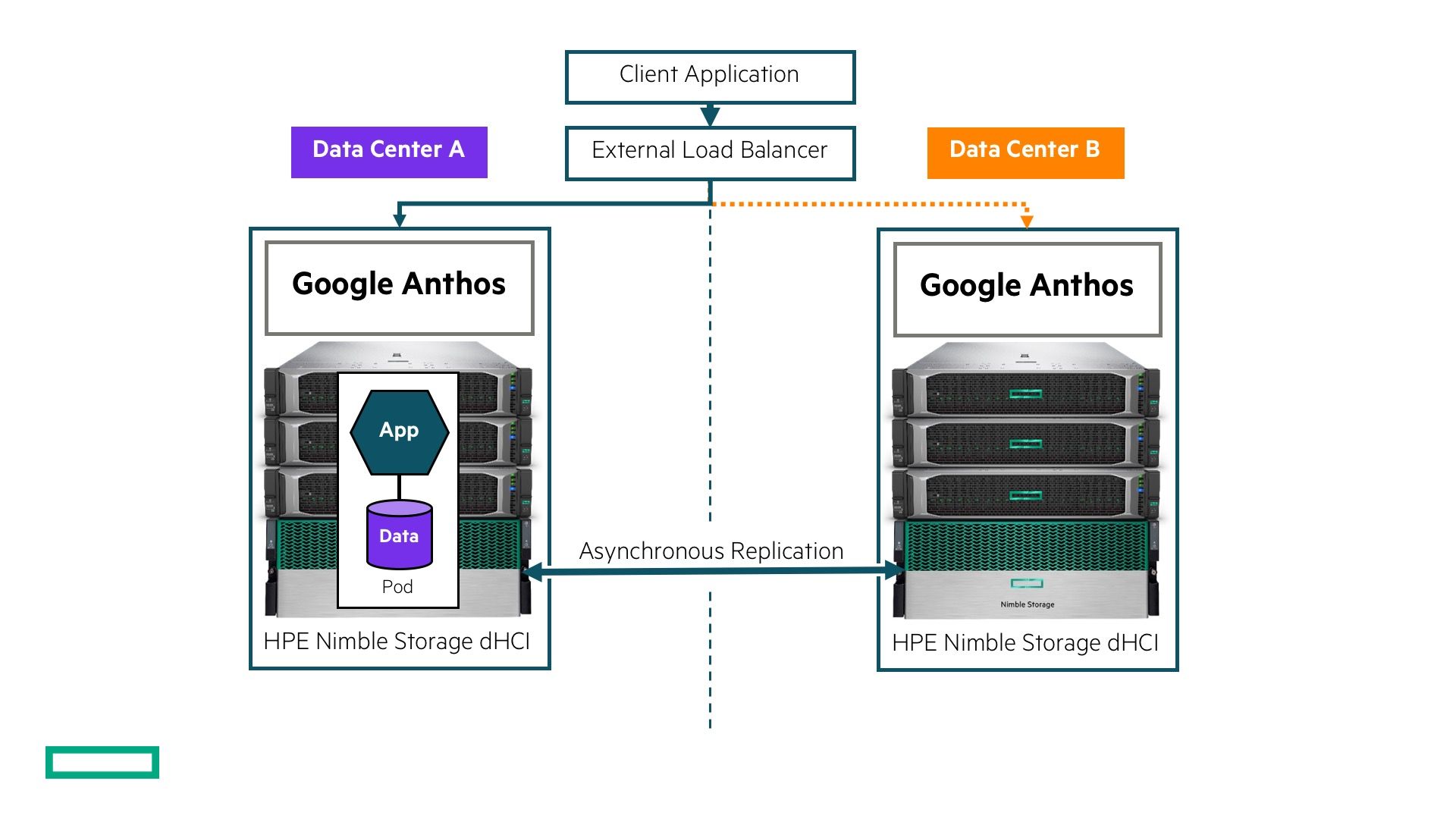

In this setup we have two HPE Nimble Storage dHCI systems running Google Anthos, deployed in two separate data centers. Each system is an island of its own, and the two details that tie them together are the asynchronous replication and the external load balancer. We’ll declare the workload in data center A. Once the application is up and responding, we transition it to data center B and later back to the origin in data center A, with integrity up to the last transaction.

Kubernetes API objects

The key new capability we introduced is called “syncOnUnmount”. It essentially ensures that the delta between the tip of the volume and the last snapshot gets transferred to its replication partner during workload teardown. This guarantees that both sides of the replication relationship are in sync when the workload has shut down.

The Kubernetes cluster in each data center needs a specific StorageClass API object crafted. Let’s walk through it.

    ---
    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: hpe-transition
    provisioner: hpe.com/nimble
    parameters:
      allowOverrides: importVol,forceImport,takeover,reverseRepl,description,perfPolicy
      protectionTemplate: "Workload-Transition"
      syncOnUnmount: "true"
      destroyOnRm: "false"

allowOverrides: As we require certain annotations on the Persistent Volume Claims (PVC), we need to allow the application owners to override certain StorageClass parameters.

protectionTemplate: This is the protection template that contains the replication partnership between the HPE Nimble Storage systems.

syncOnUnmount: Required as per the introduction of this section.

destroyOnRm: This parameter is set to “false” by default. Since the transition depends on it, setting it explicitly is considered good practice.
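The relationship between “allowOverrides” and the PVC annotations can be checked before anything is submitted to the cluster. The Python sketch below assumes, based on the examples in this post, that an annotation `hpe.com/<name>` overrides the StorageClass parameter `<name>`. The `disallowed_overrides` function and the `hpe.com/someOtherParam` key are made up for illustration, and the sketch says nothing about how the driver itself reacts to an override that isn’t allowed.

```python
ANNOTATION_PREFIX = "hpe.com/"


def disallowed_overrides(storage_class_parameters, pvc_annotations):
    """Return the hpe.com/ annotation keys that allowOverrides does not list."""
    allowed = {
        name.strip()
        for name in storage_class_parameters.get("allowOverrides", "").split(",")
        if name.strip()
    }
    return sorted(
        key
        for key in pvc_annotations
        if key.startswith(ANNOTATION_PREFIX)
        and key[len(ANNOTATION_PREFIX):] not in allowed
    )


# Parameters copied from the StorageClass in this post.
parameters = {
    "allowOverrides": "importVol,forceImport,takeover,reverseRepl,description,perfPolicy",
    "protectionTemplate": "Workload-Transition",
    "syncOnUnmount": "true",
    "destroyOnRm": "false",
}

annotations = {
    "hpe.com/description": "My volume to be transitioned",
    "hpe.com/perfPolicy": "SQL Server",
    "hpe.com/someOtherParam": "value",  # hypothetical key, not in allowOverrides
}

print(disallowed_overrides(parameters, annotations))
# ['hpe.com/someOtherParam']
```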

The initial creation of the PVC doesn’t contain anything out of the ordinary. We’ll just override the description and ensure we have an optimized Performance Policy.

    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: mariadb
      annotations:
        hpe.com/description: "My volume to be transitioned"
        hpe.com/perfPolicy: "SQL Server"
    spec:
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 16Gi
      storageClassName: hpe-transition

The workload we’re deploying is a MariaDB database. The application accessing the database externally through the load balancer is called “dping”. It inserts a row, commits and subsequently reselects the last row inserted. All the declarations and “dping” are available on GitHub.

Transitioning the workload once it has been declared has two distinct steps per cluster.

On Cluster A:

- Copy the PV name backing the PVC.

- Delete the source, including the PVC.

On Cluster B:

- Create the destination PVC, annotating the PVC of the volume to import.

- Create the workload on the destination.
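The four steps above can be sketched as an ordered list of `kubectl` invocations. The Python helper below only builds the commands and does not run them. The context names and manifest file names are placeholders, and it assumes the source manifest holds the workload together with its PVC while the destination workload manifest does not declare a PVC of its own.

```python
import shlex


def transition_plan(source_context, destination_context, pvc_name,
                    source_manifest, import_pvc_manifest,
                    destination_workload_manifest):
    """Return the kubectl invocations for one transition, in order."""
    source = ["kubectl", "--context", source_context]
    destination = ["kubectl", "--context", destination_context]
    return [
        # Cluster A: look up the PV name backing the PVC.
        source + ["get", "pvc", pvc_name, "-o", "jsonpath={.spec.volumeName}"],
        # Cluster A: delete the source, including the PVC.
        source + ["delete", "-f", source_manifest],
        # Cluster B: create the PVC annotated with the volume to import.
        destination + ["create", "-f", import_pvc_manifest],
        # Cluster B: create the workload on the destination.
        destination + ["create", "-f", destination_workload_manifest],
    ]


# All names below are placeholders for illustration.
plan = transition_plan(
    "cluster-a", "cluster-b", "mariadb",
    "mariadb.yaml", "mariadb-import-pvc.yaml", "mariadb-workload.yaml",
)
for command in plan:
    print(shlex.join(command))
```

The PV name returned by the first command has to be noted before the second command runs, since deleting the PVC removes the object it is read from.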

The destination PVC contains a few more annotations. The key “hpe.com/importVol” contains the actual HPE Nimble Storage volume name, which is derived from the origin StorageClass. The remaining keys ensure the volume will be taken over by the destination array.

    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: mariadb
      annotations:
        hpe.com/importVol: "hpe-transition-<UUID>.docker"
        hpe.com/description: "My volume to be transitioned"
        hpe.com/reverseRepl: "true"
        hpe.com/takeover: "true"
        hpe.com/forceImport: "true"
    spec:
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 16Gi
      storageClassName: hpe-transition

Transitioning the workload back to the origin location after the origin cluster is back online is simply repeating the process, starting on Cluster B.
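Since the destination PVC only differs by the volume name, it can be generated from the PV name copied on the origin cluster. The Python sketch below assumes the array volume name is the PV name with a “.docker” suffix, which is how the example annotation reads but is not otherwise confirmed here. `import_pvc` is a hypothetical helper, and the output is JSON, which `kubectl` accepts in place of YAML.

```python
import json


def import_pvc(pv_name, claim_name="mariadb", size="16Gi",
               storage_class="hpe-transition"):
    """Build the destination PVC for a volume created on the origin cluster."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {
            "name": claim_name,
            "annotations": {
                # Assumption: array volume name is the PV name plus ".docker".
                "hpe.com/importVol": f"{pv_name}.docker",
                "hpe.com/description": "My volume to be transitioned",
                "hpe.com/reverseRepl": "true",
                "hpe.com/takeover": "true",
                "hpe.com/forceImport": "true",
            },
        },
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
            "storageClassName": storage_class,
        },
    }


# "hpe-transition-example" is a placeholder, not a real PV name.
print(json.dumps(import_pvc("hpe-transition-example"), indent=2))
```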

Let’s get to the demo, shall we?

Summary

This is by far one of the most powerful capabilities the HPE Nimble Storage container integration provides. In the same manner, these constructs can be leveraged for semi-automatic disaster recovery (DR) scenarios. There will be a window of data loss as the replication is asynchronous, but that might be more desirable than a complete outage. We’ll discuss fully automatic DR scenarios once we’re shipping Peer Persistence (synchronous replication) support with the container integration. That’s another blog for another day.

Get started today!

We just shipped version 3.1 of the HPE Volume Driver for Kubernetes FlexVolume Plugin that brings support for HPE Cloud Volumes with both Amazon EKS and Azure AKS along with BYO Kubernetes, including Rancher. Today it’s really easy to get started with both Kubernetes and HPE storage solutions through the integration with Helm, the standard package manager for Kubernetes.

Recently we also published a demo on how to install the volume driver with Helm on HPE Nimble Storage dHCI using Google Anthos. Check it out!
