02-12-2020 12:34 AM - edited 02-12-2020 02:30 AM

VVol, any limit on connections?

Hello,

We had an incident yesterday where one ESXi host lost its connection to the Nimble array when a backup started (third-party software using vCenter snapshots). My co-worker who handled the incident logged a case with Nimble support, and the support guy said there were too many connections. My guess is that he was not used to VVols and was thinking of the VMFS connection limits, so I am curious what the good people following this forum think.

The ESXi host with the most connections shows 2504 in the initiator group view. For VMFS that would exceed the ESXi 6.5 limit of 2048 paths (4096 in 6.7), but it shouldn't be an issue for VVols, since VVols share the connection to the PE (protocol endpoint).
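
To make the arithmetic above concrete, here is a minimal shell sketch that compares a path count against the per-host limits quoted in this post (2048 on ESXi 6.5, 4096 on 6.7). The function names `path_limit_for` and `check_path_count` are hypothetical, and the limits are only the ones stated in this thread; check VMware's configuration maximums for your exact build. For VVols, the number to feed in is the ESXi path count, not the 2504 connections from the initiator group view, since the VVols are reached through the PE.

```sh
# Hypothetical helpers; limits are the figures quoted in this thread,
# not values read from the host.
path_limit_for() {
    case "$1" in
        6.5*) echo 2048 ;;
        6.7*) echo 4096 ;;
        *)    echo 0 ;;   # unknown version: this sketch has no limit for it
    esac
}

# Usage: check_path_count <esxi-version> <path-count>
# Returns 0 if within the limit, 1 if over it, 2 if the version is unknown.
check_path_count() {
    version=$1
    count=$2
    limit=$(path_limit_for "$version")
    if [ "$limit" -eq 0 ]; then
        echo "no known path limit for ESXi $version"
        return 2
    fi
    if [ "$count" -gt "$limit" ]; then
        echo "path count $count exceeds the ESXi $version limit of $limit"
        return 1
    fi
    echo "path count $count is within the ESXi $version limit of $limit"
}
```

For example, `check_path_count 6.5 2504` prints `path count 2504 exceeds the ESXi 6.5 limit of 2048`, which is the VMFS-style reading the support engineer may have applied; `check_path_count 6.7 2504` reports it as within the 4096 limit.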

The System Limits section of the Nimble Administration Guide doesn't mention any limit on VVol connections. Is it unlimited? Does anyone know what number has been tested in QA, or the highest you have seen?

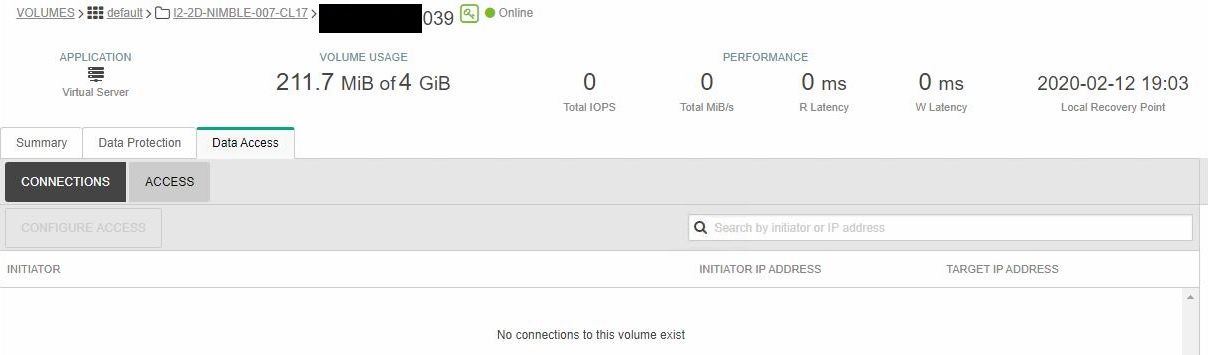

The top host under Data Access in the Nimble array GUI:

02-12-2020 10:10 AM

Re: VVol, any limit on connections?

Hello again, k_erik!

What matters more is the path and device limit on the ESXi host. The PE is a single device on ESXi. You can look up the PE serial number, then check how many paths exist on the ESXi host and how many of those go to the PE: `esxcfg-mpath -L | wc -l` and `esxcfg-mpath -L -d eui.serial-num-of-PE | wc -l`.
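
The two counts from the reply can also be taken from a single listing. This is a minimal sketch, assuming each line of `esxcfg-mpath -L` output describes one path and includes the device identifier (for the PE, its `eui.` name), so a fixed-string grep can pick out the PE's paths instead of running a second `-d` query. The function name `pe_path_summary` is hypothetical; `eui.serial-num-of-PE` is the placeholder from the reply.

```sh
# Hypothetical helper: summarise an `esxcfg-mpath -L` listing read from stdin.
# Assumes one path per line and that each line names its device.
# Usage on the host: esxcfg-mpath -L | pe_path_summary eui.serial-num-of-PE
pe_path_summary() {
    pe_id=$1
    listing=$(cat)
    if [ -z "$listing" ]; then
        total=0
    else
        # tr strips the padding some wc implementations add
        total=$(printf '%s\n' "$listing" | wc -l | tr -d ' ')
    fi
    # grep -c prints 0 but exits 1 on no match; "|| true" keeps set -e callers alive
    pe=$(printf '%s\n' "$listing" | grep -c -F -- "$pe_id" || true)
    echo "total_paths=$total pe_paths=$pe"
}
```

Reading the listing from stdin, rather than calling `esxcfg-mpath` inside the function, keeps the sketch testable off-host against saved output.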

If you'd like us to look up your case with support, we will need at least a case number or your array serial. Feel free to post it or PM it.

--m

02-12-2020 11:25 AM - edited 02-12-2020 11:36 AM

Re: VVol, any limit on connections?

Hello again!

Thanks. The first command returned 25 and the second returned 8 for each of the arrays, so we are safely within the limits there.

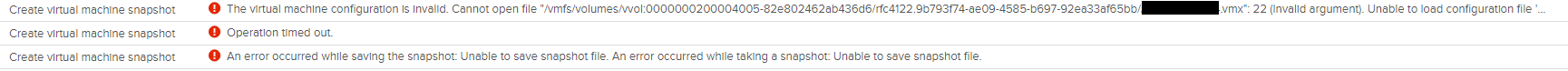

We hit the issue again today on a different ESXi host. It seems that the ESXi host running the VM is not able to lock the VVol (see the vvold.log errors further down). The Nimble array GUI shows no connections to the VVol.

Here is what we typically see in vCenter when this issue occurs.

On the ESXi host there are lots of messages trying to get a user lock.

```
[root@esxhost:/var/log] cat vvold.log | grep 7a4781163fa0
2020-02-12T18:32:04.102Z info vvold[2099864] [Originator@6876 sub=Default] Came to SI::BindVirtualVolume: esxContainerId 00000002-0000-4005-82e8-02462ab436d6 VVol Id rfc4122.38319541-e9bc-4b91-baa3-7a4781163fa0 bindType Normal (isConfigVvol: true)
2020-02-12T18:34:50.674Z info vvold[11390100] [Originator@6876 sub=Default] Came to SI::BindVirtualVolume: esxContainerId 00000002-0000-4005-82e8-02462ab436d6 VVol Id rfc4122.38319541-e9bc-4b91-baa3-7a4781163fa0 bindType Normal (isConfigVvol: true)
2020-02-12T18:35:50.744Z info vvold[5618503] [Originator@6876 sub=Default] Came to SI::BindVirtualVolume: esxContainerId 00000002-0000-4005-82e8-02462ab436d6 VVol Id rfc4122.38319541-e9bc-4b91-baa3-7a4781163fa0 bindType Normal (isConfigVvol: true)
2020-02-12T18:36:50.818Z info vvold[11390067] [Originator@6876 sub=Default] Came to SI::BindVirtualVolume: esxContainerId 00000002-0000-4005-82e8-02462ab436d6 VVol Id rfc4122.38319541-e9bc-4b91-baa3-7a4781163fa0 bindType Normal (isConfigVvol: true)
2020-02-12T18:37:50.885Z info vvold[11390068] [Originator@6876 sub=Default] Came to SI::BindVirtualVolume: esxContainerId 00000002-0000-4005-82e8-02462ab436d6 VVol Id rfc4122.38319541-e9bc-4b91-baa3-7a4781163fa0 bindType Normal (isConfigVvol: true)
2020-02-12T18:38:33.587Z info vvold[2101649] [Originator@6876 sub=Default] QueryVirtualVolumeInt vvolUuid = rfc4122.38319541-e9bc-4b91-baa3-7a4781163fa0
2020-02-12T18:38:33.587Z info vvold[2101649] [Originator@6876 sub=Default] SI:QueryUuidForFriendlyName uuid:rfc4122.38319541-e9bc-4b91-baa3-7a4781163fa0 name:*snip* containerId:00000002-0000-4005-82e8-02462ab436d6 cached:false
2020-02-12T18:38:50.957Z info vvold[11390069] [Originator@6876 sub=Default] Came to SI::BindVirtualVolume: esxContainerId 00000002-0000-4005-82e8-02462ab436d6 VVol Id rfc4122.38319541-e9bc-4b91-baa3-7a4781163fa0 bindType Normal (isConfigVvol: true)
2020-02-12T18:39:51.149Z info vvold[2099799] [Originator@6876 sub=Default] Came to SI::BindVirtualVolume: esxContainerId 00000002-0000-4005-82e8-02462ab436d6 VVol Id rfc4122.38319541-e9bc-4b91-baa3-7a4781163fa0 bindType Normal (isConfigVvol: true)
2020-02-12T18:40:51.218Z info vvold[2099821] [Originator@6876 sub=Default] Came to SI::BindVirtualVolume: esxContainerId 00000002-0000-4005-82e8-02462ab436d6 VVol Id rfc4122.38319541-e9bc-4b91-baa3-7a4781163fa0 bindType Normal (isConfigVvol: true)
2020-02-12T18:40:54.052Z info vvold[11390069] [Originator@6876 sub=Default] VVolDevice (3) rfc4122.38319541-e9bc-4b91-baa3-7a4781163fa0, SC: 00000002-0000-4005-82e8-02462ab436d6 Bind state (233496239,200129248 (unknown:true)) Bind info (,) Device Open Count 1
2020-02-12T18:40:54.052Z info vvold[11390100] [Originator@6876 sub=Default] Enter VVolUnbindManager::UnbindDevice vvolID:rfc4122.38319541-e9bc-4b91-baa3-7a4781163fa0 runningThreadCount:1
2020-02-12T18:40:54.052Z info vvold[11390100] [Originator@6876 sub=Libs] 11390100:VVOLLIB : VVolLib_UserLockVVol:9605: Re-trying user lock on VVol rfc4122.38319541-e9bc-4b91-baa3-7a4781163fa0 (curTime:15411080 startTime:15411080 timeoutSec:60)
2020-02-12T18:40:55.054Z info vvold[11390100] [Originator@6876 sub=Libs] 11390100:VVOLLIB : VVolLib_UserLockVVol:9605: Re-trying user lock on VVol rfc4122.38319541-e9bc-4b91-baa3-7a4781163fa0 (curTime:15411081 startTime:15411080 timeoutSec:60)
2020-02-12T18:40:56.056Z info vvold[11390100] [Originator@6876 sub=Libs] 11390100:VVOLLIB : VVolLib_UserLockVVol:9605: Re-trying user lock on VVol rfc4122.38319541-e9bc-4b91-baa3-7a4781163fa0 (curTime:15411082 startTime:15411080 timeoutSec:60)
2020-02-12T18:40:57.058Z info vvold[11390100] [Originator@6876 sub=Libs] 11390100:VVOLLIB : VVolLib_UserLockVVol:9605: Re-trying user lock on VVol rfc4122.38319541-e9bc-4b91-baa3-7a4781163fa0 (curTime:15411083 startTime:15411080 timeoutSec:60)
2020-02-12T18:40:58.060Z info vvold[11390100] [Originator@6876 sub=Libs] 11390100:VVOLLIB : VVolLib_UserLockVVol:9605: Re-trying user lock on VVol rfc4122.38319541-e9bc-4b91-baa3-7a4781163fa0 (curTime:15411084 startTime:15411080 timeoutSec:60)
2020-02-12T18:40:59.060Z info vvold[11390100] [Originator@6876 sub=Libs] 11390100:VVOLLIB : VVolLib_UserLockVVol:9605: Re-trying user lock on VVol rfc4122.38319541-e9bc-4b91-baa3-7a4781163fa0 (curTime:15411085 startTime:15411080 timeoutSec:60)
2020-02-12T18:41:00.061Z info vvold[11390100] [Originator@6876 sub=Libs] 11390100:VVOLLIB : VVolLib_UserLockVVol:9605: Re-trying user lock on VVol rfc4122.38319541-e9bc-4b91-baa3-7a4781163fa0 (curTime:15411086 startTime:15411080 timeoutSec:60)
```
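
To see how many bind attempts and lock retries a single VVol accumulates, a small filter over vvold.log can summarise lines like the ones shown above. This is a minimal sketch that matches only the message texts visible in this excerpt (`SI::BindVirtualVolume` and `Re-trying user lock ... (curTime:N startTime:N timeoutSec:N)`); the function name `vvol_lock_summary` is hypothetical, and other ESXi builds may word these messages differently.

```sh
# Hypothetical helper: summarise vvold.log activity for one VVol.
# Usage: vvol_lock_summary /var/log/vvold.log 7a4781163fa0
vvol_lock_summary() {
    log=$1
    vvol=$2
    # "|| true" because grep -c exits 1 (while printing 0) when nothing matches
    binds=$(grep -F -- "$vvol" "$log" | grep -c 'SI::BindVirtualVolume' || true)
    retries=$(grep -F -- "$vvol" "$log" | grep -c 'Re-trying user lock' || true)
    # curTime, startTime and timeoutSec from the most recent retry line, if any
    last=$(grep -F -- "$vvol" "$log" |
        sed -n 's/.*curTime:\([0-9]*\) startTime:\([0-9]*\) timeoutSec:\([0-9]*\).*/\1 \2 \3/p' |
        tail -n 1)
    if [ -n "$last" ]; then
        set -- $last   # $1=curTime $2=startTime $3=timeoutSec
        echo "binds=$binds lock_retries=$retries waited=$(($1 - $2))s timeout=${3}s"
    else
        echo "binds=$binds lock_retries=$retries"
    fi
}
```

Run against only the lines quoted in this post, it would print `binds=8 lock_retries=7 waited=6s timeout=60s`: the excerpt shows the bind being re-issued roughly once a minute, and the unbind thread retrying the user lock about once a second against a 60-second timeout.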

Since we are able to access the VMs from other hosts, we logged a case with VMware. We might log a case with Nimble support later, but for now we are focusing our efforts on the VMware case.

02-12-2020 11:34 AM

Re: VVol, any limit on connections?

Alright, from the limited information available, this doesn't look like a connection problem on the host side. Ideally we would coordinate with the array side as well.

The snapshot fails because the VM appears to be "invalid". Is it already in that "invalid" state before the snapshot is attempted?

When you decide to follow up with Nimble support, please make sure the ESXi logs are retained and passed on to them.

02-12-2020 11:58 AM

Re: VVol, any limit on connections?

It was running fine before the snapshot was attempted; it only becomes invalid after the snapshot.

OK, I will log a case with Nimble support as well now.