
06-19-2018 11:12 AM


upgrading physical storage used by StoreVirtual VSA??

HPE tech support has not been helpful in my quest for understanding how to upgrade/increase StoreVirtual VSA physical storage. In other words, HPE tech support has not helped at all.

We have two ESX hosts, each with 11 x 600GB SAS disks, running StoreVirtual 12.6. Everything is in Network RAID 10.

Each ESX host has one VSA and a FOM is running on separate storage.

My understanding is that one way to increase storage is to replace all the 600GB disks with 1TB or 2TB SATA disks depending on costs.

What I have thought of so far and would like assistance or validation that I am on the right track planning-wise:

I thought about it for a while, and this seems to me to be the best process and procedure. Please add any notes, comments, or improvements as you see fit:

How should it be revised and improved??


06-19-2018 11:57 AM


Tom:

I think you will have problems with your procedure, as you are missing some steps in between, so let's run through one way you can do this:

1. Make sure things are working properly first using the CMC (install and update it if you need to, on a separate machine such as a laptop or a physical server running Windows). Also check your VSA licenses to make sure you are not going to surpass the licensed amount. You can surpass it, but you'll only be able to manage up to the total amount of the licenses.

2. Confirm, by checking the vsa VM settings, that the VSA's are either using vmdk's or RDM's and make note of this.

3. Confirm that your FOM is running and that there are 3 "managers" running (use the CMC for this).

4. Evacuate all VM's from the first host.

5. At this point you have a few options. The cleanest would likely be to remove the VSA running on the server you cleared off from the cluster, via the CMC. You could also treat it as a failed disk scenario.

6. Assuming you remove the vsa from the cluster, all of your volumes should move from NR10 to NR0. If you don't remove it from the cluster, the disks will simply go into degraded mode when you shutdown the vsa.

7. Shut down the VSA, shut down the ESXi server, replace the drives.

8. Turn on the server, assuming it is a ProLiant, run the system utilities or intelligent provisioning to get into the SSA/ACU. Rebuild a raid array and logical drive(s)

9. Allow ESXi to boot back up.

10. Modify the settings of the VSA to either include the new logical drive as an RDM, or create a datastore on the ESXi host and create new vmdk's for the VSA.

11. Boot up the VSA, check the CMC for when it is back up and running.

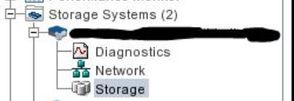

12. Go to the Storage section in the CMC for that host and rebuild the RAID.

13. Add the node back into the cluster (if you removed it), otherwise once the raid is rebuilt, the other node should start replicating data to it once it is running "normal"

14. Wait for all volumes to be normal and running NR10, complete the same process for node 2.

This could take some time, depending upon the amount of data you have.
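On step 3, the reason for checking that all three managers are running can be shown with a little arithmetic. The sketch below assumes the management group needs a simple majority of its managers online to keep quorum; the function names are made up for illustration and are not part of any HPE tool.

```python
def quorum(managers: int) -> int:
    """Smallest number of managers that forms a majority."""
    return managers // 2 + 1


def keeps_quorum(total_managers: int, offline: int) -> bool:
    """True if the managers still online form a majority."""
    return (total_managers - offline) >= quorum(total_managers)


# Two VSA managers plus the FOM, as in step 3.
total = 3
print(quorum(total))           # majority needed out of 3
print(keeps_quorum(total, 1))  # one VSA shut down for the disk swap
print(keeps_quorum(total, 2))  # one VSA shut down while the FOM is also down
```

Under that assumption, taking one VSA offline is safe only while the other VSA and the FOM both stay up, which is why the FOM should be verified before any host is shut down.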

HP Master ASE, Storage, Servers, and Clustering

MCSE (NT 4.0, W2K, W2K3)

VCP (ESX2, Vi3, vSphere4, vSphere5, vSphere 6.x)

RHCE

NPP3 (Nutanix Platform Professional)


06-19-2018 12:04 PM


Oh, that is so much better, you are a genius. :) :)

Thank you. At least I was sort of on the right track.

I will copy this and think about it and figure out my questions before replying again.

I thought earlier it would make more sense to reinstall the VSA one by one, I know I need some clarification on step #10.

I know for sure I need to know which parts of this procedure I can and can not get HPE support for.

Thank you, Tom


06-22-2018 05:56 AM


Hello,

Thank you again for replying.

I reviewed all your comments and put in some questions, if you or someone else is willing and able to reply.

I underlined my important parts that I would like to know more about.

1. Make sure things are working properly first using the CMC (install and update it if you need to, on a separate machine such as a laptop or a physical server running Windows). Also check your VSA licenses to make sure you are not going to surpass the licensed amount. You can surpass it, but you'll only be able to manage up to the total amount of the licenses.

2. Confirm, by checking the vsa VM settings, that the VSA's are either using vmdk's or RDM's and make note of this.

they use vmdks, would rdms be better?? i don't have any experience with rdms.

3. Confirm that your FOM is running and that there are 3 "managers" running (use the CMC for this).

4. Evacuate all VM's from the first host.

5. At this point you have a few options. The cleanest would likely be to remove the vsa running on the server you cleared off from the cluster, via the CMC. You could also treat it as a failed disk scenario.

read up on how to do this part,

here, do you mean uninstalling the VSA from vSphere or just removing the VSA from the CMC?? i *think* you mean the latter.

6. Assuming you remove the vsa from the cluster, all of your volumes should move from NR10 to NR0. If you don't remove it from the cluster, the disks will simply go into degraded mode when you shutdown the vsa.

this means no network raid

7. Shut down the VSA, shut down the ESXi server, replace the drives.

8. Turn on the server, assuming it is a ProLiant, run the system utilities or intelligent provisioning to get into the SSA/ACU. Rebuild a raid array and logical drive(s)

it IS a Proliant.

9. Allow ESXi to boot back up.

10. Modify the settings of the VSA to either include the new logical drive as an RDM, or create a datastore on the ESXi host and create new vmdk's for the VSA.

this means going back into the CMC and reconfiguring the removed VSA?? or recreating the VSA??

and recreating the vmdks in the CMC?? this is while the VSA has not yet been started??

11. Boot up the VSA, check the CMC for when it is back up and running.

this means wait for the VSA appliance to start??

12. Go to the Storage Section in the CMC for that host and rebuild the raid.

read up on how to do this part

just tell the system again it is NR10??

13. Add the node back into the cluster (if you removed it), otherwise once the raid is rebuilt, the other node should start replicating data to it once it is running "normal"

read up on how to do this part

just tell the system again it is NR10??

14. Wait for all volumes to be normal and running NR10, complete the same process for node 2.

do one node per weekend??

or per day??

This could take some time, depending upon the amount of data you have.

each node is 11 x 600GB SAS drive in RAID5 now, they would be 11 x 2TB in RAID5 if I replaced all the SAS disks

raid rebuild would not take a long time

resync would occur at what rate???

then let things run quietly, redo the process for node 2, perhaps the following weekend or day??
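A rough sketch of the capacity arithmetic behind those numbers. It assumes RAID 5 gives up one disk's worth of space to parity, that Network RAID 10 keeps two copies of every volume, and that each node in a cluster only contributes as much as the smallest node. The last point is an assumption worth confirming in the StoreVirtual documentation. Sizes are decimal GB and ignore formatting overhead.

```python
def raid5_usable_gb(disks: int, disk_gb: float) -> float:
    """Usable space of one RAID 5 array: one disk's worth goes to parity."""
    if disks < 3:
        raise ValueError("RAID 5 needs at least three disks")
    return (disks - 1) * disk_gb


def nr10_cluster_usable_gb(node_capacities_gb: list[float]) -> float:
    """Usable cluster space with two copies of every volume.

    Assumes every node is limited to the capacity of the smallest node.
    """
    smallest = min(node_capacities_gb)
    return smallest * len(node_capacities_gb) / 2


current = raid5_usable_gb(11, 600)    # per node today
planned = raid5_usable_gb(11, 2000)   # per node with 2TB disks

print(current, planned)
print(nr10_cluster_usable_gb([current, current]))  # before the upgrade
print(nr10_cluster_usable_gb([planned, current]))  # only node 1 upgraded
print(nr10_cluster_usable_gb([planned, planned]))  # both nodes upgraded
```

If the smallest-node assumption holds, the extra space only becomes usable once the second node has been upgraded too, so there is little to gain from leaving a long gap between the two nodes beyond letting the resync finish.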

ALSO:

would it be possible to replace just some of the SAS disks with bigger SATA disks?? -- then turn on adaptive optimization?? having both SAS and SATA disks??

does this require separate RAIDs for the different disks??

then separate LUNs (or whatever) within the VSA??

presently AO is not permitted b/c all the disks are the same, but we do have the license

I hope and plan to upgrade both hosts to vSphere 6.0u3 first before doing any of the above.

Thank you, Tom


06-25-2018 08:12 AM


Hello Tom,

With RDM you can fully utilize your RAID capacity (so you can utilise more capacity of your disks).

With VMDKs, you'll need to keep a small percentage of the datastore as free space.

RDMs have issues with Veeam snapshots, though. So if you want to utilise Veeam snapshots, configure the VSA with VMDKs.

Performance wise, there isn't much difference between the two.

You can mix SATA with SAS on VSA with AO. You'll need two RAID arrays.

With VSA, you cannot configure more than 2 tiers. So choose wisely. (If you ever intend to add SSDs, don't mix.)

Kind regards,

Thanh


06-25-2018 08:25 AM


@ThanhDatVo wrote: Hello Tom,

With RDM you can fully utilize your RAID capacity (so you can utilise more capacity of your disks).

With VMDKs, you'll need to keep a small percentage of the datastore as free space.

RDMs have issues with Veeam snapshots, though. So if you want to utilise Veeam snapshots, configure the VSA with VMDKs.

Performance wise, there isn't much difference between the two.

You can mix SATA with SAS on VSA with AO. You'll need two RAID arrays.

With VSA, you cannot configure more than 2 tiers. So choose wisely. (If you ever intend to add SSDs, don't mix.)

Hello, thank you for replying! :)

We don't need to backup or take snapshots of the VSAs themselves but I am more familiar with VMDKs, I may as well keep it simple.

Wrt mixing SAS/SATA, you're saying within the Proliant's RAID configuration, I must configure 1 array for the SATA drives, one array for the SAS drives??

Then how is the VSA configured -- as one big "vmdk" containing both arrays??

Regarding AO, does the software "know" when it's AO-capable?? (I also can ask support)

We won't add SSDs, too expensive for Gen8 servers.

Since there's 11 disks, I'd probably use 4 600GB disks per host in RAID 5, then the remaining 7 disks would be replaced with 2TB or 4TB SATA disks in RAID 5, depending on disk cost.

Thank you, Tom


06-27-2018 11:46 PM


Hello Tom,

Yes. Create two datastores. One for SATA and another for SAS disks.

You can add two VMDK's to VSA (one for each RAID array)

AO is possible as long as you have the correct licenses (make sure you check this before purchasing any drives).

Also check the max capacity of your current VSA license. The usable storage may not exceed your current max capacity for the license. (Having more raw storage on the datastores is ok)

I prefer to work with RDM's (we use SSD's and want to fully utilize the capacity of the expensive storage)

Configurationwise, RDM is quite simple to setup.

Since you have SATA disks for capacity as Tier 1, I'd recommend using RAID10 for the SAS disks for performance and using that as Tier 0. With AO, you'll get more performance that way with a small loss in capacity. (I'd prefer my more performant tier to have 1TB RAID10 than 1.5TB RAID5.)
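To put rough numbers on that trade-off, here is a minimal sketch for the layout Tom described (4 x 600GB SAS for Tier 0, 7 x 2TB SATA in RAID 5 for Tier 1). Sizes are decimal GB before formatting overhead, so they come out somewhat higher than the 1TB and 1.5TB quoted above. `LICENSE_LIMIT_GB` is a made-up placeholder, not a real license value; substitute whatever your VSA license actually allows.

```python
def raid5_usable_gb(disks: int, disk_gb: float) -> float:
    """RAID 5: one disk's worth of space goes to parity."""
    if disks < 3:
        raise ValueError("RAID 5 needs at least three disks")
    return (disks - 1) * disk_gb


def raid10_usable_gb(disks: int, disk_gb: float) -> float:
    """RAID 10: half the disks hold mirror copies."""
    if disks < 4 or disks % 2:
        raise ValueError("RAID 10 needs an even number of disks, at least four")
    return (disks // 2) * disk_gb


LICENSE_LIMIT_GB = 10_000  # hypothetical placeholder, check your own license

tier0_raid10 = raid10_usable_gb(4, 600)
tier0_raid5 = raid5_usable_gb(4, 600)
tier1 = raid5_usable_gb(7, 2000)

for label, tier0 in (("RAID 10", tier0_raid10), ("RAID 5", tier0_raid5)):
    total = tier0 + tier1
    within = total <= LICENSE_LIMIT_GB
    print(f"Tier 0 as {label}: {tier0} GB, node total {total} GB, within license: {within}")
```

The RAID 10 option costs 600 GB of Tier 0 space on each node in this layout, which is small next to the Tier 1 capacity, and either option would need checking against the license limit before buying drives.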

Kind regards,

Thanh


06-28-2018 05:14 PM


2 - As previously stated, using RDM's allows you to utilize the entire disk. The only issue here is, you need to store the VM somewhere so there needs to be at least 1 vmfs datastore available for re-installation.

5 - Correct, the latter. There are 3 levels of installation: installing the VSA on an ESXi system (done already), adding the VSA to a Management Group, and adding the VSA to a cluster. I am suggesting you back out 1 level: take the VSA out of the storage cluster within the CMC, redo the disks, then add it back into the cluster.

6 - Correct, for the duration of the upgrade of that node.

10 - From vCenter, you would reconfigure the VM's disks, removing the old ones and adding the new ones. (You should probably remove the old ones at step 7, before shutting down the host.) Yes, before you start the VSA.

11 - Yes

12 - No. You have to go to the storage section of the server and "Reconfigure RAID". When you expand the storage system, look at the storage section. On the menu you may need to click the "Reconfigure RAID" option.

13 - No, right click the Management Group or Cluster and "Add Node to Cluster"

14 - Depends on how long it takes to copy the data to the "new" node.
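For planning the gap between the two nodes, a back-of-the-envelope estimate may help. The sketch below simply divides the amount of data to copy by a sustained throughput. Both inputs are assumptions: the thread does not say how much data is on the volumes or what rate the resync runs at, so the figures used here are placeholders to be replaced with measured values.

```python
def resync_hours(data_gb: float, throughput_mb_s: float) -> float:
    """Hours needed to copy data_gb at a sustained throughput_mb_s (decimal units)."""
    if throughput_mb_s <= 0:
        raise ValueError("throughput must be positive")
    seconds = data_gb * 1000 / throughput_mb_s
    return seconds / 3600


# Placeholder figures: 3600 GB of volume data at 100 MB/s sustained.
print(resync_hours(3600, 100))
# The same data at half the rate, e.g. with production I/O competing.
print(resync_hours(3600, 50))
```

Whatever the estimate says, the real signal to start on node 2 is the CMC showing every volume back at normal status in NR10.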

If you wanted to replace just some of the disks, that is doable as well. Since you cannot decrease the size of the datastore, you would follow the same process, just replacing the disks you want, building the new RAID, and configuring 2 new VMDKs or RDMs.

Separate RAIDS? Physically you need to have separate RAID Arrays. (On the server) Each with a single Logical Unit. Once the vsa is back online, you can configure the tiering before placing the vsa back into the cluster. The lun id's are sometimes confusing and it would be better to get it straightened out first before adding to the cluster.

I think I covered all your questions/concerns. Let me know if I missed something, or if you have any more!!!



06-29-2018 06:57 AM


Thank you a ton for responding, I will next week take all this and organize it better so it becomes a usable working document.

Thank you, Tom


07-03-2018 12:01 AM


Just wanted to add an important point, that would make the process even easier and quicker.

5. At this point you have a few options. The cleanest would likely be to remove the VSA running on the server you cleared off from the cluster, via the CMC. You could also treat it as a failed disk scenario.

6. Assuming you remove the vsa from the cluster, all of your volumes should move from NR10 to NR0. If you don't remove it from the cluster, the disks will simply go into degraded mode when you shutdown the vsa.

6.1 Put the VSA in repair mode, rather than remove it from the cluster. Use the following article. https://support.hpe.com/hpsc/doc/public/display?docId=mmr_kc-0112618

This way you would not need to put the volumes in NR0 (saving time on the restripe in the cluster), and you can safely reconfigure the VSA, which will be in available systems. After reconfiguring the VSA, you can bring it back into the MG, start the manager on the VSA, and exchange it with the placeholder (IP) in the cluster.

The newly reconfigured VSA will resync with the node in the cluster.

I am an HPE employee

[Any personal opinions expressed are mine, and not official statements on behalf of Hewlett Packard Enterprise]