Data Protection for Containers: Part I, Backup

An influx of conversations with customers and colleagues recently has made me aware that data protection for containers is not to be taken for granted. We want to break down our monoliths for brownfield applications and deploy fresh 12-factor greenfield microservices. Both stateful and ephemeral. Now, not next year. Mission-critical production, not just for dev and test! Just because your application changes its runtime environment doesn’t mean that the data protection requirements change. How do you ensure data is properly backed up and recoverable according to current policies on a Kubernetes cluster?

There are many aspects of a container environment that need robust disaster recovery routines and contingency planning, such as protecting the state of the cluster, container image registries and runtime information. In part one of this blog post I will primarily talk about managing and defining a data protection scheme for applications deployed on a Kubernetes cluster. We are making further strides in this area, and in the Future section we’ll share a few forward-looking statements on what we have in the oven and what to expect in part two.

Background

Traditional agent-based backup software won’t work natively with a container orchestrator such as Kubernetes. A Persistent Volume (PV) may be attached to any host in the cluster at any given time. Having a backup agent hogging the mount path on the host will lead to unpredictable behavior and most certainly failed backups. Applying the traditional backup paradigm to the containerized application paradigm will simply not work. Backup schemes need to be consumed as a data service provided by the cluster orchestrator and the underlying storage infrastructure. In this blog you’ll learn how HPE Nimble Storage provides these data protection services for Kubernetes and how cluster administrators, traditional storage architects and application owners who are part of a DevOps team may design and consume these data protection services.

Before we dig in, the very loaded word “Backup” needs to be defined with a touch of the 21st century. I’m using the define: operator on Google search to help me:

So, “the procedure for making extra copies of data in case the original is lost or damaged”. HPE Nimble Storage leverages snapshot copies of your data volumes, taken at short intervals (RPO) and offering quick recovery (RTO). Since the inception of data volume snapshots we’ve been taught that snapshots are not backups unless the snapshots are stored independently of the source volume. HPE Nimble Storage fulfills that criterion by replicating the delta between snapshots to either a secondary array (preferably in a remote data center in a different disaster zone) or to a Replication Store using HPE Cloud Volumes, available on AWS and Azure. In the next few sections we’ll go through how to “make extra copies” with snapshots, and in part two of this blog we’ll deliberately “lose and damage the data” to demonstrate a recovery of said backup.

Meet the team

In any self-service infrastructure provided to an organization, there needs to be some ground rules established. I’ve put together my virtual team to better illustrate each of the subject matter expert domains.

The Storage Architect (SA)

The SA is responsible for ensuring that SLAs and SLOs are upheld and that established RTOs and RPOs can be met. At his disposal are one primary all-flash HPE Nimble Storage array and a secondary hybrid-flash array in a remote data center. This is the production tier. The SA defines Protection Templates (more on this below) for the cluster administrator to consume.

The Cluster Administrator (CA)

The CA is responsible for designing and deploying robust Kubernetes clusters to satisfy the needs of the organization. For all production workloads, Red Hat OpenShift is being used (learn about the HPE Nimble Storage Integration Guide for Red Hat OpenShift and OpenShift Origin). The CA ensures that storage classes are defined and set up to consume the data protection services defined by the SA. The CA has deployed the HPE Nimble Kube Storage Controller and FlexVolume driver, which use the HPE Nimble Storage Docker Volume plugin; all of these are part of the HPE Nimble Storage Linux Toolkit (NLT).

The Application Owner (AO)

The AO deploys applications to the Kubernetes clusters and is responsible for responding to queries from the end-user of the application. Tasks range from application lifecycle management to end-user support requests which can include restoring lost or corrupt data from backups. The AO simply uses Persistent Volume Claims (PVC) and only cares about the capacity of the volumes provisioned (most of the time) and expects the cluster to satisfy the PVC when deploying new applications.

These roles are vastly simplified but could very well represent a small DevOps team maintaining containerized applications.

Serve and Protect

Provisioning storage to a Kubernetes pod is accomplished by creating a PVC and referencing it in the deployment specification. PVCs are namespaced, meaning that they’re only referenceable from pods scheduled in the same namespace. The AO may or may not provide a storageClassName to request a specific type of storage. The CA creates the storage classes; a StorageClass is cluster-wide. A good practice is to create a default StorageClass that represents the most common type of storage, against which all PVCs without a storageClassName will be provisioned. The CA consults with the SA on what parameters the storage classes should use according to the current storage and data protection practices. The AO may want certain storage optimizations applied according to the best practices of the application vendor. This needs to be communicated within the team so that the SA understands the requirements and implements them for the CA to reference in a StorageClass.
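The defaulting behavior described above can be sketched in a few lines of Python. This is only an illustration of the selection logic, not how Kubernetes implements it. The function name `resolve_storage_class` and the trimmed dictionaries are assumptions made for the example; the annotation key is the beta annotation used in the manifests later in this post.

```python
# Illustrative sketch of how a PVC ends up on a StorageClass.
# resolve_storage_class and the trimmed manifests are hypothetical.

DEFAULT_ANNOTATION = "storageclass.beta.kubernetes.io/is-default-class"


def resolve_storage_class(pvc, storage_classes):
    """Return the name of the StorageClass a PVC would be provisioned against.

    pvc: dict shaped like a PersistentVolumeClaim manifest.
    storage_classes: list of dicts shaped like StorageClass manifests.
    Returns None when no class is requested and no default exists.
    """
    requested = pvc.get("spec", {}).get("storageClassName")
    if requested is not None:
        # An explicit name wins (an empty string means "no class at all").
        return requested
    for sc in storage_classes:
        annotations = sc.get("metadata", {}).get("annotations", {})
        if annotations.get(DEFAULT_ANNOTATION) == "true":
            return sc["metadata"]["name"]
    return None


classes = [
    {"metadata": {"name": "general", "annotations": {DEFAULT_ANNOTATION: "true"}}},
    {"metadata": {"name": "database"}},
]
wordpress_pvc = {"metadata": {"name": "wordpress"}, "spec": {}}
mariadb_pvc = {"metadata": {"name": "mariadb"}, "spec": {"storageClassName": "database"}}

print(resolve_storage_class(wordpress_pvc, classes))
print(resolve_storage_class(mariadb_pvc, classes))
```

The claim without a storageClassName lands on the class carrying the default annotation, while the claim that names a class gets exactly that class.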

Before we dig into OpenShift, let’s explore the building blocks provided by the HPE Nimble Storage array controlled by the SA.

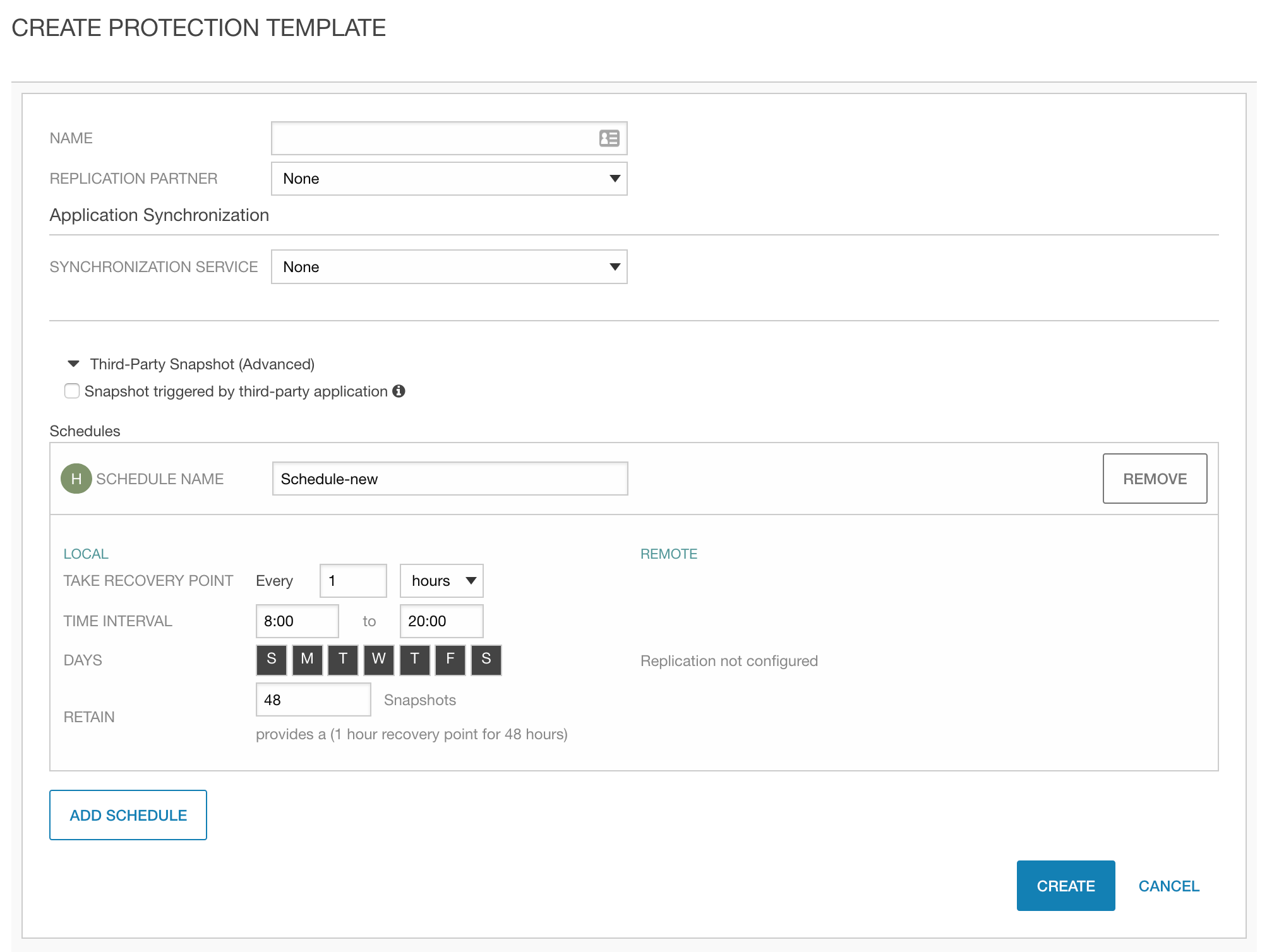

Protection Templates

A protection template (PT) is a data protection policy tool used by an SA to tailor protection parameters to meet the RPO/RTO for provisioned volumes. It contains one or more snapshot schedules that define intervals and time ranges. This is also where you would configure replication destinations and application-integrated snapshots. The following screenshot gives a decent overview:

After the PT has been defined, the next step would be to create a Volume Collection (VC) with the PT as input. A VC may be compared to a consistency group: volumes that are members of the same VC have their snapshots created with referential integrity between them. For our container platform integration we create one VC per volume, and the StorageClass only references the PT when provisioning volumes. The Future section of this blog discusses an upcoming ability to reference a VC or create a named VC when provisioning volumes.

The key take-away is that the CA only needs to reference the PT created by the SA in the StorageClass to ensure backup policies are being honored, and does not need to get entangled in micro-managing snapshot schedules, retention and replication destinations. The AO has no means of knowing the minutiae of the data protection setup other than inspecting the StorageClass to confirm that data protection is being used for the PVCs created.
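To get a feel for what a schedule in a Protection Template implies, here is a rough back-of-the-envelope calculation in Python. The numbers are illustrative: an hourly schedule between 08:00 and 20:00 retaining 48 snapshots, matching the volume inspected later in this post. The function name `schedule_summary` is made up, and the sketch assumes that a snapshot is taken at both the start and the end of the window, which may not match the array’s exact behavior.

```python
from datetime import datetime, timedelta


def schedule_summary(start, until, every_hours, retain):
    """Summarize a daily snapshot schedule.

    start/until are "HH:MM" strings, every_hours is the interval in hours
    and retain is the number of snapshots kept. Assumes snapshots fire at
    both ends of the window (an assumption, not documented behavior).
    """
    fmt = "%H:%M"
    window = datetime.strptime(until, fmt) - datetime.strptime(start, fmt)
    interval = timedelta(hours=every_hours)
    per_day = window // interval + 1
    # Time between the first and the last snapshot of the day.
    covered = (per_day - 1) * interval
    # Longest stretch without a new snapshot: either the regular interval
    # or the gap from the last snapshot of the day to the first of the next.
    worst_gap = max(interval, timedelta(days=1) - covered)
    return {
        "snapshots_per_day": per_day,
        "days_of_history": retain / per_day,
        "worst_case_gap_hours": worst_gap.total_seconds() / 3600,
    }


print(schedule_summary("08:00", "20:00", every_hours=1, retain=48))
```

Under these assumptions the schedule yields 13 snapshots per day, roughly 3.7 days of local history and a 12-hour overnight gap, which is worth keeping in mind when the SA commits to an RPO.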

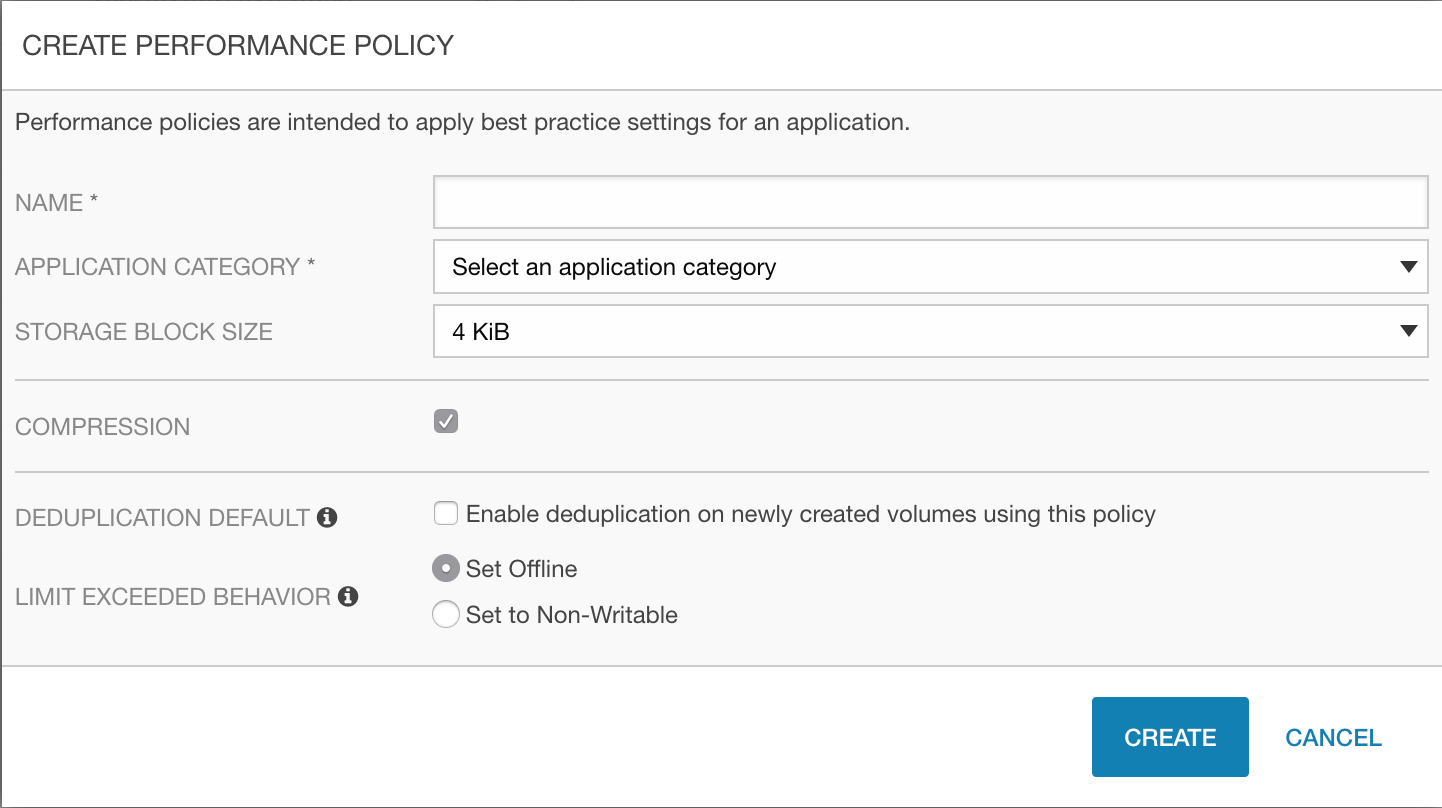

Performance Policies

While Performance Policies (PP) aren’t related to data protection, they’re highly relevant when creating distinct storage classes, which is part of the exercises below. NimbleOS ships with a set of pre-defined PPs that have been refined based on performance data gathered over the years and analyzed by our data scientists on the HPE InfoSight team. PPs provide a set of defaults, such as block size, compression, deduplication and what behavior to adopt when the volume runs out of space.

We’ll be creating custom PPs for our application examples below.

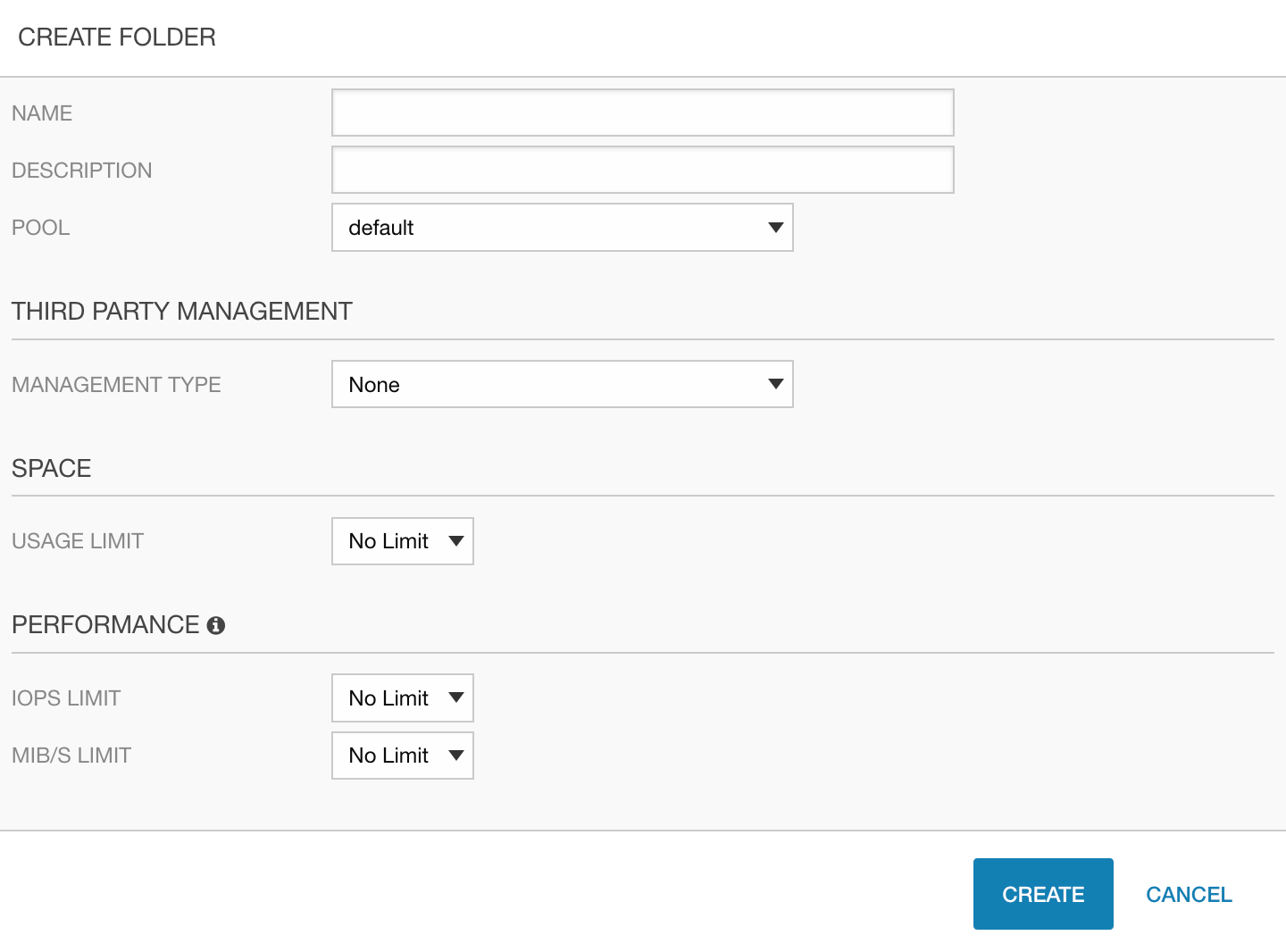

Folders

NimbleOS provides a very simple construct to compartmentalize storage resources to better manage performance and capacity. The SA creates the folder and controls the parameters. The folder may then be referenced in the StorageClass by the CA. The AO is then constrained to the limitations set on the folder. The folder may also be confined to a certain pool of storage in the Nimble group, such as hybrid-flash or all-flash.

Our storage classes below will be referencing folders to distinguish the different data types to further help refine the characteristics needed for the application.

Assumptions

As mentioned above, we’ll use a Red Hat OpenShift cluster for the environment, replicating all data to a remote array with the intention to demonstrate restoring data from both local snapshots and the remote array.

Examples are hosted on GitHub in the container-examples repo. The rest of the guide assumes:

$ git clone https://github.com/NimbleStorage/container-examples
$ cd container-examples/blogs/data-protection

Prepare the HPE Nimble Storage array

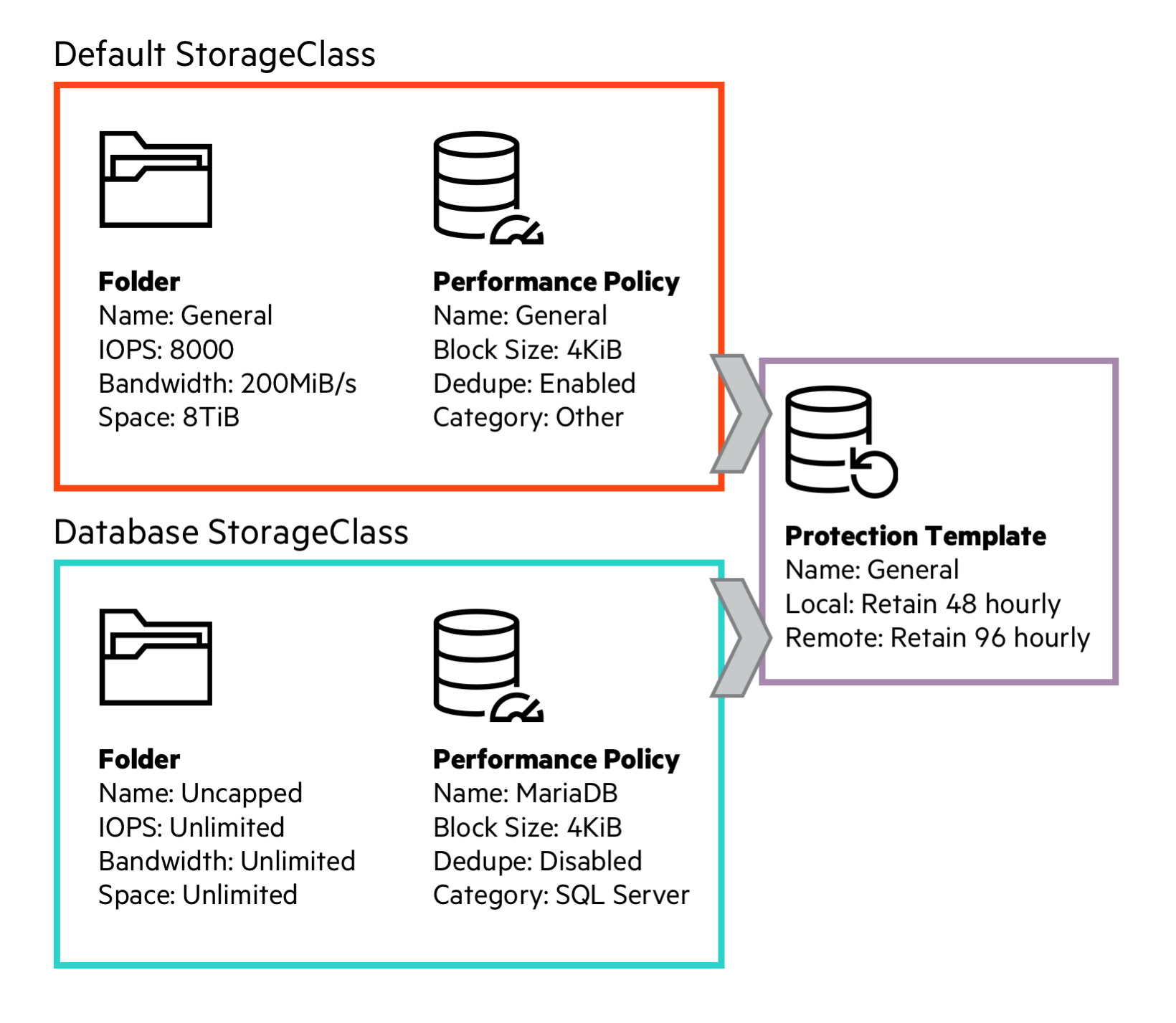

Wearing the hat of the SA, for this exercise we create a Folder, Performance Policy and Protection Template to serve our default StorageClass. We also create an application-optimized Performance Policy for our specific brand of database: MariaDB. Since we’re limiting the resources of the default StorageClass at the Folder level, we also create an unconstrained Folder for the prioritized workloads.

Create StorageClass resources

We’ll be creating two storage classes initially: one default and one application-optimized for the database used in the example application deployment. We switch hats to the CA:

Default StorageClass:

[admin@master]$ tee /dev/stderr < sc-general.yaml | oc create -f-
---
kind: StorageClass
apiVersion: storage.k8s.io/v1beta1
metadata:
  name: general
  annotations:
    storageclass.beta.kubernetes.io/is-default-class: "true"
provisioner: hpe.com/nimble
parameters:
  description: "Volume provisioned from default StorageClass"
  fsMode: "0770"
  protectionTemplate: General
  perfPolicy: General
  folder: General
storageclass "general" created

Database StorageClass:

[admin@master]$ tee /dev/stderr < sc-database.yaml | oc create -f-
---
kind: StorageClass
apiVersion: storage.k8s.io/v1beta1
metadata:
  name: database
provisioner: hpe.com/nimble
parameters:
  description: "Volume provisioned from database StorageClass"
  fsMode: "0770"
  protectionTemplate: General
  perfPolicy: MariaDB
  folder: Uncapped
storageclass "database" created

Validate our storage classes:

[admin@master]$ oc get sc
NAME                PROVISIONER      AGE
database            hpe.com/nimble   3s
general (default)   hpe.com/nimble   15s

We’re now ready to switch over to the AO role. Today we’re deploying WordPress, which requires one PV for the application server, for which we’ll use the default StorageClass. Our MariaDB will use the database StorageClass.

gem:dp mmattsson$ tee /dev/stderr < pvcs.yaml | oc create -f-
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: wordpress
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Ti
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mariadb
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 16Gi
  storageClassName: database
persistentvolumeclaim "wordpress" created
persistentvolumeclaim "mariadb" created

Validate volumes:

gem:dp mmattsson$ oc get pvc
NAME        STATUS   VOLUME        CAPACITY   STORAGECLASS   AGE
mariadb     Bound    database-..   16Gi       database       4s
wordpress   Bound    general-...   1Ti        general        4s
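If you would rather script this check than eyeball the table, the same information is available as JSON via `oc get pvc -o json`. Below is a minimal Python sketch, assuming that output has been captured into a string. The helper name `pending_claims` and the sample document (including the claim named `scratch`) are made up for illustration and trimmed to the fields being used.

```python
import json


def pending_claims(pvc_list_json):
    """Return the names of PVCs whose status.phase is not "Bound"."""
    doc = json.loads(pvc_list_json)
    return [
        item["metadata"]["name"]
        for item in doc.get("items", [])
        if item.get("status", {}).get("phase") != "Bound"
    ]


# Hypothetical, trimmed stand-in for `oc get pvc -o json` output.
sample = json.dumps({
    "kind": "List",
    "items": [
        {"metadata": {"name": "mariadb"}, "status": {"phase": "Bound"}},
        {"metadata": {"name": "scratch"}, "status": {"phase": "Pending"}},
    ],
})
print(pending_claims(sample))
```

An empty list means every claim in the namespace has been satisfied by the provisioner.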

We can now deploy our app (input not shown):

gem:dp mmattsson$ oc create -f wp.yaml
secret "mariadb" created
deployment "mariadb" created
service "mariadb" created
deployment "wordpress" created
service "wordpress" created

Note: The MariaDB image is stock; the WordPress image is slightly modified to allow execution under the restricted SCC in OpenShift. Please see the Dockerfile for details.

We can now connect to the WordPress instance. There isn’t a host route set up, so let’s grab the host port from the service:

gem:dp mmattsson$ oc get svc/wordpress
NAME        TYPE           CLUSTER-IP      PORT(S)          AGE
wordpress   LoadBalancer   172.30.156.50   8080:31674/TCP   5s

By visiting any of the nodes with a web browser at http://mynode1.example.com:31674 we should be able to see something similar to this:

The application is now deployed. The AO has no means to validate that data is actually being snapshotted or replicated other than peeking at the StorageClass. The CA has access to the Docker Volume API on the host and can inspect the actual volumes on the cluster. Checking out the database volume for our application:

[admin@master]$ sudo docker volume inspect database-...
[
    {
        "Driver": "nimble",
        "Labels": null,
        "Mountpoint": "/",
        "Name": "database-fd963c3b-39e0-11e8-80f1-52540099902e",
        "Options": {},
        "Scope": "global",
        "Status": {
            ...
            "Snapshots": [
                {
                    "Name": "database-fd963c3b-39e0-11e8-80f1-52540099902e.kubernetes-Schedule-new-2018-04-06::15:00:00.000",
                    "Time": "2018-04-06T22:00:00.000Z"
                }
            ],
            ...
            "VolumeCollection": {
                "Description": "",
                "Name": "database-fd963c3b-39e0-11e8-80f1-52540099902e.kubernetes",
                "Schedules": [
                    {
                        "Days": "all",
                        "Repeat Until": "20:00",
                        "Replicate To": "group-sjc-array869",
                        "Snapshot Every": "1 hours",
                        "Snapshots To Retain": 48,
                        "Starting At": "08:00",
                        "Trigger": "regular"
                    }
                ]
            },
            ...
        }
    }
]

The characteristics of the volume reveal that we have snapshots, automatic retention and downstream replication set up. We can also see our custom placement in a specific Folder and the Performance Policy we set up in previous steps.
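The CA could automate this inspection. The following Python sketch takes the JSON printed by `docker volume inspect` and reports whether a volume has a snapshot schedule and whether any schedule replicates downstream. It relies only on keys visible in the output above (`Status`, `VolumeCollection`, `Schedules`, `Replicate To`, `Snapshots To Retain`); the function name `protection_report`, the volume name `database-example` and the trimmed sample are assumptions for illustration.

```python
import json


def protection_report(inspect_json):
    """Summarize snapshot schedules found in `docker volume inspect` output."""
    report = []
    for volume in json.loads(inspect_json):
        status = volume.get("Status") or {}
        collection = status.get("VolumeCollection") or {}
        schedules = collection.get("Schedules") or []
        report.append({
            "volume": volume.get("Name"),
            "scheduled": bool(schedules),
            # True if at least one schedule names a replication partner.
            "replicated": any(s.get("Replicate To") for s in schedules),
            "retained": max(
                (s.get("Snapshots To Retain", 0) for s in schedules),
                default=0,
            ),
        })
    return report


# Hypothetical sample, trimmed to the keys used above.
sample = """
[{"Name": "database-example",
  "Status": {"VolumeCollection": {"Schedules": [
      {"Snapshot Every": "1 hours", "Snapshots To Retain": 48,
       "Replicate To": "group-sjc-array869"}]}}}]
"""
for entry in protection_report(sample):
    print(entry)
```

A volume that reports `scheduled` or `replicated` as false would be a cue for the CA to check which Protection Template the StorageClass references.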

In essence, the AO and CA can rest assured that application data is being protected both locally and remotely with very simple means, completely tailored to meet the criteria of a defined data protection strategy. As usual, the SA puts his feet on the table and lets HPE InfoSight do the work.

Future

In this blog we learned how to take advantage of the protection capabilities provided by the HPE Nimble Storage integration with Kubernetes. The examples above illustrated a common deployment scenario using Red Hat OpenShift. In the next blog in the series, we’ll explain how to recover data in the event of data loss or a disaster.

There are a bunch of new storage capabilities in the design phase for Kubernetes. Snapshots are becoming first-class citizens from which end-users will be able to create new volumes. As a band-aid, we’ll soon have the ability to annotate a PVC to request that a volume be created from a known snapshot.

We’re continuing to exploit platform capabilities of NimbleOS to expose to Kubernetes. Our Volume Collections today are a one-to-one mapping from a PV perspective. We’re ensuring that Kubernetes end-users will have the ability to control which volumes should retain referential integrity by annotating the PVC. As in the WordPress example above, you ideally want snapshots taken on both the database and application server simultaneously.

HPE Nimble Storage has the simplest and safest method I’ve ever witnessed to effortlessly reverse replication relationships. I’m delighted to see this core functionality being exposed to Kubernetes in the next iteration. It essentially means you can have a standby cluster running offsite or in the public cloud via HPE Cloud Volumes to resurrect workloads for disaster recovery purposes and safely move them back when the disaster has been remedied.

The volume lifecycle controls we have in place for our container integration allow us to do volume restores quite easily and can be exploited in a multitude of ways to quickly revert to a known state.

Start designing your data protection policies for your applications today. We’ll ensure the safety of your data when Murphy comes around but, before that, we’ll have the tools to safely recover your data.

Update: Part II is now available.
