Cloud-native monitoring with Prometheus: Demystifying multitenancy with open-source tools

Effective metrics collection is essential for understanding the health and performance of microservices in multitenant environments. Here’s how to achieve it with open-source solutions, and how HPE Services can help.

By Alexandros Doulgkeris, Chief Solutions Architect, Hybrid Infrastructure Technology, Cloud Native Computing Practice Area, HPE Services

Monitoring is without doubt an essential building block of modern cloud-native architectures; without it, you have no insight into the health of your microservices. And cloud-native monitoring is more demanding than ever, given all the moving pieces that a microservices architecture brings.

Consider how many different microservices your applications consist of, all of them interacting with each other. Now extrapolate that to the number of applications a typical enterprise manages today, and it becomes clear that a reliable monitoring system is paramount.

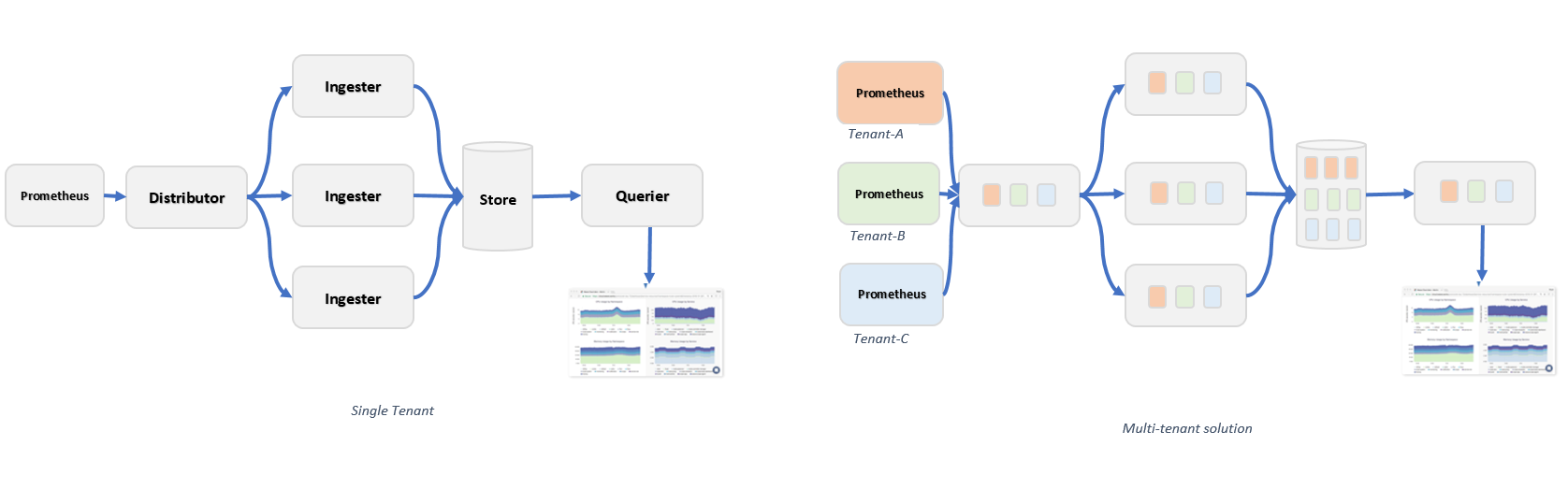

One of the most commonly used tools for collecting metrics from applications is Prometheus. Prometheus was designed with a deliberately simple architecture: it periodically scrapes your target applications for metrics. But what happens if you have multiple applications that belong to different teams, or even different companies? How can we segregate data so that each tenant can see only the metrics from the applications it owns? And how can we ensure that the monitoring resources consumed by one tenant do not affect another?
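Here is what the pull model looks like in practice. A scrape target simply serves its current metric values as plain text over HTTP, and Prometheus fetches that page on every scrape. The minimal Python sketch below renders one metric family in the Prometheus text exposition format.

Everything named here is a hypothetical example: the helper names (`render_family`, `escape_label_value`), the metric name and the label values. Real applications would normally use an official Prometheus client library instead.

```python
def escape_label_value(value: str) -> str:
    # The text format requires backslash, double quote and line feed to be escaped.
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_family(name, help_text, metric_type, samples):
    """Render one metric family the way a scrape target might expose it.

    Hypothetical helper. samples: list of (labels_dict, value) pairs.
    """
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} {metric_type}"]
    for labels, value in samples:
        # Labels are emitted sorted by name to keep the output deterministic.
        label_str = ",".join(
            f'{key}="{escape_label_value(val)}"' for key, val in sorted(labels.items())
        )
        series = f"{name}{{{label_str}}}" if label_str else name
        lines.append(f"{series} {value}")
    return "\n".join(lines) + "\n"


print(render_family(
    "http_requests_total", "Total HTTP requests handled.", "counter",
    [({"method": "get", "code": "200"}, 1027), ({"method": "post", "code": "500"}, 3)],
))
```

Prometheus itself adds nothing tenant-specific to this exchange, which is why multitenancy has to be solved elsewhere.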

One solution would be a dedicated Prometheus instance per team or organization, but, as you can imagine, you would soon end up maintaining a whole farm of Prometheus instances.

Cortex: a solution to multi-tenancy with Prometheus

Cortex is an open-source tool released under the Apache License 2.0 and a CNCF incubation-level project. It provides out-of-the-box multitenancy, high availability, horizontal scalability, and long-term storage for metrics scraped by Prometheus. Running Prometheus itself in HA mode brings its own set of issues, such as duplicated data and the difficulty of presenting that duplicated data through a single pane of glass. Cortex was born to solve these problems.
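Cortex addresses the HA duplication problem in its distributor with an HA tracker. Samples from an HA Prometheus pair carry a cluster label and a replica label (by default `cluster` and `__replica__`). Only the currently elected replica of each cluster is accepted, and the tracker fails over to another replica if the elected one stops sending.

The toy sketch below illustrates that idea and is not the Cortex implementation. The election state lives in a dict rather than a key-value store, and the 30-second failover timeout and `HATracker` name are assumptions for illustration.

```python
class HATracker:
    """Toy HA dedup: accept samples only from one elected replica per (tenant, cluster).

    Assumption: election state is a plain dict and timestamps are seconds.
    """

    def __init__(self, failover_timeout_s=30.0):
        self.failover_timeout_s = failover_timeout_s
        self.elected = {}  # (tenant, cluster) -> (replica, last_seen)

    def accept(self, tenant, cluster, replica, now):
        key = (tenant, cluster)
        current = self.elected.get(key)
        if current is None or current[0] == replica:
            # First replica seen, or the elected replica again: accept and refresh.
            self.elected[key] = (replica, now)
            return True
        _, last_seen = current
        if now - last_seen > self.failover_timeout_s:
            # Elected replica has been silent too long: fail over to this one.
            self.elected[key] = (replica, now)
            return True
        return False  # duplicate samples from a non-elected replica are dropped
```

Usage: two Prometheus replicas scraping the same targets both call `accept(...)`, and only one stream ends up stored per tenant and cluster.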

Multitenancy is woven into the very fabric of Cortex. All time series data that arrives in Cortex from Prometheus instances is marked as belonging to a specific tenant in the request metadata. From there, that data can only be queried by the same tenant. Alerting is a multi-tenanted feature as well, with each tenant able to configure its own alerts using Alertmanager configuration.
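One way to picture the tenant isolation described above is as a store that partitions every write and every read by tenant ID. The sketch below is a toy in-memory illustration of that idea, not Cortex code; the `TenantStore` class and the series strings are hypothetical.

```python
from collections import defaultdict


class TenantStore:
    """Hypothetical toy store: every write and read is scoped to a tenant ID."""

    def __init__(self):
        # tenant_id -> series -> list of (timestamp_ms, value)
        self._data = defaultdict(lambda: defaultdict(list))

    def write(self, tenant_id, series, timestamp_ms, value):
        if not tenant_id:
            raise ValueError("every write must carry a tenant ID")
        self._data[tenant_id][series].append((timestamp_ms, value))

    def query(self, tenant_id, series):
        # A tenant only ever reaches its own partition; other tenants' data is invisible.
        tenant = self._data.get(tenant_id, {})
        return list(tenant.get(series, []))
```

Two tenants can therefore use identical series names without ever seeing each other's samples.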

In essence, each tenant has its own “view” of the system, its own Prometheus-centric world at its disposal. And even if you start out using Cortex in a single-tenant fashion, you can expand to an arbitrarily large pool of tenants at any time.

Cortex architecture

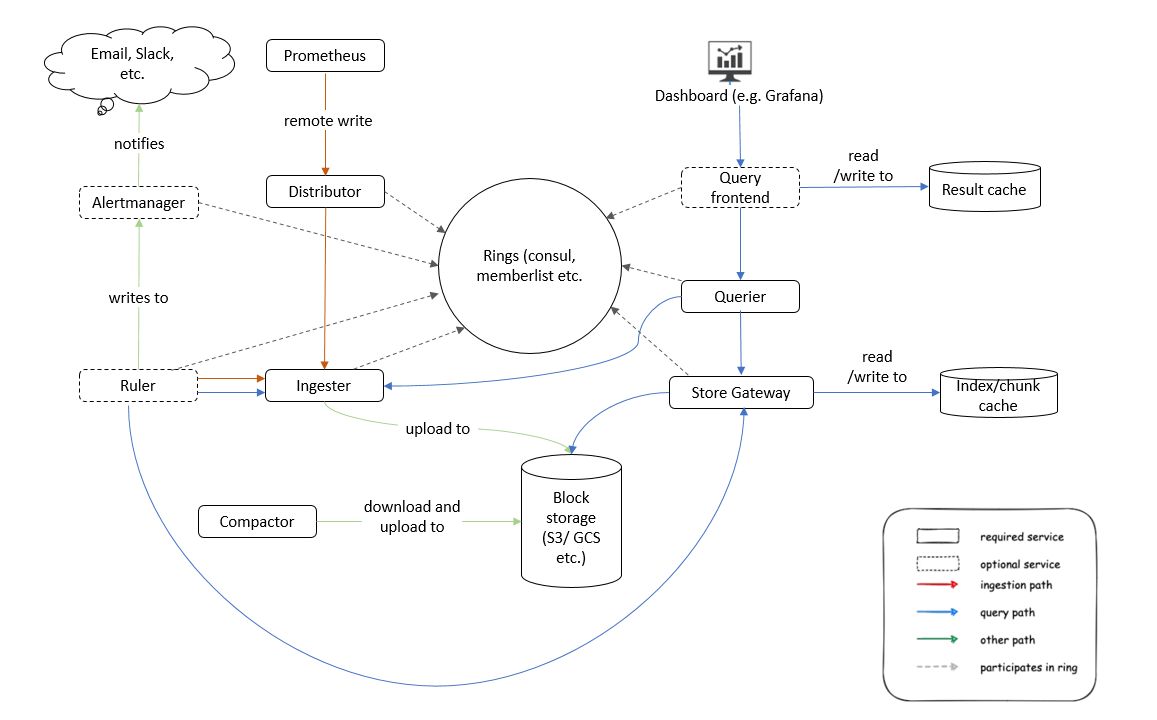

Cortex follows the microservices architecture paradigm, with its essential functions split up into single-purpose components (aka microservices) that can be independently scaled:

- Distributor – Handles time series data written to Cortex by Prometheus instances using Prometheus’ remote write API. Incoming data is automatically replicated and sharded, and sent to multiple Cortex ingesters in parallel.

- Ingester – Receives time series data from distributor nodes and then writes that data to long-term storage backends, compressing data into Prometheus chunks for efficiency.

- Ruler – Executes rules and generates alerts, sending them to Alertmanager (Cortex installations include Alertmanager).

- Querier – Handles PromQL queries from clients (including Grafana dashboards), abstracting over both ephemeral time series data and samples in long-term storage.

- Store gateway – Queries series from blocks in the object storage bucket.

- Compactor – Compacts multiple blocks of a given tenant into a single, optimized, larger block. This helps reduce storage costs (deduplication, index size reduction) and increase query speed (querying fewer blocks is faster). It also keeps the per-tenant bucket index up to date.

Each of these components can be managed independently, which is key to Cortex’s scalability and operations story. The following paragraphs walk through how Cortex interacts with the systems around it.

Prometheus instances scrape samples from various targets and then push them to Cortex using Prometheus’ remote write API. The remote write API emits batched, Snappy-compressed Protocol Buffer messages inside the body of an HTTP POST request.
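The shape of such a push can be sketched with the standard library alone. The function below builds, but does not send, a remote-write style request using the content headers the Prometheus remote write protocol specifies.

Two parts of the sketch are assumptions. The Snappy-compressed protobuf payload is taken as already prepared, since producing it needs protobuf and Snappy libraries. The distributor host name is hypothetical; `/api/v1/push` is the Cortex push endpoint path.

```python
import urllib.request


def build_remote_write_request(url: str, compressed_body: bytes) -> urllib.request.Request:
    """Build, without sending, a request shaped like a Prometheus remote-write push.

    Assumption: compressed_body is an already Snappy-compressed protobuf WriteRequest.
    """
    headers = {
        "Content-Type": "application/x-protobuf",
        "Content-Encoding": "snappy",
        "X-Prometheus-Remote-Write-Version": "0.1.0",
    }
    return urllib.request.Request(url, data=compressed_body, headers=headers, method="POST")


# Hypothetical distributor address; the payload bytes are a placeholder.
req = build_remote_write_request("http://cortex-distributor.example:8080/api/v1/push", b"...")
```

Note that `urllib` normalizes stored header names with `str.capitalize()`, so `req.get_header("Content-encoding")` is how you would read one back.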

Cortex requires each HTTP request to carry a header (X-Scope-OrgID) specifying the tenant ID for the request. Request authentication and authorization are handled by an external reverse proxy.
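Because Cortex trusts the tenant header, the reverse proxy in front of it must authenticate the caller and set that header itself. The sketch below assumes a hypothetical static table from bearer tokens to tenant IDs; a real proxy would consult an identity provider.

The sketch also strips any tenant header the client supplied before injecting the trusted one.

```python
# Hypothetical token-to-tenant table; a real deployment would use an identity provider.
TOKEN_TO_TENANT = {
    "token-team-a": "team-a",
    "token-team-b": "team-b",
}


def forward_headers(incoming_headers):
    """Authenticate a request and return the headers to forward to Cortex.

    Returns None when the caller cannot be authenticated (a proxy would answer 401).
    Simplification: header names are matched case-sensitively for "Authorization".
    """
    scheme, _, token = incoming_headers.get("Authorization", "").partition(" ")
    tenant = TOKEN_TO_TENANT.get(token) if scheme == "Bearer" else None
    if tenant is None:
        return None
    # Never let the client choose its own tenant: drop its header, inject the trusted one.
    forwarded = {
        k: v for k, v in incoming_headers.items()
        if k.lower() not in ("authorization", "x-scope-orgid")
    }
    forwarded["X-Scope-OrgID"] = tenant
    return forwarded
```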

Incoming samples (writes from Prometheus) are handled by the distributor. When the distributor receives samples from Prometheus, it validates each one for correctness and checks that it is within the configured tenant limits. If no limits have been overridden for that tenant, it falls back to the default limits. Valid samples are then split into batches and sent to multiple ingesters in parallel. The ingester service is responsible for writing incoming series to a long-term storage backend on the write path, and for returning in-memory series samples for queries on the read path.
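The "per-tenant limits with a fallback to defaults" rule can be sketched as a dictionary merge followed by a validation pass. The two limit names mirror Cortex-style settings, but the values, the override table and the fixed batch size are illustrative assumptions.

In particular, the real distributor batches samples per target ingester via the hash ring rather than into fixed-size chunks.

```python
# Illustrative defaults and per-tenant overrides (assumed values, Cortex-style names).
DEFAULT_LIMITS = {"max_label_names_per_series": 30, "max_label_value_length": 2048}
TENANT_OVERRIDES = {"team-a": {"max_label_names_per_series": 50}}


def limits_for(tenant):
    # A tenant override wins; anything not overridden falls back to the default.
    return {**DEFAULT_LIMITS, **TENANT_OVERRIDES.get(tenant, {})}


def validate_and_batch(tenant, samples, batch_size=2):
    """samples: list of (labels_dict, timestamp_ms, value). Returns (batches, rejected)."""
    limits = limits_for(tenant)
    valid, rejected = [], []
    for labels, ts, value in samples:
        too_many = len(labels) > limits["max_label_names_per_series"]
        too_long = any(len(v) > limits["max_label_value_length"] for v in labels.values())
        (rejected if too_many or too_long else valid).append((labels, ts, value))
    # Simplification: fixed-size batches instead of per-ingester batches.
    batches = [valid[i:i + batch_size] for i in range(0, len(valid), batch_size)]
    return batches, rejected
```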

A hash ring (stored in a key-value store) is used to achieve consistent hashing for series sharding and replication across all ingesters. Each ingester registers itself in the hash ring with a set of tokens it owns; each token is a random unsigned 32-bit number. The distributor hashes each incoming series and pushes it to the ingester that owns the token range for the series' hash, plus the N-1 subsequent ingesters in the ring, where N is the replication factor. The supported KV stores for the hash ring are Consul, etcd, and gossip-based memberlist.
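The ring lookup described above fits in a few lines, sketched under a few assumptions:

- 32-bit FNV-1a is an illustrative choice of series hash.
- The series key is simply the tenant ID concatenated with the series labels.
- Tokens come from a seeded random generator so the example is reproducible.

The walk skips additional tokens of an ingester that has already been chosen, so the N replicas land on N distinct ingesters.

```python
import bisect
import random


def fnv1a_32(data: bytes) -> int:
    """32-bit FNV-1a hash (illustrative choice of series hash)."""
    h = 0x811C9DC5
    for byte in data:
        h ^= byte
        h = (h * 0x01000193) & 0xFFFFFFFF
    return h


class HashRing:
    def __init__(self, ingesters, tokens_per_ingester=128, seed=0):
        rng = random.Random(seed)
        # Each ingester registers a set of random unsigned 32-bit tokens; keep them sorted.
        pairs = sorted(
            (rng.getrandbits(32), name) for name in ingesters for _ in range(tokens_per_ingester)
        )
        self.tokens = [token for token, _ in pairs]
        self.owners = [name for _, name in pairs]

    def replicas(self, series_key: bytes, replication_factor: int = 3):
        h = fnv1a_32(series_key)
        # Start at the first token greater than the hash, wrapping around the ring.
        start = bisect.bisect_right(self.tokens, h) % len(self.tokens)
        chosen = []
        for step in range(len(self.tokens)):
            owner = self.owners[(start + step) % len(self.tokens)]
            if owner not in chosen:  # skip extra tokens of an already chosen ingester
                chosen.append(owner)
                if len(chosen) == replication_factor:
                    break
        return chosen


ring = HashRing(["ingester-1", "ingester-2", "ingester-3", "ingester-4"], tokens_per_ingester=16, seed=42)
targets = ring.replicas(b'team-a{__name__="up",job="api"}', replication_factor=3)
```

Because only the tokens near a new or departing ingester change hands, adding or removing an ingester moves only a small fraction of series. This is the main reason for using consistent hashing here.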

Incoming reads (PromQL queries), on the other hand, are handled by the querier or, optionally, by the query frontend, a service that exposes the querier’s API and accelerates the read path. Queriers fetch series samples both from the ingesters and from long-term storage, since the ingesters hold the in-memory series that have not yet been flushed to long-term storage. Because of the replication factor, the querier may receive duplicated samples. To resolve this, for a given time series the querier internally deduplicates samples with exactly the same timestamp.
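The deduplication step can be illustrated as a k-way merge of the per-replica sample lists for one series that keeps a single sample per exact timestamp. This is a minimal sketch that assumes each replica returns its samples sorted by timestamp; the function name is hypothetical.

```python
import heapq


def merge_dedup(*replica_results):
    """Merge per-replica (timestamp_ms, value) lists for one series, each sorted by
    timestamp, keeping only one sample per exact timestamp."""
    merged = []
    for ts, value in heapq.merge(*replica_results, key=lambda sample: sample[0]):
        if merged and merged[-1][0] == ts:
            continue  # same timestamp already emitted from another replica
        merged.append((ts, value))
    return merged
```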

The blocks storage is based on Prometheus TSDB. Each tenant’s time series are stored in that tenant’s own TSDB, which writes its series out to an on-disk block (by default, each block covers a 2-hour range). Each block is composed of a few files that store the chunks and the block index.
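The 2-hour default can be made concrete with a little arithmetic. Assuming blocks are aligned to multiples of the block range (as in Prometheus TSDB), a sample at timestamp t falls into the block starting at t - (t mod 7,200,000 ms). The sketch also groups samples by tenant and block to mirror the one-TSDB-per-tenant layout; the function names are hypothetical.

```python
from collections import defaultdict

BLOCK_RANGE_MS = 2 * 60 * 60 * 1000  # default 2h block range


def block_range_for(timestamp_ms, block_range_ms=BLOCK_RANGE_MS):
    """Return the [min_time, max_time) window of the aligned block holding a sample."""
    start = timestamp_ms - timestamp_ms % block_range_ms
    return start, start + block_range_ms


def group_into_blocks(samples):
    """samples: iterable of (tenant, series, timestamp_ms, value).
    Returns {(tenant, block_start_ms): [(series, timestamp_ms, value), ...]}."""
    blocks = defaultdict(list)
    for tenant, series, ts, value in samples:
        start, _ = block_range_for(ts)
        blocks[(tenant, start)].append((series, ts, value))
    return dict(blocks)
```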

The TSDB chunk files contain the samples for multiple series. The series inside the chunks are then indexed by a per-block index, which maps metric names and labels to time series in the chunk files. The blocks storage doesn’t require a dedicated storage backend for the index. The only requirement is an object store for the block files, which can be:

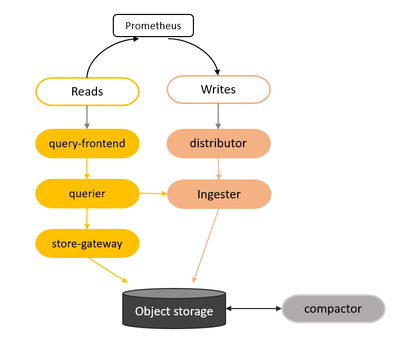

Mimir: a solution to multi-tenancy with Prometheus

Mimir is another solution that addresses multi-tenancy for Prometheus. It was originally developed by Grafana Labs under the AGPLv3 licence and was recently introduced to the CNCF as a sandbox project. Mimir was built as a fork of Cortex and keeps Cortex features such as out-of-the-box multitenancy, high availability, horizontal scalability, and long-term storage. It adds features from GEM (Grafana Enterprise Metrics) and Grafana Cloud that operate at massive scale. These include unlimited cardinality through a horizontally scalable “split” compactor, and fast high-cardinality queries through a sharded query engine.
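The idea behind a sharded query engine can be illustrated with sum(). Series are partitioned into disjoint shards by hashing, each shard's partial sum can be computed independently (for example by different queriers), and the partial results are then combined.

This is a simplified sketch. It assumes CRC-32 as the shard hash, which is not necessarily what Mimir uses, and the function names are hypothetical. Only aggregations that can be decomposed this way can be sharded like this.

```python
import zlib


def shard_of(series: str, shard_count: int) -> int:
    # Illustrative shard assignment by hashing the series identity.
    return zlib.crc32(series.encode()) % shard_count


def sharded_sum(series_values, shard_count=4):
    """series_values: {series: value}. Computes sum() as the sum of per-shard partial sums."""
    partials = [0.0] * shard_count
    for series, value in series_values.items():
        partials[shard_of(series, shard_count)] += value  # each series lands in one shard
    # Each partial could run in parallel on a different querier; the final merge is cheap.
    return sum(partials), partials
```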

Grafana Mimir Architecture

Grafana Mimir follows a microservices architecture and has multiple horizontally scalable components (aka microservices) that can run separately and in parallel. Since Mimir is a fork of Cortex, its components are essentially the same as Cortex’s. Most of them are stateless and do not require any data to be persisted between process restarts. The stateful ones rely on non-volatile storage to prevent data loss between restarts.

On the read path, queries coming into Grafana Mimir arrive at the query-frontend. The query-frontend then splits queries over longer time ranges into multiple, smaller queries. The query-frontend next checks the results cache. If the result of a query has been cached, the query-frontend returns the cached results.
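The splitting and caching steps can be sketched as follows, under two assumptions:

- The split interval is one day, with sub-ranges aligned to day boundaries and expressed as inclusive millisecond ranges.
- The cache is a plain dict keyed by query and sub-range.

The `execute` callable is a hypothetical stand-in for sending a sub-query to the queriers. A production frontend also avoids caching very recent, still-changing results, which this sketch ignores.

```python
DAY_MS = 24 * 60 * 60 * 1000


def split_by_interval(start_ms, end_ms, interval_ms=DAY_MS):
    """Split the inclusive range [start_ms, end_ms] into interval-aligned sub-ranges."""
    ranges = []
    cur = start_ms
    while cur <= end_ms:
        boundary = cur - cur % interval_ms + interval_ms  # next aligned boundary
        sub_end = min(end_ms, boundary - 1)
        ranges.append((cur, sub_end))
        cur = sub_end + 1
    return ranges


def run_query(query, start_ms, end_ms, cache, execute):
    """Answer each sub-range from the cache when possible, otherwise execute it."""
    results = []
    for sub in split_by_interval(start_ms, end_ms):
        key = (query, sub)
        if key not in cache:
            cache[key] = execute(query, *sub)  # cache miss: hand off to the queriers
        results.append(cache[key])
    return results
```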

Queries that cannot be answered from the results cache are put into an in-memory queue within the query-frontend. The queriers act as workers, pulling queries from the queue. The queriers connect to the store-gateways and the ingesters to fetch all the data needed to execute a query. After the querier executes the query, it returns the results to the query-frontend for aggregation. The query-frontend then returns the aggregated results to the client.
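The queue-and-workers pattern in the paragraph above can be sketched with the standard library. The frontend enqueues queries, querier threads pull and execute them, and the frontend gathers the results before replying.

This is a toy model. The `execute` callable stands in for fetching data from store-gateways and ingesters, and per-tenant queue fairness is not modeled.

```python
import queue
import threading


def run_frontend(queries, execute, num_queriers=3):
    """Toy query-frontend: enqueue queries and let querier threads pull and run them."""
    work = queue.Queue()
    results = {}
    lock = threading.Lock()

    def querier():
        while True:
            item = work.get()
            if item is None:  # sentinel: no more work for this querier
                return
            qid, q = item
            value = execute(q)
            with lock:
                results[qid] = value

    workers = [threading.Thread(target=querier) for _ in range(num_queriers)]
    for w in workers:
        w.start()
    for qid, q in enumerate(queries):
        work.put((qid, q))
    for _ in workers:
        work.put(None)  # one sentinel per querier, queued after all real work
    for w in workers:
        w.join()
    # The frontend aggregates results in the original order before replying to the client.
    return [results[i] for i in range(len(queries))]
```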

On the write path, ingesters receive incoming samples from the distributors. Each push request belongs to a tenant, and the ingester appends the received samples to that tenant’s TSDB, stored on local disk. Received samples are both kept in memory and written to a write-ahead log (WAL), so if the ingester terminates abruptly, the WAL can be used to recover the in-memory series. The per-tenant TSDB is created lazily in each ingester as soon as the first samples for that tenant arrive. When a new TSDB block is created, the in-memory samples are flushed to disk and the WAL is truncated. By default, this happens every two hours.
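The WAL mechanism can be sketched in a few lines: append each sample to a log file before keeping it in memory, replay the log on startup, and truncate it when a block is cut. This is a toy under assumptions. It uses one JSON record per line rather than the real binary, segmented WAL format, and the `ToyIngesterTSDB` class is hypothetical.

```python
import json
import os
import tempfile


class ToyIngesterTSDB:
    """Hypothetical per-tenant head: samples go to a WAL file before being kept in memory."""

    def __init__(self, wal_path):
        self.wal_path = wal_path
        self.head = {}  # series -> list of (timestamp_ms, value)
        self._replay()

    def _replay(self):
        # After a crash, rebuild the in-memory series by re-reading the WAL.
        if not os.path.exists(self.wal_path):
            return
        with open(self.wal_path) as f:
            for line in f:
                rec = json.loads(line)
                self.head.setdefault(rec["series"], []).append((rec["ts"], rec["value"]))

    def append(self, series, ts, value):
        with open(self.wal_path, "a") as f:
            f.write(json.dumps({"series": series, "ts": ts, "value": value}) + "\n")
            f.flush()
            os.fsync(f.fileno())  # make the record durable before acknowledging it
        self.head.setdefault(series, []).append((ts, value))

    def cut_block(self):
        """Hand in-memory samples to a (not modeled) block writer and truncate the WAL."""
        block, self.head = self.head, {}
        open(self.wal_path, "w").close()
        return block


wal = os.path.join(tempfile.mkdtemp(), "team-a.wal")
db = ToyIngesterTSDB(wal)
db.append('up{job="api"}', 1000, 1.0)
recovered = ToyIngesterTSDB(wal)  # simulates a restart after an abrupt termination
```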

Comparison: Cortex vs Mimir

Both tools solve more or less the same problems, with one (Mimir) being a fork of the other (Cortex). Let’s look at the differences, and the many similarities, between the two solutions.

| Feature | Cortex | Mimir |
|---|---|---|
| Licence | Apache 2.0 | AGPLv3 |
| Multi-tenancy | Yes | Yes |
| High availability | Yes | Yes |
| Horizontal scalability | Yes | Yes |
| Long-term storage on object storage | Yes | Yes |
| High-cardinality queries | No | Yes |
| API for identifying high cardinality | No | Yes |
| Unlimited cardinality with two-stage compactor | No | Yes |
| Graphite, OpenTelemetry, Influx and Datadog-compatible metrics ingestion | No | Yes |

Conclusion

As we can see, Cortex and Mimir complement Prometheus very well by filling in the features it lacks on its own: multitenancy, horizontal scaling, high availability, and long-term storage. Mimir goes one step further. It supports metrics ingestion not only from Prometheus but also from Graphite, OpenTelemetry, Influx and Datadog-compatible systems, and provides high and unlimited cardinality with its two-stage compactor. That way, queries per tenant are even faster, and the problem of one tenant consuming another tenant’s resources is tackled.

No matter which solution you pick, monitoring and observability are essential components of the cloud-native paradigm. It is encouraging to see continued convergence around the core primitives that have emerged organically from the Prometheus community. Both solutions are used in production at massive scale, handling millions of active series, by many companies such as AWS, EA, Etsy, DigitalOcean and more.

How HPE Services can assist you in your cloud native monitoring journey

HPE Services can accelerate your journey to seamlessly integrating state-of-the-art tools and best practices for container adoption, including cloud-native monitoring. The HPE Observability Service brings together the power of many open-source tools like Prometheus, Cortex, Mimir, Thanos, Grafana, Alertmanager and many others to build the right monitoring platform for your company’s needs. Enable enterprise-level insight into your microservices infrastructure and applications today.

Learn more about advisory and professional services from HPE Services.

Services Experts

Hewlett Packard Enterprise

twitter.com/HPE_Services

linkedin.com/showcase/hpe-services/

hpe.com/services
