MSA 2050 Virtual Disk theoretical performance

03-20-2022 02:50 AM

Dear HPE Community,

One of our solutions has an MSA 2050 storage array with 14 SAS disks, model EG001200JWJNQ. The disks show the following performance statistics:

Bps and IOPS are the fourth and fifth columns, respectively (for example, 30.7MB and 387 for disk 1.1):

1.1 WFK3DA7Q 22201 30.7MB 387 25195176 6577851 2529.3GB 809.0GB 0B 0B 2022-03-18 13:54:40

1.2 WFK3FAW9 22201 30.7MB 389 25866989 6686747 2529.3GB 809.1GB 0B 0B 2022-03-18 13:54:40

1.3 WFK3DBZ2 22201 30.7MB 389 25850522 6750169 2529.3GB 809.1GB 0B 0B 2022-03-18 13:54:40

1.4 WFK3BSTL 22201 30.7MB 390 25850449 6782619 2529.3GB 809.1GB 0B 0B 2022-03-18 13:54:40

1.5 WFK3DC6Z 22201 30.7MB 389 25853522 6761658 2529.3GB 809.1GB 0B 0B 2022-03-18 13:54:40

1.6 WFK3DA2W 22201 30.7MB 390 25854218 6779723 2529.3GB 809.1GB 0B 0B 2022-03-18 13:54:40

1.7 WFK3DANB 22201 30.7MB 391 25857470 6873284 2529.3GB 809.1GB 0B 0B 2022-03-18 13:54:40

1.8 WFK3DBL6 22201 30.7MB 391 25701885 6808290 2498.6GB 804.0GB 0B 0B 2022-03-18 13:54:40

1.9 WFK3KC2Z 22198 30.7MB 390 25702162 6745543 2498.6GB 804.0GB 0B 0B 2022-03-18 13:54:40

1.10 WFK3FAGJ 22201 30.7MB 387 25059504 6571071 2498.6GB 804.0GB 0B 0B 2022-03-18 13:54:40

1.11 WFK3F7NR 22202 30.7MB 391 25059805 6827148 2498.6GB 804.0GB 0B 0B 2022-03-18 13:54:40

1.12 WFK3BSW8 22201 30.7MB 391 25058957 6817976 2498.6GB 804.0GB 0B 0B 2022-03-18 13:54:40

1.13 WFK3D8FR 22201 30.7MB 391 25057847 6821250 2498.6GB 804.0GB 0B 0B 2022-03-18 13:54:40

1.14 WFK3D9R4 22201 30.7MB 390 25044545 6777423 2498.6GB 804.0GB 0B 0B 2022-03-18 13:54:40

For a physical disk, this looks like very good performance.

Based on the required partition sizes, all disks are in a single disk group in RAID 5, which allows 15.5 TB of data to be distributed across virtual volumes. We have only a single controller active, that is, 16 Gbps of FC throughput, but the I/O is also spread in parallel across many disks.

I have been reconfiguring our storage, which gave me a chance to run performance tests on it, specifically I/O tests against a single virtual volume.

The test consists of creating a large virtual volume of 7 TB, around half of my pool; the virtual volume feature is expected to distribute its I/O across many disks in parallel. The FC port is a 16 Gbps port, and my server has a 32 Gbps card that is backward compatible with lower speeds, so with a single controller at 16 Gbps the expected bandwidth is 1600 MB/s.

It is true that I am writing to RAID 5, but a large test filling a file inside this 7 TB virtual volume started at around 1200 MB/s, which is due to the controller cache. It soon dropped to around 400 MB/s, and the final statistics are:

11801255936 bytes (12 GB) copied, 11.491852 s, 1.2 GB/s

..

6841947537408 bytes (6.8 TB) copied, 14091.999298 s, 486 MB/s

dd: error writing ‘/mnt/tmp/largeZero.tmp’: No space left on device

835242772+0 records in

835242771+0 records out

6842308780032 bytes (6.8 TB) copied, 14092.9 s, 486 MB/s

real 234m52.951s

user 12m53.360s

sys 108m44.882s
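The dd summary can be cross-checked with a little arithmetic. This is a minimal sketch in Python that only uses the numbers printed above; the variable names are illustrative, and the exact dd command line is not shown in the post, so the record size is inferred rather than known.

```python
# Figures copied from the final dd summary lines above.
total_bytes = 6842308780032   # bytes copied
records_out = 835242771       # full records written
elapsed_s = 14092.9           # elapsed seconds

# Bytes per dd record (inferred, since the command itself is not shown).
block_size = total_bytes // records_out

# Average throughput in decimal MB/s, the unit dd reports.
throughput_mb_s = total_bytes / elapsed_s / 1e6

print(f"record size: {block_size} bytes")
print(f"average throughput: {throughput_mb_s:.1f} MB/s")
```

The byte count divides evenly into 8192-byte records, which suggests the test wrote in 8 KiB blocks, and the average works out to about 485.5 MB/s, in line with the 486 MB/s that dd reports.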

On the storage, I see:

Name  Serial Number  Bps  IOPS  Reads  Writes  Data Read  Data Written  Allocated Pages  % Performance  % Standard  % Archive  % RC  Reset Time
--------------------------------------------------------------------------------------------------------------------------------------------------
lvol_backup  00c0ff29b52e0000c3c1346201000000  486.4MB  465  15018  8799486  154.3MB  9.1TB  478193  0  100  0  0  2022-03-18 13:53:44

and on controller:

Durable ID  CPU Load  Power On Time (Secs)  Bps  IOPS  Reads  Writes  Data Read  Data Written  Num Forwarded Cmds  Reset Time  Total Power On Hours
--------------------------------------------------------------------------------------------------------------------------------------------------
controller_A  17  99132  494.6MB  473  44780  9179820  1073.2MB  9.4TB  0  2022-03-18 13:53:44  22201.45
controller_B  1  99829  0B  0  15466  0  391.9MB  0B  0  2022-03-18 13:42:06  22201.14
--------------------------------------------------------------------------------------------------------------------------------------------------

Success: Command completed successfully. (2022-03-19 17:26:41)

I cannot understand why, with so many disks in parallel, each performing well, and even with a single controller, I cannot get closer to the theoretical performance bottlenecked by the FC transport, around 1600 MB/s.
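To put the gap in numbers, here is a small Python sketch that compares the measured average with the nominal 1600 MB/s figure used in this post for one 16 Gbps FC port, and sums the per-disk Bps column from the disk statistics. The constant names are illustrative, and the disk and volume snapshots were not necessarily taken at the same instant, so the per-disk sum is only a rough cross-check.

```python
FC_16G_NOMINAL_MB_S = 1600    # nominal per-port figure quoted in the post
measured_mb_s = 486           # final dd average
disks = 14
per_disk_mb_s = 30.7          # Bps column, the same for every disk listed

# Share of the nominal FC port bandwidth actually reached.
fc_fraction = measured_mb_s / FC_16G_NOMINAL_MB_S

# Aggregate of the per-disk throughput figures.
aggregate_disk_mb_s = disks * per_disk_mb_s

print(f"fraction of FC nominal: {fc_fraction:.0%}")
print(f"sum of per-disk Bps: {aggregate_disk_mb_s:.1f} MB/s")
```

Under these figures the test reaches roughly 30% of the nominal port bandwidth, and the per-disk values add up to about 430 MB/s, the same order as the volume throughput.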

I see iowait increase when writing heavily to the storage. I lost the instantaneous iowait values from my test, but even after a night with zero I/O the average remains above zero:

$ iostat

Linux 3.10.0-1062.el7.x86_64 (cgw01) 03/20/2022 _x86_64_ (24 CPU)

avg-cpu: %user %nice %system %iowait %steal %idle

0.18 0.00 1.16 1.98 0.00 96.67

During writes it goes above 8%, which is also unclear to me, since I am using this system only for testing, with no other parallel I/O to the storage.

It is much less, almost like a single local pair of disks in RAID. I was expecting much better performance on average. It is not bad performance, but I would like to understand the theoretical performance expected from the MSA 2050.

Any comments about it?

I am just trying to better understand what could affect performance when defining the storage configuration.

A final comment: both the server (DL360 Gen10) and the MSA 2050 are up to date with regard to firmware and drivers.

Another comment: we also have other systems with 3PAR, which uses RAID 6 natively. 3PAR performance is exceptional.

03-20-2022 03:51 AM

Query: MSA 2050 Virtual Disk theoretical performance

System recommended content:

1. HPE MSA 2050 Storage - Supported Configurations

03-20-2022 03:06 PM

Re: Query: MSA 2050 Virtual Disk theoretical performance

Dear Support and Community,

I had already looked at these documents, as well as others. The MSA 2050 SAN Storage document also states:

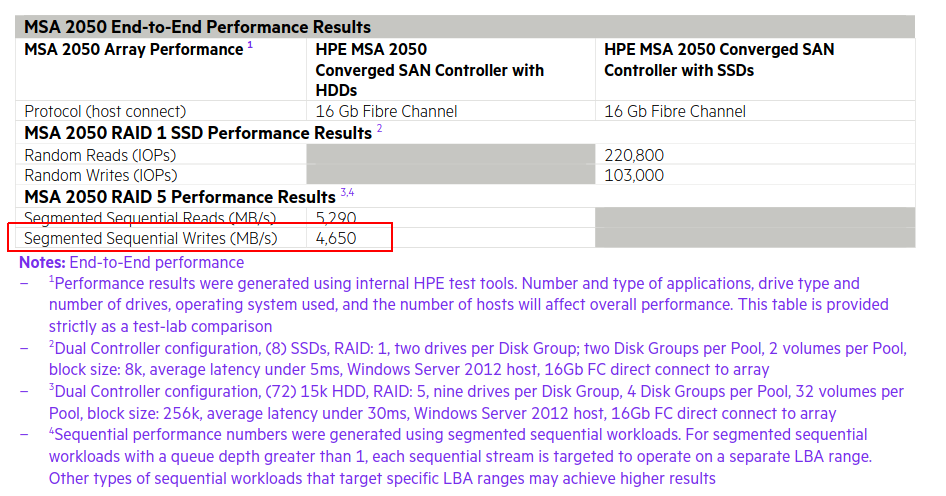

Note that the performance with 4 DGs and 4 ports is supposed to be 4650 MB/s, which is less than the theoretical throughput of 4 x 16 Gbps FC ports. In terms of FC ports, the throughput is supposed to be no more than 4 x 1.6 GB/s, or 6.4 GB/s. RAID 5 writes are not as fast, so 4.65 GB/s is pretty reasonable.

With my configuration I expected something around 1/4 of this speed. Although I do not have 4 DGs and 4 FC ports in use simultaneously, I do have more disks in the disk group, so the bottleneck is expected to be FC itself and, to a lesser extent, RAID 5 writes, as I wrote in the previous paragraph.
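The expectation described here can be written out as arithmetic. The following Python sketch uses only the figures quoted in this post (4650 MB/s for 4 DGs on 4 ports, 1600 MB/s per port); the variable names are illustrative, and the "one quarter" scaling is the poster's assumption, not a documented rule.

```python
quoted_total_mb_s = 4650      # figure quoted for 4 disk groups on 4 FC ports
ports = 4
per_port_mb_s = 1600          # nominal 16 Gbps FC port figure used in the post

# Upper bound set by the four FC ports together.
fc_ceiling_mb_s = ports * per_port_mb_s

# How close the quoted figure comes to that bound.
quoted_vs_ceiling = quoted_total_mb_s / fc_ceiling_mb_s

# The poster's expectation: about one quarter for 1 DG on 1 port.
expected_single_mb_s = quoted_total_mb_s / 4

print(f"FC ceiling: {fc_ceiling_mb_s} MB/s")
print(f"quoted / ceiling: {quoted_vs_ceiling:.0%}")
print(f"expected for one DG and one port: {expected_single_mb_s} MB/s")
```

This gives a 6400 MB/s ceiling, a quoted figure at about 73% of it, and an expectation of about 1160 MB/s for the single-DG case.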

But what I see is much less: if I bypass the OS-level cache by using direct writes, I see no more than 500 MB/s.

Directly on the multipath device (storage)

Directly on the LVM device

Throughput (R/W): 1KiB/s / 491,902KiB/s

Events (dm-13): 525,267 entries

Skips: 0 forward (0 - 0.0%)

Throughput (R/W): 1KiB/s / 491,906KiB/s

Events (dm-26): 117,866 entries

Skips: 0 forward (0 - 0.0%)
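The trace output reports throughput in KiB/s, while the rest of the thread uses MB/s. A minimal Python conversion, assuming the usual definitions of 1 KiB = 1024 bytes and 1 MB = 10^6 bytes (the function name is illustrative):

```python
def kib_s_to_mb_s(kib_per_s: float) -> float:
    """Convert KiB/s (1024-byte units) to decimal MB/s (10**6 bytes)."""
    return kib_per_s * 1024 / 1e6

# Write throughput reported for the two devices above.
multipath_mb_s = kib_s_to_mb_s(491_902)
lvm_mb_s = kib_s_to_mb_s(491_906)

print(f"{multipath_mb_s:.1f} MB/s, {lvm_mb_s:.1f} MB/s")
```

Both values come out at roughly 504 MB/s, which matches the "no bigger than 500 MB/s" observation for direct writes.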

This is the real MSA 2050 performance with 14 disks in RAID 5, in 1 DG and one pool.

I was expecting at least 1 GB/s, since I have no other I/O on this system.

I start with more if I do not test with direct I/O, but that is the OS I/O cache. If the file is large enough, it converges to the real I/O performance.

All best practices are in place as far as possible. I cannot use multiple pools because my partition size requirements do not allow me to split the disks into two disk groups.

The performance is not a problem; I just do not understand why a single pool/DG performs so far below the theoretical figure. As I stated before, I expected at least 1 GB/s, but I get no more than 500 MB/s of throughput.

03-23-2022 09:11 AM

Solution

Hi Rodrigo,

The disk groups are not configured as per the best practice recommendations. From the MSA best practices guide, pages 18 and 19:

https://www.hpe.com/psnow/doc/a00015961enw?jumpid=in_lit-psnow-red

For optimal write sequential performance, parity-based disk groups (RAID 5 and RAID 6) should be created with “the power of 2” method. This method means that the number of data drives (nonparity) contained in a disk group should be a power of 2.

I have seen significant sequential write performance improvement when disk groups are configured as per this method.

I don't think the sequential write throughput mentioned in the QuickSpecs could be achieved with a single disk group.

MSA 2050 RAID 5 Hard Disk Drive (HDD) sequential results: Dual Controller configuration, (72) 15K HDD, 9 drives per disk group, 4 disk groups per pool, 4 volumes per pool
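The "power of 2" rule quoted above can be checked mechanically. This is a minimal Python sketch, assuming one parity drive for RAID 5 and two for RAID 6; the helper names are hypothetical and not part of any HPE tool.

```python
PARITY_DRIVES = {5: 1, 6: 2}  # parity drives per disk group, by RAID level


def data_drives(total_drives: int, raid_level: int) -> int:
    """Number of non-parity drives in a parity-based disk group."""
    return total_drives - PARITY_DRIVES[raid_level]


def is_power_of_two(n: int) -> bool:
    """True when n is 1, 2, 4, 8, ... (a single bit set)."""
    return n > 0 and (n & (n - 1)) == 0


# 14-drive RAID 5 group from this thread vs. the 9-drive groups in the quoted test.
for total in (14, 9):
    d = data_drives(total, 5)
    print(f"RAID 5, {total} drives: {d} data drives, power of 2: {is_power_of_two(d)}")
```

The 14-drive RAID 5 group has 13 data drives, which is not a power of 2, while the 9-drive groups in the quoted test configuration have 8 data drives, which is.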

While I am an HPE Employee, all of my comments (whether noted or not), are my own and are not any official representation of the company

04-02-2022 11:43 AM

Re: Query: MSA 2050 Virtual Disk theoretical performance

ArunKKR,

Sorry for my late answer, and first of all thanks a lot for the comments.

The issue is that this solution was originally created with a single DG, because the volume and space requirements do not allow me to break it into two or more DGs.

For example, if I divided it into 2 disk groups, I could not have a single volume larger than half of these disks, or around 6.5 TB. One issue is that this solution has a backup partition of 7 TB, which is larger than, though close to, the largest volume one such DG could hold. So I would not be able to create it, and the I/O would not be distributed evenly anyway, since the backup volume is not always in use. Based on sizing, and to avoid dedicating one more disk to parity, I decided to keep a single DG, since we cannot easily get more disks right now.

I see your point and it makes sense. I did not expect sequential write performance to drop this much. My expectation, having more disks than in the HPE tests, was that the limit would be the FC port speed, reduced of course by around 30% or a little more. This would match roughly 4.3 GB/s divided by 4, or around 1 GB/s per DG, with 4 DGs in 2 pools and all 4 ports used with a server.

But I get your point: without SSDs and with the original sizing, we will probably have to live with the roughly 550 MB/s throughput we see in tests. Of course, the OS cache also increases this speed when we are not writing to the storage continuously.

Thanks a lot for the clarification, including where we could improve this particular configuration with a single DG.