
HPE Machine Learning Development System: Real-world NLP & computer vision model benchmarks

Learn the details of a recent benchmarking experiment comparing the HPE Machine Learning Development System with a leading cloud provider, using two of the most popular open source libraries of cutting-edge deep learning models: HuggingFace for natural language processing and MMDetection for computer vision.

By Evan Sparks, VP of HPC & AI at HPE

To cloud or not to cloud: An empirical opinion

As we designed the HPE Machine Learning Development System, we followed these key tenets:

- The target users are machine learning (ML) engineers and researchers.

- The system should be built for scale, using the best available industry-standard components – and it should be performant out of the box.

- Given the target user personas, we made sure that the workloads we designed for were real-world applications, not heavily tuned benchmarks that don't reflect real-world tasks.

Establishing the workloads

We selected two representative workloads from two of the most popular open source libraries of cutting-edge deep learning models: HuggingFace for natural language processing (NLP) and MMDetection for computer vision.

Object detection models are used to help self-driving cars “see” everything from pedestrians to stoplights. Both libraries are available in the Determined Model Hub, and we used the PyTorch implementations for these workloads.

NLP workload:

- We selected one of the top BERT models from HuggingFace.

- We trained on the XNLI dataset with a base batch size of 64.

Computer vision workload:

- We selected a Faster R-CNN implementation from MMDetection with a ResNet-50 backbone.

- We trained on the PASCAL VOC dataset with a base batch size of 4.

Both models are trained with the AdamW optimizer using data-parallel training. Importantly, we are showing weak-scaling throughput results for these models; that is, the total batch size grows linearly with the number of GPUs. The two workloads differ in their ratio of model weights (and therefore inter-node bandwidth requirements) to the compute needed per training step, and that ratio makes the NLP workload much more communication-heavy. This will be important as we examine the results.
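Data-parallel training under weak scaling can be illustrated with a small, self-contained simulation. This is a sketch under stated assumptions, not the benchmark code: a hypothetical toy linear-regression task stands in for BERT and Faster R-CNN, each "worker" is a plain Python loop rather than a GPU, and the hypothetical `all_reduce_mean` helper stands in for the gradient all-reduce that, in PyTorch, `torch.nn.parallel.DistributedDataParallel` performs during the backward pass. The `AdamW` class is a minimal reimplementation of the decoupled-weight-decay update used by `torch.optim.AdamW`; all names and hyperparameters here are illustrative.

```python
import math
import random


def local_gradient(w, b, xs, ys):
    """Mean-squared-error gradient for the toy model y ~ w*x + b on one worker's shard."""
    n = len(xs)
    residuals = [w * x + b - y for x, y in zip(xs, ys)]
    gw = sum(2 * r * x for r, x in zip(residuals, xs)) / n
    gb = sum(2 * r for r in residuals) / n
    return gw, gb


def all_reduce_mean(grads):
    """Hypothetical stand-in for an all-reduce: average each gradient component across workers."""
    k = len(grads)
    return tuple(sum(g[i] for g in grads) / k for i in range(len(grads[0])))


class AdamW:
    """Minimal AdamW (Adam with decoupled weight decay) over a list of scalar parameters."""

    def __init__(self, params, lr=0.05, betas=(0.9, 0.999), eps=1e-8, weight_decay=0.01):
        self.params = list(params)
        self.lr, self.eps, self.wd = lr, eps, weight_decay
        self.b1, self.b2 = betas
        self.m = [0.0] * len(self.params)
        self.v = [0.0] * len(self.params)
        self.t = 0

    def step(self, grads):
        self.t += 1
        for i, g in enumerate(grads):
            # Decoupled weight decay shrinks the weight directly instead of altering the gradient.
            p = self.params[i] * (1 - self.lr * self.wd)
            self.m[i] = self.b1 * self.m[i] + (1 - self.b1) * g
            self.v[i] = self.b2 * self.v[i] + (1 - self.b2) * g * g
            m_hat = self.m[i] / (1 - self.b1 ** self.t)  # bias-corrected first moment
            v_hat = self.v[i] / (1 - self.b2 ** self.t)  # bias-corrected second moment
            self.params[i] = p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps)


def mse(w, b, xs, ys):
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train_data_parallel(num_workers, base_batch, steps=200, seed=0):
    """Weak scaling: each worker keeps base_batch samples, so the global batch grows with workers."""
    rng = random.Random(seed)
    global_batch = base_batch * num_workers
    opt = AdamW([0.0, 0.0])  # every replica starts from the same weights
    for _ in range(steps):
        xs = [rng.uniform(-1.0, 1.0) for _ in range(global_batch)]
        ys = [3.0 * x + 1.0 for x in xs]  # hypothetical toy target y = 3x + 1
        # Split the global batch into equal shards, one per worker.
        shards = [(xs[r::num_workers], ys[r::num_workers]) for r in range(num_workers)]
        w, b = opt.params
        local = [local_gradient(w, b, sx, sy) for sx, sy in shards]  # per-worker forward/backward
        opt.step(all_reduce_mean(local))  # same averaged update on every replica keeps them in sync
    return opt.params, global_batch


if __name__ == "__main__":
    params, global_batch = train_data_parallel(num_workers=4, base_batch=16)
    print(global_batch)  # 64
    print(params)
```

Because every worker applies the same averaged gradient, the replicas never drift apart. The price is that the full gradient buffer has to cross the network on every step, which is why the workload with more weights per unit of compute is the more communication-bound one.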

Establishing benchmark environments

We compared the HPE Machine Learning Development System against a leading public cloud provider, using a standard instance type with eight NVIDIA A100 GPUs. This is a similar configuration to the HPE Apollo 6500 Gen10 Plus systems that serve as the building block of the HPE Machine Learning Development System. On this cloud provider, these nodes are equipped with standard 100 Gbps networking links.

The HPE Machine Learning Development System, by contrast, has eight NVIDIA A100 80GB GPUs on each compute node. Nodes are connected via an InfiniBand fabric, giving each node 800 Gbps of aggregate bandwidth.
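The effect of link speed on gradient exchange can be estimated with back-of-the-envelope arithmetic. The sketch below computes an idealized lower bound under assumptions that are not from the article: gradients are reduced inside each node first, then a ring all-reduce runs across nodes; gradients are fp32; and the model size of about 110M parameters is a hypothetical BERT-base-sized stand-in, since the exact BERT variant is not named. It ignores latency, protocol overhead, and overlap of communication with compute, so real step times will differ.

```python
def allreduce_bytes_per_node(num_params: int, num_nodes: int, bytes_per_param: int = 4) -> float:
    """Bytes each node pushes over the network per step in a ring all-reduce among nodes.

    Assumes an intra-node reduction first, then a ring all-reduce (reduce-scatter plus
    all-gather) across nodes, which moves 2*(k-1)/k of the gradient buffer per node.
    """
    if num_nodes <= 1:
        return 0.0  # a single node needs no inter-node traffic
    return 2 * (num_nodes - 1) / num_nodes * num_params * bytes_per_param


def min_transfer_seconds(num_bytes: float, link_gbps: float) -> float:
    """Idealized lower bound: ignores latency, protocol overhead, and overlap with compute."""
    return num_bytes * 8 / (link_gbps * 1e9)


if __name__ == "__main__":
    # Hypothetical: ~110M-parameter model, fp32 gradients, 32 GPUs = 4 nodes of 8 GPUs.
    traffic = allreduce_bytes_per_node(110_000_000, num_nodes=4)
    for gbps in (100, 800):
        ms = min_transfer_seconds(traffic, gbps) * 1e3
        print(f"{gbps} Gbps: {ms:.1f} ms per step")  # prints 52.8 ms, then 6.6 ms
```

Under these assumptions the 8x bandwidth gap becomes an 8x gap in the minimum communication time per step; how much of it shows up in end-to-end throughput depends on how much compute each step has available to hide it.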

The results

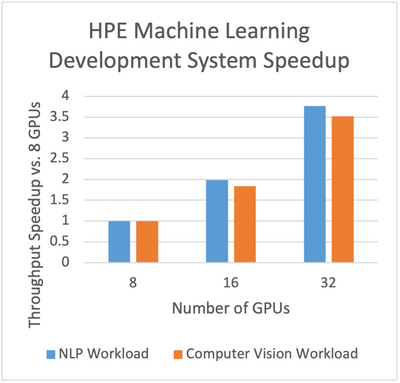

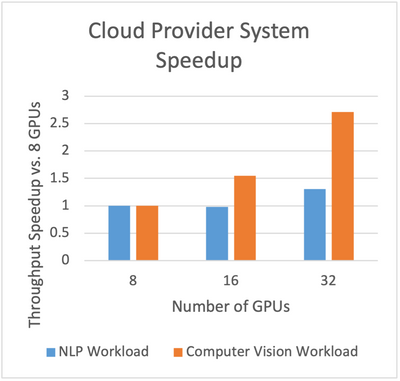

For these real-world benchmarks, the HPE Machine Learning Development System compares extremely favorably with the large cloud provider system, particularly on the NLP workload.

Compared with the cloud provider system, the HPE Machine Learning Development System scales extremely well up to 32 GPUs, delivering approximately 90% scaling efficiency on both workloads. The difference comes primarily from the communication fabric and its effect on the NLP workload, which is network-bottlenecked on the cloud provider system, and it shows. After each forward and backward pass, nodes must exchange their local updates to the model weights. On the cloud provider system, the communication overhead of this gradient exchange is large enough that training on two nodes is actually slower than training on one. With the HPE Machine Learning Development System, we see near-linear scalability up to 32 GPUs on the NLP workload. Both systems scale well on the computer vision task, because at this batch size it is far less network-constrained.
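Scaling efficiency, as used above, is measured throughput divided by the throughput that perfectly linear scaling from a baseline run would deliver. A minimal sketch; the throughput figures in the usage lines are hypothetical round numbers chosen to illustrate the definition, not values read from the benchmark charts.

```python
def scaling_efficiency(base_throughput: float, base_gpus: int, throughput: float, gpus: int) -> float:
    """Measured throughput divided by ideal linear scaling from the baseline run."""
    ideal = base_throughput * gpus / base_gpus
    return throughput / ideal


if __name__ == "__main__":
    # Hypothetical throughputs in samples/s (illustrative only, not the benchmark's numbers).
    print(scaling_efficiency(1000.0, 8, 3600.0, 32))  # 0.9, i.e. about 90% of linear scaling
    # Doubling the GPUs with efficiency below 0.5 means two nodes are slower than one.
    print(scaling_efficiency(1000.0, 8, 900.0, 16))  # 0.45
```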

Overall, on 32 GPUs the HPE Machine Learning Development System delivers 5.7x the throughput of the cloud provider system on the NLP workload, and slightly higher throughput on the computer vision workload.

Pushing to scale

We also tested the HPE Machine Learning Development System on a realistically sized customer cluster, and we continue to see excellent performance as we scale to hundreds of GPUs. Specifically, we see 72% to 73% of ideal scaling performance on 256 GPUs (32 nodes) for both workloads.
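The 256-GPU result can be unpacked with simple arithmetic. This sketch assumes the base batch sizes listed earlier (64 for NLP, 4 for computer vision) are per GPU, which the article does not state explicitly; the 8-GPUs-per-node figure comes from the system description. At 72% to 73% of ideal, 256 GPUs deliver the throughput of roughly 184 to 187 perfectly scaled GPUs.

```python
GPUS_PER_NODE = 8  # from the system description: 8x A100 per compute node


def weak_scaling_summary(num_gpus: int, base_batch: int, fraction_of_ideal: float) -> dict:
    """Arithmetic implied by a weak-scaling result reported as a fraction of ideal."""
    return {
        "nodes": num_gpus // GPUS_PER_NODE,
        "global_batch": base_batch * num_gpus,  # assumes base_batch is per GPU
        "effective_gpus": num_gpus * fraction_of_ideal,  # perfectly scaled GPU equivalents
    }


if __name__ == "__main__":
    for name, base in (("NLP", 64), ("CV", 4)):
        for frac in (0.72, 0.73):
            print(name, frac, weak_scaling_summary(256, base, frac))
```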

Key benchmark takeaways

The HPE Machine Learning Development System is purpose-built for large-scale machine learning and is performant out of the box for the most demanding real-world workloads.

Overall, these results show that the HPE Machine Learning Development System provides a high-performance platform for model developers to train real-world models quickly and at scale. Efficient scale-out means faster training, and faster training lets ML engineers and researchers iterate more quickly on their ideas.

Faster iteration means more models tried in a shorter period. In the case of computer vision, this could mean safer autonomous vehicles. In the case of NLP, it could mean better conversational experiences for your application’s end users.

Learn more about the HPE Machine Learning Development System


Read the press release: Hewlett Packard Enterprise Accelerates AI Journey from POC to Production with New Solution for AI Development and Training at Scale

