Read IOPS on Nimble Storage


09-21-2015 02:43 AM

Hello,

We have read about how Nimble boosts write performance by sequentializing random writes onto SATA HDDs.

As a result, each 7.2K HDD can reach up to 10,000 write IOPS (CS700), and the difference in write performance between CS arrays depends on the number of CPU cores, which determines how fast random writes can be sequentialized.
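To make the sequentialization idea concrete, here is a minimal Python sketch of log-structured writing: random 4 KiB writes are buffered in arrival order and flushed as one large sequential stripe, so the disk sees a few big writes instead of thousands of seeks. The 4 KiB block size, the 4.5 MiB stripe size, and the `WriteCoalescer` class and its attributes are all assumptions for illustration; this is not CASL's actual code.

```python
import random
from dataclasses import dataclass, field

BLOCK_SIZE = 4 * 1024        # assumed 4 KiB host write size
STRIPE_SIZE = 4608 * 1024    # assumed 4.5 MiB stripe; illustrative value only


@dataclass
class WriteCoalescer:
    """Hypothetical sketch: buffer random block writes and flush them
    to disk as one large sequential stripe."""
    stripe_size: int = STRIPE_SIZE
    block_size: int = BLOCK_SIZE
    buffer: list = field(default_factory=list)
    stripes_written: int = 0
    location: dict = field(default_factory=dict)  # LBA -> (stripe, slot)

    def write(self, lba: int) -> None:
        # Blocks are appended in arrival order, regardless of their address.
        self.buffer.append(lba)
        if len(self.buffer) * self.block_size >= self.stripe_size:
            self.flush()

    def flush(self) -> None:
        if not self.buffer:
            return
        # One sequential stripe write replaces many small random writes;
        # the map records where each logical block now lives.
        for slot, lba in enumerate(self.buffer):
            self.location[lba] = (self.stripes_written, slot)
        self.stripes_written += 1
        self.buffer.clear()


rng = random.Random(0)
coalescer = WriteCoalescer()
host_writes = rng.sample(range(10_000_000), 3 * 1152)  # 3,456 random 4 KiB writes
for lba in host_writes:
    coalescer.write(lba)
print(len(host_writes), "host writes ->", coalescer.stripes_written, "stripe writes")
```

Note that the `location` map is the price of this approach: once blocks are no longer stored at their original addresses, every later read needs a lookup to find them.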

But read IOPS on Nimble arrays don't depend on CPU speed the way write IOPS do.

And the read path goes to NVRAM and DRAM first, and then to SSD.

If an MLC SSD can deliver about 5,000 IOPS per drive, does that mean 4x SSDs give 20,000 read IOPS per array? Is the performance the same on all CS arrays, since they all have the same 4x SSDs?

Please explain this in more detail.

Thanks

09-23-2015 03:53 AM

Re: Read IOPS on Nimble Storage

Hi there, thanks for the question.

We have a great YouTube video that describes the read/write I/O path for random and sequential data, which will most likely answer most of your questions. But to summarise: Nimble's CASL file system does not bottleneck on SSD (we get far more than 5,000 IOPS per SSD); the bottleneck for read/write I/O is compute within the controller. Therefore it's feasible to achieve 15K random read IOPS on a CS2xx, yet with the same SSDs a CS500 can achieve >90K random read IOPS.
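The reasoning in this reply can be written as a two-limit model: the array delivers whichever is smaller, the aggregate SSD capability or what the controller CPUs can process. The sketch below is a simplification under stated assumptions; the 15,000 and 90,000 controller figures come from the reply above, while the per-SSD rates (5,000 and a hypothetical 40,000) are inputs chosen for comparison, not measurements.

```python
def array_read_ceiling(ssd_count: int, iops_per_ssd: int, controller_iops: int) -> int:
    """Random-read ceiling as the smaller of two limits: what the SSDs can
    deliver in aggregate, and what the controller CPUs can process."""
    ssd_limit = ssd_count * iops_per_ssd
    return min(ssd_limit, controller_iops)


# Controller ceilings quoted in the reply above; per-SSD rates are assumptions.
CONTROLLER_IOPS = {"CS2xx": 15_000, "CS500": 90_000}

for iops_per_ssd in (5_000, 40_000):
    for model, controller_iops in CONTROLLER_IOPS.items():
        ceiling = array_read_ceiling(4, iops_per_ssd, controller_iops)
        print(f"{model}: {iops_per_ssd:,} IOPS/SSD -> {ceiling:,} read IOPS")
```

With 5,000 IOPS per SSD, the CS500 would be capped at 20,000, so the >90K figure only fits if each SSD does well over 5,000; at 40,000 per SSD, the controller is the limit on both models.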

twitter: @nick_dyer_

09-23-2015 04:04 AM

Re: Read IOPS on Nimble Storage

Thanks for your answer.

But I still don't understand. I watched your video many times.

For write I/O, it's easy to understand.

But for read I/O, what exactly is computed in the CS controller to reach >30K random read IOPS on a CS300, or >90K random read IOPS on a CS500, with only 20,000 backend IOPS from 4x SSDs (4 x 5,000 IOPS, the SSD performance you mentioned)?

I mean, for 100% reads, if 90,000 IOPS arrive at the controller, won't those 90,000 IOPS then hit SSDs that can only do 20,000 IOPS? Why isn't there a bottleneck here? Shouldn't the performance be only 20,000 IOPS?

If not, could you please explain in more detail.

Thanks so much

09-24-2015 01:50 AM

Re: Read IOPS on Nimble Storage

Hi Tran,

An SSD can deliver far more than 5,000 read IOPS; more like 50K IOPS with 4K blocks. SSDs are slower on writes because of write amplification and garbage collection, and putting RAID on top makes the problem worse.

Nimble uses SSDs for what they do best: random reads without RAID overhead. The highest number I've seen on a CS700 using only 4x SSDs is 185K random read IOPS. That's roughly 46K IOPS per drive, and it's all because of CASL, our optimized file system, in which every type of media is used in the most efficient way.
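The per-drive figure in this reply is simple division, sketched below. The `ssd_share` parameter is an added assumption: it lets you model the case where part of the reads is served from DRAM rather than SSD, which lowers the load each SSD must carry. Even spreading of reads across drives is also assumed.

```python
def implied_iops_per_drive(array_iops: float, drive_count: int, ssd_share: float = 1.0) -> float:
    """Per-drive load if `ssd_share` of the array's reads are served from SSD
    and spread evenly across `drive_count` drives (both are assumptions)."""
    return array_iops * ssd_share / drive_count


print(implied_iops_per_drive(185_000, 4))  # 46250.0
```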

Thanks

09-24-2015 02:06 AM

Re: Read IOPS on Nimble Storage

Hi Peder,

I know CASL is very good, optimized for every type of media, and adapts to various block sizes, right?

But what I need to understand here is exactly what CASL does in the controllers to make a difference between CS models, even though all CS models (except the CS700) use the same SSD type (MLC) and the same number of SSDs (4 drives).

You said "An SSD drive can deliver far more than 5000 read iops, more like 50K iops when using 4K blocks". I don't agree with you; I believe a normal MLC SSD does about 5,000 random read IOPS at a 4K block size.

Thanks,

09-24-2015 07:22 AM

Re: Read IOPS on Nimble Storage

Many consumer-level SSDs, even budget models, can do 90K+ IOPS on 4K random reads.

09-24-2015 07:22 AM

Re: Read IOPS on Nimble Storage

Based on the information HERE, an MLC SSD can do 38,000 random read IOPS.

With that information, it is easy to see how a CS array can achieve very high random read throughput with only 4 SSDs.
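One way to check this claim is to compare the aggregate SSD capability with the array figures quoted earlier in the thread (>30K for a CS300, >90K for a CS500). The sketch below does that as a headroom ratio; it assumes reads spread evenly over the 4 SSDs and uses the 38,000 IOPS figure from this reply, with no other overheads modeled.

```python
def ssd_headroom(ssd_count: int, iops_per_ssd: int, target_iops: int) -> float:
    """Ratio of aggregate SSD read capability to a target array figure.
    A value of 1.0 or more means the SSDs alone are not the limiting factor."""
    return ssd_count * iops_per_ssd / target_iops


# 38,000 IOPS/SSD is the figure cited in this reply; the targets are the
# CS300 and CS500 numbers quoted earlier in the thread.
for model, target in {"CS300": 30_000, "CS500": 90_000}.items():
    print(model, round(ssd_headroom(4, 38_000, target), 2))
```

Both ratios come out above 1.0, which is consistent with the point that, at this per-SSD rate, the SSDs are not what caps these models.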

09-24-2015 09:37 PM

Re: Read IOPS on Nimble Storage

Hi Sullivan,

Could you confirm which SSD is used in Nimble Storage? There are many types of MLC SSD on the storage market.

You are just guessing at the type of SSD used in Nimble Storage. Can you prove it, or is there any public document that states the IOPS per SSD?

For my part, I searched many Nimble documents and found that the MLC SSD can reach about 5,000 random read IOPS (Nick Dyer also confirmed this above, and he said that random read performance depends on the controller?).

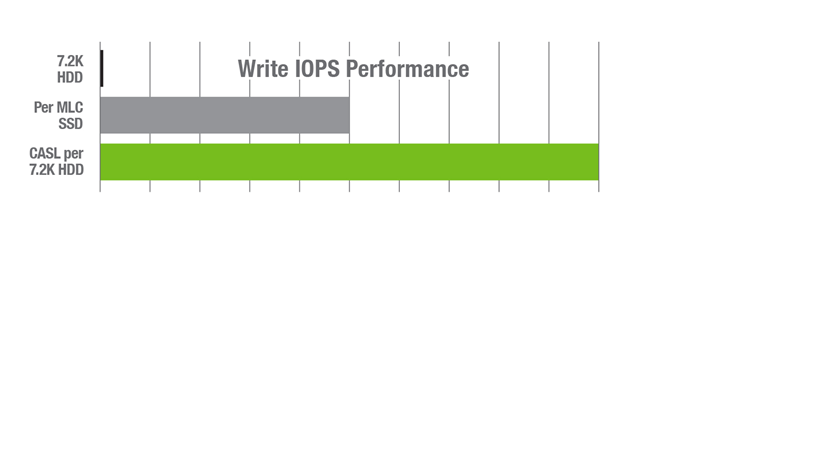

For example, see this slide from a Nimble presentation: the IOPS per MLC SSD reaches 5,000, and the IOPS per 7.2K HDD on a CS700 reaches 10,000.

So it seems you're not correct?

In addition, if Nimble Storage depended on the number and type of SSDs for random read IOPS, there would be no difference between Nimble Storage and other vendors. Besides, if it did depend on the number and type of SSDs, random read IOPS would also increase when we upgrade a CS array with an AFS, wouldn't they? That would be very similar to a traditional architecture and nothing special.

Finally, what I need to know is what the Nimble controller actually does to boost random read IOPS independently of the number and type of SSDs.

09-24-2015 11:46 PM

Re: Read IOPS on Nimble Storage

Hello,

There is no technical limit of 5,000 IOPS per SSD for MLC flash. As the other posters mention, it's entirely feasible to get 40-50K random read IOPS (4K blocks) from a single MLC SSD. Rather, other legacy enterprise storage vendors cannot achieve that level of performance because of their traditional, disk-based file systems, which is why they are capped at 2,500-5,000 IOPS per SSD (e.g. EMC and Dell, respectively).

Also, adding more SSDs to a Nimble solution does NOT increase the IOPS; it merely increases the working set available for hot data (i.e. we can serve more blocks from SSD to ensure ~97% of reads come from flash, and so achieve the top-end IOPS figure the array is capable of).
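Why more cache raises the share of flash reads but not the ceiling can be illustrated with a deliberately crude model: if every block in the working set is equally likely to be read, the hit ratio is cache size divided by working-set size, capped at 1.0. Misses go to the HDD tier, so a high hit ratio is what keeps slow disks from limiting total reads. Uniform access, the 2,000 GB working set, and the 2,000 IOPS HDD tier are all hypothetical inputs; real workloads are usually skewed, which helps caches considerably.

```python
def uniform_hit_ratio(cache_gb: float, working_set_gb: float) -> float:
    """Hit ratio if every block in the working set is equally likely to be
    read (a simplifying assumption; real workloads are usually skewed)."""
    return min(1.0, cache_gb / working_set_gb)


def miss_limited_iops(hit_ratio: float, hdd_iops: float) -> float:
    """Total read rate at which cache misses alone would saturate the HDD tier."""
    if hit_ratio >= 1.0:
        return float("inf")
    return hdd_iops / (1.0 - hit_ratio)


# Hypothetical sizes: a 2,000 GB working set and a 2,000 IOPS HDD tier.
for cache_gb in (1_200, 1_940, 2_400):
    h = uniform_hit_ratio(cache_gb, 2_000)
    print(f"cache {cache_gb} GB: hit ratio {h:.2f}, "
          f"miss-limited ceiling {miss_limited_iops(h, 2_000):.0f} IOPS")
```

Once the cache holds the whole working set, the hit ratio stops improving, so the ceiling is set by something else, in this thread's account the controller CPUs.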

It might be best to have one of our local SEs reach out to you to discuss this in more detail; it's very hard to describe over a forum. Can you please message me with your contact details and location?

twitter: @nick_dyer_

09-25-2015 12:54 AM

Re: Read IOPS on Nimble Storage

Hi Nick,

I'm also an SE, in Vietnam. I need this information to explain to my customer.

I asked someone from Nimble in Singapore, but so far no one has been able to explain in detail what the controller does to boost RANDOM READ IOPS.

I mean, what is different inside the Nimble controller compared with other traditional vendors' controllers?

I know your point that "adding more SSDs to a Nimble solution does NOT increase the IOPS". I agree with that, which is why I need to know exactly how Nimble can increase RANDOM READ IOPS without adding more SSDs.

You mentioned disk-based file systems. Could you give me a document or link showing how they limit the IOPS per MLC SSD to 2,500-5,000 instead of 40-50K?

09-25-2015 05:07 AM

Re: Read IOPS on Nimble Storage

Hi An.Tran,

I did find some internal information indicating that we used that particular SSD at one point; however, we do not publicly provide these kinds of details. Provided that both you and your customer have signed a non-disclosure agreement, I am sure the local Nimble Storage SEs could provide more details.

However, the exact SSD model is not relevant, because your assertion that a single SSD can only do 5,000 4K IOPS is not true. By researching just the Intel models at the same link I provided, you can find that a single SSD, not just that model, can do significantly more than that. In effect, your primary question, that there must be some secret method in CASL to achieve this performance, is not based on accurate facts. If you don't accept that a single SSD can produce more than 5,000 4K random IOPS, then I will never be able to show you how we do it.

The figure you show is actually for write IOPS, which confuses me, since this is a discussion about read throughput. Perhaps you can provide a figure that relates to read IOPS.

The reason that adding an AFS to a CS array does not increase the array's IOPS is that we scale performance and capacity independently. As Nick mentions, the AFS only adds capacity to the flash cache, which lets us keep a great deal more data on flash, meaning more read operations are served from that media. Performance is scaled by adding more and faster CPUs, which let us service more of those requests. If you look at the CS-series arrays, the primary difference in performance is the number and speed of the CPUs, not the number of disks. Disk-based storage systems, by contrast, scale random read performance by adding disks.

If you look at most traditional vendors' controllers, these systems use the CPU very differently. Also, the code on these controllers was written many years ago and cannot take advantage of the larger number of cores in each modern CPU the way Nimble can. They cannot provide the same level of parallel processing as the Nimble operating system without completely rewriting their own operating systems. As a result, Nimble processes data more quickly and efficiently. Another area where traditional disk-based storage systems are not optimized for flash is the way they use SSDs. Because they simply replace HDDs with SSDs, they use the same RAID architecture on the SSDs. This means they have to do many more operations to write data to the SSDs, which reduces the overall performance of the system and limits their throughput, as Nick also mentions.

Because Nimble does not implement RAID on the SSDs in the system and uses them for reads rather than writes, we are able to get much better throughput from SSDs than traditional architectures.
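The "many more operations to write data" point can be quantified with the commonly cited small-random-write penalties: RAID 10 writes both mirrors (2 back-end I/Os), RAID 5 reads data and parity then writes both (4), RAID 6 does the same with two parity blocks (6). The sketch below applies that textbook arithmetic; the 160,000 raw IOPS (4 SSDs x 40,000) and the 70/30 read/write mix are hypothetical inputs, not measurements of any vendor's system.

```python
# Commonly cited back-end I/O cost of one small random host write.
RAID_WRITE_PENALTY = {"none": 1, "RAID10": 2, "RAID5": 4, "RAID6": 6}


def host_iops(raw_backend_iops: float, write_fraction: float, layout: str) -> float:
    """Host IOPS a set of drives can sustain when each read costs one
    back-end I/O and each write costs the layout's penalty."""
    penalty = RAID_WRITE_PENALTY[layout]
    read_fraction = 1.0 - write_fraction
    return raw_backend_iops / (read_fraction + write_fraction * penalty)


# Hypothetical: 4 SSDs x 40,000 IOPS of raw capability, 70/30 read/write mix.
for layout in RAID_WRITE_PENALTY:
    print(layout, round(host_iops(160_000, 0.3, layout)))
```

In this model a pure-read workload (`write_fraction=0.0`) pays no penalty under any layout; the loss comes entirely from writes landing on parity-protected SSDs.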

I am more than happy to continue this discussion or help you find someone locally who can explain this to you. However, as mentioned before, you need to agree that a single SSD can provide more than 5,000 4K random IOPS. Unless we can agree on that point, I'm afraid I won't be able to describe sufficiently how CASL and Nimble deliver the documented performance.

Craig

09-25-2015 06:12 AM

Re: Read IOPS on Nimble Storage

Hi Craig,

First, I do trust that a single SSD can reach more than 5,000 random read IOPS. I'll say it one more time: I believe it, OK?

But the important thing here is not how many random read IOPS a single MLC SSD can provide, because traditional architectures also rely on per-SSD random read IOPS, and they can certainly reach up to 1,000,000 random read IOPS with 40-50K random IOPS per SSD (EMC, NetApp), even though they use the oldest OS, OK?

I mention 5,000 IOPS per SSD in CASL because I really want to underline the POWER of the CASL controller to turn even the worst SSD, with the lowest IOPS, into a huge number of random read IOPS (even if the actual random IOPS per SSD is far more than 5,000, OK?).

I need to know what CASL does in the controller to convince the customer. We cannot say: "Sorry, we have only shown the difference for write I/O, by sequentializing random writes; for read I/O, it's our secret."

Second, please don't tell me again about the benefit of CASL scaling performance and capacity independently, because I already understand it!! My purpose in mentioning the AFS upgrade is to understand the difference in random read IOPS, which depends mostly on the POWER of the controller, not on the number of SSDs as in a traditional architecture!!! OK bro

It seems you still don't understand what I'm asking???

Finally, what I need is to understand what CASL actually does internally in the Nimble controller to boost random read IOPS with any type of SSD, even if the SSD can do only 5,000, or 10,000, or 14,000, or 20,000!! OK bro???

If you say that because an SSD can reach more than 40K IOPS it is easy to see how a CS array can achieve very high random read throughput with only 4 SSDs, that is exactly how other vendors such as NetApp and EMC, as I mentioned above, reach up to 1,000,000 random read IOPS with 40-50K random IOPS per SSD, and IT DOESN'T SHOW THE SPECIAL THING CASL CAN DO !!!!!

Thanks,

09-25-2015 06:15 AM

Re: Read IOPS on Nimble Storage

Wanted to chime in, because this is something I've often struggled to understand, and in turn to explain, when speaking with people about Nimble magic. I could be off base, but I'll throw this out there. When CASL writes the compressed, sequential 4.5MB stripes to disk, it takes a large number of input/output operations and encapsulates them within each stripe. When a read request comes in, Nimble validates the checksum, decompresses, and serves up the data.

So does that decompression also accelerate/inflate the IOPS delivered to the requesting host?

Anyone else want to weigh in on this?
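The "many I/Os encapsulated in each stripe" idea on the write side is easy to put numbers on. The sketch below counts how many 4 KiB host blocks fit in one 4.5MB stripe at a given compression ratio. Reading "4.5MB" as 4.5 MiB, the 4 KiB block size, and the example ratios are assumptions, and checksums, metadata and alignment are ignored. It models only the write-side packing, not the read path or the effect of decompression on read IOPS.

```python
def logical_blocks_per_stripe(stripe_bytes: int, block_bytes: int, compression_ratio: float) -> int:
    """How many host blocks fit in one stripe once each is compressed by
    `compression_ratio` (ignores checksums, metadata and alignment)."""
    compressed_block_bytes = block_bytes / compression_ratio
    return int(stripe_bytes // compressed_block_bytes)


STRIPE = 4608 * 1024   # 4.5 MiB, reading "4.5MB" as binary megabytes (assumption)
BLOCK = 4 * 1024       # 4 KiB host I/O size (assumption)

for ratio in (1.0, 2.0):
    blocks = logical_blocks_per_stripe(STRIPE, BLOCK, ratio)
    print(f"{ratio}:1 compression -> {blocks} blocks per stripe")
```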

09-25-2015 06:28 AM

Re: Read IOPS on Nimble Storage

Just to add on:

- Increasing the cache size does not necessarily increase performance. It can certainly increase application performance, as more data can be in the cache, but from a performance-ceiling perspective it has no impact until the active data size exceeds the cache size.

- Nimble has redirect-on-write snapshots and no LUNs to manage; this requires a metadata journal on the array that tracks where blocks of data are located and which blocks belong to which volume or snapshot.

- This metadata work is the primary bottleneck under normal working conditions, and the reason performance is limited by the storage controller's CPUs. Faster processors and more cores let the storage controllers find data faster and decompress it to send the response to the requesting device.
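The points above about redirect-on-write and metadata can be illustrated with a small model: writes never overwrite in place, so each volume keeps a map from logical block to physical location, a snapshot freezes a copy of that map, and every read must consult a map before fetching data. This is a hypothetical sketch, not Nimble's metadata format; the class and method names are invented, and copying the whole map on snapshot is a simplification (real systems share map structure between snapshots).

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Dict, Optional


@dataclass
class RedirectOnWriteVolume:
    """Hypothetical sketch: a block map from LBA to physical location, where
    a snapshot is a frozen copy of the map rather than a copy of the data."""
    block_map: Dict[int, int] = field(default_factory=dict)
    snapshots: Dict[str, Dict[int, int]] = field(default_factory=dict)
    _allocator: count = field(default_factory=count)

    def write(self, lba: int) -> int:
        # Never overwrite in place: allocate a new location, redirect the map.
        location = next(self._allocator)
        self.block_map[lba] = location
        return location

    def take_snapshot(self, name: str) -> None:
        # Copying the map is a simplification; real systems share map structure.
        self.snapshots[name] = dict(self.block_map)

    def read(self, lba: int, snapshot: Optional[str] = None) -> int:
        # Every read starts with a metadata lookup before any data is fetched.
        mapping = self.block_map if snapshot is None else self.snapshots[snapshot]
        return mapping[lba]


vol = RedirectOnWriteVolume()
original = vol.write(7)
vol.take_snapshot("before-update")
updated = vol.write(7)
print(vol.read(7), vol.read(7, "before-update"))  # 1 0
```

Here a lookup is a dictionary access; in an array, the equivalent structure is far larger, which is why the reply above attributes the ceiling to controller CPU rather than to the SSDs.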

09-25-2015 06:49 AM

An.Tran,

I think we are on the same page now. However, we also need to understand that we don't use lower-performance SSDs, because they don't have the throughput customers require, and with slower SSDs we would need more of them to meet the performance requirements, which would make the solution more expensive. We developed the CS array and CASL to use the high performance of SSDs very efficiently. We use SSDs for what they are very good at: very fast reads. Yes, companies like NetApp and EMC can provide high throughput with their systems because the SSDs themselves are fast. However, at some point they are limited by the way they arrange data on the SSDs, put RAID on the SSDs, etc. Those figures of millions of IOPS are also achieved with multiple controllers in scale-out configurations with many SSDs.

So the magic of CASL is this:

- Use SSDs for caching data for reads.

- Use intelligent caching algorithms, aggressive caching, and volume pinning to increase the share of reads that are actually served from SSD.

- Increase the size of the cache with an AFS so more data can be stored, increasing the likelihood that a read request is served from SSD.

- Use the SSDs to store metadata so that lookups of data blocks are faster.

- Take advantage of high-speed multi-core processors to make data management, compression, and I/O processing faster.
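To illustrate the "caching algorithms ... and volume pinning" item in the list above, here is a generic least-recently-used read cache in which pinned blocks are never evicted. It is a textbook LRU with a pin set, not CASL's caching algorithm (which the thread does not describe); `PinnableLRUCache`, its methods, and the tiny capacity are hypothetical.

```python
from collections import OrderedDict


class PinnableLRUCache:
    """Hypothetical read cache: least-recently-used eviction, except that
    pinned blocks are never evicted."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.entries: OrderedDict = OrderedDict()  # oldest first
        self.pinned: set = set()
        self.hits = 0
        self.misses = 0

    def pin(self, block: int) -> None:
        self.pinned.add(block)
        self._insert(block)

    def read(self, block: int) -> bool:
        """Return True on a hit; on a miss, (simulate a fetch and) cache the block."""
        if block in self.entries:
            self.entries.move_to_end(block)  # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        self._insert(block)
        return False

    def _insert(self, block: int) -> None:
        self.entries[block] = True
        self.entries.move_to_end(block)
        while len(self.entries) > self.capacity:
            # Evict the least recently used block that is not pinned.
            victim = next((b for b in self.entries if b not in self.pinned), None)
            if victim is None:
                break  # everything left is pinned; this sketch allows overflow
            del self.entries[victim]


cache = PinnableLRUCache(capacity=2)
cache.pin(100)
for block in (1, 2, 3, 100):
    cache.read(block)
print(cache.hits, cache.misses, list(cache.entries))  # 1 3 [3, 100]
```

Without the pin, block 100 would have been evicted by the stream of other reads and the final read would have been a miss.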

I hope this helps.

Craig

09-25-2015 09:11 AM

Re: Read IOPS on Nimble Storage

Hi Craig,

OK, thanks a lot for your explanation.

I would like to summarize everything:

Random read IOPS on CASL are boosted by both the controller and the type of SSD.

But most SSDs in Nimble Storage can deliver more than 30,000 random IOPS, so random read IOPS depend mainly on the difference in processors between CS arrays, and that is what determines the exact random read IOPS figure for each model.

Thank you again