- Seeing slow performance with Hyper-V guests using ...

03-16-2016 09:57 AM

We’ve been doing some performance testing with Nimble volumes and various configurations both inside & outside of Hyper-V. The slowest performance in any of our tests involved writing to a Nimble volume on a Hyper-V guest VM using direct-connected iSCSI, following Nimble best practices. I'd like to find out whether anyone else has seen this and what steps you took to resolve it. Thank you.

- Tags:

- guest iscsi

- Hyper-V

03-17-2016 01:47 AM

Re: Seeing slow performance with Hyper-V guests using in-guest connected iSCSI (per Nimble best practices). Has anyone else experienced this, and if so how to resolve?

Hi Matt,

Could you provide a bit more information please:

- What are the tests you are running?

- What is the delta you're seeing from other connectivity methods?

- What are those other connectivity methods?

- What NimbleOS version of code is the array on?

- Do you have Nimble Connection Manager installed within the VM?

- Have you tweaked the Windows registry settings as per Nimble's best practice for performance testing with Windows?

twitter: @nick_dyer_

03-21-2016 08:15 AM

Hi Nick,

We’ve been testing with Nimble volumes and various configurations both inside & outside of Hyper-V.

Basically, we’ve been using our backup solution (AppAssure) to blow data to our test volumes, and here’s what we’ve seen so far:

TEST #1

RESTORE from AA server to DASD on (Filespeed) physical server (no Nimble). Start 12:19, finish 12:38, total = 19 minutes.

106 GB / 19 minutes = 5.58 GB/minute

TEST #2

RESTORE from AA server to (Filespeed) physical server with iSCSI drive via Nimble, compression ENABLED. Start 2:46, finish 3:14, total = 30 minutes.

106 GB / 30 minutes = 3.53 GB/minute

TEST #3

RESTORE from AA server to (Filespeed) physical server with iSCSI drive via Nimble, compression DISABLED. Start 2:56, finish 3:17, total = 21 minutes.

106 GB / 21 minutes = 5.05 GB/minute

TEST #4

AA server RESTORE after reconfig of (Hyper-V guest VM), 8 mem, 4 proc. Start 11:53, finish 12:24, total = 31 minutes.

106 GB / 31 minutes = 3.42 GB/minute

TEST #5

AA server RESTORE of D: drive of (Hyper-V guest VM - Fileserver) to physical server FILESPEED with iSCSI drive via Nimble, compression DISABLED, 3 TB volume.

1979.64 GB / 634 minutes = 3.12 GB/minute

TEST #6

AA server RESTORE of (Hyper-V guest VM - Fileserver) to a Hyper-V guest residing completely on Nimble storage, WLKW-2K8R2TEST, with iSCSI direct-connected to this guest VM using NCM.

This test was cancelled after 20 hours, with the restore only 51% complete.
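For anyone who wants to compare the runs side by side, here is a minimal Python sketch that recomputes the rate for tests 1 to 5 from the data size and total minutes stated in each test, and expresses each one as a percentage of the DASD baseline (test #1). The names `TESTS`, `gb_per_minute` and `summarize` are made up for illustration; test #6 is left out because it never finished.

```python
# Stated data size (GB) and stated total duration (minutes) for tests 1-5.
# Values are copied from the test descriptions; nothing here is measured.
TESTS = {
    1: (106.0, 19),     # DASD on physical server, no Nimble
    2: (106.0, 30),     # physical server, iSCSI via Nimble, compression enabled
    3: (106.0, 21),     # physical server, iSCSI via Nimble, compression disabled
    4: (106.0, 31),     # Hyper-V guest VM after reconfig
    5: (1979.64, 634),  # D: drive restore to physical server, 3 TB volume
}


def gb_per_minute(size_gb, minutes):
    """Average restore rate in GB per minute."""
    if minutes <= 0:
        raise ValueError("duration must be positive")
    return size_gb / minutes


def summarize(tests, baseline_test=1):
    """Return (test number, GB/min, percent of baseline) for each test."""
    baseline = gb_per_minute(*tests[baseline_test])
    rows = []
    for number, (size_gb, minutes) in sorted(tests.items()):
        rate = gb_per_minute(size_gb, minutes)
        rows.append((number, round(rate, 2), round(100 * rate / baseline)))
    return rows


for number, rate, pct in summarize(TESTS):
    print(f"Test #{number}: {rate:.2f} GB/min ({pct}% of test #1)")
```

Looking at the rates relative to one baseline makes it easier to see which change (Nimble, compression, Hyper-V guest) costs the most.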

So the main thing that concerns me is that the worst time for any of our tests was #6, which involved sending data to a Hyper-V guest VM using direct-connected iSCSI, with Nimble Connection Manager installed on the guest, following Nimble best practices. The restore time was painfully slow.

We are running Version 2.3.7.0-280146

Nimble Connection Manager is installed within the VM

We did not do any registry tweaks at this point.

Thanks Nick, would be very interested to hear what you think.

03-22-2016 03:58 AM

Solution

Hello,

Factors at play here:

1. Management network you are running the restore over.

2. The data network - connecting the direct attached iscsi disks.

3. Other workloads competing for the above resources, and sequential operations on the disks, e.g. other backups.

I assume that your backup repository doesn't sit on the Nimble array.

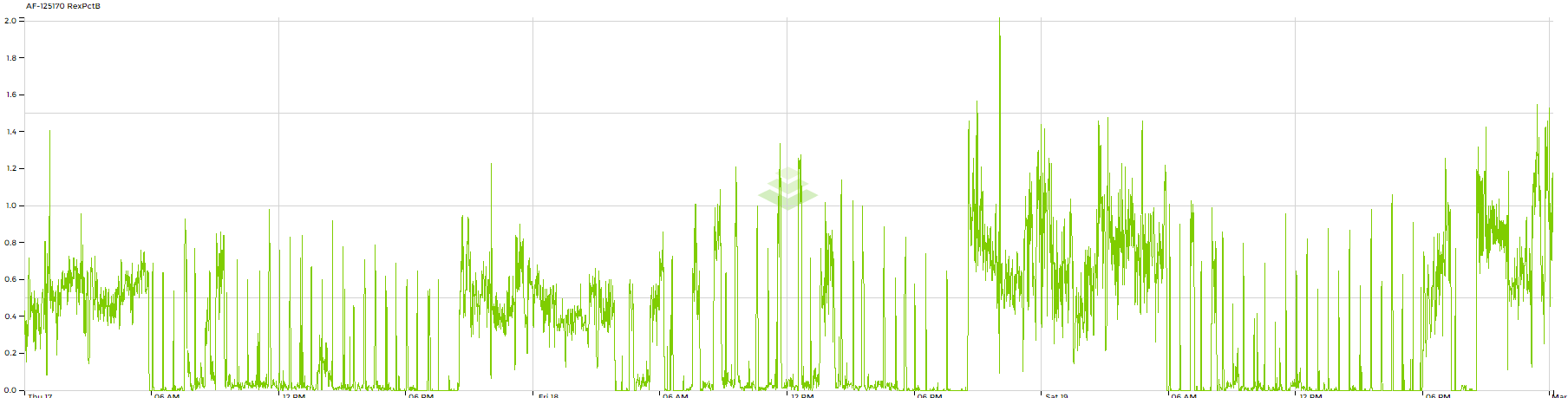

90% of the time these issues are networking related. Looking at the transmits on your network, retransmits are highest during your backup windows, which is a sign of saturated switches. I do not have visibility into your management network, however.

The array itself isn't breaking a sweat so no problems in that regard.

I would suggest checking the netstat -s counters on the VM and looking for high retransmits.
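As a rough way to turn those counters into a single number, here is a minimal Python sketch that parses the text printed by `netstat -s` on Windows and computes retransmitted segments as a fraction of segments sent. It assumes the usual Windows layout with `Segments Sent = N` and `Segments Retransmitted = N` lines; the function names and the sample counter values are invented for illustration, and what counts as "high" depends on your environment.

```python
import re
import subprocess


def read_netstat_stats():
    """Run `netstat -s` on the local machine and return its text output."""
    return subprocess.run(
        ["netstat", "-s"], capture_output=True, text=True, check=True
    ).stdout


def tcp_retransmit_ratio(netstat_text):
    """Return retransmitted / sent TCP segments.

    Counters are summed over every TCP section found (IPv4 and IPv6).
    """
    def total(label):
        pattern = rf"^\s*{re.escape(label)}\s*=\s*(\d+)\s*$"
        matches = re.findall(pattern, netstat_text, flags=re.MULTILINE)
        return sum(int(value) for value in matches)

    sent = total("Segments Sent")
    retransmitted = total("Segments Retransmitted")
    if sent == 0:
        raise ValueError("no 'Segments Sent' counter found in the input")
    return retransmitted / sent


# Invented sample in the Windows `netstat -s` layout, not real data.
SAMPLE = """
TCP Statistics for IPv4

  Segments Received                   = 180000
  Segments Sent                       = 200000
  Segments Retransmitted              = 5000
"""

ratio = tcp_retransmit_ratio(SAMPLE)
print(f"Retransmit ratio: {ratio:.2%}")
```

On the VM itself you would pass `read_netstat_stats()` instead of `SAMPLE`. The counters are cumulative since boot, so taking one reading before and one after a restore and comparing the difference is more telling than a single snapshot.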

Reaching out to support would be the best plan of attack.

Many thanks,

Chris

03-24-2016 09:41 AM

Thanks for your reply Chris.

For me, the bottom line comes down to this:

What's the best overall approach for Hyper-V guests running on Nimble?

Direct iSCSI connections to the guest VMs that "need" it (SQL? Exchange? Windows file servers?) as per Nimble best practice...

OR

Retain use of .VHDX format with guest VMs -- Does anyone at all use .VHDX for Hyper-V guests running SQL or Exchange, and if so, is recovery viable?

09-29-2016 02:42 PM

May I ask what you ended up doing?

09-30-2016 09:29 AM

Hi Lee,

We did have some Hyper-V network issues which we cleaned up and basically reconfigured from scratch, so that was certainly part of the problem.

In addition, I would say that restoring from backup directly to an iSCSI-connected VM is not the best method for a restore.

We are only doing in-guest connected iSCSI on our file servers, which allows us to do granular file restores from Nimble snapshots when necessary.

At least for now, we are running Exchange and SQL on .vhdx disks. We're about to move to Office365 for Exchange, and we run regular SQL backups in addition to Nimble snaps for SQL. FYI, I have created cloned copies of SQL VMs from Nimble snapshots for testing, and the cloned VMs have run fine without issues.

Hope this helps.

10-03-2016 12:21 PM

I used to do in-guest iSCSI for things that could use integration with my backup software for SAN-based snapshots or live expansion of VM storage. Typically this would be SQL, Exchange, and file servers. This was back in the day of Microsoft Virtual Server 2005 R2, Hyper-V 2008/R2, and EqualLogic. With advances in Microsoft's hypervisor, you can now do online expansion of VHDX. For me, the only really good use case for in-guest iSCSI is a Microsoft failover cluster that requires shared storage and online expansion of the storage. Microsoft doesn't support online expansion of a shared VHDX until Hyper-V 2016. Once that is released, I can't really think of any situation where you couldn't use VHDX for any Hyper-V VM.