05-16-2017 03:09 PM

iSCSI Set up on N9K with ACI - vPC

Has anyone set up iSCSI off an N9K ACI core switch? I'm having issues when trying to use vPCs.

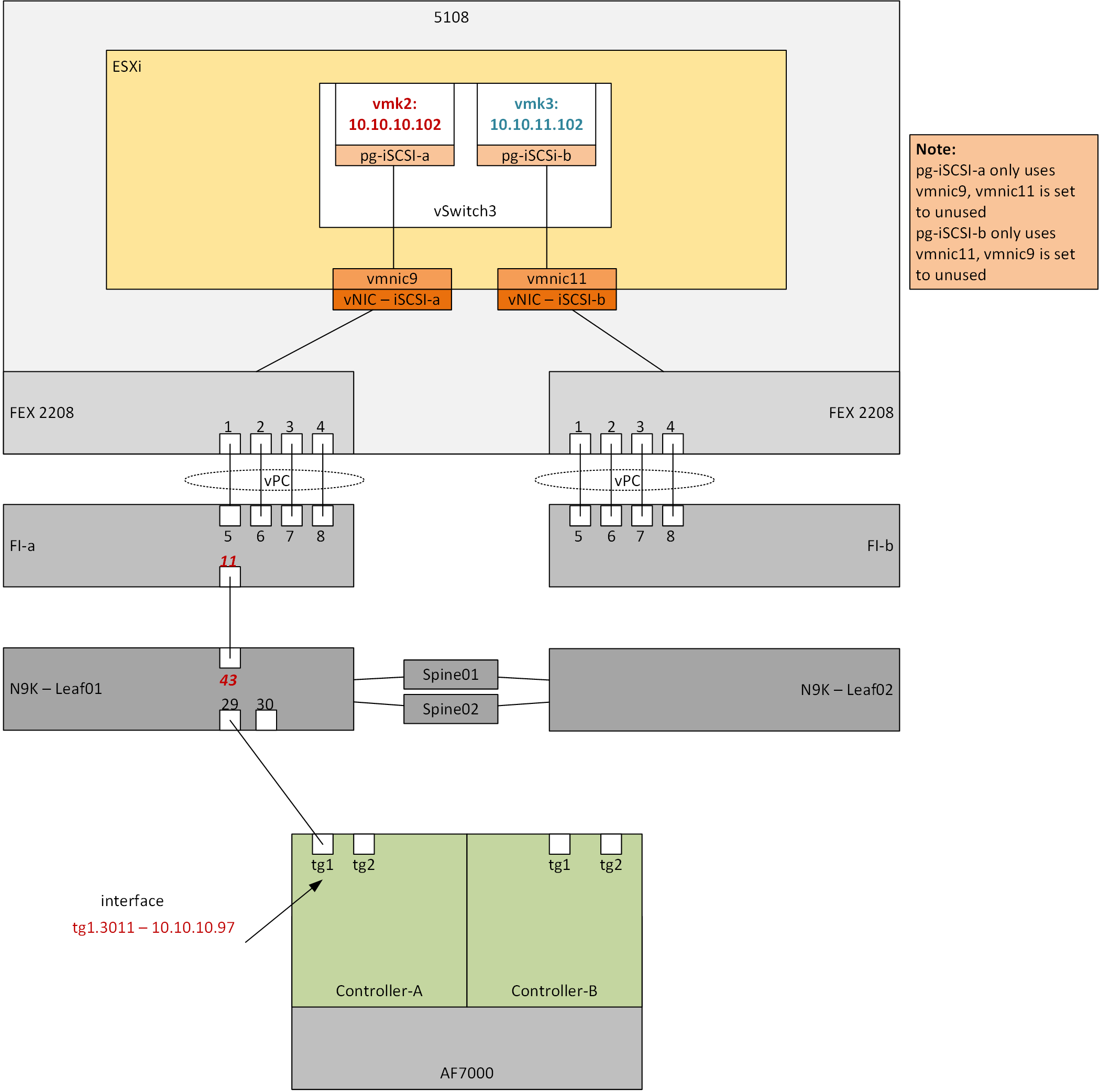

I managed to get connectivity to the Nimble array without any vPCs on the N9Ks.

I used port 11 on the FI and port 43 on the N9K Leaf01, as documented below.

As soon as you configure any vPCs, traffic stops.

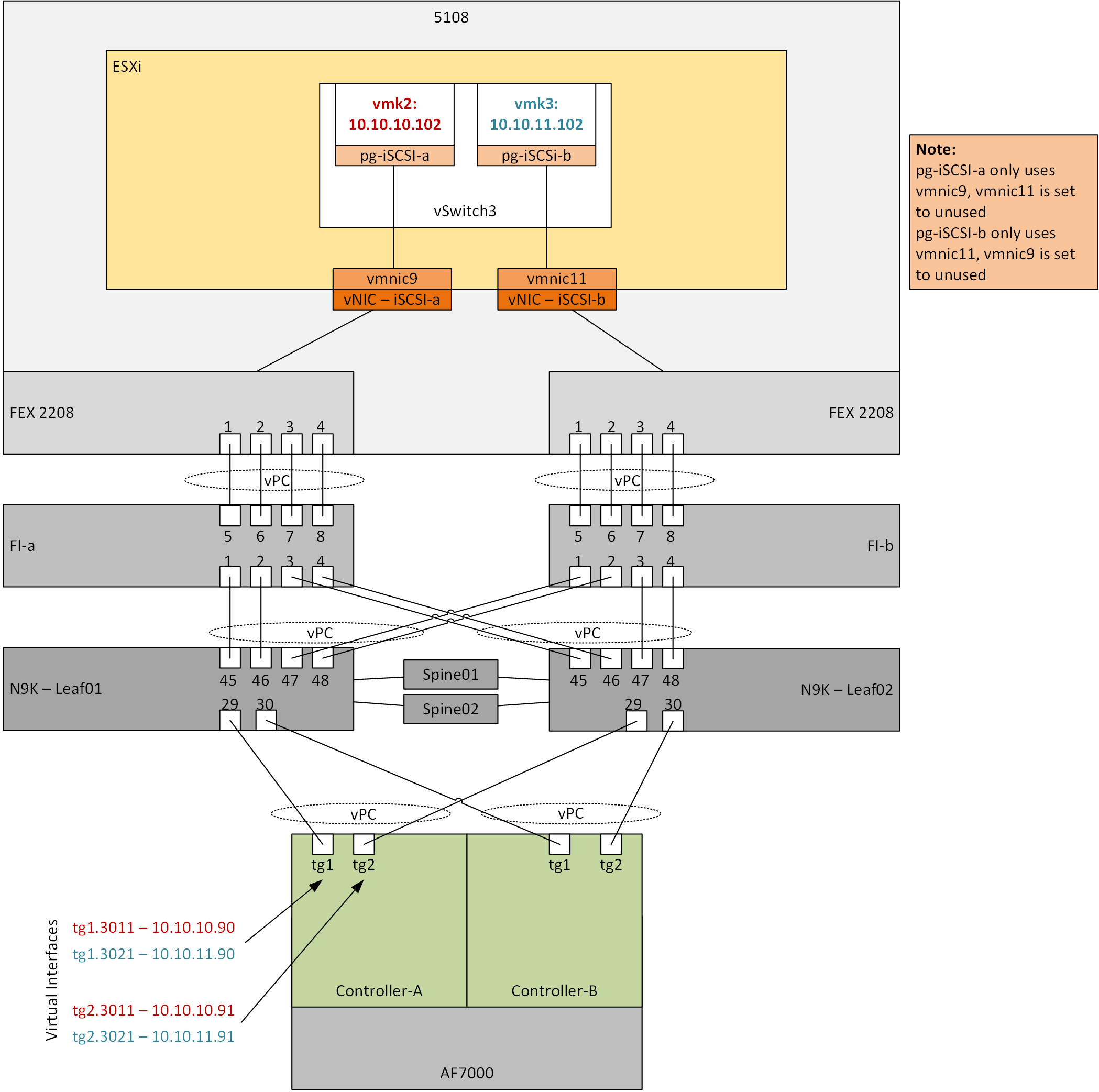

Here is how we have configured it. By the way, what I cannot see is how to set the Nimble Virtual Interfaces to LACP-Active mode.

The Virtual Interface setup also looks odd: I would expect the IP address to float across both adapters.

Cheers

Mike

05-17-2017 07:28 AM

Re: iSCSI Set up on N9K with ACI - vPC

Hi Mike,

I'm curious whether there is newer documentation out there telling people to use vPCs on interfaces going to the Nimble tg ports. Can you post the documentation you're using to do this? I ask because I think you should only be using vPC for VLANs that carry VM network traffic, not iSCSI VLANs. If you create a vPC domain between your 9Ks (unless things have changed), do NOT allow your two iSCSI VLANs to traverse that vPC. Use only a port-channel (LACP) on each switch locally for their respective interfaces.

Can someone from Nimble weigh in on this?

05-17-2017 05:20 PM

Re: iSCSI Set up on N9K with ACI - vPC

Hi Alex, thanks for the reply,

We looked into this a bit more yesterday. In short: no vPC to the Nimble array. I'll post a detailed reply shortly.

With respect to your comments, are you suggesting connectivity like this?

Cheers

Mike

05-17-2017 07:57 PM

Re: iSCSI Set up on N9K with ACI - vPC

Yesterday we tinkered a bit more with the setup and had a reply from Nimble Support as well.

I can see from the email below that you're able to attach the Nimble iSCSI array to port 43 on the N9K Leaf01, which is a supported design. However, when you said you wanted to configure Nimble Virtual Interfaces to LACP-Active mode, this would not work since Nimble does not support LACP. That's the reason Nimble would not be able to be attached to Cisco ACI as a vPC-attached node.

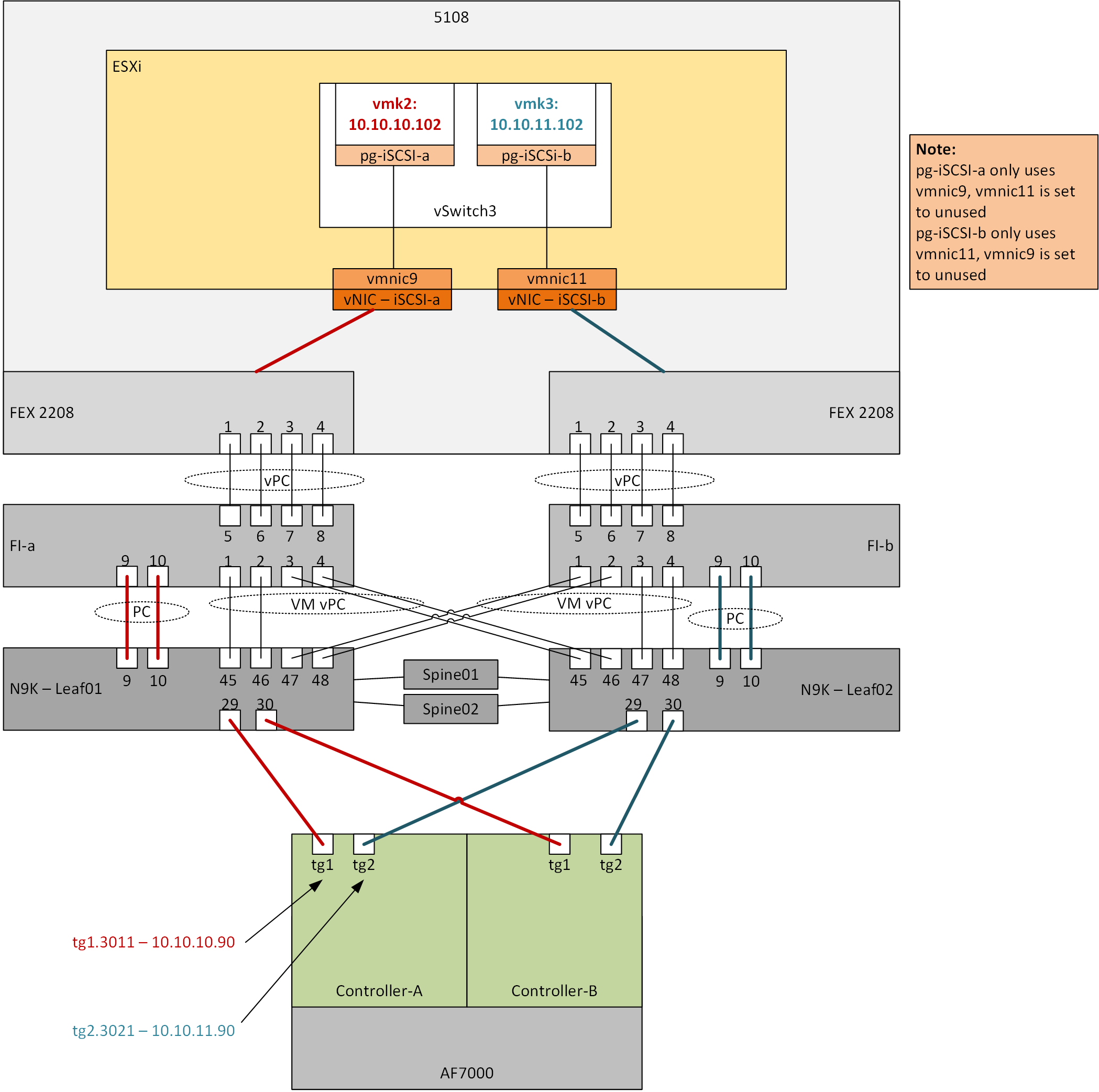

We've removed the vPC on the Nimble side and managed to get traffic flowing on iSCSI-a and iSCSI-b.

Essentially, here is the config as it stands now:

ACI - No contracts - working

or ACI with Contracts - will consider a move to this later

Connectivity

Traffic Path

I believe that under normal circumstances traffic should flow as below, i.e. it should not traverse the vPC peer links (in this case the spines). I am still in the process of proving this.

Results

~ # vmkping -s 8972 -I vmk2 10.10.10.90

PING 10.10.10.90 (10.10.10.90): 8972 data bytes

8980 bytes from 10.10.10.90: icmp_seq=0 ttl=64 time=0.296 ms

8980 bytes from 10.10.10.90: icmp_seq=1 ttl=64 time=0.233 ms

8980 bytes from 10.10.10.90: icmp_seq=2 ttl=64 time=0.233 ms

~ # vmkping -s 8972 -I vmk2 10.10.10.91

PING 10.10.10.91 (10.10.10.91): 8972 data bytes

8980 bytes from 10.10.10.91: icmp_seq=0 ttl=64 time=0.229 ms

8980 bytes from 10.10.10.91: icmp_seq=1 ttl=64 time=0.241 ms

8980 bytes from 10.10.10.91: icmp_seq=2 ttl=64 time=0.265 ms
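For anyone wondering where the `-s 8972` and the `8980 bytes` in the output come from, here is a small sketch of the arithmetic. It assumes a 9000-byte MTU on the iSCSI path (the thread does not state the MTU, but 8972 is the usual payload for that size) and plain IPv4 with no IP options. The function names are made up for illustration.

```python
# Header sizes for IPv4 without options and for an ICMP echo message.
IPV4_HEADER = 20
ICMP_HEADER = 8


def icmp_payload_for_mtu(mtu: int) -> int:
    """Largest ping payload that fits in one unfragmented IPv4 packet."""
    return mtu - IPV4_HEADER - ICMP_HEADER


def reply_size(payload: int) -> int:
    """Size ping reports per reply: payload plus the ICMP header."""
    return payload + ICMP_HEADER


# Assumed jumbo-frame MTU of 9000 (not stated in the thread).
payload = icmp_payload_for_mtu(9000)
print(payload)              # 8972, the value passed to -s
print(reply_size(payload))  # 8980, the "bytes from" figure in the output
```

One caveat: as far as I know, `vmkping` only sets the don't-fragment bit when `-d` is given. The commands above do not use `-d`, so a successful reply on its own may not prove that jumbo frames pass end to end without fragmentation.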

If there is an outage, as below, traffic should traverse the vPC peer links (in this case the spines).

Results

~ # vmkping -s 8972 -I vmk2 10.10.10.90

PING 10.10.10.90 (10.10.10.90): 8972 data bytes

8980 bytes from 10.10.10.90: icmp_seq=0 ttl=64 time=0.298 ms

8980 bytes from 10.10.10.90: icmp_seq=1 ttl=64 time=0.234 ms

8980 bytes from 10.10.10.90: icmp_seq=2 ttl=64 time=0.240 ms

~ # vmkping -s 8972 -I vmk2 10.10.10.91

PING 10.10.10.91 (10.10.10.91): 8972 data bytes

8980 bytes from 10.10.10.91: icmp_seq=0 ttl=64 time=0.272 ms

8980 bytes from 10.10.10.91: icmp_seq=1 ttl=64 time=0.244 ms

8980 bytes from 10.10.10.91: icmp_seq=2 ttl=64 time=0.225 ms

Looking at the results for 10.10.10.90 above, even if the traffic did traverse the spine, no added delay is evident. It is hard to say what the delay might be under load.

Keen to hear thoughts and ideas.
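To put a number on that, here is a rough sketch that averages the round-trip times from the two 10.10.10.90 runs above. It only parses the pasted reply lines; the helper name `parse_rtts` is hypothetical, and three samples per run is far too few to draw conclusions from.

```python
import re
from statistics import mean

# Matches the "time=0.296 ms" field in each ping reply line.
RTT_PATTERN = re.compile(r"time=([0-9.]+) ms")


def parse_rtts(output: str) -> list[float]:
    """Extract round-trip times in milliseconds from pasted ping output."""
    return [float(value) for value in RTT_PATTERN.findall(output)]


# Reply lines copied from the normal-path run to 10.10.10.90.
NORMAL = """\
8980 bytes from 10.10.10.90: icmp_seq=0 ttl=64 time=0.296 ms
8980 bytes from 10.10.10.90: icmp_seq=1 ttl=64 time=0.233 ms
8980 bytes from 10.10.10.90: icmp_seq=2 ttl=64 time=0.233 ms
"""

# Reply lines copied from the outage run to 10.10.10.90.
FAILOVER = """\
8980 bytes from 10.10.10.90: icmp_seq=0 ttl=64 time=0.298 ms
8980 bytes from 10.10.10.90: icmp_seq=1 ttl=64 time=0.234 ms
8980 bytes from 10.10.10.90: icmp_seq=2 ttl=64 time=0.240 ms
"""

normal_avg = mean(parse_rtts(NORMAL))
failover_avg = mean(parse_rtts(FAILOVER))

print(f"normal:   {normal_avg:.3f} ms")
print(f"failover: {failover_avg:.3f} ms")
print(f"delta:    {failover_avg - normal_avg:+.3f} ms")
```

The averages come out at roughly 0.254 ms and 0.257 ms, a difference of a few microseconds, which is well within the sample-to-sample variation of an idle link.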

Cheers

Mike

07-19-2017 12:56 PM

Re: iSCSI Set up on N9K with ACI - vPC

Did Nimble support give you any help on this? You are correct on the non-LACP. I was wrong.

I think this discussion needs attention from the SmartStack group, if they want customers to have a flawless configuration.

Maybe you can help me understand your diagram, or the design you have. Why are you tagging two different VLANs on one Nimble interface?