To vPC peer or NOT vPC peer Nexus for UCS/Nimble Smart Stack

01-05-2015 09:39 AM

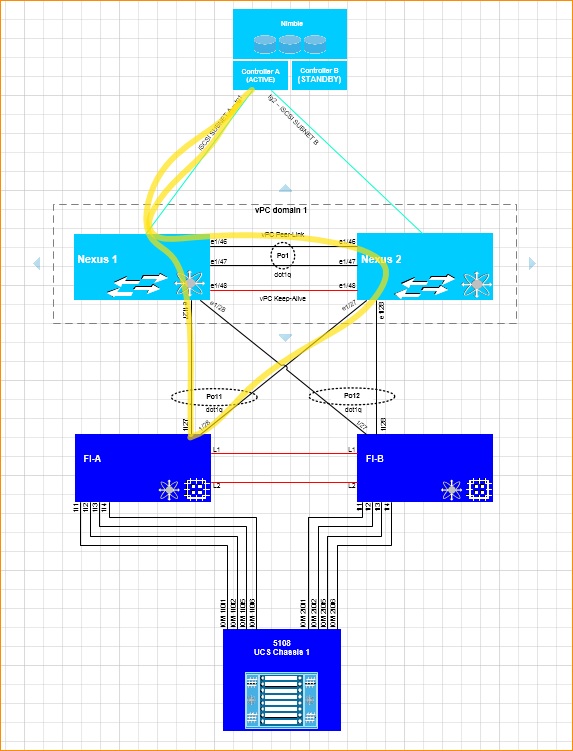

The Nimble KB doc says to vPC peer the Nexus switches, but a Nimble SE I worked with recently recommended not vPC peering them, to keep iSCSI traffic from crossing the peer link.

The scenario would be as follows:

- Dual iSCSI subnets/VLANs (best practice according to the Nimble SE): one for FI-A and one for FI-B.
- Both of those VLANs would, of course, reside on both Nexus switches due to vPC requirements.
- Nimble TG-1 (iSCSI-A) connected to Nexus 1 and TG-2 (iSCSI-B) connected to Nexus 2 (not vPC'd).
- Both FIs connected to the Nexus pair via vPC.

In my diagram, the yellow lines represent iSCSI-A traffic coming from FI-A. Any thoughts?
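A rough way to see the concern: in the toy Python model below, FI-A's iSCSI-A flows are hashed across a port channel whose members land on both Nexus switches, and any flow that lands on Nexus 2 has to cross the peer link to reach TG-1, which is single-attached (an orphan port) on Nexus 1. The switch names, IP addresses, and the use of CRC32 as the hash are all hypothetical stand-ins; real UCS and NX-OS port-channel hashing is platform-specific.

```python
import zlib
from typing import List, Tuple

# (src_ip, dst_ip, src_port, dst_port); all values used here are hypothetical.
Flow = Tuple[str, str, int, int]


def member_for(flow: Flow, members: List[str]) -> str:
    """Return the switch a flow is hashed onto.

    CRC32 over the flow tuple is only a stand-in for the real,
    platform-specific port-channel hash.
    """
    key = "|".join(str(field) for field in flow).encode()
    return members[zlib.crc32(key) % len(members)]


def crosses_peer_link(flow: Flow, members: List[str], target_switch: str) -> bool:
    """A frame landing on a switch that lacks the target port must cross the peer link."""
    return member_for(flow, members) != target_switch


def count_crossings(flows: List[Flow], members: List[str], target_switch: str) -> int:
    """Count how many flows would have to cross the peer link."""
    return sum(crosses_peer_link(f, members, target_switch) for f in flows)


# Hypothetical iSCSI sessions from four fabric-A hosts to TG-1 (orphan port on nexus1).
flows = [(f"10.0.1.{h}", "10.0.1.100", 50000 + h, 3260) for h in range(1, 5)]

# vPC uplinks: FI-A's port channel has members on both Nexus switches.
vpc_crossings = count_crossings(flows, ["nexus1", "nexus2"], "nexus1")
# Non-vPC uplinks: iSCSI-A only rides links that land on nexus1.
direct_crossings = count_crossings(flows, ["nexus1"], "nexus1")

print(f"vPC uplinks: {vpc_crossings} of {len(flows)} flows cross the peer link")
print(f"Direct uplinks: {direct_crossings} of {len(flows)} flows cross the peer link")
```

How many of the vPC flows cross depends on how the hash happens to fall, which is the point: some share of FI-A's iSCSI-A traffic can land on the peer that does not own TG-1, and that is what the SE's advice is meant to avoid.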

01-08-2015 09:23 AM

Re: to vPC peer or NOT vPC peer Nexus for UCS/Nimble Smart Stack.

It depends on where you want the Nimble connected. We opted to connect it to appliance ports on the FIs. For upstream communication to non-UCS hosts, we created appliance port uplink port channels.

Going the appliance port route lets you make sure you have an A/B storage path configuration. If all of your storage consumers are on the UCS, it is even more efficient. The only downside is burning FI port licenses.

01-08-2015 10:49 AM

The Nimble is connected to the two Nexus switches. Our company best practice is not to attach storage to the FIs.

The Nimble KB doc shows both methods: FI appliance ports, or a 10G switch, which in their example is a Nexus vPC pair.

In the Nexus example, the switches are paired as a vPC, as I mentioned in my original post. That design would still cause traffic to cross the vPC peer link.

01-08-2015 11:00 AM

Accepted solution

You could try setting up a second set of uplink port channels from the FIs and using VLAN pinning to assign the storage traffic to those uplinks.
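One way to reason about the VLAN-pinning suggestion is as a consistency check: each iSCSI VLAN should be allowed only on uplinks that terminate on the Nexus switch where the matching array target group is connected. The Python sketch below encodes that rule. The port-channel names, VLAN IDs, and TG-to-switch mapping are hypothetical, and the check models the design intent only; it does not use any UCS Manager API.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical uplink port channels and the Nexus switches each one lands on.
UPLINK_SWITCHES: Dict[str, Set[str]] = {
    "FI-A/Po10": {"nexus1", "nexus2"},  # general data uplink (vPC)
    "FI-A/Po20": {"nexus1"},            # storage-only uplink
    "FI-B/Po11": {"nexus1", "nexus2"},  # general data uplink (vPC)
    "FI-B/Po21": {"nexus2"},            # storage-only uplink
}

# Hypothetical VLAN-to-uplink pinning.
VLAN_PINNING: Dict[int, Set[str]] = {
    100: {"FI-A/Po10", "FI-B/Po11"},  # ordinary data VLAN
    201: {"FI-A/Po20"},               # iSCSI-A
    202: {"FI-B/Po21"},               # iSCSI-B
}

# Switch holding each iSCSI VLAN's array ports: TG-1 on nexus1, TG-2 on nexus2.
ARRAY_SWITCH: Dict[int, str] = {201: "nexus1", 202: "nexus2"}


def peer_link_risks(pinning: Dict[int, Set[str]]) -> List[Tuple[int, str]]:
    """List (vlan, uplink) pairs where storage traffic could land on the wrong peer."""
    risks = []
    for vlan, target in sorted(ARRAY_SWITCH.items()):
        for uplink in sorted(pinning.get(vlan, set())):
            # Safe only if the uplink lands exclusively on the array's switch.
            if UPLINK_SWITCHES[uplink] != {target}:
                risks.append((vlan, uplink))
    return risks


print(peer_link_risks(VLAN_PINNING))  # []
# Pinning iSCSI-A to the vPC data uplink instead reintroduces the problem.
print(peer_link_risks({**VLAN_PINNING, 201: {"FI-A/Po10"}}))  # [(201, 'FI-A/Po10')]
```

The ordinary data VLAN is left out of the check on purpose; only the storage VLANs need to stay off the vPC uplinks.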

On our initial Nimble implementation, we were using a single subnet (the 1.4 days), connecting to a Nexus 5K, and letting the storage traffic ride over the FI uplinks with all the other traffic. Over the summer we had a chance to do a ground-up redesign and went with appliance ports and two data VLANs in the new implementation. I now feel comfortable using jumbo frames in the fabric, since I only have the FIs, the hosts, and the array to worry about.
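The jumbo-frame point comes down to the fact that the usable MTU of a path is the smallest MTU configured on any device along it, so every extra hop is one more place to get it wrong. A minimal Python sketch; the device names and MTU values are illustrative assumptions, not recommended settings.

```python
from typing import List, Tuple

Hop = Tuple[str, int]  # (device, configured MTU in bytes); values are illustrative


def path_mtu(hops: List[Hop]) -> int:
    """The effective MTU of a path is the smallest MTU configured on any hop."""
    return min(mtu for _, mtu in hops)


def jumbo_ok(hops: List[Hop], required: int = 9000) -> bool:
    """True if every hop can carry frames of the required size."""
    return path_mtu(hops) >= required


# Appliance-port design: host, fabric interconnect, array.
appliance_path = [("host vNIC", 9000), ("FI-A", 9216), ("Nimble TG-1", 9000)]
# Northbound design: one more switch, here assumed to still be at 1500.
northbound_path = [("host vNIC", 9000), ("FI-A", 9216), ("Nexus 5K", 1500), ("Nimble TG-1", 9000)]

print(path_mtu(appliance_path), jumbo_ok(appliance_path))    # 9000 True
print(path_mtu(northbound_path), jumbo_ok(northbound_path))  # 1500 False
```

Fewer devices in the storage path means fewer MTU settings that all have to agree.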