09-16-2019 09:57 AM - edited 09-16-2019 09:59 AM

HPE Primera: Comparing IOPS with an HPE 3PAR array using HPE Synergy compute nodes

New HPE Primera delivers significant benefits for customers running Oracle database

The new HPE Primera storage array delivers significantly higher IOPS than the earlier HPE 3PAR arrays, which means lower latencies and faster storage throughput. In addition, HPE Primera comes with a 100% availability promise and an easy-to-use graphical interface. These improvements are a significant benefit for Oracle database users. This blog describes a test in which HPE Storage Solutions Engineering compared a maximum-IOPS Oracle workload for multitenant database deployments on HPE Synergy compute nodes. The purpose of the test was to demonstrate high IOPS across multiple compute nodes running an Oracle database and to show the performance improvement over an HPE 3PAR 8450 array. The intent was not to imply that all databases need such high IOPS, but to show what the array can deliver when needed and how low the latency stays.

Enterprise databases must be available to process transactions and must service them quickly. Data centers can run many databases for which reliability and performance are a must. Even in the new paradigm of AI and Big Data, transactional and relational databases remain critically important, and newer technologies like machine learning depend on them as well. The need for reliable, high-performance storage is increasing every day. This test demonstrated that HPE Primera storage can easily handle hundreds of thousands of IOPS from multiple database servers while keeping latencies at the array below one millisecond. This enables database servers to be scaled up as well as scaled out.

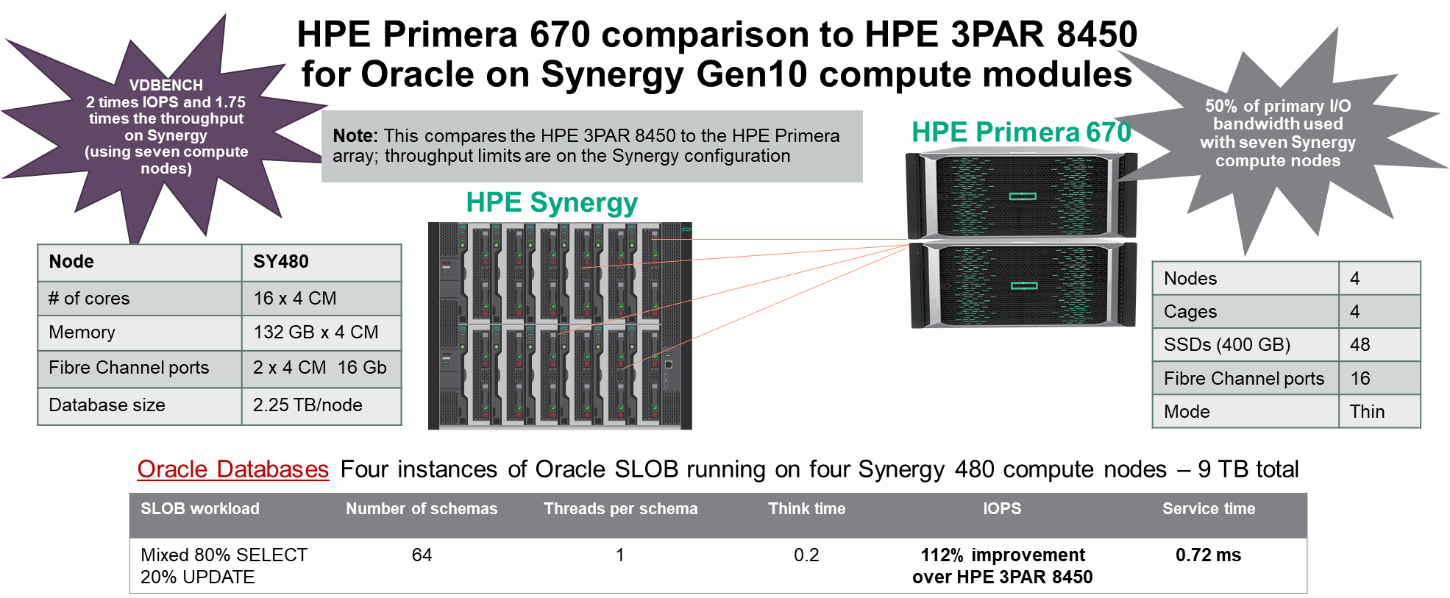

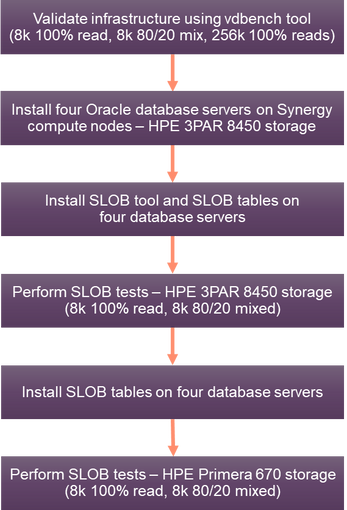

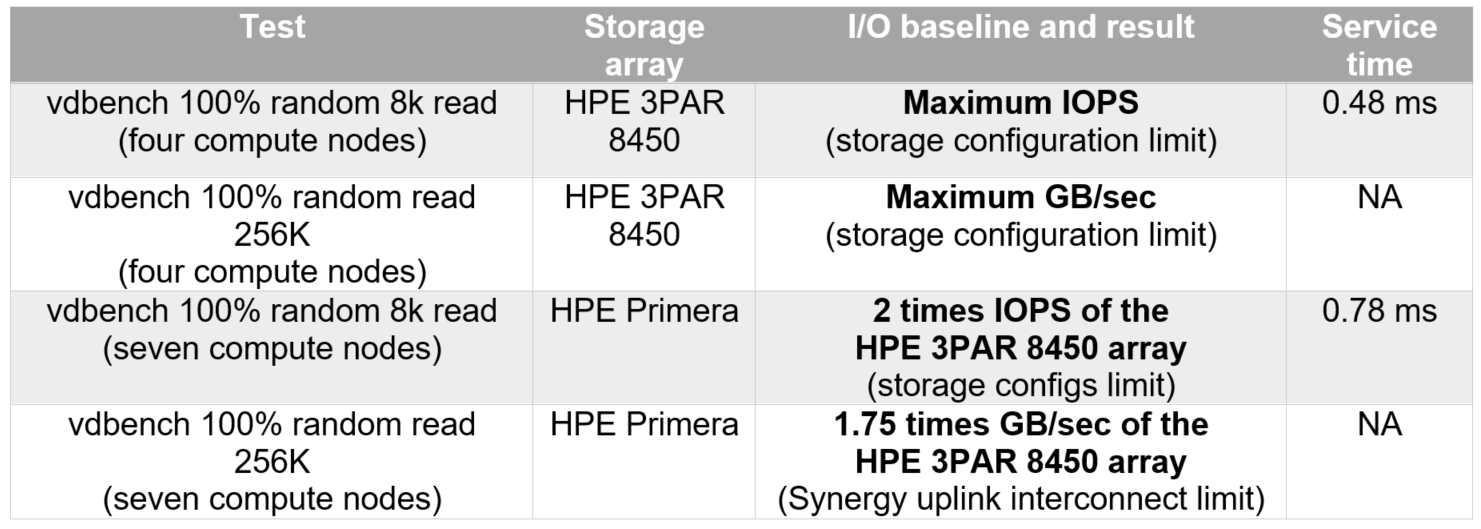

The following diagram shows the overall results of the test. Two main I/O performance tools were used. The first tool was vdbench. It was used to validate the server/storage setup with simple 100% read tests: small-block reads (8k) for maximum IOPS and large-block reads (256k) for maximum throughput. The results were compared with the expected values to validate the setup. In our vdbench tests we observed 8k random read IOPS as high as twice what is specified for an HPE 3PAR 8450 array with a similar configuration and workload profile. Large-block (256k) throughput was 1.75 times higher than specified for the HPE 3PAR 8450 array. That maximum throughput is close to the limit of the HPE Synergy chassis we configured for the test, but not near the HPE Primera limit. Comparing the same general configuration on the HPE 3PAR 8450 and HPE Primera arrays, performance approximately doubles.

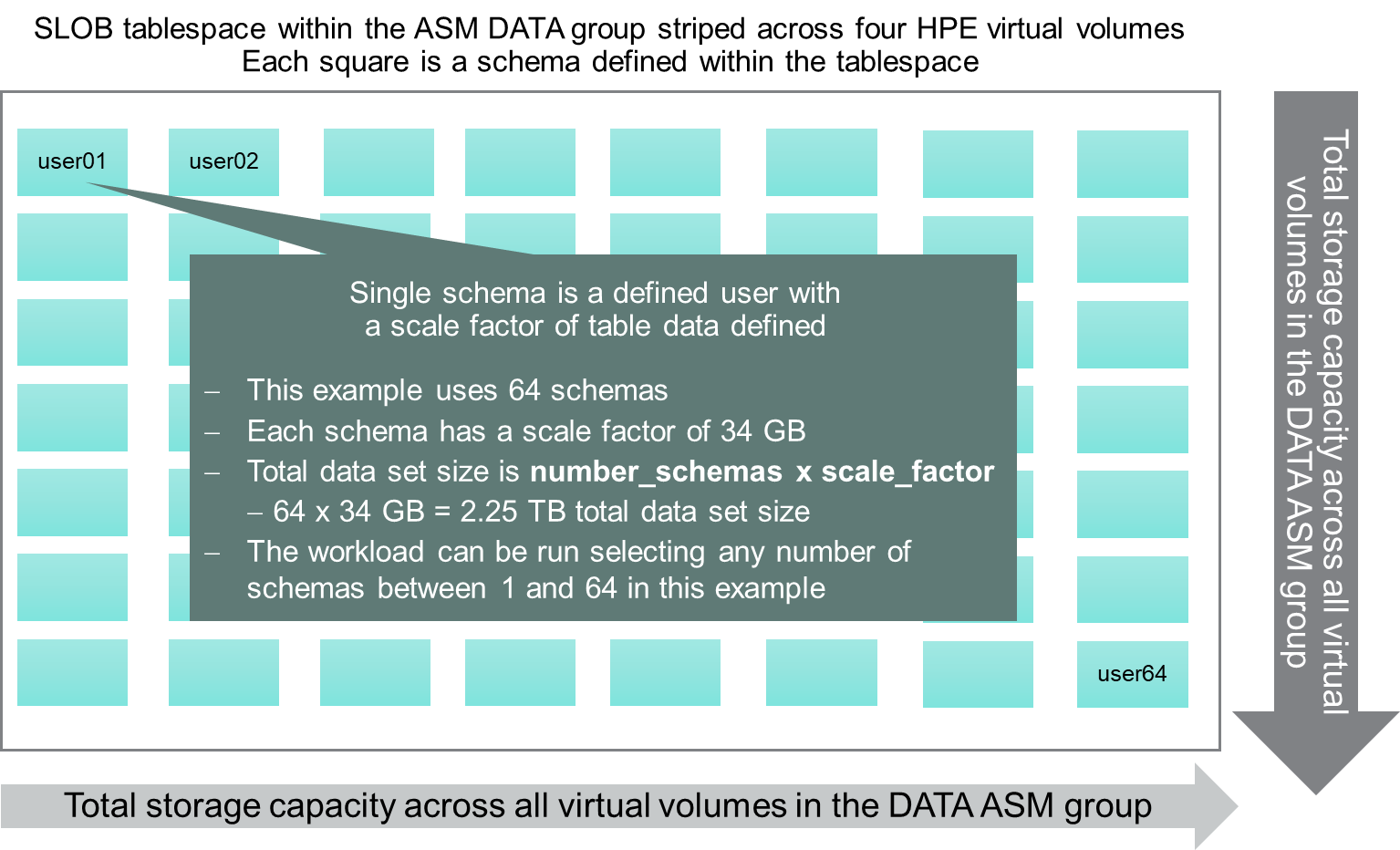

The second tool is a publicly available, Oracle-based I/O characterization tool called Silly Little Oracle Benchmark (SLOB). SLOB lets you characterize I/O with an Oracle database and is generally used for OLTP workload profiling. Even though the tool has “benchmark” in the name, it’s more commonly used for calibrating or characterizing I/O in a particular server/storage environment.

Figure 1. Summary of the test results

Some of the vdbench tests used up to seven HPE Synergy 480 Gen10 compute nodes at the same time. Each Synergy compute node had 16 cores. Hyper-threading was disabled because the benefit is minimal at high I/O rates. The two SLOB workloads observed for maximum IOPS were 100% read and 80/20% read/write mixed workloads.

The details

The vdbench tool was used to validate the Synergy + HPE Storage infrastructure. The 100% read tests validated the maximum IOPS and throughput for the HPE 3PAR 8450 array. The 80/20 read/write tests approximated a typical IOPS profile for an OLTP workload.

- The HPE 3PAR 8450 array configuration: Four nodes, 24 host ports, 48 SSDs, nine 2.25 TB virtual volumes for Oracle data, four 15 GB virtual volumes for redo logs, and eight 16 Gb Fibre Channel dual-ported host bus adapters (HBAs) for a total of eight 16 Gb ports. All volumes were Thin Provisioned RAID 6.

- The HPE Primera array configuration: Four nodes, 16 host ports, 48 SSDs, nine 2.25 TB virtual volumes for Oracle data, four 15 GB virtual volumes for redo logs, and eight 16 Gb Fibre Channel dual-ported HBAs.

The maximum IOPS and throughput (read only) for the HPE 3PAR 8450 array were used as a baseline.
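For readers who want to run a similar validation pass, the two read-only vdbench profiles described above could be sketched roughly as follows. This is a minimal sketch, not the parameter file used in the test: the device path `/dev/mapper/oradata1`, the thread count, the run length, and the choice of sequential access for the 256k profile are all assumptions. The post itself only states the block sizes and the 100% read ratio.

```python
# Sketch: build a vdbench parameter file for the two read-only validation
# profiles (8k for IOPS, 256k for throughput). Device path, thread count,
# run length, and seek percentages are assumptions, not the tested values.

def vdbench_params(lun: str, threads: int = 32, elapsed_s: int = 300) -> str:
    lines = [
        # Storage definition: one raw device opened with direct I/O.
        f"sd=sd1,lun={lun},openflags=o_direct,threads={threads}",
        # 8k, 100% read, 100% random: small-block profile for maximum IOPS.
        "wd=wd_iops,sd=sd1,xfersize=8k,rdpct=100,seekpct=100",
        # 256k, 100% read, sequential (assumed): large-block throughput profile.
        "wd=wd_tput,sd=sd1,xfersize=256k,rdpct=100,seekpct=0",
        # Run definitions: uncapped I/O rate, reporting every 5 seconds.
        f"rd=rd_iops,wd=wd_iops,iorate=max,elapsed={elapsed_s},interval=5",
        f"rd=rd_tput,wd=wd_tput,iorate=max,elapsed={elapsed_s},interval=5",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical multipath device name; replace with a real test LUN.
    print(vdbench_params("/dev/mapper/oradata1"), end="")
```

The generated text can be saved to a file and passed to vdbench with its `-f` option. A real run against several virtual volumes would need one `sd=` line per device.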

First, the vdbench tests were performed and then the Oracle database was installed on four Synergy compute nodes. SLOB was also installed on each of the four servers. SLOB tests were run for an 8k OLTP I/O profile.

The following table lists the results for both the vdbench tests and the SLOB tests.

Table 1. Test results

The SLOB schema objects consisted of 64 schemas of 34 GB each. They ran on all four database servers for a total data set of 9 TB. Each server had a data set size of 2.25 TB.
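As a quick sanity check on these sizes, the schema arithmetic can be worked through directly. The sketch below uses only the figures stated above; treating 1 TB as 1000 GB is an assumption. The schema data alone comes out slightly under the quoted 2.25 TB per server and 9 TB total, so those figures are presumably rounded or include space beyond the schemas themselves (that reading is an inference, not something the post states).

```python
# Data set sizing from the figures in the post: 64 schemas of 34 GB on each
# of four database servers. Decimal units (1 TB = 1000 GB) are an assumption.

SCHEMAS_PER_SERVER = 64
SCHEMA_SIZE_GB = 34
SERVERS = 4

per_server_gb = SCHEMAS_PER_SERVER * SCHEMA_SIZE_GB  # 2176 GB
total_gb = per_server_gb * SERVERS                   # 8704 GB

print(f"per server: {per_server_gb / 1000:.2f} TB")  # per server: 2.18 TB
print(f"total: {total_gb / 1000:.2f} TB")            # total: 8.70 TB
```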

Summary

The HPE Primera array provided a 112% improvement in the mixed workload over the HPE 3PAR 8450 array.
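To make the percentage easier to read: a 112% improvement means the new result is a little more than double the baseline, not 1.12 times it. A minimal sketch of the conversion follows; the function names are illustrative, and the baseline value in the usage lines is a placeholder, not a measured result.

```python
def improvement_to_ratio(improvement_pct: float) -> float:
    """Convert a percentage improvement into a new/baseline ratio."""
    return 1.0 + improvement_pct / 100.0


def ratio_to_improvement(new: float, baseline: float) -> float:
    """Convert two measurements into a percentage improvement."""
    return (new / baseline - 1.0) * 100.0


if __name__ == "__main__":
    ratio = improvement_to_ratio(112)
    print(f"112% improvement = {ratio:.2f}x the baseline")

    # Placeholder baseline of 100 units, purely to show the relationship.
    print(f"{ratio_to_improvement(212, 100):.0f}% improvement")
```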

HPE Primera storage arrays are ideal for Oracle workloads, with much improved performance, a 100% availability support promise, ease of use, support for HPE InfoSight, and many other new features. For a full list of features and more details on HPE Primera, refer to https://www.hpe.com/us/en/storage.html.

Please put any questions you might have in the comments section of this blog. Any general discussions are always welcome!

Best regards,

Todd – HPE Technical Marketing Engineering – Oracle Solutions

12-03-2019 10:39 AM

Re: HPE Primera: Comparing IOPS with an HPE 3PAR array using HPE Synergy compute nodes

Hi Todd,

Thanks for this article.

Would this comparison be the same for the Primera 650?

12-03-2019 10:55 AM

Re: HPE Primera: Comparing IOPS with an HPE 3PAR array using HPE Synergy compute nodes

This configuration should give you similar results with the 650 as long as you use the same number of SSDs, nodes, and FC host ports. Variations can occur if the workload gets different cache benefits, but in this case the differences will be minimal, even with more cache. Your customer's workload could still benefit from more cache. It just depends on what is happening in the database. You should be comfortable using this for I/O characterization.

Thanks!

Todd

02-20-2020 07:39 PM

Re: HPE Primera: Comparing IOPS with an HPE 3PAR array using HPE Synergy compute nodes

There isn't any useful information about IOPS here, other than that Primera is better than the 8450. As the 8450 is a much older product, customers also don't know the performance gap between the 9450 and the Primera 670.

Most customers use all-flash arrays (AFA) for OLTP, but the test doesn't show performance in a mixed workload.

02-20-2020 10:03 PM

Re: HPE Primera: Comparing IOPS with an HPE 3PAR array using HPE Synergy compute nodes

Hi,

The purpose of the article was not so much to prove a general sizing for all array configurations, but rather to demonstrate how the SLOB tool can be used to characterize a specific configuration before running a specific workload on it. In this scenario the results will vary. I attempted to emphasize the process of characterizing a particular configuration, but maybe I wasn't clear enough about it.

That being said, we did review the 8450 results against our internal sizing data, and for this particular workload they would have been close to a 9450. We were not making any broad statement about all array configurations, just observing the results against the arrays we were working with at the time. The focus was on the process, and the results of that process will vary depending on the configuration. For measured performance sizing information, it's best to talk to your HPE storage representative, who can get these results based on your desired needs.

Regarding your mixed workload observation: if you are referring to R/W ratios, the bottom of the figure in the blog mentions an 80/20 mix. If you are referring to OLTP versus large-block reads/writes, that would require a completely different workload. It would be interesting, but it is not the process we tested.

Hope this clarifies things.