iscsi vs FC performance

11-23-2021 06:00 AM

I have recently been playing around with the iscsi library to get some comparative performance numbers, and I'm a little surprised how much slower it is than FC. I have a dedicated lab setup where no other workloads come into play, and in my tests FC ends up being 50% faster than iscsi. In both cases there is NVMe storage behind it, which under other conditions (general Linux) has been giving me 550MB/s on read/write under stress, but on HP-UX I'm topping out at 50MB/s on iscsi. I use poor man's test commands such as dd or prealloc to compare results between different configs. My goal isn't really to get metrics on the storage appliance, but rather to compare metrics between FC and iSCSI under the same or similar conditions.

What I'm seeing right now is that either dd or prealloc will go into a sleep state for 70-80% of the execution (I guess waiting on IO) when running through iscsi, but there is plenty of room to push more through, which is puzzling to me. I've toyed around with kctune params and vxtunefs params, but the needle has only moved a little bit.

Has anyone else run into this problem where iscsi performance is subpar?
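
For anyone wanting to repeat this kind of dd-style comparison in a scriptable way, here is a minimal sketch in Python. It is an illustration only, not what was run in the tests above: the function name `write_throughput`, the block size, and the file size are assumptions, and it writes zero-filled blocks, which some arrays compress or deduplicate. It times a sequential write followed by an fsync so that buffered data is counted.

```python
import os
import tempfile
import time


def write_throughput(path, total_bytes, block_size):
    """Sequentially write total_bytes to path and return MB/s (10^6 bytes)."""
    buf = b"\0" * block_size  # zero-filled block; an assumption of this sketch
    written = 0
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    start = time.perf_counter()
    try:
        while written < total_bytes:
            chunk = min(block_size, total_bytes - written)
            # os.write may write fewer bytes than requested, so count its return value
            written += os.write(fd, buf[:chunk])
        os.fsync(fd)  # include the time needed to flush buffered data
    finally:
        os.close(fd)
    elapsed = max(time.perf_counter() - start, 1e-9)
    return written / elapsed / 1e6


if __name__ == "__main__":
    # Demo against a temporary directory; point the path at a file system on
    # the FC LUN and then on the iSCSI LUN to compare the two.
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "throughput_test.bin")
        rate = write_throughput(target, 8 * 1024 * 1024, 64 * 1024)
        print(f"{rate:.1f} MB/s")
```

Running the same call with identical sizes against both LUNs, and repeating it with a few block sizes, gives numbers that are at least comparable with each other, which is the goal stated above.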

11-23-2021 07:01 AM

Query: iscsi vs FC performance

System recommended content:

1. HPE 3PAR StoreServ iSCSI Best Practices Configuring iSCSI with DCB

2. iSCSI Cookbook for Virtual Connect

If the above information is helpful, then please click on "Thumbs Up/Kudo" icon.

Thank you for being a HPE community member.

11-23-2021 07:24 PM - edited 11-23-2021 09:01 PM

Re: Query: iscsi vs FC performance

Is there any documentation that you know of on how to set up initiators on HP-UX for the Emulex cards? The docs only go through ESXi and Windows.

I was able to add the targets during boot and discover them, but the LUNs aren't showing once the system boots. I can't find any references on what to do besides the setup prior to boot.

11-25-2021 06:45 PM

Re: iscsi vs FC performance

Fibre will always be faster than iSCSI. Rather than being a shared resource with protocol overhead, fibre runs with very low overhead in the server, whereas iSCSI runs through a network stack along with all other network activities. Even with a dedicated network, the TCP encapsulation around iSCSI is significant. And CPU loading is much lower for fibre than TCP.

Some advantages for iSCSI: distance. Fibre is limited to typical SAN connections, whereas TCP is not distance-limited thanks to routers and WANs.

Also, iSCSI shares already existing networking, albeit upgraded to at least gigabit, possibly 10 Gbit. Interconnects are easier since switches and routers are likely in place. But iSCSI still shares the party line nature of TCP.

The wait times for data seem to indicate a driver or handshake issue. Use Glance to look at where the time delay is occurring. You will definitely need the latest patches for iSCSI, possibly overall networking too.

Bill Hassell, sysadmin
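
To put rough numbers on the byte-level part of the TCP encapsulation mentioned above, here is a minimal Python sketch. It assumes standard header sizes (38 bytes of Ethernet framing per frame on the wire, 20 bytes each for IPv4 and TCP without options, and the 48-byte iSCSI basic header segment), a hypothetical 8 KiB data PDU, and PDUs that start on frame boundaries, which a real TCP stream does not guarantee. It counts bytes only and says nothing about CPU cost or latency in the initiator.

```python
import math

ETH_WIRE_OVERHEAD = 38  # preamble+SFD 8, header 14, FCS 4, inter-frame gap 12
IPV4_HEADER = 20        # no IP options assumed
TCP_HEADER = 20         # no TCP options assumed
ISCSI_BHS = 48          # iSCSI basic header segment per PDU


def iscsi_wire_efficiency(mtu=1500, pdu_data=8192):
    """Fraction of wire bytes that is SCSI data for one iSCSI data PDU."""
    tcp_payload_per_frame = mtu - IPV4_HEADER - TCP_HEADER
    stream_bytes = ISCSI_BHS + pdu_data
    frames = math.ceil(stream_bytes / tcp_payload_per_frame)
    per_frame_overhead = IPV4_HEADER + TCP_HEADER + ETH_WIRE_OVERHEAD
    wire_bytes = stream_bytes + frames * per_frame_overhead
    return pdu_data / wire_bytes


def ceiling_mb_per_s(link_gbit, efficiency):
    """Upper bound on data throughput in MB/s (10^6 bytes) for a link speed."""
    return link_gbit * 1e9 / 8 / 1e6 * efficiency


if __name__ == "__main__":
    for mtu in (1500, 9000):
        eff = iscsi_wire_efficiency(mtu=mtu)
        print(f"MTU {mtu}: efficiency {eff:.3f}, "
              f"1 GbE ceiling {ceiling_mb_per_s(1, eff):.0f} MB/s, "
              f"10 GbE ceiling {ceiling_mb_per_s(10, eff):.0f} MB/s")
```

Under these assumptions the framing costs roughly 6% of the wire at MTU 1500 and under 2% with jumbo frames, so a result far below the link ceiling points at the host side (driver, handshake, CPU) rather than at header bytes, which fits the suggestion to look at where the wait time occurs.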

11-26-2021 07:02 AM

Re: iscsi vs FC performance

To add to what has already been stated...

The iSCSI stack on Linux/Windows/ESXi has continued to be developed over the last 15 years, including code to take advantage of offload into hardware functions in NICs. There is quite a prevalence of iSCSI in the x86 world, which was always more price sensitive than the commercial UNIX world. It's also often used as the mechanism for delivering IO in HCI stacks.

The HP-UX iSCSI software initiator is almost completely untouched apart from bug fixes since it was originally released (some time around 2005 I think). It was to my knowledge never recommended by HP/HPE as a serious solution for delivering block IO performance on HP-UX. So for example I would be very surprised if anyone in HP ever reviewed the code/performance of the HP-UX iSCSI software initiator when 10GbE became more ubiquitous. I have only ever come across one customer who used it, and they moved off it in about 2012.

TL;DR - if you want block IO performance on HP-UX, use a FC stack - that's what 99% of other HP-UX customers do. I bet it's what 99% of AIX and Solaris customers do too.

I am an HPE Employee

11-29-2021 06:28 AM

Re: iscsi vs FC performance

Thank you for the recommendation on going FC. Based on the test results in one of the docs shared in the first response, it seems they were able to achieve comparable (in some cases even faster) performance. I'd like to try to get it to work with hardware acceleration, as I think that is what was used in the tests. I'm having trouble getting that to work.

What I have done so far is:

- Create a profile in VCM with an iscsi uplink

- During boot there is a menu to configure and discover iscsi targets, which is done as well (targets are discovered)

- Not sure here if I need a driver or what, but LUNs from iscsi aren't showing in the system after the OS is loaded.

Point #3 is where I'm stuck at this point. Have you been able to get that to work?

11-30-2021 02:42 AM - edited 11-30-2021 02:43 AM

Re: iscsi vs FC performance

>> During boot there is a menu to configure and discover iscsi target, which is done as well (targets are discovered)

Wait, are you doing this in EFI on an Integrity BL8x0c blade? I'm sure you can do it on an x86 blade, but I never heard of anyone doing it on an HP-UX blade... It sort of suggests you are trying to boot off iSCSI? That certainly isn't supported in HP-UX - see this guide:

https://support.hpe.com/hpesc/public/docDisplay?docId=c02037108

And specifically the bottom of p38 where it is stated:

The resolution of the ordering problems described above has placed limitations on the iSCSI SWD. Because network initialization is performed using the /var directory, the /var directory cannot be on an iSCSI target. Also, the boot, root, primary swap, and dump file systems are not supported on iSCSI volumes.

If you've really done this in EFI on an Integrity Blade, I can only assume it was there to support iSCSI boot for Windows/Linux when there were IA64 versions of those Operating Systems.

I am an HPE Employee

11-30-2021 04:03 AM

Re: iscsi vs FC performance

Yes, this is on 8x0c blades.

What I'm trying to get working is iscsi hardware acceleration through Virtual Connect Manager.

My understanding was that by offloading the iscsi connectivity to VCM, one would see the targets show up in the OS. Are you saying that's not possible while booting from local disk?

11-30-2021 07:15 AM

Re: iscsi vs FC performance

Where did you get the idea you could do that?

Are you thinking that because you can take a CNA in your blade and through configuration turn it into a FC HBA, you could also turn a CNA into an iSCSI accelerated connection? Well, you can't - CNAs can be configured either as FC ports or as Ethernet ports. If you want to use iSCSI, you define your CNA as an Ethernet NIC and it will show up in HP-UX as an Ethernet NIC. Then you need to stick an IP stack on it in the usual way, and only then can you start using the HP-UX iSCSI software initiator. Instructions for that are in the manual I posted in my previous response.

As I said previously, the iSCSI stack in HP-UX is software only. There is no hardware acceleration beyond that offered by a standard Ethernet NIC.

I am an HPE Employee

11-30-2021 09:06 AM - edited 11-30-2021 09:18 AM

Re: iscsi vs FC performance

I've read that in the iSCSI Cookbook for Virtual Connect, but maybe I misunderstand something. They talk about accelerated iscsi and accelerated iscsi boot - I'm interested in the first one.

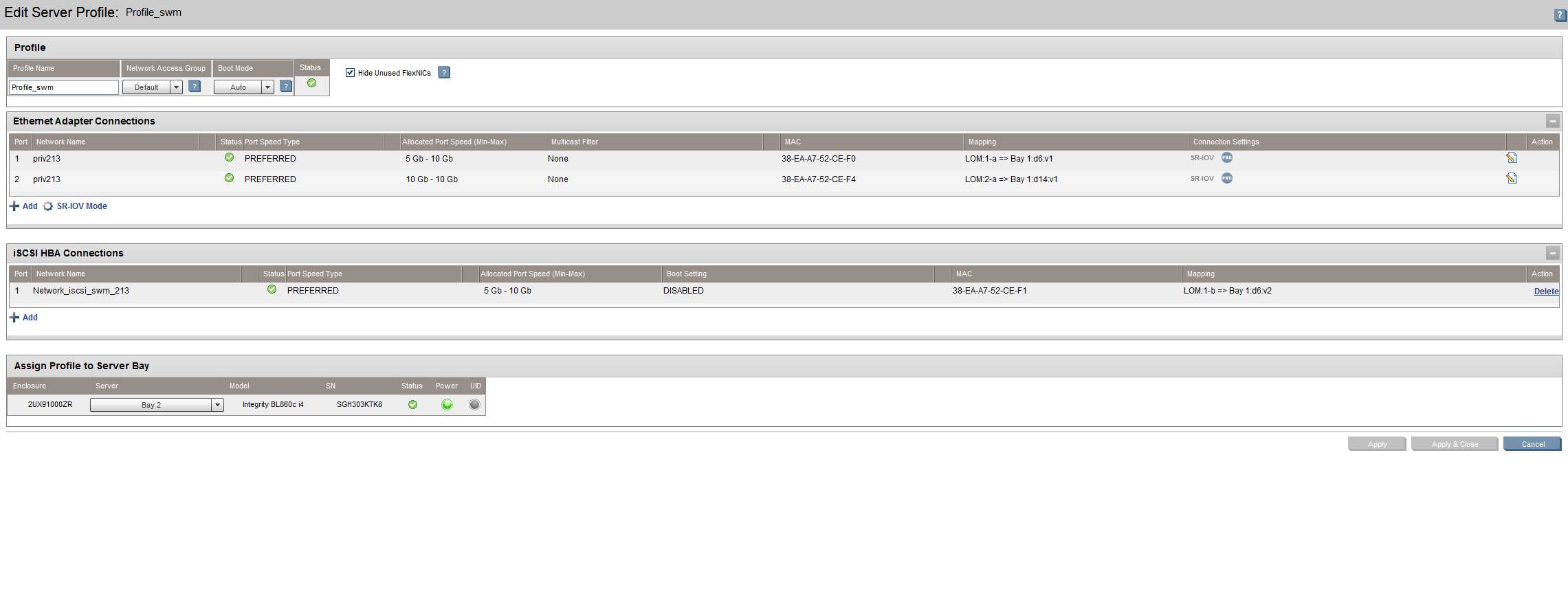

The hardware acceleration that I was talking about is just on the NIC side. Take a look at my VCM config below; I have the boot disabled. My question is how would one really leverage this config in the OS?

The iSCSI Cookbook for Virtual Connect also only shows Windows and ESXi configuration.

12-01-2021 06:13 AM

Re: iscsi vs FC performance

If you read that document, you managed to miss the most important line in it for you. Right there on p10:

Note: HP BladeSystem c-Class Integrity Server Blades do not support Accelerated iSCSI and Accelerated iSCSI boot with Virtual Connect.

The only parts of that document that are relevant to you are the parts that talk about generally how you set up Ethernet and your uplinks to best support iSCSI. You may as well stop reading at p44, and even the material before that tells you nothing about how any of it pertains to HP-UX iSCSI initiators.

I am an HPE Employee

12-01-2021 07:12 AM

Re: iscsi vs FC performance

Thank you for pointing that out, and yes, I certainly missed that part.

I do have two more questions if I may: Is there hardware acceleration for c-class blades without VCM?

Do you think it's feasible to port a modern iscsi library, say from Debian/Ubuntu, to HP-UX?

12-01-2021 10:45 AM - edited 12-01-2021 10:47 AM

For Integrity C class blades (BL860/870/890)? No, there isn't. There may be something for ProLiant C class blades (BL460 for example), but I'm not aware of anything.

Porting an IO stack from another OS? I would say that would be VERY hard. The kernel is substantially different from Linux and isn't open source. Take a look at the packages on the HP-UX Porting and Archive Center http://hpux.connect.org.uk/hppd/packages.html - this is pretty much a complete list of all open source software which has been successfully ported and maintained on HP-UX. You will see a complete lack of any ports of open source filesystems, IO stacks, network stacks etc... You really need to be an HP-UX kernel developer working at HPE to have any chance of doing that, and as the whole OS is going end of lab support in less than 5 years, I can't see them ever doing that now (yes, I work for HPE, but not in the HP-UX labs). The HP-UX iSCSI software initiator, whose manual I pointed you at in one of my previous posts, is the ONLY way you are ever going to be able to use iSCSI on HP-UX. The fact it doesn't perform great is not that surprising to be honest.

As I said in my first post, and it still holds true: TL;DR - if you want to do high performance block IO on an HP-UX box, use fibre channel.

I am an HPE Employee

12-01-2021 07:01 PM

Re: iscsi vs FC performance

Thank you for your help. FC it is then.