OpenVMS system crash down after configure the cluster service

Categories

Company

Local Language

Forums

Discussions

Forums

- Data Protection and Retention

- Entry Storage Systems

- Legacy

- Midrange and Enterprise Storage

- Storage Networking

- HPE Nimble Storage

Discussions

Forums

Discussions

Discussions

Discussions

Forums

Discussions

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

- BladeSystem Infrastructure and Application Solutions

- Appliance Servers

- Alpha Servers

- BackOffice Products

- Internet Products

- HPE 9000 and HPE e3000 Servers

- Networking

- Netservers

- Secure OS Software for Linux

- Server Management (Insight Manager 7)

- Windows Server 2003

- Operating System - Tru64 Unix

- ProLiant Deployment and Provisioning

- Linux-Based Community / Regional

- Microsoft System Center Integration

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Community

Resources

Forums

Blogs

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

09-12-2016 06:33 PM


Dear all,

We have run into a new problem while configuring an OpenVMS cluster.

The cluster contains two nodes (FCNOD1 and FCNOD2), which communicate over TCP/IP.

I configured FCNOD1 first, and it then waited for the second node.

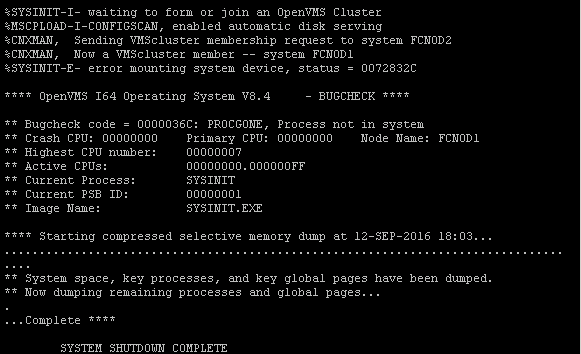

After I configured FCNOD2, FCNOD1 could find it, but FCNOD1 soon crashed and wrote a crash dump. The details are as follows:

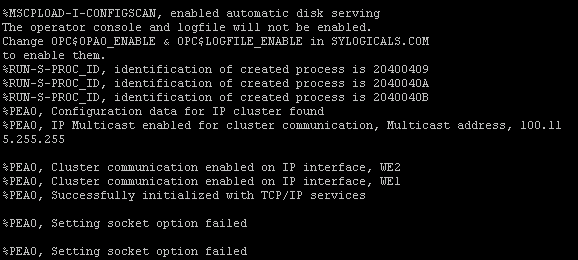

FCNOD2 is now in a waiting state. The details are as follows:

I have restarted FCNOD1 several times, but each time it ends up in the same abnormal state.

Could you please tell me why the first node crashes and writes a dump?


09-12-2016 09:15 PM


Re: OpenVMS system crash down after configure the cluster service

Others' opinions may differ, but I find it much easier to deal with plain text than with pictures of plain text.

> [...] status = 0072832C

alp $ exit %x0072832C

%MOUNT-F-DIFVOLMNT, different volume already mounted on this device

help /mess DIFVOLMNT
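A related way to turn a hex status value into its message text is the DCL lexical function F$MESSAGE, which returns the text without exiting the current procedure. The following is a minimal sketch that uses the status value reported on the crash console in this thread:

```dcl
$ ! Translate the status code from the console output into its message text
$ WRITE SYS$OUTPUT F$MESSAGE(%X0072832C)
```

It should display the same %MOUNT-F-DIFVOLMNT text shown above.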

> The cluster contains 2 nodes [...]

Not a very detailed description of anything. Given that error status, it might be helpful to know something about the system disk(s) of these two systems: what, where, connected how, label(s), and so on.

> [...] I64 [...]

Some hints as to the server model(s) (beyond "I64") might also be interesting.


09-13-2016 12:28 AM


Thanks for your reply.

Here is some more information about the cluster:

FCNOD1: installed in an HP C7000 blade server with two local disks, DKA100 and DKA200. OpenVMS 8.4 is installed on DKA200.

FCNOD2: installed in an HP C7000 blade server with two local disks, DKA100 and DKA200. OpenVMS 8.4 is installed on DKA200.

I connected both nodes to a SAN storage system and mapped six LUNs to them. Both nodes use the second LUN as the quorum disk.

Please let me know whether this information is sufficient.

Looking forward to your reply.


09-13-2016 01:52 PM


Hi,

So you are using a local disk as the system disk on each system? That is not normally how a "regular" VMS cluster is done. It usually has a single common system disk on shared storage (a SAN disk, in your case) that both systems boot from, although each system boots into its own "root". You may wish to review the "Guidelines for OpenVMS Cluster Configurations" manual.

If you do intend each system to have its own local system disk (which makes things like applying patches twice as hard), then you will want to change the volume label on at least one of your system disks. Your configuration appears to be sharing those system disks across the cluster with the other node. When the second node comes up, its disk cannot be mounted because both disks have the same label. The easiest way to change the label is probably to boot from the DVD, get to the point where you can issue DCL commands, and use:

$ SET VOLUME/LABEL=x DKA200:

where x is the new name.
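The following sketch checks which label each node sees once the label has been changed and the systems have been restarted. It assumes the system disk is DKA200: as in this thread. The F$GETDVI lexical function with the VOLNAM item returns the volume label of a mounted disk, and SHOW DEVICE D lists the disks visible to the node along with their volume labels:

```dcl
$ ! Display the volume label of the local system disk (assumed to be DKA200:)
$ WRITE SYS$OUTPUT F$GETDVI("DKA200:","VOLNAM")
$ ! List all disk devices this node can see, including their volume labels
$ SHOW DEVICE D
```

Run this on both nodes. No two disks in the list should share a label.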

Regards.

Dave


09-13-2016 07:08 PM


Dear Dave:

Thanks for your reply.

I have changed the volume labels of the two servers as follows:

- FCNOD1$DKA200 VOLUME LABEL=FCNOD1

- FCNOD2$DKA200 VOLUME LABEL=FCNOD2

However, the problem still happens.

When I configured the cluster, I set the ALLOCLASS parameter on both nodes to the same value.

Should I set them to different values when both servers are configured as boot servers?

Looking forward to your reply.

Thanks very much!

Br

Tong


09-13-2016 08:52 PM


> I have changed the volume label of the two server [...]

Have you shut down both systems since this change? (A cluster member can remember many things, even when other cluster members go away and then return.)

> Should I set them to different value when both servers are set to boot server?

I believe that ALLOCLASS is significant when systems use shared storage devices. I would not expect the device you boot from to affect this.

I have a small collection of Alpha and IA64 systems, each of which has its own system (boot) disk. I have no such trouble with my cluster. So long as each disk has (and has had since the first cluster member booted) a unique label, I would not expect a problem like this.


09-13-2016 08:53 PM


(Accepted solution)

We use a different allocation class for each node in the cluster, as well as a different volume label for each local disk. Typically we also give all of the disk devices on a SAN the same allocation class (e.g., $1$dga100, $1$dga101, etc.) and use another allocation class for tape drives (e.g., $2$mga2).

If each node has a local SCSI disk dka200, you will then see them as $3$dka200, $4$dka200, $5$dka200, and so on when you do a show device d.
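The following sketch gives each node its own allocation class. It uses the values 3 and 4 from the example above, which are illustrative choices. It also assumes system parameters are kept in each node's SYS$SYSTEM:MODPARAMS.DAT so that AUTOGEN preserves them:

```
! SYS$SYSTEM:MODPARAMS.DAT on FCNOD1 (illustrative value)
ALLOCLASS = 3

! SYS$SYSTEM:MODPARAMS.DAT on FCNOD2 (illustrative value)
ALLOCLASS = 4
```

Then run AUTOGEN on each node to apply the parameter and reboot. After the reboot, confirm the active value:

```dcl
$ ! Regenerate parameters from MODPARAMS.DAT without using old feedback data, then reboot
$ @SYS$UPDATE:AUTOGEN GETDATA REBOOT NOFEEDBACK
$ ! After the node is back up, display the allocation class currently in effect
$ MCR SYSGEN SHOW ALLOCLASS
```

Following the earlier advice in this thread, it is safest to have both nodes down before either comes back up with its new allocation class. Otherwise a running member may still remember the old device names.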


09-14-2016 02:25 AM


Finally, I changed the allocation class on each node to a different value, and now it works.

The cluster can start up successfully.

Thanks very much for your help.

BR

TONG