From DevOps to AIOps with the power of HPC: Why and how it’s time to make the move

Learn how AIOps uses AI, ML, and DL with the power of HPC to simplify IT operations management while accelerating and automating problem resolution in complex modern IT environments.

Looking for something compelling to read in the new year? If you are interested in DevOps, The Phoenix Project: A Novel About IT, DevOps, and Helping Your Business Win is a must-read book.

This entertaining novel takes you from the very familiar chaos of daily IT operations to the three underlying principles of the DevOps movement: systems thinking, amplifying feedback loops and creating a culture of continuous experimentation and learning. Since the book’s publication in 2013, the DevOps movement has come a long way, but some of the challenges of operating and managing IT environments have been getting worse:

- The complexity of enterprise IT environments is growing exponentially, with distributed edge, hybrid cloud, and multicloud environments.

- With cloud-native and microservices architectures, the ever-increasing number of different technological components involved in a business service makes troubleshooting unmanageable with traditional, manual approaches. As the number of moving parts grows, so does the time to diagnose issues.

- With the adoption of CI/CD pipelines, the speed of change is also exponentially increasing. In 2009, Allspaw and Hammond gave their famous 10+ Deploys Per Day: Dev and Ops Cooperation at Flickr presentation.1 Today, companies like Amazon, Google and Netflix deploy code thousands of times per day.

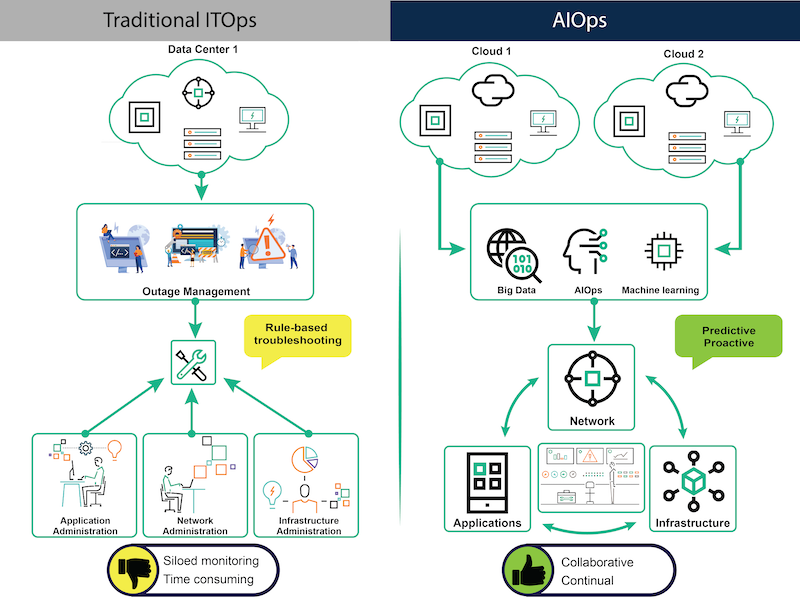

- Detection of issues is based on monitoring systems that are often siloed by technology layer (infrastructure, network, application) and/or by location (on-premises data centers, edge, private, and public clouds), each one generating its own noise.

Artificial intelligence to the rescue

Because of the increased complexity and speed of change of IT environments, the amount of data that operations teams need to analyze to perform their work is also growing exponentially. These huge amounts of data are harder to manage, and harder to make sense of. This is where artificial intelligence (AI) comes into play—using machine learning (ML) and deep learning (DL) algorithms to process, analyze and gather insights from data.

AIOps is the term that describes the use of AI to simplify IT operations management and accelerate and automate problem resolution in complex modern IT environments. AIOps is not a replacement for DevOps, but an enhancement.

AIOps is about automating problem resolution and improving performance efficiency. It cuts through noise and identifies, troubleshoots, and resolves common issues within IT operations. It brings together data from diverse sources and performs real-time analysis at the source. It analyzes historical as well as current data, using ML to link anomalies and observed patterns to relevant events. Finally, it initiates appropriate automation-driven action, which can yield continuous improvements and fixes. Here’s a diagram that shows differences between the traditional approach and the AIOps approach:

AIOps use cases can help IT organizations with many current challenges, such as:

- Reducing the noise and prioritizing business-critical issues, by correlating events with the same root-cause and eliminating duplicates

- Reducing mean time to detect (MTTD), by automatically identifying trends that point to impending issues with real-time anomaly detection

- Reducing mean time to repair (MTTR) with root-cause analysis

- Predicting workload capacity requirements to optimize resource usage and cost

- Supporting the speed of application releases and DevOps processes by gaining visibility of change impact with unified dashboards for services monitoring
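As a rough illustration of the first use case in the list above, the sketch below groups events that share a signature and arrive close together in time, so a burst of duplicates surfaces as a single incident. The `Event` fields, the `correlate` function, and the 60-second window are all hypothetical choices made for this example. Sharing a signature is only a crude proxy for sharing a root cause; production AIOps tools typically add topology data and ML-based correlation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    timestamp: float  # seconds since some reference point (assumed unit)
    source: str       # hypothetical: host or service that raised the event
    signature: str    # hypothetical: normalized alert text or error code


def correlate(events, window_seconds=60.0):
    """Group events with the same signature that arrive within
    window_seconds of the previous event in the same group."""
    groups = []
    latest_group = {}  # signature -> index of its most recent group
    for ev in sorted(events, key=lambda e: e.timestamp):
        idx = latest_group.get(ev.signature)
        if idx is not None and ev.timestamp - groups[idx][-1].timestamp <= window_seconds:
            groups[idx].append(ev)  # duplicate of an ongoing incident
        else:
            groups.append([ev])     # start a new incident
            latest_group[ev.signature] = len(groups) - 1
    return groups


raw_events = [
    Event(0.0, "web-1", "disk_full"),
    Event(10.0, "web-2", "disk_full"),
    Event(15.0, "db-1", "conn_timeout"),
    Event(200.0, "web-1", "disk_full"),
]
incidents = correlate(raw_events)
for incident in incidents:
    first = incident[0]
    print(f"{first.signature}: {len(incident)} event(s) starting at t={first.timestamp}")
```

Here four raw events collapse into three incidents: the two `disk_full` alerts ten seconds apart are merged, while the one arriving much later opens a new incident.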

Key components for AIOps solutions

The two main components for AIOps solutions are:

- Data platform: AIOps requires a scalable data platform that allows the ingestion, storage, and analysis of the variety, velocity, and volume of data generated by IT, at the right speed for each use case, without creating silos.

- Machine learning models and management framework: Different types of supervised, semi-supervised, and unsupervised ML and DL models can be involved in AIOps use cases. For example, trend identification with ML models enables real-time anomaly detection and predictive capabilities. But model building is just one step of the ML model lifecycle, which also needs to consider data preparation, model training, model deployment, model monitoring, and model retraining/redeployment.
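To make the idea of real-time anomaly detection a little more concrete, here is a minimal sketch that flags samples deviating strongly from a rolling baseline of recent values. It is a simple statistical stand-in rather than a trained ML or DL model, and it is not taken from any HPE product; the function name `rolling_zscore_anomalies`, the window size, and the z-score cutoff are assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, pstdev


def rolling_zscore_anomalies(values, window=5, threshold=3.0):
    """Return indices of samples that differ from the mean of the
    preceding `window` samples by more than `threshold` standard deviations."""
    history = deque(maxlen=window)
    anomalies = []
    for i, x in enumerate(values):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            # Skip scoring when the baseline is perfectly flat (sigma == 0).
            if sigma > 0 and abs(x - mu) / sigma > threshold:
                anomalies.append(i)
        history.append(x)
    return anomalies


# Hypothetical metric stream, e.g. request latency in milliseconds.
latency_ms = [10, 11, 10, 12, 11, 10, 50, 11, 10, 12]
print(rolling_zscore_anomalies(latency_ms))
```

Because the baseline adapts to recent behavior, the cutoff is relative to what is normal for the metric at that moment rather than a fixed limit on the raw value. A real deployment would also need the rest of the lifecycle described above, such as retraining and monitoring.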

To dig deeper, download this technical white paper: Artificial Intelligence for IT Operations—A study on high-performance compute.

Case Study: AIOps for HPC systems

Today, HPE is using AI/ML to develop advanced, non-threshold-based real-time analytics that reduce data center downtime through rapid, early anomaly detection that runs automatically, at scale and at speed. HPE is also developing predictive capabilities to improve data center energy efficiency and sustainability, with an initial focus on power usage effectiveness (PUE), predictive scheduling of cooling for large jobs, water usage effectiveness (WUE), carbon usage effectiveness (CUE), and similar metrics. The effort encompasses both IT systems and the supporting facility.
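For readers unfamiliar with the efficiency metrics named above, the sketch below computes them using their commonly cited definitions, each a ratio against IT equipment energy. The function names and the sample figures are invented for illustration and do not describe any real facility or HPE tooling.

```python
def _ratio(numerator, it_equipment_kwh):
    """Divide by IT equipment energy, rejecting non-positive denominators."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return numerator / it_equipment_kwh


def pue(total_facility_kwh, it_equipment_kwh):
    """Power usage effectiveness: total facility energy / IT energy (unitless)."""
    return _ratio(total_facility_kwh, it_equipment_kwh)


def wue(site_water_liters, it_equipment_kwh):
    """Water usage effectiveness: site water use / IT energy (L/kWh)."""
    return _ratio(site_water_liters, it_equipment_kwh)


def cue(co2_kg, it_equipment_kwh):
    """Carbon usage effectiveness: CO2-equivalent emissions / IT energy (kg/kWh)."""
    return _ratio(co2_kg, it_equipment_kwh)


# Hypothetical figures over the same measurement period.
it_kwh = 1000.0
print(f"PUE = {pue(1500.0, it_kwh):.2f}")
print(f"WUE = {wue(1800.0, it_kwh):.2f} L/kWh")
print(f"CUE = {cue(600.0, it_kwh):.2f} kgCO2e/kWh")
```

A PUE of 1.0 would mean every unit of energy reaches IT equipment, so lower values indicate less overhead from cooling and other facility loads.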

To support this AIOps initiative, HPE is also developing a generic high-performance system monitoring framework. This next-generation system monitoring framework for HPC machines, called Kraken Mare, was developed under the HPE/DOE PathForward2 project. It is designed to collect, move, and store vast amounts of data without any assumption of static data sources in a distributed, highly scalable way, and to provide different access patterns (such as streaming analytics or traditional data analysis using long-term storage) in a fault-tolerant way.

Following a 3-step methodology to speed AIOps design and deployment

Wherever you are on your AIOps journey, the expert AI consultants with HPE Pointnext use a three-step methodology to guide you through your specific use case. The focus is on operations problem-solving and improvements.

- Explore—We work with you to understand the outcomes and challenges AI brings. We ground teams on common AI terminology, fostering shared understanding and selecting the best use cases. The goal is to clearly align technology with the business, so the initiative benefits from having the business buy-in early on.

- Experience—We identify the data sources that will be required for the use case and create a high-level roadmap for use case implementation. This is followed by a proof-of-value (POV) to demonstrate how the solution would be deployed into a production environment. This POV is tested and the outcome is validated.

- Evolve—Now we are ready to work with you to evolve and scale the AI solution. Leveraging HPE’s optimized data center infrastructure that spans from AI edge to cloud coupled with HPE GreenLake pay-per-use consumption models makes this a much easier part of the complete journey.

The delivery phases for your AI solution move from workshop to POV, then from design to implementation and operations. When you engage with HPE AI experts, you can discover ways to apply AI to your specific needs in weeks as opposed to months, so you can more quickly identify how to maximize the value of your data. This insight translates into tangible benefits that include limited downtime, reduced costs through automation, and improved service quality.

Discover more about how data transformation services can help you meet transformation goals—from edge to cloud.

Insights Experts

Hewlett Packard Enterprise

twitter.com/HPE_AI

linkedin.com/showcase/hpe-ai/

hpe.com/us/en/solutions/artificial-intelligence.html

1 10+ Deploys Per Day: Dev and Ops Cooperation at Flickr

2 PathForward is a project under the Exascale Computing Project (ECP) run by the U.S. Department of Energy (DOE) with the goal of accelerating technology development for upcoming exascale-class HPC systems.
