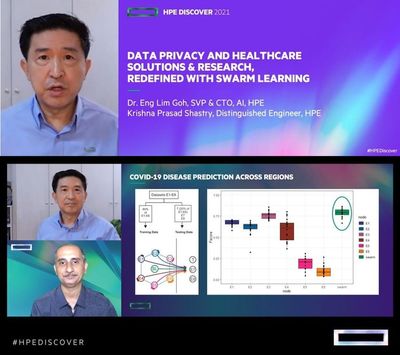

Swarm learning, inspired by biology, leverages distributed data in a secure, decentralized network

This new blog article from Kohei Kubo, EMEA & APJ Industry and Workload Marketing Manager, explores swarm learning, a machine learning concept from HPE Labs. Swarm learning is based on blockchain and designed to ensure that only legitimate participants can join a decentralized learning network, enabling companies to leverage distributed data while preserving data integrity.

Today's machine learning: meeting the challenges

As more and more devices and equipment are connected to the network in the trend toward IoT, an enormous amount of data is being generated. This growth will be further accelerated by 5G, making it essential to extract value effectively from data generated outside the central data center or cloud.

In today's machine learning, data is sent to a central data center or cloud for aggregation, training, and model creation. The models created at the central location are then deployed to the edge environment, where the data is generated. There, the models make inferences, decisions are made based on real-time predictions, and autonomous actions are taken. This is a cumbersome process, however, because sending all the data collected at the edge to a central server for training may raise security and privacy issues, add data latency, and increase network transmission costs.

Security and privacy issues

In general, the medical data of individuals is too confidential to be shared or transferred outside of a hospital or medical facility, and moving and consolidating the data puts the information in transit at risk of various attacks. Yet consider the case where an inference model is created to assess a patient’s health or illness from medical data provided by multiple healthcare or hospital facilities. Such a model could support a diagnosis or treatment plan, but building it centrally would require exactly the data transfer that confidentiality rules out.

Challenges of data latency and network transfer costs

Self-driving cars may have a number of sensors that generate several petabytes of data per day. When data is generated in large volumes at distributed locations, it is very difficult to aggregate it at a central location, such as the cloud, for centralized machine learning.
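To give a feel for the transfer problem, here is a rough back-of-the-envelope sketch. It assumes decimal petabytes (10^15 bytes) and a perfectly steady upload with no protocol overhead; the function name `required_gbps` is made up for this illustration.

```python
def required_gbps(petabytes_per_day: float) -> float:
    """Sustained link speed in Gbit/s needed to upload a daily data volume.

    Assumes decimal units (1 PB = 1e15 bytes) and no protocol overhead.
    """
    bits_per_day = petabytes_per_day * 1e15 * 8  # bytes -> bits
    seconds_per_day = 24 * 60 * 60
    return bits_per_day / seconds_per_day / 1e9  # bits/s -> Gbit/s


if __name__ == "__main__":
    for volume in (1, 2, 5):
        rate = required_gbps(volume)
        print(f"{volume} PB/day needs roughly {rate:.1f} Gbit/s, around the clock")
```

Under these assumptions, even a single petabyte per day works out to more than 90 Gbit/s of sustained throughput, which is why moving the training to the data is attractive.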

Data that is siloed makes collaboration difficult

Collaborating on data across organizational and regional boundaries can be challenging, even when aggregating that data could uncover useful insights.

For example, machine learning models for fraud detection and risk detection in financial institutions are developed using each financial institution's own data, but still have the potential for false positives. This is because the training data available to a single institution generally contains only a limited number of fraudulent transactions, which may reduce the accuracy of the model. If data could be shared among financial institutions, there would be a greater potential to improve the accuracy of fraud detection.

Swarm learning and its benefits

To address these challenges, HPE Labs has developed a new technology called swarm learning.

Swarm learning is a secure, distributed machine learning solution that leverages blockchain for data in a distributed edge environment.

In swarm learning, both the training of models and the inference with the trained models are done in the edge environment. In addition, data security and privacy are greatly enhanced because only information such as the learned parameters of the model, rather than real data, is shared among the collaborating machine learning nodes.

You may have seen flocks of thousands of starlings wheeling through the sky, or schools of fish doing the same in the water. The word "swarm" was inspired by the fact that various animals, usually for their own protection, exhibit a kind of decentralized behavior that does not depend on the movements of a single leader.

Inspired by biology, swarm learning is based on blockchain, and is designed to ensure that only legitimate participants join a decentralized learning network. This allows companies to leverage distributed data while protecting data privacy and security.

Swarm learning: Mechanism and workflow

Mechanism

As mentioned earlier, swarm learning is designed to allow nodes to collaboratively train a common machine learning model, without having to share the data itself; each node processes the training data locally. The parameters (weights) obtained by training the model on local data are shared by all nodes and merged to build the global model. The merging process is not performed by a fixed central coordinator, but by a temporary leader that is dynamically selected among the nodes, resulting in a distributed swarm network. This also provides better fault tolerance than traditional centralized frameworks.
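The mechanism can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not HPE's implementation: the `Node` class, the one-parameter model `y = weight * x`, the plain averaging, and the random choice that stands in for leader selection are all made up for this example.

```python
import random


class Node:
    """One swarm participant; its private data never leaves this object."""

    def __init__(self, name, private_data):
        self.name = name
        self._private_data = private_data  # list of (x, y) pairs, kept local
        self.weight = 0.0                  # single parameter of y = weight * x

    def train_locally(self, steps=50, lr=0.01):
        """Plain gradient descent on the local mean squared error."""
        for _ in range(steps):
            grad = sum(2 * (self.weight * x - y) * x
                       for x, y in self._private_data)
            self.weight -= lr * grad / len(self._private_data)
        return self.weight  # only this number is shared with other nodes


def merge_round(nodes, rng):
    """One round: local training, then a merge led by a temporary leader."""
    shared = {node.name: node.train_locally() for node in nodes}
    # Assumption: a random pick stands in for the real leader selection.
    leader = rng.choice(nodes)
    # The leader averages the shared parameters (no raw data involved).
    merged = sum(shared.values()) / len(shared)
    for node in nodes:
        node.weight = merged  # every node adopts the merged parameter
    return leader.name, merged


if __name__ == "__main__":
    rng = random.Random(0)
    nodes = [
        Node("site_a", [(1.0, 2.1), (2.0, 3.9)]),
        Node("site_b", [(1.0, 1.9), (3.0, 6.2)]),
        Node("site_c", [(2.0, 4.0), (4.0, 8.1)]),
    ]
    for round_no in range(1, 6):
        leader, weight = merge_round(nodes, rng)
        print(f"round {round_no}: leader={leader}, merged weight={weight:.3f}")
```

Because the leader can differ from round to round, no single node is a permanent point of failure, which is the fault-tolerance argument made above.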

Workflow

- Registration

The swarm learning process begins with the registration of each node to the swarm smart contract.

- Local model training

Each node trains its local model at the edge until it reaches a predetermined number of iterations. Once that number of iterations is reached, the node exports the parameter values to a file and uploads it to the shared file system for the other nodes to access. It then notifies the other nodes that it is ready for the parameter sharing step.

- Parameter sharing

Here we start with the process of selecting a leader, who will be responsible for merging the parameters obtained after local training of all the nodes.

One of the nodes is chosen as the leader according to the predetermined leader selection algorithm. The leader then downloads the parameter files from each participant so that the parameter merging step can be performed.

- Parameter merge

The leader merges the downloaded parameter files. Multiple methods of merging are supported here, including average, weighted average, and median. The leader combines the parameter values of all nodes using the selected merge algorithm and informs the other nodes of the merged parameters. Each node then downloads a file from the leader, and updates its local model with the new set of parameter values.

- Check for stopping criteria

Finally, each node uses its local data to evaluate the model with the updated parameter values and computes its local evaluation metrics. When all merge participants have finished, the leader merges the local metric values to calculate the global evaluation metrics. The synchronization step is now marked as complete.

The current state of the system is then compared to the stop criteria, and if the criteria are found to be met, the swarm learning process is stopped. Otherwise, the steps of local model training, parameter sharing, parameter merging, and stopping criteria checking are repeated until the criteria are met.
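The workflow above can be sketched as a single-process simulation. Everything here is a simplifying assumption for illustration: the function names, the one-parameter model `y = weight * x`, the round-robin leader choice, and the loss threshold used as the stopping criterion. A real deployment would use the swarm smart contract and a shared file system rather than Python dictionaries.

```python
from statistics import mean, median


def merge(values, method="average", weights=None):
    """Combine one parameter across nodes with the chosen merge method."""
    if method == "average":
        return mean(values)
    if method == "weighted_average":
        return sum(w * v for w, v in zip(weights, values)) / sum(weights)
    if method == "median":
        return median(values)
    raise ValueError(f"unknown merge method: {method}")


def local_training(weight, data, iterations, lr=0.01):
    """Train y = weight * x on local data for a fixed number of iterations."""
    for _ in range(iterations):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def local_loss(weight, data):
    """Mean squared error of the model on one node's local data."""
    return sum((weight * x - y) ** 2 for x, y in data) / len(data)


def run_swarm(datasets, method="average", iterations=20,
              target_loss=0.05, max_rounds=50):
    # Registration: a sorted list stands in for the swarm smart contract.
    node_ids = sorted(datasets)
    sizes = [len(datasets[n]) for n in node_ids]
    weights = {n: 0.0 for n in node_ids}
    for round_no in range(1, max_rounds + 1):
        # Local model training on each node's own data.
        for n in node_ids:
            weights[n] = local_training(weights[n], datasets[n], iterations)
        # Parameter sharing: pick a leader (assumed round-robin here).
        leader = node_ids[round_no % len(node_ids)]
        # Parameter merge: the leader combines all shared parameters.
        merged = merge([weights[n] for n in node_ids], method, sizes)
        weights = {n: merged for n in node_ids}
        # Stopping check: merge local metrics into a global metric.
        losses = [local_loss(merged, datasets[n]) for n in node_ids]
        global_loss = merge(losses, "weighted_average", sizes)
        if global_loss <= target_loss:
            break
    return merged, global_loss, round_no, leader


if __name__ == "__main__":
    datasets = {
        "site_a": [(1.0, 2.1), (2.0, 3.9)],
        "site_b": [(1.0, 1.9), (3.0, 6.2)],
        "site_c": [(2.0, 4.0), (4.0, 8.1)],
    }
    for method in ("average", "weighted_average", "median"):
        weight, loss, rounds, leader = run_swarm(datasets, method)
        print(f"{method}: weight={weight:.3f}, loss={loss:.4f}, "
              f"rounds={rounds}, last leader={leader}")
```

The median merge is less sensitive to a single node with outlying parameters, while the weighted average lets nodes with more data contribute more; which one fits best depends on how the data is distributed across nodes.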

Use cases: Medical applications

In the white paper, Swarm Learning: Gaining a Competitive Edge from Distributed Data, the following use case example is presented:

"Consider, for example, three breast cancer research institutions in the US, Europe, and Asia, each with its own limited dataset on breast cancer.

These institutions share a common goal of improving the accuracy of breast cancer diagnosis through the development and training of machine learning models. Since each institution's data is limited in size and may be biased towards certain age groups, they want to share their data in a manageable way that is ideally limited to only useful information related to breast cancer."

In this bona fide effort to collaborate and improve the overall health of humanity, a centralized machine learning approach faces significant risks in terms of regulatory compliance. Existing regulations governing medical records in the countries where each institution is located may hinder sharing real data or obtaining approval for its transfer to a central location that could be outside the country of origin.

Swarm learning minimizes regulatory compliance risk by eliminating the need to transfer real data, and solves boundary challenges by performing training close to the data.

DZNE (German Center for Neurodegenerative Diseases)

The exchange of medical research data between different locations and countries is subject to regulations regarding data protection and data sovereignty. In addition, there are technical barriers. For example, when transferring large amounts of data digitally, the performance of data lines can quickly reach its limits. As a result, many medical research studies are limited to a region and cannot take advantage of data available in other regions. Swarm learning, developed jointly by HPE and DZNE (The German Center for Neurodegenerative Diseases), is opening up exciting new possibilities for collaboration, and greatly accelerating medical research.

Data sharing is key to scientific breakthroughs, especially in medical research. Yet the divide between what is possible and what global privacy legislation allows has widened. Learn how HPE’s Swarm Learning fundamentally changes the ML paradigm, is distinguished by its use of blockchain, and opens novel opportunities for collaboration across boundaries.

For more in-depth information on the fascinating topic of swarm learning, please be sure to check out the following:

Improving Machine Learning Models with Swarm Learning (Discover 2019 Video)

DZNE press release - AI with Swarm Intelligence

The Big Shift: Healthcare Research Redefined (Video)

Swarm learning and the artificially intelligent edge (enterprise.nxt feature article)

Swarm Learning – Turn Your Distributed Data into Competitive Edge (Discover 2019 Video)

Blog author Kohei Kubo, EMEA & APJ Industry and Workload Marketing Manager, is a frequent - and popular - contributor to the HPE Community. He is responsible for driving edge to cloud solutions in the Industry and Workload Marketing team. Kohei Kubo is based in Tokyo, Japan.

Connect with Kohei Kubo on Twitter and LinkedIn!
