Experience the data pipeline from edge to core to cloud

Many businesses today are experiencing an influx of data from the edge, generated by devices, sensors and machines. Some of this data is expected and planned for; at other times, it is more than can be handled.

Dealing with data is a constant challenge. In this new world, customers face two primary data challenges in rationalizing and optimizing their time to value:

- Hybrid IT – how core infrastructure and core business applications will function in a hybrid IT world, where they are driven by demands for faster deployment and greater agility in spinning up new applications to respond to the velocity and variety of data types emerging from the intelligent edge

- Intelligent Edge – how to deal with a wealth of devices and the data that they generate and send (including the volume of data and the different data types)

When, where and how we analyze data is also changing. Analytics is a key area of focus for businesses: they need to analyze this new type of data, at the new speed at which it is generated at the intelligent edge, and do so in a hybrid world. It starts at the intelligent edge, with data being generated from a multitude of sensors and devices. Sometimes that data is collected and analyzed at the device level itself; sometimes there’s an aggregation point, which might be a car, a remote site (such as a hospital with patient medical sensors), or simply the PC of a data scientist.

Some form of that data is often sent back to the core. If it’s coming from multiple streams and multiple devices, it needs to be analyzed in real time. The analytics done in the core can also happen in the cloud, for customers who are looking for special-purpose functionality, such as spinning up GPU-focused test beds for short-term machine learning projects, or for longer-term storage as part of a tiered storage environment.

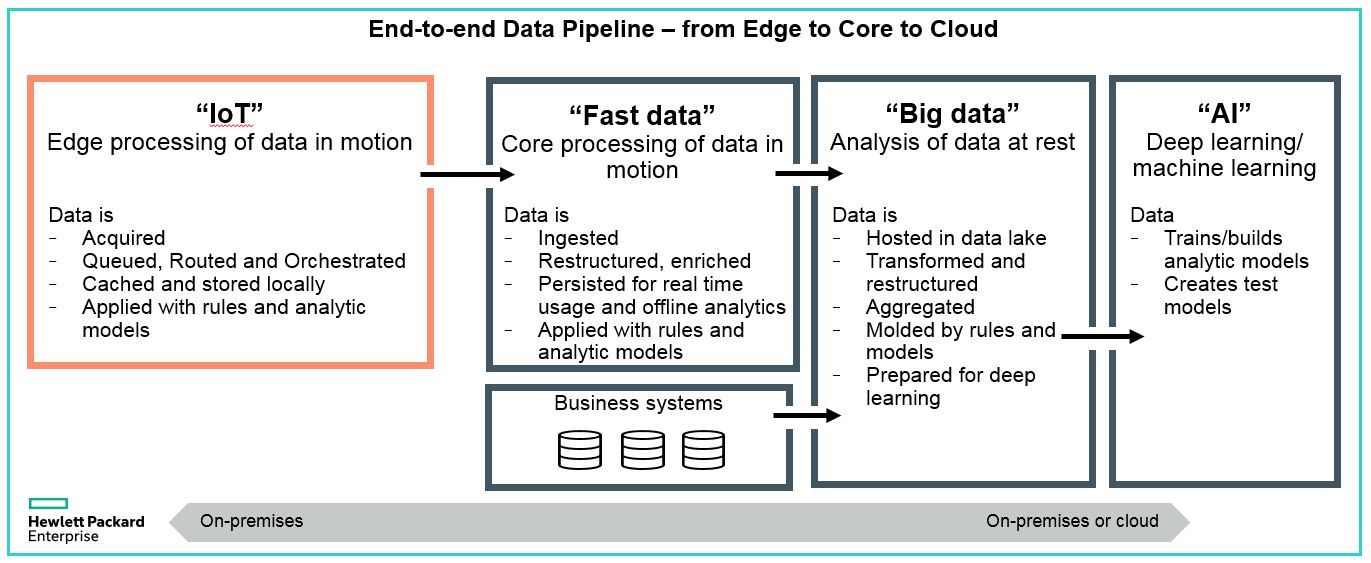

All of this creates the data pipeline.

The data flow from edge to core to cloud needs routing through a data pipeline, which provides an infrastructure that not only lets data flow bi-directionally but also allows analytic processes to be implemented in real time, in near-real time, and at rest, as well as AI modeling.
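As a rough illustration of the edge-to-core flow described above, here is a minimal Python sketch. It is not an HPE implementation: the function names (`aggregate_at_edge`, `analyze_at_core`), the window size, the threshold and the sample readings are all hypothetical, chosen only to show raw sensor data being summarized at an aggregation point, checked at the core, and kept for later at-rest analysis.

```python
from statistics import mean


def aggregate_at_edge(readings, window=3):
    """Summarize raw sensor readings into per-window averages.

    Stands in for an aggregation point (hypothetical) that reduces
    the volume of data before it is sent back to the core.
    """
    return [mean(readings[i:i + window]) for i in range(0, len(readings), window)]


def analyze_at_core(summaries, threshold):
    """Flag summaries above a threshold (hypothetical near-real-time check)."""
    return [s for s in summaries if s > threshold]


# Hypothetical sample data from a single sensor.
readings = [20, 22, 21, 35, 36, 37, 19, 20, 21]

summaries = aggregate_at_edge(readings)           # edge: reduce the raw stream
alerts = analyze_at_core(summaries, threshold=30) # core: analyze as data arrives
archive = list(summaries)                         # stand-in for longer-term storage

print(summaries, alerts)
```

In a real pipeline each of these steps would run on separate infrastructure and the data could also flow back toward the edge; the sketch only shows the shape of the stages.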

HPE is uniquely positioned to help customers address their challenges of building a data pipeline from the edge to core to cloud.

HPE provides tested solution architectures that are purpose-built or workload-optimized, from the intelligent edge to the core, along with a combination of support services and professional advisory services. With both the products and the expertise, we can help customers build richer data pipelines and accelerate their outcomes based on the next generation of analytics.

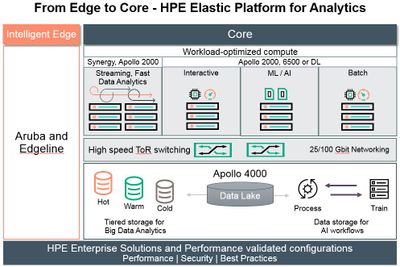

At the heart of the analytic infrastructure is the HPE Elastic Platform for Big Data Analytics (or EPA for short), built primarily on the Apollo platform family, which builds on our innovation in workload-optimized nodes and disaggregates storage and compute within the cluster.

For more info, here’s @patrick_osborne with an overview of the Apollo family and EPA architecture:

For more info on the HPE Elastic Platform for Big Data Analytics, visit hpe.com/info/bigdata-ra
