High-performance, low-latency networks for edge and co-location data centers

Location, location, location!

Location matters when it comes to where data is generated. It matters where data is processed and, depending on the type of data, how close that processing sits to the source. Location is also a significant factor in where data is stored for post-processing and archiving.

Data is at the epicenter of Digital Transformation, and considered to be a highly valuable asset. Because of this, interaction with the data is becoming increasingly important for enterprises. Unlocking actionable insights from the data is considered crucial to improving customer experience, driving more innovation and competitive advantage. It also means that an organization can achieve more speed and agility by processing that data closer to where it’s generated.

Edge computing allows data from any number of devices to be analyzed at the edge before being sent to the data center. Using intelligent edge technology can help maximize a business’s efficiency. For example, instead of sending data to a central data center, analysis is performed at the location where the data is generated. Micro data centers at the edge – integrated with storage, compute and networking – deliver the speed and agility needed for processing the data closer to where it’s created.

According to Gartner, the vast majority of data will be processed outside traditional centralized data centers by 2025. Also, with the rapid growth of IoT, IDC forecasts that more than 150 billion devices will be connected across the globe by 2025, most of which will be creating data in real time. At the same time, nearly 30 percent of all data is forecast to be real-time data by 2025.

Now, with the availability of faster servers and storage systems connected with high speed Ethernet networking, intelligent processing of this data can be more efficiently done at the edge. This is where artificial intelligence applied at the edge – enabled by high speed networking – can transform how we collect, transport, process and analyze data with greater speed and agility.

As data becomes more distributed, with billions of devices at the edge generating data that requires real-time processing and analysis, it is imperative to draw actionable insights. This has created a paradigm shift, from collect-transport-store-analyze to collect-analyze-transport-store, all happening in real time.

Intelligent edge can enable the processing power closer to where the data is being created. It can further solve the latency challenge for real time applications. Streaming analytics can provide the required intelligence needed at the edge, where data can be cleansed, normalized and streamlined before being transported to the central data center or cloud for storage, post-processing, analytics and archiving.
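To make the cleanse, normalize and streamline steps concrete, here is a minimal sketch of what an edge node might do before forwarding anything upstream. It is only an illustration: the record layout (`sensor`, `temp_c`), the plausibility range, and all function names are assumptions made for this example, not part of any HPE product or API.

```python
from statistics import mean

# Hypothetical plausibility window for a temperature sensor (assumption).
MIN_TEMP_C, MAX_TEMP_C = -50.0, 150.0


def cleanse(readings):
    """Drop readings with missing or physically implausible values."""
    return [
        r for r in readings
        if r.get("temp_c") is not None and MIN_TEMP_C <= r["temp_c"] <= MAX_TEMP_C
    ]


def normalize(readings):
    """Emit a consistent schema with values rounded to one decimal place."""
    return [
        {"sensor": r["sensor"], "temp_c": round(float(r["temp_c"]), 1)}
        for r in readings
    ]


def summarize(readings):
    """Collapse raw readings into one small summary record per sensor."""
    by_sensor = {}
    for r in readings:
        by_sensor.setdefault(r["sensor"], []).append(r["temp_c"])
    return {
        sensor: {
            "count": len(values),
            "min": min(values),
            "max": max(values),
            "mean": round(mean(values), 2),
        }
        for sensor, values in sorted(by_sensor.items())
    }


def edge_pipeline(readings):
    """Cleanse, normalize, then summarize before sending data upstream."""
    return summarize(normalize(cleanse(readings)))
```

In this sketch only the per-sensor summaries leave the edge site, so the volume sent to the central data center or cloud depends on the number of sensors rather than on the number of raw readings.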

Once data is sent to the central data center from multiple edge locations, it can be further combined and correlated for trend analysis, anomaly detection, projections, predictions, and insights – using machine learning and artificial intelligence.

Edge data centers will generally be placed near the end devices they are networked to. These data centers will have a much smaller footprint, while having the same components as a traditional data center. Edge data centers will typically house mission-critical data that needs low latency. An edge data center may be one in a network of other edge data centers, or be connected to a larger, central data center.
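A rough back-of-the-envelope calculation shows why proximity matters for latency. The sketch below assumes light travels about 200 km per millisecond in optical fiber (roughly two-thirds of its speed in a vacuum) and counts propagation delay only; switching, queuing and processing time come on top. The distances in the usage note are hypothetical.

```python
# Approximation: light covers about 200 km per millisecond in optical fiber.
FIBER_KM_PER_MS = 200.0


def round_trip_ms(distance_km):
    """Propagation-only round-trip time over fiber, in milliseconds."""
    return 2 * distance_km / FIBER_KM_PER_MS
```

Under these assumptions, `round_trip_ms(1000)` for a central data center 1,000 km away gives about 10 ms, while `round_trip_ms(10)` for an edge site 10 km away gives about 0.1 ms.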

Let’s take a look at some of the top use-cases for intelligent edge data centers.

Telecom: With increased deployment for 5G across the globe, edge data centers can help provide low latency by providing better proximity to connected mobile devices and sensors. The intelligent edge provides superior quality of experience (QoE) to subscribers with edge processing. Without edge computing, 5G is simply a fast network technology. 5G was designed to carry massive amounts of data while providing low latency, high reliability, and immense speed or bandwidth.

Internet of Things (IoT): With billions of devices and sensors generating data at an unprecedented speed, there is strong demand for processing data at the edge, in real time, instead of sending it to a centralized data center, due to latency constraints.

The Fourth Industrial Revolution: The fourth industrial revolution is taking advantage of advances in artificial intelligence, robotics, and the internet of things (IoT), providing increased automation at scale for traditional manufacturing and factories. It is opening doors to preventive maintenance with increased use of smart, low-cost connected sensors. Edge data centers can provide the low-latency connectivity needed for processing data closer to where it’s created.

Healthcare: Today – and into the future – telemedicine, smart wearables, and robotic surgeries will create an increased need and dependency on the low latency links that provide actionable insights in real time.

Autonomous vehicles: Edge data centers are better suited to applications that rely on quick response times. Autonomous vehicles will require computation at the edge in order to reduce the amount of data that needs to be transmitted and to minimize latency.

Artificial Intelligence: AI applied at the edge has no limits when it comes to potential use cases. Combining computation at the edge with AI can provide more and differentiated actionable insights, requiring faster network response times.

Edge computing brings multiple benefits. It can significantly reduce the load on the network by reducing the data that needs to be transported to the central data center. It can significantly improve the reliability of the network by distributing and load balancing between edge and central data center location(s). It can improve the customer experience for latency sensitive applications, and reduce total cost of ownership (TCO) by optimizing the infrastructure hosted at central location with lower cost edge infrastructure.

Data center co-location

Let’s start with a brief definition of data center co-location. Co-location is the practice of housing privately owned server, storage and networking equipment in a third-party data center. According to the July 2021 report published by Allied Market Research, the global data center co-location market generated $46.08 billion in 2020.

As data continues its explosive growth – with a need to draw actionable insights in real time – the data center market continues to evolve to provide the required infrastructure for increased real-time processing. Co-location data centers will continue to provide an option for the on-demand availability of such infrastructure.

Some of the top drivers for data center co-location are:

- High ownership costs associated with private data centers

- Enormous growth in data generation, requiring increased real-time processing closer to edge

- Cloud on-ramp adoption

- Disaster mitigation

Adoption rates for co-location data centers have accelerated in these verticals:

- Retail

- BFSI

- IT & Telecom

- Healthcare

- Media & Entertainment

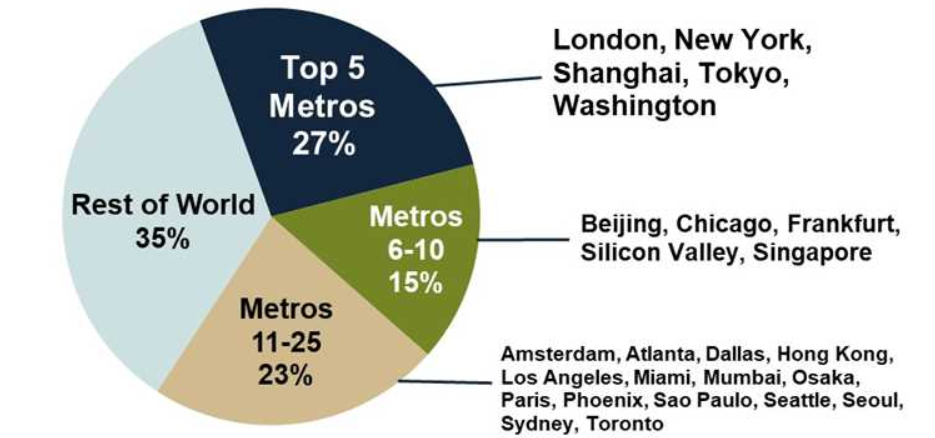

General IT requirements and the telecom vertical generate the highest revenue in the data center co-location industry. At the same time, cloud providers continue to rely on co-location data centers to help better serve major clients in key cities. Data from a September 2020 report from Synergy Research Group shows that just 25 metro areas account for 65% of worldwide retail and wholesale co-location revenues.

A wholesale data center is where a single customer rents a facility owned by a third party, whereas a retail co-location facility is where multiple customers are housed in a shared space. Enterprises co-locate inside multi-tenant data centers for:

- Disaster recovery or interconnection to telcos and other service providers. This approach provides a geographical alternative for disaster recovery.

- Simplified and secure hybrid cloud architecture. A simplified hybrid cloud architecture helps to relieve enterprise IT of the physical burdens of running and managing their own infrastructure. This approach provides power, cooling, failover – and even raw space – with room for expansion. This also provides a highly resilient space with power, based on an operational expense model for consumption.

- Cloud on-ramps are a cross-connect away. A wholesale data center is home to most of the cloud on-ramps used for direct connectivity to a distributed multi-cloud infrastructure.

- Co-location can also ease the complexity of networking by providing high-speed, low-latency connectivity options.

The significance of high-performance networking for co-location data centers

Without leveraging networking capabilities, co-location data centers are little more than isolated islands. Significant transformational value is derived by:

- Networking within the co-location center

- Networking between the co-location centers

- Providing networking access to the co-location centers.

Edge computing coupled with artificial intelligence and machine learning requires far-reaching computational capabilities from servers and the network. Today’s fast multi-core processors, with GPU offload, provide a solution by accelerating computational processing. Consequently, all of this increased traffic places a huge demand on the network infrastructure.

Let’s take a moment to walk through a checklist of the needs of high performance networking infrastructure in data centers for the intelligent edge.

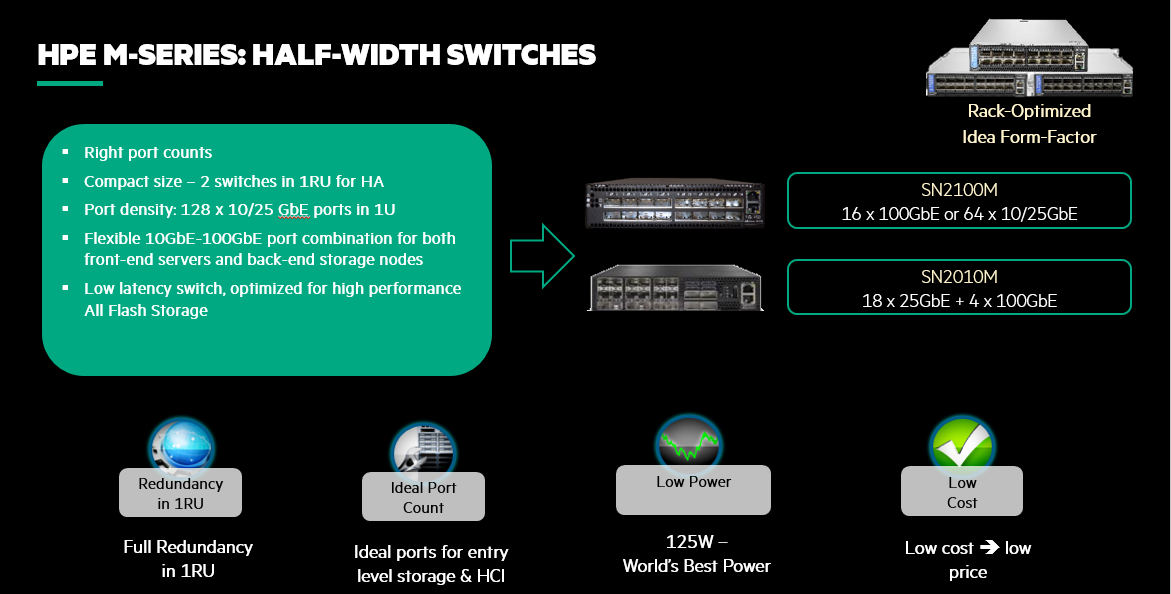

- Form-factor: Design must provide flexibility to fit into compact edge racks. Half-width form-factor with 1U height can provide full-redundancy in 1U.

- Ports-on-demand: Enable pay-as-you-grow with fewer unused ports. This also fits into green data center initiatives.

- High bandwidth: 10/25/100/200GbE and upcoming 400GbE speeds.

- ZERO packet loss: Ensures reliable and predictable performance.

- Highest efficiency: Microburst absorption must be available, as well as built-in accelerators and offloads for storage, virtualized, and containerized environments.

- Ultra-low latency: True cut-through switching is necessary to keep latency to a minimum.

- Low power consumption: Improves operational efficiency.

- Dynamically shared, flexible buffering: This approach provides the flexibility to dynamically adapt and absorb micro-bursts and avoid network congestion.

- Advanced load balancing: Improves scale and availability.

- Predictable performance: High Tb/s switching capacity offers wire-speed performance.

- Low cost with superior value.
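The dynamically shared buffering item in the checklist above can be illustrated with a deliberately simplified model. The sketch compares a buffer split into fixed per-port partitions with a single shared pool when a microburst lands mostly on one port. The function name, packet counts and buffer size are hypothetical; real switches use more refined per-queue thresholds, and this does not describe any specific switch ASIC.

```python
def dropped_packets(bursts, total_buffer, shared):
    """Count packets dropped when `bursts[i]` packets queue on port i at once.

    shared=True  : all ports draw from one pool of `total_buffer` packets.
    shared=False : each port owns a fixed slice of total_buffer // number of ports.
    """
    if shared:
        # Drops occur only if the combined burst exceeds the whole pool.
        return max(0, sum(bursts) - total_buffer)
    per_port = total_buffer // len(bursts)
    # Each port drops whatever exceeds its own fixed slice.
    return sum(max(0, burst - per_port) for burst in bursts)
```

With a hypothetical 1,000-packet buffer across four ports and bursts of `[900, 50, 50, 0]`, the static split drops 650 packets on the busy port while the shared pool drops none, which is the intuition behind absorbing microbursts with shared buffering.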

HPE M-series delivers the ideal networking platform for intelligent edge and co-location

The unique half-width switch configuration and break-out (splitter) cables offered by the M-series further extend its feature set and functionality. Breakout cables enable a single 200GbE port to be split into two 100GbE links, or any 100GbE port on a switch into four 25GbE breakout ports, linking one switch port to up to four adapter cards in servers, storage or other subsystems. At a reach of 3 meters, they accommodate connections within any rack. Combine this with half-width form factors and you have the ideal combination of performance, rack efficiency, and flexibility for today’s storage, hyperconverged, and machine learning environments, as well as processing at the edge.
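The breakout arithmetic can be sketched in a few lines. The two split ratios come from the paragraph above; the function name and the port count used in the usage note are hypothetical and do not refer to a particular switch model.

```python
# Split ratios taken from the text: port speed -> (links per port, speed per link).
BREAKOUTS = {
    200: (2, 100),  # one 200GbE port -> two 100GbE links
    100: (4, 25),   # one 100GbE port -> four 25GbE links
}


def breakout_links(port_speed_gbe, port_count):
    """Return how many links, and at what speed, `port_count` ports yield."""
    links_per_port, link_speed = BREAKOUTS[port_speed_gbe]
    links = links_per_port * port_count
    return {
        "links": links,
        "speed_gbe": link_speed,
        "aggregate_gbe": links * link_speed,
    }
```

For example, `breakout_links(100, 16)` for a hypothetical 16-port 100GbE switch yields 64 links at 25GbE each, with the aggregate bandwidth unchanged at 1,600 Gb/s.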

Check out my companion blog, Need for speed and efficiency from high performance networks for Artificial Intelligence!

Follow Faisal on Twitter @ffhanif.

Storage Experts

Hewlett Packard Enterprise

twitter.com/HPE_Storage

linkedin.com/showcase/hpestorage/

hpe.com/storage
