Tackling data management for containers

Take a deep dive into tackling data management for containers, with the right strategies and solutions to address developer needs, operations requirements, and hybrid cloud workload mobility criteria.

In these competitive industry environments, organizations are rapidly adopting containers to power modern web-scale or cloud-native applications that are inherently distributed in nature, built to scale, and resilient to market changes.

Containers provide the necessary abstraction to package applications and their dependencies, which drives consistent application deployment across any environment. Organizations building highly automated CI/CD pipelines very commonly use containers to reduce friction in the application development and deployment cycle, so that applications can iterate rapidly through feedback loops.

Because containers enable application portability through packaging, the notion of “develop once, deploy anywhere” is fast becoming a reality for companies that want to deploy and consume applications in a hybrid-cloud, multi-cloud delivery model.

Decoupling development and operations

Typically, Operations (Ops) teams are responsible for infrastructure (compute, network, storage, etc.) as well as system resources (file system, runtime libraries, OS versions, etc.). Containers shift the responsibility for managing system resources to application development (Dev) teams, removing the overhead (and potential conflicts) from Ops teams.

In addition, container orchestration tools add another layer of abstraction that controls the configuration of infrastructure elements (CPU, storage, memory, etc.) at run time defined by the application developers. All of this means that containers redraw some of the traditional dependency lines that existed between Dev and Ops teams—a key step in instilling DevOps practice throughout the organization.

Storage persistence and data management challenges

Containers are lightweight and start rapidly, which makes them a perfect vehicle for deploying web-scale, ephemeral, stateless applications.

Storage persistence and data management for containers are often rated among the top challenges for enterprises. The Docker volume plug-in and the persistent volume framework of orchestrators such as Kubernetes enable external volumes to be used for storing and accessing data.

While taking advantage of containerized architectures, enterprises often find themselves balancing the needs of developers while simultaneously working out how to operationalize containers at scale, both on-premises and across clouds.

Some of the challenges to consider are:

Developer needs:

- Self-service and instant access to storage infrastructure in order for them to rapidly build, test, and iterate applications

- Quick access to production grade data sets for rapid troubleshooting rather than using synthetic data

- Ability to provision and tear down infrastructure when the pipeline job completes without interrupting Ops

Operations requirements:

- Simple tools to deploy and integrate storage with containerized workloads and orchestrators

- Deploying stateful containerized workloads without compromising any of the operational processes or resiliency, protection, or scaling requirements of applications

- Host containerized workloads alongside existing VM or bare metal apps without adding storage silos

Multi-cloud/hybrid cloud workload mobility criteria:

- Seamless bidirectional mobility of stateful containers: data has inertia and gravity, and overcoming those is key to unlocking the portability of containers both on- and off-premises

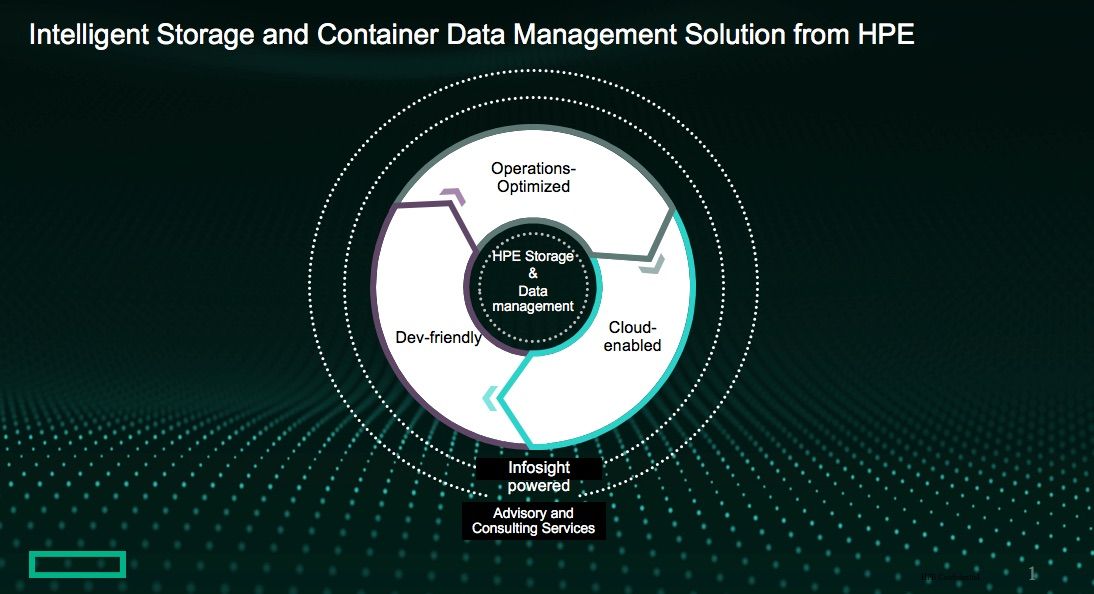

Intelligent storage and data management for containers

Three big attributes of storage and data management solutions that are key to successful adoption of containerized workloads are:

- (Must be) Developer-friendly experience

- (Is) Optimized for Operations

- (Should) Enable true hybrid cloud via bidirectional workload and data mobility

Let’s look closer at how we do this.

Developer-friendly experience

Self-service access to storage volumes:

Developers can instantly provision storage using the Kubernetes construct of “storageclass” which allows for dynamic provisioning of volumes on storage arrays (HPE 3PAR Storage, HPE Nimble Storage, and soon on HPE Primera).

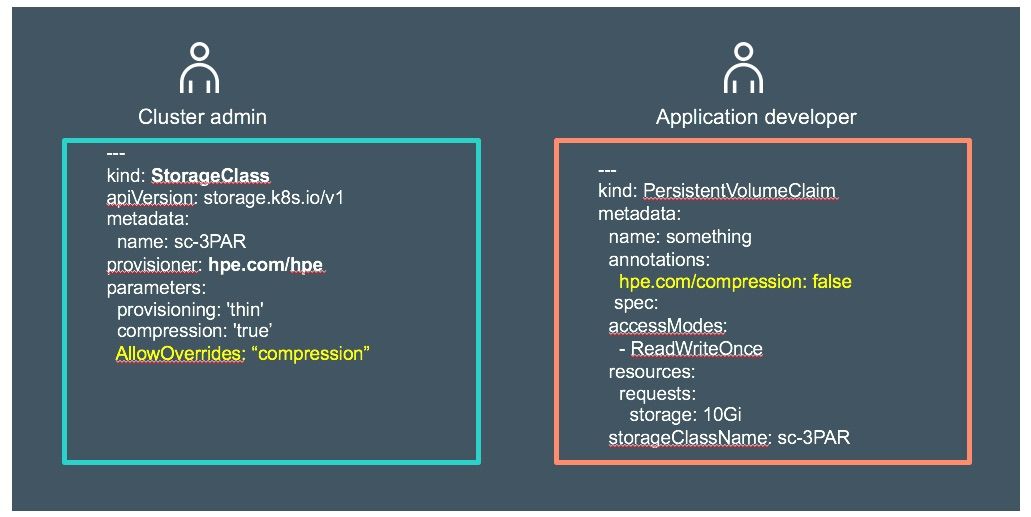

In the example below, when defining “storageclass,” the cluster admin can specify the dynamic provisioner to use for just-in-time provisioning of volumes. This example uses “hpe.com/hpe” which is the 3PAR dynamic provisioner.

In addition, the cluster admin can choose which parameters in the template the application developer is allowed to override to suit application needs. Here, StorageClass sc-3PAR enables compression by default, but the admin has left it flexible enough for the application developer to turn it off.

This is a great example of how a cluster/storage admin can enforce governance controls while still empowering developers for self-service consumption of storage resources.
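The pattern described above can be sketched as a manifest. The snippet below is a minimal illustration, not the plug-in's documented schema: it builds a StorageClass as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The provisioner `hpe.com/hpe` comes from the text, and the class name is written in lowercase (`sc-3par`) because Kubernetes object names must be lowercase. The parameter keys `compression` and `allowOverrides` are assumptions made for illustration, so check the plug-in documentation for the exact names.

```python
import json


def build_storage_class(name, provisioner, parameters):
    """Return a Kubernetes StorageClass manifest as a plain dictionary."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        # StorageClass parameter values are always strings.
        "parameters": {key: str(value) for key, value in parameters.items()},
    }


storage_class = build_storage_class(
    name="sc-3par",
    provisioner="hpe.com/hpe",  # dynamic provisioner named in the text
    parameters={
        "compression": "true",            # assumed key: compression on by default
        "allowOverrides": "compression",  # hypothetical key: what developers may override
    },
)

print(json.dumps(storage_class, indent=2))
```

Keeping the defaults and the list of overridable settings in one object is what lets the admin hold governance in a single place while developers only reference the class by name.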

Here is a complete set of storage parameters supported on 3PAR for block volumes.

Snapshots or clones of production data sets:

Developer productivity, code quality, and mean time to troubleshoot all improve dramatically when the application team has access to fresh, production-quality data sets for development, QA, or issue resolution. Similar to the example above, developers can create snapshots (or clones) of production data sets on demand using StorageClass parameters (virtualCopyOf for snapshots, CloneOf for physical copies) on 3PAR (or Nimble) and attach those copies to a CI pipeline to test code against more realistic data sets (for simplicity, we are omitting the steps for scrubbing sensitive data).

Upon completion of pipeline execution, snapshots can be set to auto-delete (upon container removal) for cleanup.

The key here is empowering developers with access to storage data services without impacting Ops.
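As a rough sketch of the copy workflow above, the helper below picks the StorageClass parameter for a snapshot or a clone. The keys `virtualCopyOf` and `CloneOf` are spelled as they appear in the text, so verify the exact casing against the plug-in documentation. The volume name `prod-db-vol` is hypothetical.

```python
def copy_parameters(source_volume, copy_type):
    """Return StorageClass parameters that request a copy of source_volume."""
    # Parameter keys as named in the text: snapshots vs. physical copies.
    keys = {"snapshot": "virtualCopyOf", "clone": "CloneOf"}
    if copy_type not in keys:
        raise ValueError(f"copy_type must be one of {sorted(keys)}")
    return {keys[copy_type]: source_volume}


# "prod-db-vol" is a made-up name for an existing production volume.
snapshot_params = copy_parameters("prod-db-vol", "snapshot")
clone_params = copy_parameters("prod-db-vol", "clone")

print(snapshot_params)
print(clone_params)
```

The returned dictionary would be merged into the `parameters` section of a StorageClass that the CI pipeline references when it requests a volume.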

Operations-optimized container data management

Ops priorities are simple: keep the business and applications running uninterrupted at performance levels that meet Service Level Objectives.

That means protection, resiliency, scale, and performance are paramount when deploying apps, and containerized workloads are no exception.

Here’s how we enable it:

- Automated installation in under 5 minutes: the entire install and configuration process of the 3PAR Container provider is automated using an Ansible playbook. Within minutes, storage data services from 3PAR can be consumed by containerized apps. That is Day 0 simplicity for you.

- Enable snapshots (one time or at regular intervals) or clones as an entry in the Kubernetes StorageClass, so volumes are protected at all times.

- Enable any volume to be replicated asynchronously or synchronously (with automated failover, aka peer persistence). Simply define replication groups on 3PAR and specify them in the StorageClass for volumes to be automatically replicated upon creation. (See this example for more info.)

- Specify per-volume QoS to deliver the right level of performance to containerized apps consuming storage.

- UID/GID-based controls (specified in the StorageClass) to prevent unauthorized access to data volumes.

- Deploy Kubernetes clusters on separate 3PAR Virtual domains for isolation of data sets for multi-tenancy.

- Scale to hundreds of pods per worker node.

- Deploy volumes across multiple 3PAR systems.

In addition, the 3PAR Container provider delivers both file and block access. You can enable the ReadWriteOnce (RWO) access mode for block volumes and ReadWriteMany (RWX) for applications that require concurrent access to data volumes across the cluster.

Block volumes can be accessed via FC or iSCSI, depending on your environment.
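These access modes map onto the `accessModes` field of a Kubernetes PersistentVolumeClaim, where they are spelled `ReadWriteOnce` and `ReadWriteMany`. As a minimal sketch, the snippet below builds one claim for each case and prints it as JSON. The claim names, sizes, and the StorageClass names `sc-3par` and `sc-3par-file` are made up for illustration.

```python
import json

# Access mode per volume type, following the text:
# block volumes are single-writer, file shares allow concurrent access.
ACCESS_MODES = {"block": "ReadWriteOnce", "file": "ReadWriteMany"}


def build_pvc(name, storage_class, size, volume_type):
    """Return a PersistentVolumeClaim manifest as a plain dictionary."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "storageClassName": storage_class,
            "accessModes": [ACCESS_MODES[volume_type]],
            "resources": {"requests": {"storage": size}},
        },
    }


# All names and sizes below are hypothetical.
block_claim = build_pvc("db-data", "sc-3par", "20Gi", "block")
file_claim = build_pvc("shared-assets", "sc-3par-file", "50Gi", "file")

for claim in (block_claim, file_claim):
    print(json.dumps(claim, indent=2))
```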

Here is a complete set of file mode operations on 3PAR supported in Container environments.

Multi-cloud data mobility

Finally, for stateful containers to be truly portable, two things are absolutely critical:

- Data mobility between on-premises storage and cloud storage

- Consistent data services for applications regardless of location

Today, we enable a true hybrid cloud solution for containerized workloads across many of our storage portfolio products, including HPE Nimble Storage, HPE Nimble dHCI, and HPE Cloud Volumes. Container data volumes from on-premises storage arrays can be replicated (bidirectionally) to HPE Cloud Volumes.

Since HPE Cloud Volumes (HPE CV) sits at the edge of public cloud, you do not incur either egress or data transfer (bandwidth) charges when your application moves to or from the cloud or between clouds. This enables many use cases such as cloud-based disaster recovery, data analytics, application migrations or Hybrid CI/CD (develop in cloud, deploy on-prem, or vice versa).

Plus, you get to enjoy all of the same data services (snapshots, replication, QoS, performance, scale, etc.) in the cloud that you have come to enjoy on-premises. In other words, a StorageClass, once defined, can be used either on-premises or off-premises with the same consistency of data services.

Recently, we deepened our partnership with Google to deliver true hybrid cloud solutions for customers.

HPE InfoSight intelligent data platform

Finally, all of our storage platforms are powered by HPE InfoSight predictive analytics, which identifies issues proactively and supports their resolution before they occur. Every second, HPE InfoSight analyzes and correlates data from millions of sensors across all of our globally deployed systems. HPE InfoSight continuously learns as it analyzes this data, making every system smarter and more reliable.

HPE Pointnext advisory and consulting services

If you need help figuring out where to start your container journey, whether that means modernizing or refactoring existing apps or developing cloud-native apps, our experts at HPE Pointnext can guide you all the way from determining “Viability” to “Integration” and “Operationalizing” your containerized workloads both on-premises and off-premises.

Oh, did I say that the 3PAR Container provider is available to all customers at no charge?

Download the latest plugin from Docker Hub.

If you have made it this far, congratulations! If you cannot wait to get started, head over to the GitHub page. While you are at it, refer to the examples we have provided on the usage of the 3PAR Container provider (aka 3PAR Volume plug-in for Docker).

Hit us up with your comments. Share the blog if you found this to be useful.

Follow on Twitter: @srikseshu

Connect on LinkedIn: https://www.linkedin.com/in/srikanthvenkataseshu/

Storage Experts

Hewlett Packard Enterprise

twitter.com/HPE_Storage

linkedin.com/showcase/hpestorage/

hpe.com/storage
