To the edge and back again: Meeting the challenges of edge computing

To better address the challenges of edge systems, it’s key to understand what happens at the edge, at the core, and in between.

Some people think of edge computing as little more than glorified data acquisition or local digital process control. Yet edge is much more than either of those.

Edge involves many data sources, usually at geo-distributed locations. But remember, it’s the aggregate of that data that holds the key to value and insights. Analysis of that data occurs at core data centers, and actions guided by the resulting insights often need to be taken at edge locations. That means a surprising challenge of edge systems is moving traffic efficiently not only from edge to core but also back again.

The scale at which all this happens is also an issue. The data arriving at each edge source is often large, and when many edge locations each produce that much data, the total can be truly enormous.

A classic example of extreme scale in edge computing is autonomous car development. Car manufacturers need access to global data, working with many petabytes of data per day. They must also meet critical key performance indicators (KPIs), including how long it takes to collect data from test cars, how long to process it, and how long to deliver insights.
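The three KPIs named above can be expressed as durations between timestamps. The following is a minimal sketch, assuming each batch of test-car data records four hypothetical timestamps (captured, available at the core, processed, insights delivered); the field and function names are illustrative and not taken from any real pipeline.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class BatchTimeline:
    # Hypothetical timestamps recorded for one batch of test-car data.
    captured: datetime   # data recorded on the test car
    ingested: datetime   # data available at the core
    processed: datetime  # processing finished
    delivered: datetime  # insights delivered to engineers


def kpis(t: BatchTimeline) -> dict[str, timedelta]:
    """Return the collect, process, and deliver durations plus the end-to-end total."""
    return {
        "collect": t.ingested - t.captured,
        "process": t.processed - t.ingested,
        "deliver": t.delivered - t.processed,
        "time_to_insight": t.delivered - t.captured,
    }
```

Tracking the end-to-end `time_to_insight` alongside its three parts makes it easier to see which stage is the bottleneck for a given batch.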

Of course, not all edge systems involve this extreme scale of data, but most edge situations involve too much data to transfer it all from edge to core. This means the data must be processed and reduced at the edge before sending it to the core. This type of data analysis, modeling, and data movement must be efficiently coordinated at scale.
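A back-of-the-envelope calculation shows why reduction at the edge is usually unavoidable. This sketch uses hypothetical inputs (per-site ingest rate, number of sites, uplink bandwidth) to compute the aggregate raw volume per day and the minimum factor by which each site must shrink its data before it fits through its link to the core.

```python
SECONDS_PER_DAY = 86_400


def daily_volume_tb(ingest_gb_per_hour: float, sites: int) -> float:
    """Raw data produced per day across all edge sites, in TB (1 TB = 1,000 GB)."""
    return ingest_gb_per_hour * 24 * sites / 1_000


def min_reduction_factor(ingest_gb_per_hour: float, uplink_mbit_per_s: float) -> float:
    """How many times smaller one site's data must get to fit through its uplink.

    Optimistically assumes the uplink runs at full rate around the clock;
    returns 1.0 when no reduction is needed.
    """
    ingest_gb_per_day = ingest_gb_per_hour * 24
    # Mbit/s -> MB/s (divide by 8) -> GB/s (divide by 1,000) -> GB/day.
    uplink_gb_per_day = uplink_mbit_per_s / 8 / 1_000 * SECONDS_PER_DAY
    return max(1.0, ingest_gb_per_day / uplink_gb_per_day)
```

With illustrative numbers of 500 GB/hour per site across 200 sites and a 100 Mbit/s uplink, `daily_volume_tb` gives 2,400 TB (2.4 PB) per day and `min_reduction_factor` gives roughly 11, before any allowance for sharing the link with other traffic.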

To better understand the challenges of edge systems, let’s dig into what happens at the edge, at the core, and in between.

Living on the edge

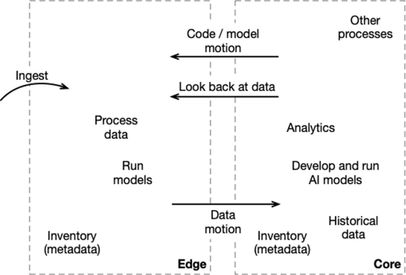

Edge computing generally involves systems in multiple locations, each ingesting data, storing it temporarily, and running multiple applications that reduce the data before it is transported to core data centers. I illustrate these tasks in the left half of Figure 1.

Figure 1. Graphical inventory of what edge and core need to do and the complex interaction between them.

Analytics applications are used for pre-processing and data reduction. AI and machine learning models are also employed for data reduction, for example by deciding which data is important enough to convey to core data centers. In addition, models allow intelligent action to take place at the edge. Another typical edge requirement is to keep an inventory of what steps took place and what data files were created.
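Model-driven reduction and the inventory requirement fit together naturally: each reduction step can emit a record of what it did. The sketch below assumes a hypothetical record format and uses a simple threshold rule as a stand-in for a trained model; the names `reduce_and_inventory` and `anomaly_score_model` are illustrative only.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Callable, Iterable


def reduce_and_inventory(
    records: Iterable[dict],
    is_important: Callable[[dict], bool],
    output_name: str,
    step: str,
) -> tuple[list[dict], dict]:
    """Keep only records flagged as important and describe what was done.

    Returns the reduced records plus an inventory entry (step, file name,
    counts, checksum, timestamp) that can travel to the core with the data.
    """
    records = list(records)
    kept = [r for r in records if is_important(r)]
    payload = json.dumps(kept, sort_keys=True).encode("utf-8")
    entry = {
        "step": step,
        "output_file": output_name,
        "records_in": len(records),
        "records_out": len(kept),
        "sha256": hashlib.sha256(payload).hexdigest(),
        "created_utc": datetime.now(timezone.utc).isoformat(),
    }
    return kept, entry


def anomaly_score_model(record: dict) -> bool:
    # Stand-in for a trained model: flag readings far from a nominal 20 °C.
    return abs(record["temp_c"] - 20.0) > 5.0
```

Because the checksum is computed over exactly the data that is shipped, the core can later confirm that what arrived matches what the edge step produced.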

All this must happen at many locations, none of which will have much on-site administration, so edge hardware and software must be reliable and remotely managed. Given these needs, self-healing software is a huge advantage.
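In its simplest form, "self-healing" means a failed task is restarted automatically instead of waiting for someone on site. The following is a minimal supervisor sketch, assuming a task that may fail transiently; the function name and parameters are hypothetical. In practice an orchestrator such as Kubernetes can restart failed containers at the platform level, so this only illustrates the idea.

```python
import time
from typing import Callable


def supervise(
    task: Callable[[], None],
    max_restarts: int = 5,
    base_delay_s: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Run `task`, restarting it after failures with exponential backoff.

    Returns how many restarts were needed before the task completed.
    Re-raises the last error once `max_restarts` is exhausted, so a remote
    operator can be alerted instead of the loop retrying forever.
    """
    restarts = 0
    while True:
        try:
            task()
            return restarts
        except Exception:
            if restarts >= max_restarts:
                raise
            # Wait 1x, 2x, 4x, ... the base delay between attempts.
            sleep(base_delay_s * (2 ** restarts))
            restarts += 1
```

Injecting `sleep` keeps the backoff logic testable without real delays; the cap on restarts matters at unattended sites, where a permanently broken task should surface as an alert rather than spin silently.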

Training AI models and more at the core

The activities that happen at the core, seen on the right side of Figure 1, resemble edge processes but with a global perspective, using collective data from many edge locations. Here analytics can be more in-depth. This is where deep historical data is used to train AI models. As at edge locations, the core contains an inventory of actions taken and data created. The core is also where the connection is made to the high-level business objectives that ultimately underlie the goals of edge systems.

Data infrastructure at the core must meet challenging requirements because data from all the edge systems converges there. Data from the edge (or data resulting from processing and modeling at the core) can be massive or can consist of a huge number of files. The infrastructure must handle large scale robustly, both in the number of objects and in the quantity of data.

Of course, analysis and model development workflows are iterative. As an organization learns from the global aggregate of edge data, new AI models are produced and existing ones are updated. Teams also develop analytics applications that must be deployed at the edge. That brings us to the next topic: what needs to happen between edge and core.

Traffic moving between edge and core

Just as Figure 1 lists the key activities at the edge or in the core, it also shows the key interaction between the two: the movement of data. Obviously, the system needs to move ingested and reduced data from edge locations to the core for final analysis. Yet, people sometimes overlook an unexpected journey: moving new AI and machine learning models or updated analytics programs developed by teams at the core back to the edge.
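The core-to-edge journey has its own failure modes: an edge site should only switch to a newer model, and only if the artifact arrived intact. This sketch assumes a hypothetical manifest that the core publishes alongside each model (name, version number, SHA-256 checksum); the format and function names are illustrative, not part of any HPE product.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelManifest:
    # Hypothetical metadata published by the core with each model artifact.
    name: str
    version: int
    sha256: str


def select_update(
    current: Optional[ModelManifest], offered: ModelManifest, artifact: bytes
) -> Optional[ModelManifest]:
    """Decide whether an edge site should activate the model offered by the core.

    Returns the manifest to activate, or None to keep the current model.
    Raises ValueError if the artifact does not match its checksum, for
    example because a transfer over an unreliable edge link was truncated.
    """
    if current is not None and (
        offered.name != current.name or offered.version <= current.version
    ):
        return None
    if hashlib.sha256(artifact).hexdigest() != offered.sha256:
        raise ValueError(f"checksum mismatch for {offered.name} v{offered.version}")
    return offered
```

Refusing older or equal versions keeps a delayed or replayed transfer from rolling an edge site back to a model the core has already superseded.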

In addition, analysts, developers, and data scientists sometimes need to inspect raw data at one or more edge locations. Having direct access from the core to raw data at edge locations is very helpful.

Almost all large-scale data motion should go through the data infrastructure, but direct access to services running at the edge or in the core is also valuable. Secure service meshes help here, particularly if they use modern zero-trust workload authentication methods such as SPIFFE.
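With SPIFFE, each workload carries an identity of the form `spiffe://<trust-domain>/<path>`, and services authorize peers by that identity rather than by network location. The sketch below is a simplified reading of the SPIFFE ID format plus an allowlist check; the trust domain and paths are hypothetical. It does not authenticate anything: a real deployment would obtain the peer ID from a cryptographically verified SVID using an official SPIFFE library such as go-spiffe.

```python
import re
from typing import Optional

# Simplified rules based on the SPIFFE ID format: the trust domain uses
# lowercase letters, digits, '.', '-' and '_'; each path segment uses
# letters, digits, '.', '-' and '_'. No port, query, or fragment is allowed.
_TRUST_DOMAIN = re.compile(r"[a-z0-9._-]+")
_SEGMENT = re.compile(r"[A-Za-z0-9._-]+")


def parse_spiffe_id(spiffe_id: str) -> Optional[tuple[str, str]]:
    """Return (trust_domain, path) for a well-formed SPIFFE ID, else None."""
    prefix = "spiffe://"
    if not spiffe_id.startswith(prefix):
        return None
    trust_domain, slash, path = spiffe_id[len(prefix):].partition("/")
    if not _TRUST_DOMAIN.fullmatch(trust_domain):
        return None
    if not slash:
        return trust_domain, ""  # an ID naming only the trust domain
    for segment in path.split("/"):
        # Empty segments (e.g. a trailing '/') and '.' or '..' are rejected.
        if segment in ("", ".", "..") or not _SEGMENT.fullmatch(segment):
            return None
    return trust_domain, "/" + path


def is_authorized(peer_id: str, trust_domain: str, allowed_paths: set[str]) -> bool:
    """Allow a peer only if its (already verified) ID is in our trust domain and allowlist."""
    parsed = parse_spiffe_id(peer_id)
    return parsed is not None and parsed[0] == trust_domain and parsed[1] in allowed_paths
```

For example, an edge ingest service might accept model pushes only from `spiffe://edge.example.org/core/model-publisher`, regardless of which network the request arrives from.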

Now that I’ve identified what happens at the edge, at the core, and in between, let’s look at what the data infrastructure needs to do to make this possible.

HPE Ezmeral Data Fabric: from edge to core and back

HPE is known for its excellent hardware, including the Edgeline series (specifically designed for use at the edge). Yet, HPE also makes the hardware-agnostic HPE Ezmeral Data Fabric software, designed to stretch from edge to core, whether on premises or in the cloud.

HPE Ezmeral Data Fabric lets you simplify system architectures and optimize resource usage and performance. Figure 2 shows how the capabilities of the data fabric are used to meet the challenges of edge computing.

Figure 2: Looking inside to see how HPE Ezmeral Data Fabric supports the actions and processes listed in Figure 1 from edge to core and back again. Triangles represent data fabric volumes, a key tool for data management. Built-in capabilities for fabric-level data motion include mirroring, table replication (shown in figure), and data fabric event stream replication (not shown).
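The value of fabric-level data motion such as mirroring is that only what changed needs to cross the edge-to-core link. The toy sketch below illustrates that general idea by comparing content digests against those recorded at the previous run. It is a conceptual illustration with hypothetical names only; it is not how HPE Ezmeral Data Fabric implements mirroring, and the fabric handles this itself rather than through application code like this.

```python
import hashlib


def plan_mirror(
    source: dict[str, bytes], last_digests: dict[str, str]
) -> tuple[list[str], list[str], dict[str, str]]:
    """Work out what an incremental mirror run must transfer.

    `source` models a volume as {path: contents}; `last_digests` holds the
    SHA-256 digests recorded at the previous run. Returns the paths to copy,
    the paths to delete at the destination, and the digests to record once
    the transfer succeeds.
    """
    current = {path: hashlib.sha256(data).hexdigest() for path, data in source.items()}
    to_copy = sorted(path for path, d in current.items() if last_digests.get(path) != d)
    to_delete = sorted(path for path in last_digests if path not in current)
    return to_copy, to_delete, current
```

Recording digests only after a successful transfer means an interrupted run is simply retried in full on the next pass, rather than leaving the mirror believing it is current.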

Computation can be managed at edge or core using Kubernetes to manage containerized applications. HPE Ezmeral Data Fabric provides the data layer for such applications. And thanks to a global namespace for HPE Ezmeral Data Fabric, teams working at the data center can remotely access data that is still at the edge.

Learn more

To learn more about how customers are dealing with edge situations, listen to the recorded webinar “Stretching HPE Ezmeral Data Fabric from Edge-to-Cloud” with Jimmy Bates, Chad Smykay, and Fabian Wilckens. For practical tips on IoT and edge systems, watch the video “Solving Large Scale IoT Problems” with Ted Dunning. Additional information about data fabric can be found in the technical report “HPE Ezmeral Data Fabric: Modern infrastructure for data storage and management”.

Ellen Friedman

Hewlett Packard Enterprise


Ellen Friedman is a principal technologist at HPE focused on large-scale data analytics and machine learning. Ellen worked at MapR Technologies for seven years prior to her current role at HPE. She was a committer for the Apache Drill and Apache Mahout open source projects. She is a co-author of multiple books published by O’Reilly Media, including AI & Analytics in Production, Machine Learning Logistics, and the Practical Machine Learning series.
