Data Protection for Containers: Part II, Restore

In the first part of our "Data Protection for Containers" series we discussed what you need to do from a container orchestrator perspective to ensure persistent volumes are protected by snapshots and properly backed up with replication. In this follow-up tutorial we’ll discuss how to recover and restore your data in the event of a lost/damaged file, corrupt database or even a ransomware attack.

Let’s ask Google what defines "data restore":

The examples used here are accessible from GitHub. All specification references rely on HPE Nimble Storage Linux Toolkit 2.4.1, NimbleOS 5.0.5 and Red Hat OpenShift 3.10 installed according to our best practices found in the HPE Nimble Storage Integration Guide for Red Hat OpenShift and OpenShift Origin. Storage classes are manipulated by a Cluster Administrator and everything else in this tutorial is run in a new blank project within OpenShift as a regular user.

It’s advisable to at least glance over the concepts described in part I to understand the roles and concepts we’re building these recovery scenarios from.

Start by checking out the container examples repo:

$ git clone https://github.com/NimbleStorage/container-examples

$ cd container-examples/blogs/data-protection-part-II

Understanding the HPE Nimble Kube Storage Controller

Quite a few new parameters have been introduced in version 2.4.1 when it comes to empowering Application Owners. The Cluster Administrator can now define a list of parameters that Application Owners may override, using the allowOverrides parameter in the StorageClass. Most likely these overrides are cherry-picked in consultation with the Storage Architect to grant just enough permissions to perform the needed functions for a particular use case. The Application Owner may then annotate their Persistent Volume Claims (PVCs) to override a particular parameter. This security model aligns well with OpenShift, where Cluster Administrators may delegate control of a particular resource to an Application Owner.

A very good example of using allowOverrides is to allow Application Owners to specify a custom Performance Policy. This is normally a detail of the deployment known only to the Application Owner, and it also helps the Cluster Administrator keep the number of storage classes down to keep things neat and tidy. Imagine having a StorageClass for every Performance Policy!

Reading tips: The examples below use # for the system:admin role and $ for the user role. No YAML is displayed in this post to help ease reading. Please click the .yaml links to display the raw file in a new window.

This is what such a StorageClass would look like (description thrown in to show how multiple overrides work):

# oc create -f sc-override.yaml

storageclass.storage.k8s.io "override" created

An Application Owner may then create the following PVC to override the Performance Policy (we’ll also create a new project for this tutorial):

$ oc new-project wp

$ oc create -f pvc-override.yaml

persistentvolumeclaim "override" created

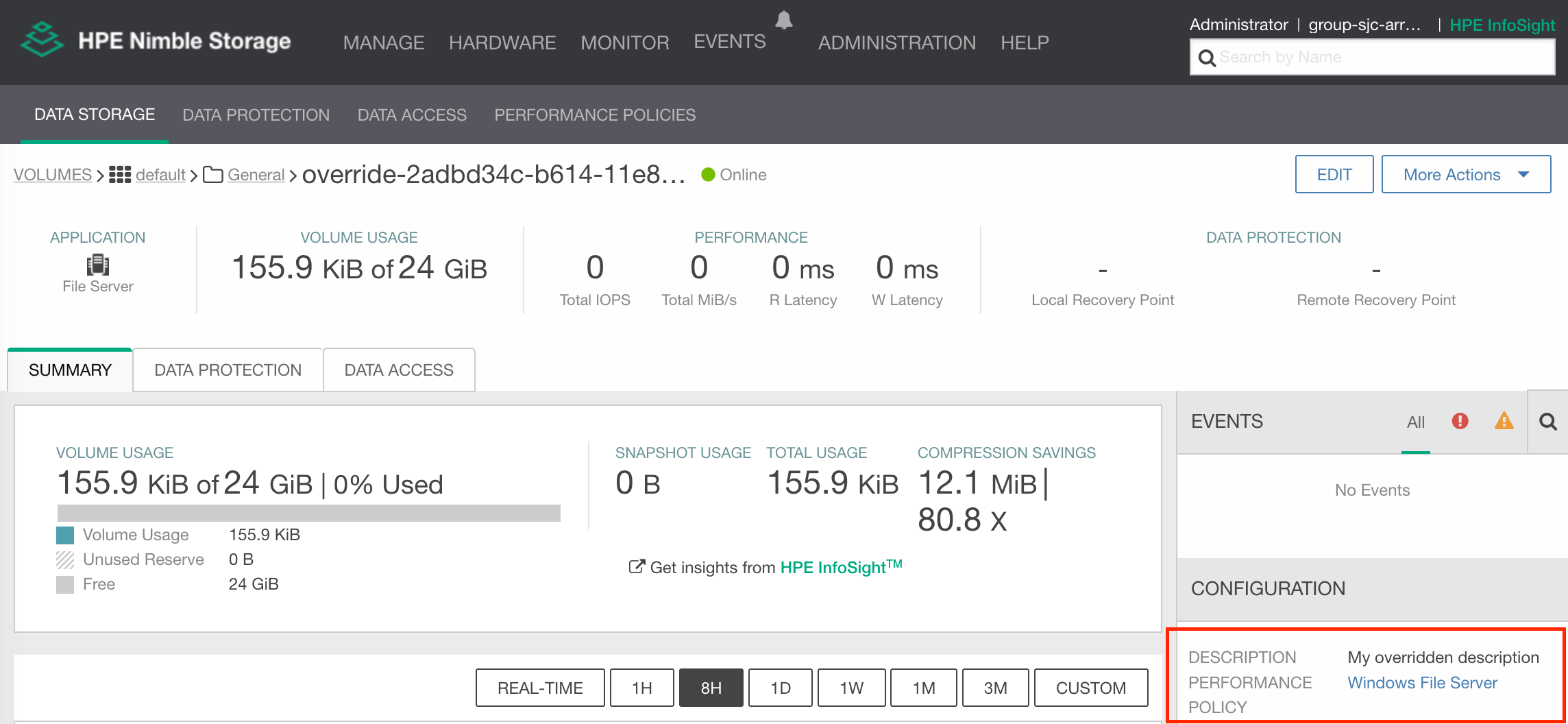

Now, creating the PVC and later inspecting the volume from either CLI or UI, we can clearly see that the Performance Policy and description have been overridden from the defaults defined in the StorageClass we called.
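Since the post links out to the YAML rather than showing it, here is a rough sketch of what the two objects could look like, wrapped in shell functions so they can be piped straight into oc. This is not the content of sc-override.yaml or pvc-override.yaml: the provisioner name hpe.com/nimble, the hpe.com/ annotation keys, the default values and the 16Gi size are all assumptions made for illustration. Only allowOverrides, perfPolicy and description are taken from the text above.

```shell
# Sketch only: the real sc-override.yaml and pvc-override.yaml may differ.

# Print a StorageClass that lets PVCs override two parameters.
storageclass_override() {
  cat <<'EOF'
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: override
provisioner: hpe.com/nimble            # assumed provisioner name
parameters:
  description: "Volume provisioned by the override StorageClass"  # hypothetical default
  perfPolicy: "default"                # hypothetical default
  allowOverrides: description,perfPolicy
EOF
}

# Print a PVC that overrides both parameters through annotations.
# $1 = Performance Policy name, $2 = volume description
pvc_override() {
  cat <<EOF
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: override
  annotations:
    hpe.com/description: "$2"          # assumed annotation key format
    hpe.com/perfPolicy: "$1"           # assumed annotation key format
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 16Gi                    # hypothetical size
  storageClassName: override
EOF
}
```

A Cluster Administrator could then run `storageclass_override | oc create -f-`, and an Application Owner `pvc_override "<PERFORMANCE POLICY>" "<DESCRIPTION>" | oc create -f-`. Any parameter not listed in allowOverrides would be expected to keep the value from the StorageClass.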

Using a proxy deployment to funnel data

For the restore exercises we'll use a separate deployment to mount clones and copy data from. For lack of an official nomenclature, we call this a proxy. The proxy deployment will sit alongside a production application in the same namespace to recover files from cloned snapshots and copy those files into the running deployment. Nothing prevents the Application Owner from attaching the clone to the running deployment, but understand that attaching and detaching volumes on running deployments will restart the container and may cause unnecessary outages.

One might have a preference for which base image to use for the proxy deployment depending on the use case. We’re only going to use the proxy deployment to inspect filesystems, so a standard bash shell and busybox will be more than enough. (The fine print: the tar command is required in the image)

Go ahead and create the proxy:

$ oc create -f proxy.yaml

deployment.extensions "proxy" created

Recovering parts of a filesystem

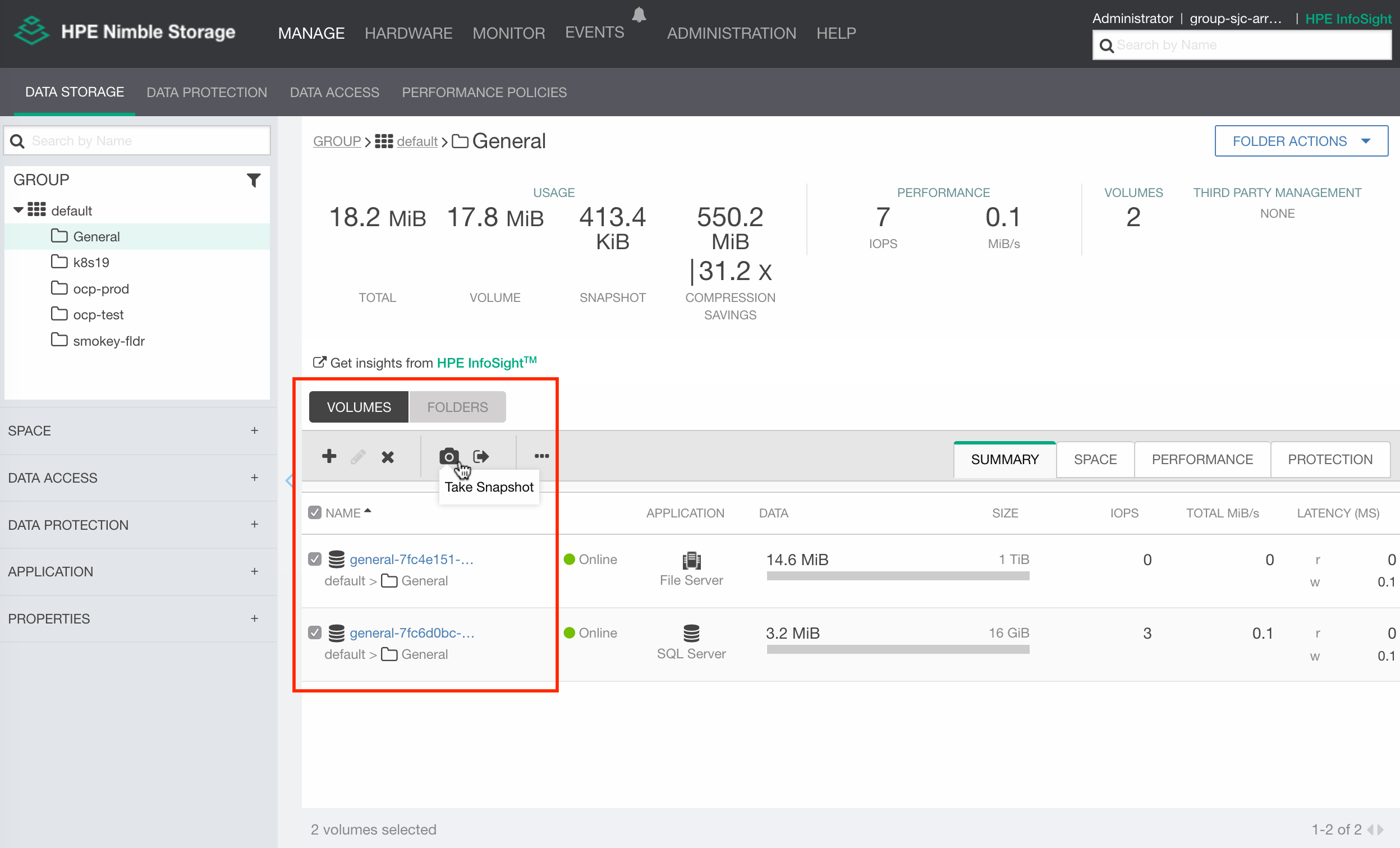

In an effort to not diverge too far from part I, we’ll use the same WordPress application to serve as an example. A somewhat modified default “General” StorageClass is being used to serve as class for both PVCs, only using the perfPolicy override to distinguish the characteristics. The class also contains all overrides needed for our examples discussed in this tutorial.

Create the StorageClass (as Cluster Administrator):

# oc create -f sc-general.yaml

storageclass.storage.k8s.io "general" created

Create the PVCs (as Application Owner):

$ oc create -f pvcs.yaml

persistentvolumeclaim "wordpress" created

persistentvolumeclaim "mariadb" created

… and the WordPress application:

$ oc create -f wp.yaml

secret "mariadb" created

deployment.extensions "mariadb" created

service "mariadb" created

deployment.extensions "wordpress" created

service "wordpress" created

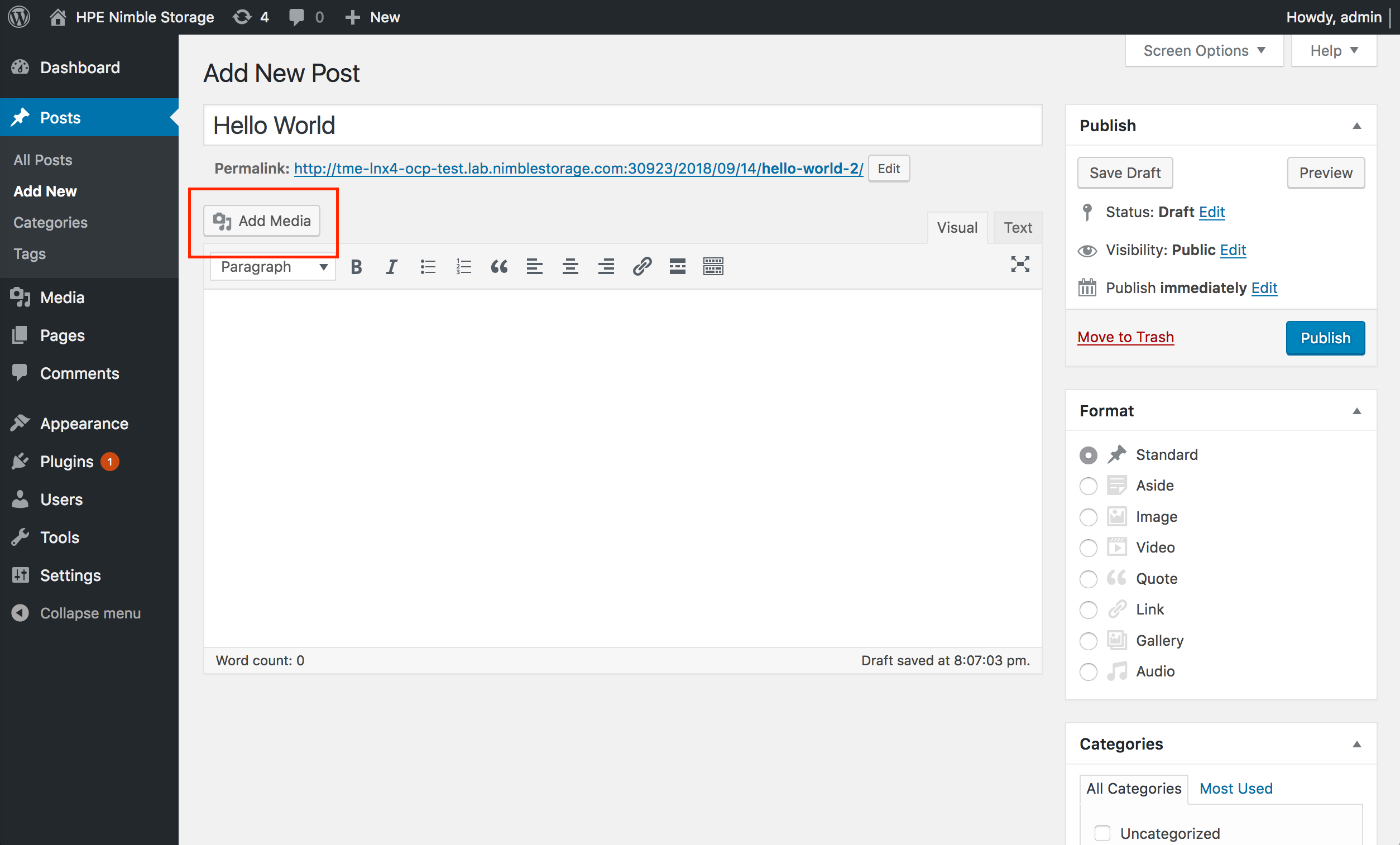

Run through the wizard to setup WordPress. Then, create some content in the WordPress application by adding a blog post. Make sure to upload a file with your blog post.

$ oc rsh deploy/wordpress find -name bitmap.png

./wp-content/uploads/2018/09/bitmap.png

$ oc rsh deploy/wordpress rm \

./wp-content/uploads/2018/09/bitmap.png

Note: WordPress creates a series of thumbnails for each upload. Make sure the image asset you delete is the same one you verify against.

Trying to download the file from WordPress should now give a 404. Now, to restore the file, we’ll use cloneOfPVC and specify which snapshot we want to clone the PVC from. We’ll use the nightly snapshot created previously.

$ oc create -f pvc-clone.yaml

persistentvolumeclaim "wordpress-clone" created

Attaching the wordpress-clone to the proxy pod is quite simple, just:

$ oc volume --add -t pvc --claim-name wordpress-clone -m /wordpress-clone \

--name wordpress-clone deploy/proxy

deployment.apps "proxy" updated

OpenShift provides two facilities to copy files into and out of containers: cp and rsync. In this example we’re only copying a single file. If a complete directory structure is needed, rsync is the recommended facility to use.

$ oc cp proxy-6688dfc546-sfbgm:wordpress-clone/wp-content/uploads/2018/09/bitmap.png /tmp/bitmap.png

$ oc cp /tmp/bitmap.png wordpress-7dd955968f-fgksj:wp-content/uploads/2018/09/bitmap.png

Presto! The file is now restored. Reload the 404 page to download the newly restored file.

Hint: Get the pod names by running 'oc get pod'
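For a whole directory tree, the pod lookup and the copy can be scripted together. The sketch below makes a few assumptions: pod names follow the usual <deployment>-<replicaset-hash>-<pod-hash> pattern, the function names and the /tmp/restore-staging staging directory are made up for this example, and setting DRY_RUN only prints the commands instead of running them.

```shell
# Print the first Running pod owned by the given deployment.
# Reads `oc get pod` output on stdin.
pod_for_deploy() {
  awk -v deploy="$1" '
    $1 == "NAME" { next }              # skip the header row, if present
    $3 == "Running" {
      n = split($1, parts, "-")
      if (n < 3) next
      # Drop the two trailing hashes to get the owning deployment name.
      owner = parts[1]
      for (i = 2; i <= n - 2; i++) owner = owner "-" parts[i]
      if (owner == deploy) { print $1; exit }
    }'
}

# Run a command, or only print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Copy a directory from one pod to another via a local staging directory.
# $1 = source pod, $2 = source dir, $3 = destination pod, $4 = destination dir
copy_dir_between_pods() {
  src_pod=$1; src_dir=$2; dst_pod=$3; dst_dir=$4
  staging=${STAGING_DIR:-/tmp/restore-staging}   # hypothetical default
  run mkdir -p "$staging"
  run oc rsync "$src_pod:$src_dir/" "$staging/"
  run oc rsync "$staging/" "$dst_pod:$dst_dir/"
}
```

Typical use would be `proxy=$(oc get pod | pod_for_deploy proxy)` and likewise for wordpress, followed by `copy_dir_between_pods "$proxy" /wordpress-clone/wp-content/uploads "$wp" <UPLOADS DIRECTORY IN THE WORDPRESS CONTAINER>`. Matching on the full deployment name rather than a prefix keeps proxy from accidentally matching proxy-remote pods.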

Remove the volume from the proxy deployment and delete it:

$ oc volume --remove --name wordpress-clone deploy/proxy

deployment.apps "proxy" updated

$ oc delete -f pvc-clone.yaml

persistentvolumeclaim "wordpress-clone" deleted

Restoring an entire volume to a known good state

Envision a WordPress malware attack that either defaces the entire website, plants a trojan or even ransomware. An immediate response would be to shut down the Deployment but also remove the external Route until the container images have been patched for the vulnerability, but how would you get your data back?

Or consider an upgrade of a database-backed application that revs the image and fails halfway through. How would one simply hit the undo button to correct course?

Nimble provides two ways of reverting the persistent storage associated with a Deployment: either clone from a known state and continue to run the application from the clone, or do a true restore of the Nimble volume to a known snapshot. There are pros and cons with both methods. Cloning keeps the parent around forever, and there are a number of renaming procedures to follow before bringing the deployment back online. In this tutorial we’ll use the latter method.

Restoring the volume in OpenShift involves deleting the PVC from OpenShift, which can make Application Owners uncomfortable. Nimble will by default not delete a volume from the array unless the Application Owner or Cluster Administrator explicitly requests removals to be permanent. This is where it could get hairy. The Cluster Administrator might override deleteOnRm, and the Application Owner can’t reliably know if a parameter is overridden. Therefore, communication is key if users are to use the volume restore functionality on the cluster, and deleteOnRm should be expressed in the StorageClass, even if it’s false by default. This must be a conscious choice by the Cluster Administrator and thoroughly tested on non-production volumes before letting Application Owners leverage the functionality.

So, we’ll work from the same snapshot created in the single file restore scenario. This time just delete any blog post created and insert a "malicious" post. WordPress is now ready to have both the wordpress and mariadb deployment restored to a known state.

Before starting to dismantle the deployment and PVCs, make a note of the volume names used:

$ oc get pvc

NAME STATUS VOLUME CAPACITY STORAGECLASS AGE

mariadb Bound general-7fc6d0bc-... 16Gi general 1h

wordpress Bound general-7fc4e151-b84c-... 1Ti general 1h

Note: The PV name is the Docker Volume name and not the actual Nimble volume name. By default a ".docker" suffix is added to the name. Both suffix and prefix are configurable by a Cluster Administrator.

To be able to remove the production PVCs and prepare the restore, the deployments need to be taken down:

$ oc delete deploy/wordpress deploy/mariadb

deployment.extensions "wordpress" deleted

deployment.extensions "mariadb" deleted

Ensure pods are deleted (with oc get pods), then:

$ oc delete pvc/wordpress pvc/mariadb

persistentvolumeclaim "wordpress" deleted

persistentvolumeclaim "mariadb" deleted

Before re-creating the PVCs, we need to annotate them with the names of the volumes to restore. Edit the spec on the fly with this one-liner:

$ sed -e 's/%WORDPRESS_VOLUME%/general-7fc4e151-....docker/' \

-e 's/%MARIADB_VOLUME%/general-7fc6d0bc-....docker/' \

pvcs-restore.yaml | oc create -f-

persistentvolumeclaim "wordpress" created

persistentvolumeclaim "mariadb" created

Note: A general behavior of Nimble restore functionality is that the volume needs to be in an offline state. This is achieved by removing the volume from OpenShift with deleteOnRm=false.

Inspecting the PVCs shows that new PVs have been created. Bring the deployments back up:
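The placeholder substitution in the one-liner above can be scripted so the volume names don't have to be typed by hand. This is a sketch under stated assumptions: the function names are made up, the ".docker" suffix is the default mentioned earlier and must be changed if the Cluster Administrator configured a different one, and the PV names have to be captured before the PVCs are deleted.

```shell
# Derive the volume name to restore from a PV name by appending the suffix.
# $1 = PV name, $2 = suffix (defaults to ".docker")
nimble_volume_name() {
  printf '%s%s\n' "$1" "${2:-.docker}"
}

# Fill in the two placeholders of a restore template read from stdin.
# $1 = WordPress volume name, $2 = MariaDB volume name
render_restore_template() {
  sed -e "s|%WORDPRESS_VOLUME%|$1|" \
      -e "s|%MARIADB_VOLUME%|$2|"
}

# Possible usage, run BEFORE deleting the PVCs:
#   wp_pv=$(oc get pvc wordpress -o jsonpath='{.spec.volumeName}')
#   db_pv=$(oc get pvc mariadb -o jsonpath='{.spec.volumeName}')
# ...and after the deployments and PVCs are gone:
#   render_restore_template "$(nimble_volume_name "$wp_pv")" \
#     "$(nimble_volume_name "$db_pv")" < pvcs-restore.yaml | oc create -f-
```

Using | as the sed delimiter avoids surprises should a name ever contain a slash.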

$ oc get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

mariadb Bound general-e8e4fd11-... 16Gi RWO general 7s

wordpress Bound general-e8e34e7e-... 1Ti RWO general 7s

$ oc create -f wp.yaml

deployment.extensions "mariadb" created

deployment.extensions "wordpress" created

Note: This will output some errors of existing objects, which is expected.

Refresh the WordPress site once the application is up and running again. The application is now restored from the nightly snapshot and this is presto moment number two!

Preparing a cluster for recovery from remote backups

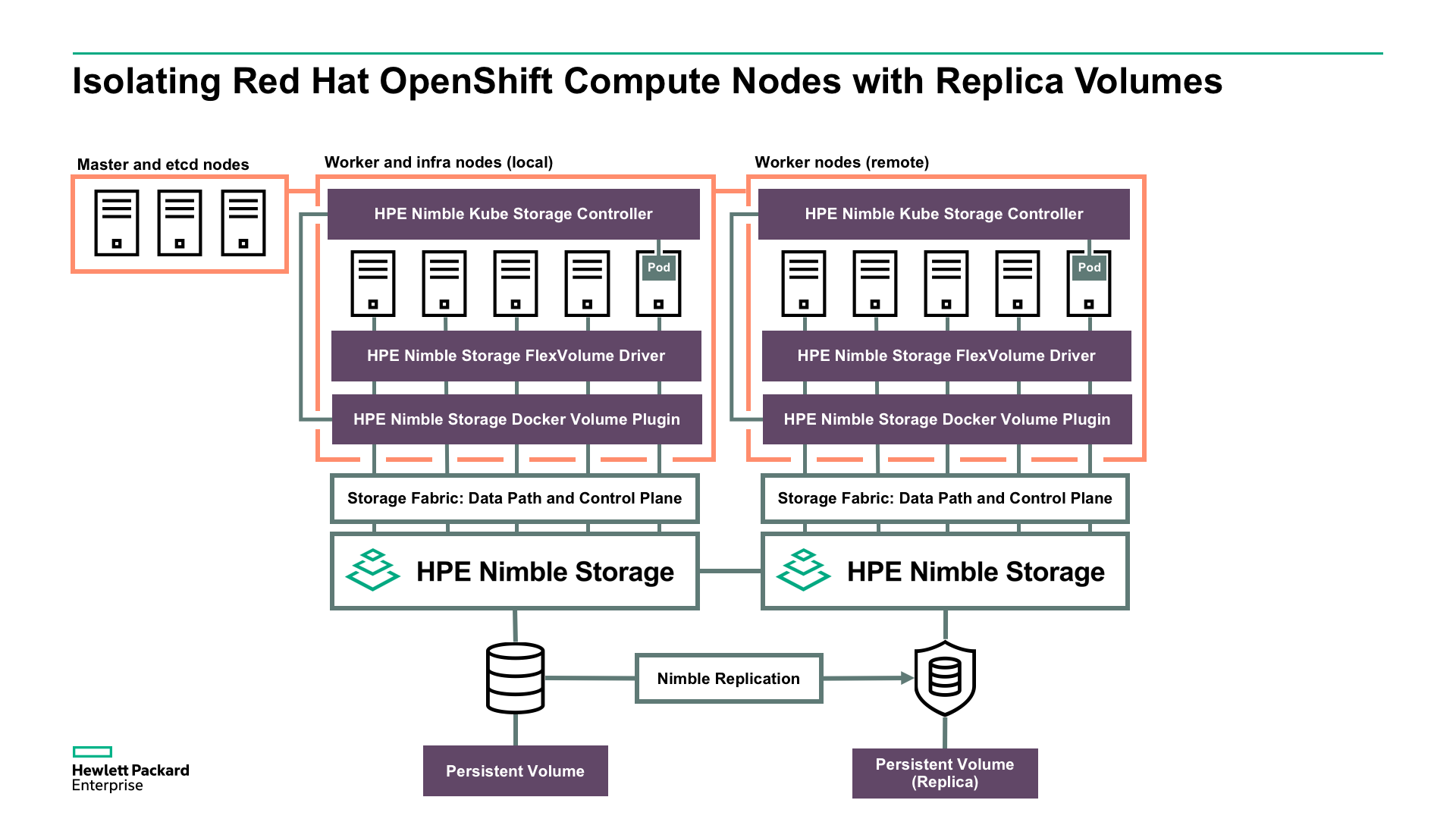

In the event of having a remote replica of a Nimble volume, either to a downstream Nimble array or HPE Cloud Volumes, it’s possible to isolate OpenShift compute nodes adjacent to the replication destination and access the data through the compute node. When labelled and tainted properly it’s possible to have these remote compute nodes part of the same OpenShift cluster for restoring files from snapshots with longer retention than is available on the local volume.

Once the API server is up and running again, mark the remote node unschedulable and observe the taint on the node:

# oc adm manage-node --schedulable=false remote-compute

NAME STATUS ROLES AGE VERSION

remote-compute Ready,SchedulingDisabled compute 41d v1.10.0+b81c8f8

# oc get node remote-compute -o yaml | grep -A4 taints

taints:

- effect: NoSchedule

key: node.kubernetes.io/unschedulable

timeAdded: 2018-09-07T15:26:17Z

unschedulable: true

With labels and taints in place, deploy the remote proxy:

$ oc create -f proxy-remote.yaml

deployment.extensions "proxy-remote" created

The HPE Nimble Kube Storage Controller listens to events that are destined to the hpe.com prefix. To help distinguish the “local” default controller we need to create a second one that listens on a different prefix.

Deploy the remote controller:

# oc create -f dep-kube-storage-controller-remote.yaml

deployment.extensions "kube-storage-controller-doryd-remote" created

Validate that there is a second instance of doryd and the proxy scheduled on the remote node:

# oc get pod -n kube-system -o wide

$ oc get pod -o wide

Pay attention to the NODE column.

We’ll also craft a StorageClass that allows Application Owners to import replica volumes as clones.

$ oc create -f sc-remote.yaml

storageclass.storage.k8s.io "remote" created

It’s also important to reconfigure NLT to connect to the remote array and have the FlexVolume driver serve the remote controller. The following steps assume no prior group is configured with NLT.

# nltadm --group --add --ip-address <REMOTE GROUP MANAGEMENT IP> \

--username <GROUP USERNAME> --password <GROUP PASSWORD>

# mv /usr/libexec/kubernetes/kubelet-plugins/volume/exec/hpe.com~nimble/nimble /usr/libexec/kubernetes/kubelet-plugins/volume/exec/remote.hpe.com~nimble/nimble

The key element throughout the configuration of the remote location is the provisioner prefix. The HPE Nimble Kube Storage Controller, the HPE Nimble FlexVolume Driver and the StorageClass all need to be keyed against the same prefix, which, in essence, is at the Cluster Administrator’s discretion to configure as desired. And, most importantly, the PVC also needs to map against the key in the annotations.
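To make the relationship concrete, here is a small sketch that derives each name from a single prefix. The FlexVolume path matches the mv command above. The provisioner name follows the general FlexVolume vendor~driver convention and the annotation key format is an assumption on our part, so verify both against sc-remote.yaml and pvc-remote.yaml. The function names are made up for this example.

```shell
# All three names are derived from one prefix, e.g. "hpe.com" or "remote.hpe.com".

# Provisioner to reference in the StorageClass (assumed: <prefix>/nimble).
provisioner_for() {
  printf '%s/nimble\n' "$1"
}

# Location of the FlexVolume driver binary on each compute node.
flexvolume_path_for() {
  printf '/usr/libexec/kubernetes/kubelet-plugins/volume/exec/%s~nimble/nimble\n' "$1"
}

# PVC annotation key for an overridable parameter (assumed: <prefix>/<parameter>).
annotation_key_for() {
  printf '%s/%s\n' "$1" "$2"
}

# Show what has to line up for a given prefix.
show_prefix_wiring() {
  echo "provisioner: $(provisioner_for "$1")"
  echo "flexvolume:  $(flexvolume_path_for "$1")"
  echo "annotation:  $(annotation_key_for "$1" "<parameter>")"
}
```

Running `show_prefix_wiring remote.hpe.com` prints the three values side by side, which makes a mismatched prefix easy to spot.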

Note: HPE Cloud Volumes will be covered in a future post, but as a hint, the procedures will be identical, just a different FlexVolume driver name.

Recovering parts of a filesystem from a remote location

Remote replica volumes are offline and not known to OpenShift, which is why we need a separate StorageClass with the remote prefix to import the replica volume. Inherently, this means we need to know the exact name of the replica volume when creating the PVC, as well as which snapshot to use for the import. The default snapshot, which is the latest one, is most likely not what’s desired. A quick e-mail to the Storage Architect, however, would sort that out.

Create the PVC and attach to the remote proxy once the details have been annotated:

$ sed -e 's/%WORDPRESS_REMOTE%/general-.../' \

-e 's/%WORDPRESS_REMOTE_SNAPSHOT%/gene...cker-Snapshot-18:21.../' \

pvc-remote.yaml | oc create -f-

persistentvolumeclaim "wordpress-remote" created

$ oc volume --add -t pvc --claim-name wordpress-remote \

-m /wordpress-remote --name wordpress-remote deploy/proxy-remote

deployment.apps "proxy-remote" updated

$ oc rsh deploy/proxy-remote ls /wordpress-remote

index.php ...

Copying data from the remote proxy is no different from the local proxy deployment as we’re still in the same cluster. This is the third presto of this tutorial!

Conclusion

We’re at a stage where Nimble is feature complete from a backup & recovery standpoint given the API interfaces we’ve been using to get here. This gives us the distinct advantage of having a robust CSI implementation with all the bells and whistles when CSI comes out of beta for OpenShift. For starters, snapshots as native Kubernetes/OpenShift objects are far more intuitive for the Application Owner than having to rely on external references.

In the next part of the "Data Protection for Containers" blog series we’ll discuss DR and the features we provide to transition workloads between HPE Nimble Storage array groups and HPE Cloud Volumes. In the next major NimbleOS release we’ll also have something in store for said use cases. Stay tuned!
