
Released: HPE Volume Driver for Kubernetes FlexVolume Plugin 3.0 and more!

It’s been a remarkable journey, starting with a standalone Docker Volume Plugin built exclusively for the Docker Engine and evolving through Docker Swarm, Mesos Marathon and Kubernetes. With the introduction of NimbleOS 5.1.3, we’re now ready to ship three distinct container integrations that support a broad set of container ecosystems and orchestrators:

- HPE Volume Driver for Kubernetes FlexVolume Plugin v3.0.0

- HPE Dynamic Provisioner for Kubernetes v3.0.0

- HPE Nimble Storage Volume Plugin for Docker v3.0.0

All integrations leverage a “Container Provider” architecture that is designed to support multiple backends. We’re already planning the v3.1.0 release, which will include additional HPE platforms. Also, to increase productivity across internal HPE teams, partners and our user base, all components have been open sourced under the Apache 2.0 license. The source code and documentation for the FlexVolume Driver, Dynamic Provisioner and Docker Volume Plugin are hosted on GitHub.

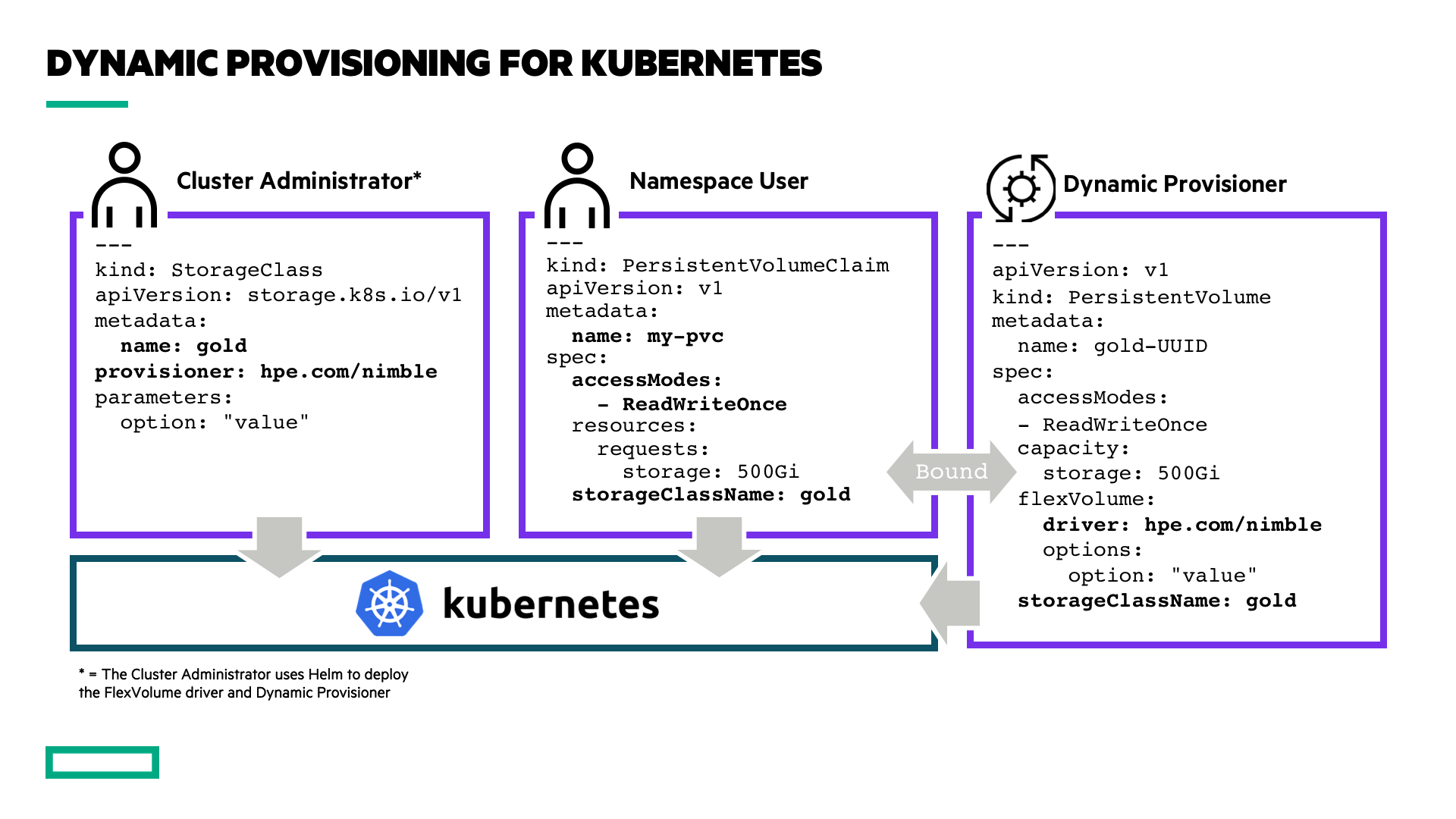

FlexVolume Driver and Dynamic Provisioner as a workload

The Kubernetes FlexVolume Plugin allows vendors to write binary drivers that handle volume attach/detach and mount/unmount for Kubernetes Pods. On its own it’s a very primitive interface, and it requires a Dynamic Provisioner to create Persistent Volumes before it becomes useful.
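To make the call-and-response shape of that interface concrete, here is a minimal dry-run sketch of a FlexVolume-style driver in Python. It assumes the upstream convention that the kubelet runs the driver as `<driver> <command> [args]` and reads a JSON object with a `status` field from stdout. The handler names, the `ext4` fallback and the exit-code choice are hypothetical; it never touches a disk and is not the HPE driver's implementation.

```python
#!/usr/bin/env python3
"""Dry-run skeleton of a FlexVolume-style driver (hypothetical, not HPE's driver)."""
import json
import sys


def respond(status, **extra):
    """Serialize a FlexVolume-style JSON response."""
    payload = {"status": status}
    payload.update(extra)
    return json.dumps(payload)


def do_init(args):
    # Advertise no separate attach/detach step, so only mount/unmount are called.
    return respond("Success", capabilities={"attach": False})


def do_mount(args):
    # Expected arguments: <mount dir> <json options>
    if len(args) != 2:
        return respond("Failure", message="usage: mount <mount dir> <json options>")
    mount_dir, raw_opts = args
    try:
        opts = json.loads(raw_opts)
    except json.JSONDecodeError as exc:
        return respond("Failure", message=f"invalid options: {exc}")
    # Assumption: fall back to ext4 when no filesystem type is passed.
    fs_type = opts.get("kubernetes.io/fsType", "ext4")
    # Dry run: a real driver would prepare the device, create the
    # filesystem if needed and mount it at mount_dir here.
    return respond("Success", message=f"dry-run: would mount {fs_type} at {mount_dir}")


def do_unmount(args):
    # Expected arguments: <mount dir>
    if len(args) != 1:
        return respond("Failure", message="usage: unmount <mount dir>")
    # Dry run: a real driver would unmount and clean up here.
    return respond("Success", message=f"dry-run: would unmount {args[0]}")


HANDLERS = {"init": do_init, "mount": do_mount, "unmount": do_unmount}


def handle(argv):
    """Dispatch one driver call; unknown commands are reported as unsupported."""
    if not argv:
        return respond("Failure", message="no command given")
    handler = HANDLERS.get(argv[0])
    if handler is None:
        return respond("Not supported")
    return handler(argv[1:])


if __name__ == "__main__":
    result = handle(sys.argv[1:])
    print(result)
    # Assumption: also signal failure through the process exit code.
    sys.exit(1 if json.loads(result)["status"] == "Failure" else 0)
```

Reporting `"Not supported"` for commands the driver doesn't implement keeps the dispatcher small while still giving the caller a well-formed JSON answer.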

We’ve vastly improved the delivery mechanism of the integrations by enabling Kubernetes administrators to deploy and manage the FlexVolume Driver and Dynamic Provisioner as native Kubernetes workloads. The workloads themselves may be declared directly with kubectl or deployed with Helm, the package manager for Kubernetes applications.

The FlexVolume Driver GitHub repository has detailed instructions on how to deploy the integration, either standalone or with Helm. An example installation workflow on GKE On-Prem could look like this (assuming Tiller is installed and working in your cluster):

Create a values.yaml file:

```yaml
---
backend: "10.64.32.9"
username: "admin"
password: "admin"
pluginType: "nimble"
protocol: "iscsi"
fsType: "xfs"
storageClass:
  create: true
  defaultClass: true
```

Add Helm repo and deploy:

```
$ helm repo add hpe https://hpe-storage.github.io/co-deployments
"hpe" has been added to your repositories
$ helm install -f values.yaml --name hpe-flexvolume hpe/hpe-flexvolume-driver --namespace kube-system
NAME:   hpe-flexvolume
LAST DEPLOYED: Mon Sep 23 11:00:28 2019
NAMESPACE: kube-system
STATUS: DEPLOYED
```

The Helm chart also creates a default StorageClass as per the values.yaml file and users may immediately start creating PVCs:

```yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 32Gi
```

Paste in the PVC:

```
$ kubectl create -f-
persistentvolumeclaim/my-pvc created
$ kubectl get pvc/my-pvc
NAME     STATUS   VOLUME                   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
my-pvc   Bound    hpe-standard-9037d5...   32Gi       RWO            hpe-standard   9s
```
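my-pvc names no storageClassName, yet it lands on hpe-standard because Kubernetes fills in whichever StorageClass is marked as the cluster default. The Python sketch below approximates that defaulting step on plain dicts. It assumes the standard `storageclass.kubernetes.io/is-default-class` annotation is what marks the chart's class as default, and, as a simplification, it rejects multiple defaults instead of applying whatever tie-breaking rule a given Kubernetes version uses.

```python
DEFAULT_CLASS_ANNOTATION = "storageclass.kubernetes.io/is-default-class"


def default_storage_class(storage_classes):
    """Return the name of the StorageClass annotated as default, or None."""
    defaults = [
        sc["metadata"]["name"]
        for sc in storage_classes
        if sc["metadata"].get("annotations", {}).get(DEFAULT_CLASS_ANNOTATION) == "true"
    ]
    if len(defaults) > 1:
        # Simplification: treat several defaults as an error.
        raise ValueError(f"multiple default StorageClasses: {defaults}")
    return defaults[0] if defaults else None


def apply_default_class(pvc, storage_classes):
    """Fill in spec.storageClassName only when the PVC leaves it unset.

    An explicit empty string ("") means "no class" and is left alone.
    """
    spec = pvc.setdefault("spec", {})
    if "storageClassName" not in spec:
        default = default_storage_class(storage_classes)
        if default is not None:
            spec["storageClassName"] = default
    return pvc


if __name__ == "__main__":
    classes = [{"metadata": {"name": "hpe-standard",
                             "annotations": {DEFAULT_CLASS_ANNOTATION: "true"}}}]
    pvc = {"metadata": {"name": "my-pvc"},
           "spec": {"accessModes": ["ReadWriteOnce"],
                    "resources": {"requests": {"storage": "32Gi"}}}}
    print(apply_default_class(pvc, classes)["spec"]["storageClassName"])  # hpe-standard
```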

The DaemonSet workload type automatically deploys one replica of the FlexVolume driver on each node of the cluster and ensures that all host packages are installed and the necessary services are running before serving the cluster.

```
$ kubectl get ds/hpe-flexvolume-driver -nkube-system
NAME                    DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   AGE
hpe-flexvolume-driver   3         3         3       3            3           11m
```

Scaling clusters with GKE On-Prem is done through a MachineDeployment API object. In the example below, my cluster is named "tme-dc". As nodes are added to the cluster, the FlexVolume driver is automatically deployed to each new node as it comes online.

```
$ kubectl scale machinedeployment/tme-dc --replicas=5
machinedeployment.cluster.k8s.io/tme-dc scaled
```

After a few minutes, our DaemonSet is properly scaled across the two newly added nodes:

```
$ kubectl get ds/hpe-flexvolume-driver -nkube-system
NAME                    DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   AGE
hpe-flexvolume-driver   5         5         5       5            5           15m
```

The new nodes that are joined to the cluster are automatically conformed and optimized to consume block storage from the HPE Nimble Storage array.
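The DESIRED column follows the number of nodes the DaemonSet should run on, which is why it moved from 3 to 5 without any change to the DaemonSet itself. The Python sketch below is a simplified model of that count: a node qualifies when it matches the pod's nodeSelector and every NoSchedule/NoExecute taint on it is tolerated. It ignores node affinity and the tolerations the DaemonSet controller adds on its own, and the class and function names are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass
class Taint:
    key: str
    value: str = ""
    effect: str = "NoSchedule"


@dataclass
class Toleration:
    key: str = ""            # empty key with operator "Exists" tolerates every taint
    operator: str = "Equal"
    value: str = ""
    effect: str = ""         # empty effect matches every effect


@dataclass
class Node:
    name: str
    labels: dict = field(default_factory=dict)
    taints: list = field(default_factory=list)


def tolerates(toleration, taint):
    """Simplified version of the toleration-matches-taint rule."""
    if toleration.effect and toleration.effect != taint.effect:
        return False
    if toleration.operator == "Exists":
        return toleration.key in ("", taint.key)
    return toleration.key == taint.key and toleration.value == taint.value


def node_is_eligible(node, node_selector, tolerations):
    # Every nodeSelector label must be present with the same value.
    if any(node.labels.get(k) != v for k, v in node_selector.items()):
        return False
    # Only NoSchedule/NoExecute taints block placement; PreferNoSchedule does not.
    for taint in node.taints:
        if taint.effect in ("NoSchedule", "NoExecute") and not any(
            tolerates(t, taint) for t in tolerations
        ):
            return False
    return True


def desired_number_scheduled(nodes, node_selector=None, tolerations=()):
    """Count the nodes a DaemonSet pod would be placed on (simplified model)."""
    node_selector = node_selector or {}
    return sum(node_is_eligible(n, node_selector, tolerations) for n in nodes)


if __name__ == "__main__":
    nodes = [Node(f"worker-{i}") for i in range(3)]
    print(desired_number_scheduled(nodes))  # 3
    nodes += [Node("worker-3"), Node("worker-4")]  # scale out by two nodes
    print(desired_number_scheduled(nodes))  # 5
```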

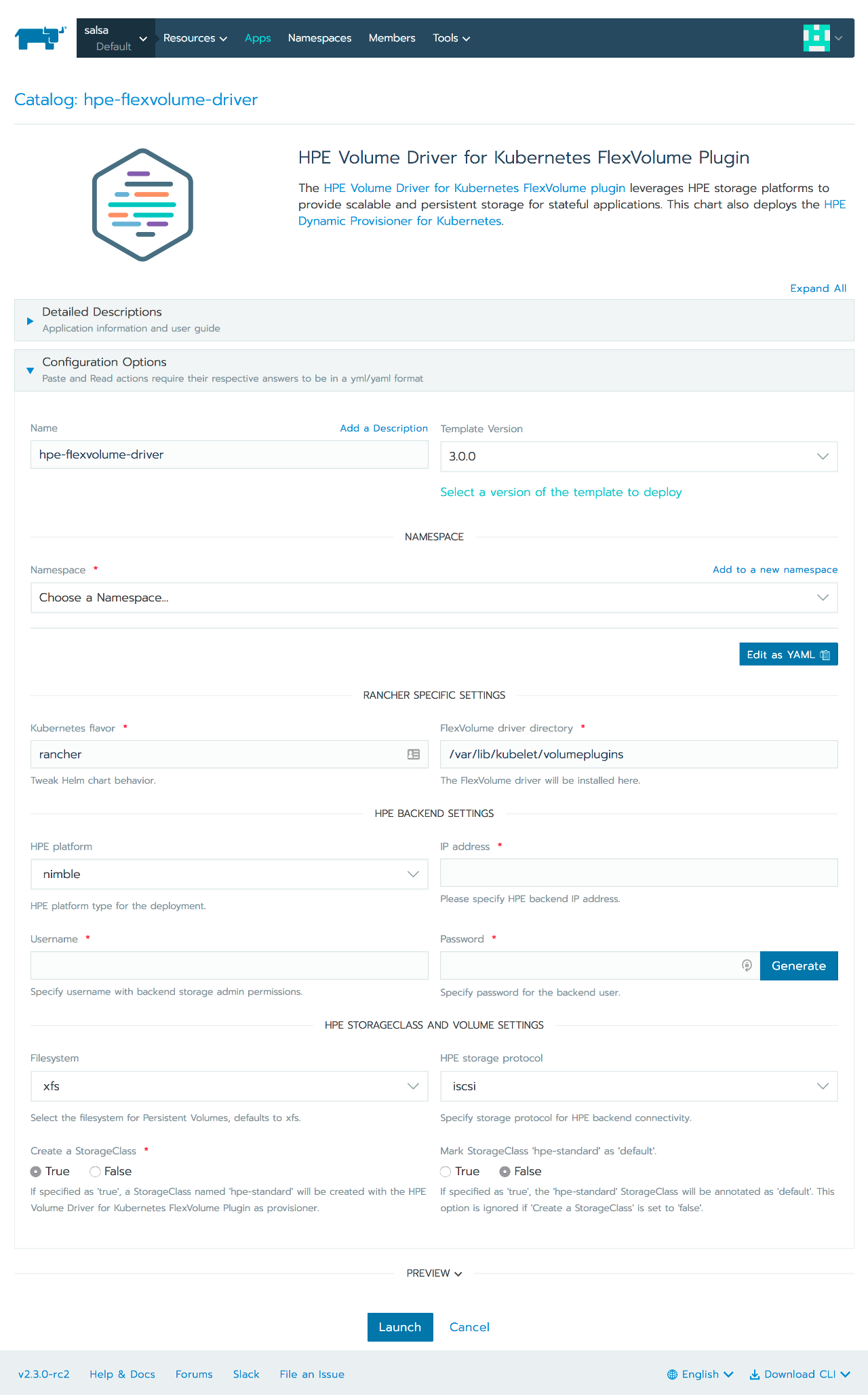

The FlexVolume Driver can also be deployed through the Rancher Catalog, which provides an incredibly intuitive interface for deploying the driver.

Docker Volume Plugin

Moving forward, the HPE Nimble Storage Volume Plugin for Docker will only be delivered as a managed plugin for Docker Swarm and the Docker Engine (see Summary below for the deprecation of NLT). It provides the same feature set as the FlexVolume Driver, with the simplicity of managing Docker Volumes on Nimble through the Docker API, the Docker CLI or Docker Compose.

The Docker Volume Plugin is available as verified content on Docker Hub.
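Creating a volume with a managed plugin ultimately goes through the Docker Engine API's `POST /volumes/create` endpoint. The sketch below shows that request using only Python's standard library over the daemon's Unix socket. The driver alias `nimble` and the `sizeInGiB` option are assumptions for illustration; check the plugin documentation for the name you installed the plugin under and the options it actually accepts.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection to the Docker daemon over its Unix socket."""

    def __init__(self, socket_path="/var/run/docker.sock", timeout=30):
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def volume_create_body(name, driver, opts=None):
    """Build the JSON body for POST /volumes/create.

    DriverOpts is a string-to-string map, so option values are stringified.
    """
    return {
        "Name": name,
        "Driver": driver,
        "DriverOpts": {key: str(value) for key, value in (opts or {}).items()},
    }


def create_volume(name, driver, opts=None, socket_path="/var/run/docker.sock"):
    """Ask the Docker Engine to create a volume through the given driver."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request(
            "POST",
            "/volumes/create",
            body=json.dumps(volume_create_body(name, driver, opts)),
            headers={"Content-Type": "application/json"},
        )
        response = conn.getresponse()
        payload = response.read().decode()
        if response.status != 201:  # the Engine API answers 201 Created on success
            raise RuntimeError(f"volume create failed ({response.status}): {payload}")
        return json.loads(payload)
    finally:
        conn.close()


if __name__ == "__main__":
    # Hypothetical driver alias and option; print the body instead of calling the daemon.
    print(json.dumps(volume_create_body("myvol", "nimble", {"sizeInGiB": 10})))
```

On a host with the plugin installed, `create_volume("myvol", "nimble", {"sizeInGiB": 10})` would send the request; the `__main__` block only prints the body so it can run anywhere.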

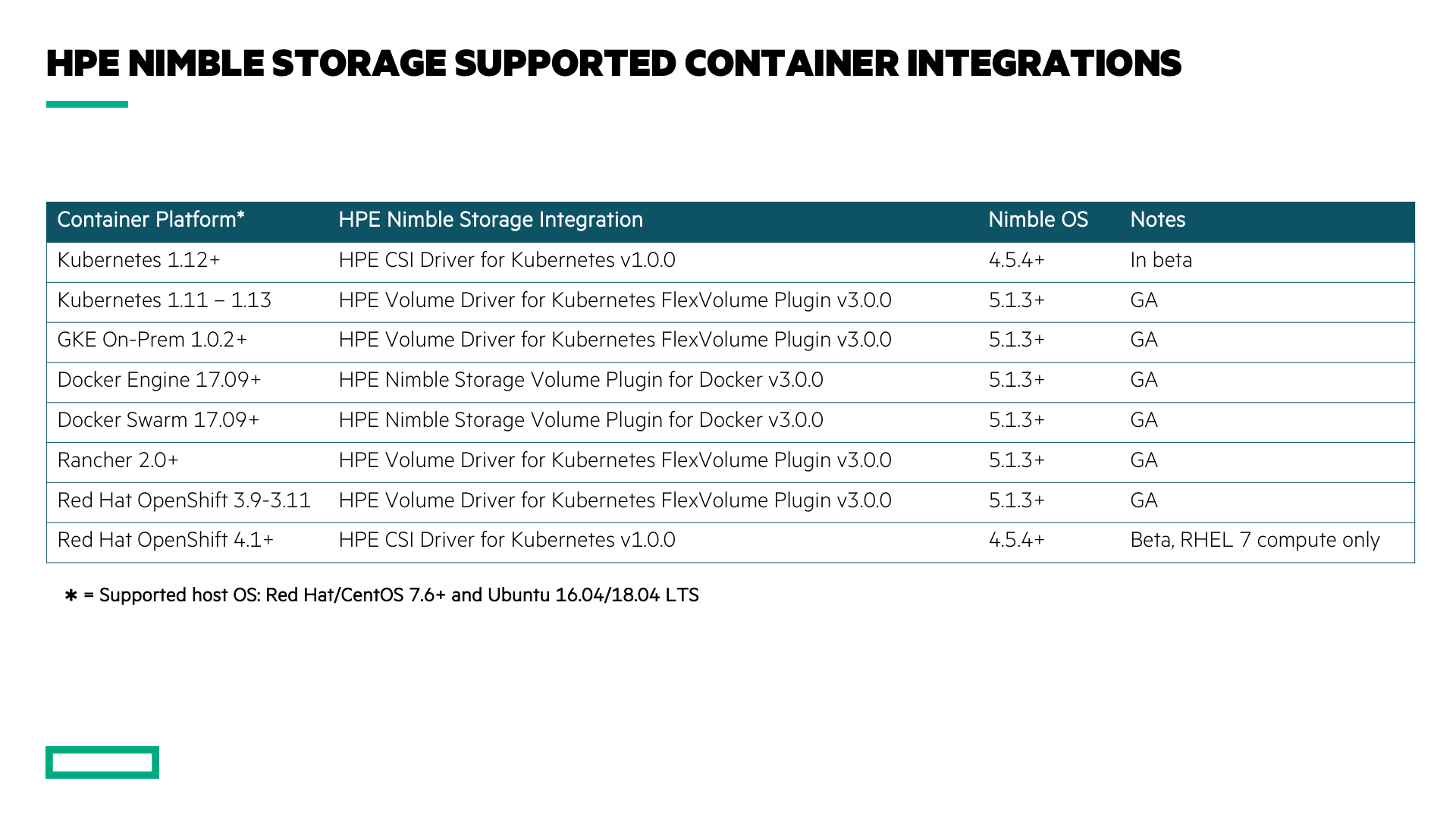

Supported Platforms

As there are a lot of versions both northbound and southbound, we’ve put together this table as an overview of what we take support calls on.

The integrations may work on many other downstream Kubernetes distributions. Please reach out to your HPE representative if your environment isn’t listed. A warm welcome to Google and Rancher, the newest members of the family!

What’s new in this release?

While HPE Nimble Storage is leaps and bounds ahead of the competition in abstracting enterprise storage capabilities to container ecosystems, the focus for this release has primarily been to harden and broaden support for container platforms to ensure a seamless experience for our customers.

That said, besides a ton of scalability, manageability and reliability improvements, we introduced a new parameter: syncOnUnmount. This parameter is the foundation for inter-cluster workload transitions. To leverage this functionality, new volumes need to be provisioned with both syncOnUnmount and protectionTemplate, with a remote HPE Nimble Storage array accessible by the cluster the workload is transitioning to. This capability is a game changer, and we’ve demonstrated it in early Tech Previews with HPE Cloud Volumes. It is now a customer-consumable capability between Nimble arrays. The feature also warrants its own blog post, so stay tuned to the HPE Storage Tech Insiders for the full details!
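The provisioning rule above, that syncOnUnmount only makes sense together with a protectionTemplate, can be sketched as a pre-flight check on a StorageClass `parameters` map. This Python sketch assumes syncOnUnmount takes the strings "true"/"false" (StorageClass parameters are always strings) and uses a hypothetical protection template name; the plugin's own validation may differ.

```python
def validate_transition_parameters(parameters):
    """Return a list of problems found in a StorageClass parameters map.

    StorageClass parameters are plain strings, so booleans arrive as
    "true"/"false" (assumed format for syncOnUnmount).
    """
    problems = []
    raw = parameters.get("syncOnUnmount", "false")
    sync = raw.strip().lower()
    if sync not in ("true", "false"):
        problems.append(f'syncOnUnmount must be "true" or "false", got {raw!r}')
    elif sync == "true" and not parameters.get("protectionTemplate", "").strip():
        problems.append(
            "syncOnUnmount requires a protectionTemplate that replicates to an "
            "array reachable from the destination cluster"
        )
    return problems


if __name__ == "__main__":
    # "replicate-to-dr" is a hypothetical protection template name.
    print(validate_transition_parameters(
        {"syncOnUnmount": "true", "protectionTemplate": "replicate-to-dr"}))
    print(validate_transition_parameters({"syncOnUnmount": "true"}))
```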

Summary

We’ll continue to support our install base with the FlexVolume Driver and Dynamic Provisioner, along with the Docker Volume Plugin, for as long as required. The Container Storage Interface (CSI) was introduced as alpha in Kubernetes 1.9 and became GA in Kubernetes 1.13, and we recently published a beta version of our HPE CSI Driver for Kubernetes for early adopters and proofs of concept. Once the CSI driver becomes Generally Available (GA), it will be the focus for newer Kubernetes-based platforms. Current customers should also note that the legacy delivery method through the HPE Nimble Storage Linux Toolkit (NLT) is being sunset; NLT-2.5.2 is the last release in the family to support containers.

Please reach out to your HPE representative for more information on HPE Nimble Storage and how we can help enable your container projects. Check out previous blogs on this topic to learn more about the use cases HPE enables for persistent storage. Also, come hang out with the team on Slack: sign up at slack.hpedev.io and you’ll find us in #kubernetes and #nimblestorage.
