Comparing HPE on-premises infrastructure vs. Amazon Web Services (AWS): Workload and throughput

In Part 1 of our series, Lou Gagliardi provided an overview of the results from the HPE on-prem vs. AWS study and a brief primer on AWS. In Part 2, Lou examined the TCO for each of the solutions evaluated in the study. Here in Part 3, I discuss the makeup of the workload and provide details of the performance results while comparing each result to the others. You can download the complete technical white paper here: HPE On-Prem vs. AWS. (Registration is required.)

Overview of the cloud-scale advanced analytics workload

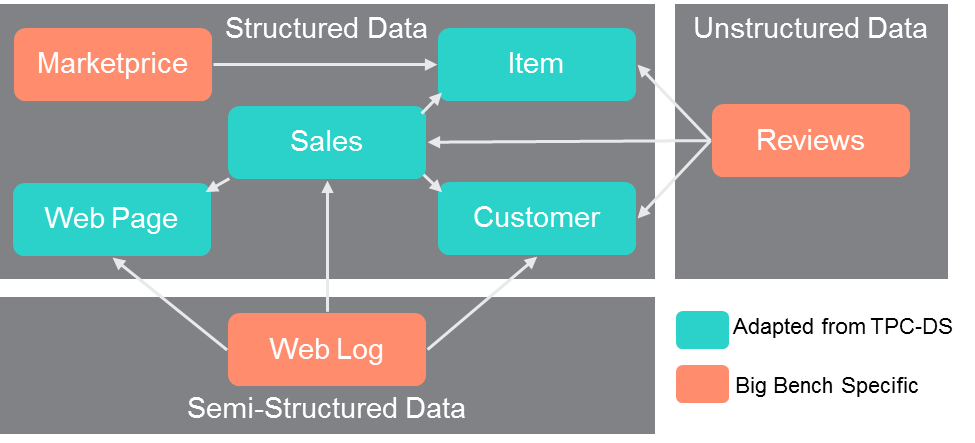

After careful consideration, we chose a workload derived from TPCx-BB, a recent benchmark standardized by the TPC (Transaction Processing Performance Council). This end-to-end application TPC benchmark was the result of a fruitful collaboration between companies and academic researchers. TPCx-BB is designed with a snowflake schema similar to TPC-DS, using a retail model consisting of five fact tables representing three sales channels: store sales, catalog sales, and online sales (TPCx-BB Specification).

The workload includes advanced analytics and reporting queries on structured, semi-structured, and unstructured data. These queries can be classified into four areas: pure Hive queries, Hive queries with MapReduce programs, Hive queries using natural language processing (NLP), and queries using Apache Spark MLlib. This includes a broad mix of real-world business functions, as follows:

- Marketing—Cross-selling, customer micro-segmentation, sentiment analysis, enhancing multichannel consumer experiences

- Merchandising—Assortment optimization, pricing optimization

- Operations—Performance transparency, product return analysis

- Supply chain—Inventory management

- Reporting—Customers and products

The workload is implemented with 30 advanced analytics queries:

- 14 Declarative queries (SQL)

- 7 Queries with Natural Language Processing

- 4 Queries with data preprocessing with Map/Reduce jobs

- 5 Queries with Machine Learning post processing

The workload implements several real-world use cases through a set of queries. A sample use case is: “Find the categories with flat or declining sales for in-store purchases during a given year and for a given store.”

The workload executes these business functions by running various combinations of the 30 separate queries against structured, semi-structured, and unstructured data sets.

For testing purposes, gaining access to TBs of data is cost prohibitive and impractical. Instead, the test data is generated locally using a packaged data generator, which provides consistency from run to run and enables the workload to scale to environments of multiple TBs of data. The data generation runs as a MapReduce job and creates text-based data stored directly on HDFS. The volume of data generated is defined by the scaling factor, where a scaling factor of 1000 generates approximately 1TB of raw data and a scaling factor of 3000 generates approximately 3TB of raw data. The 30-query workload is divided into a “power” phase and a “throughput” phase.
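The relationship between scaling factor and data volume described above can be sketched in a few lines of Python. This is only an illustration: the function name `approx_raw_data_tb` is hypothetical, and the linear rule (about 1TB per 1000 units of scaling factor) is an assumption extrapolated from the two data points quoted in this post, not a definition taken from the benchmark specification.

```python
def approx_raw_data_tb(scale_factor: int) -> float:
    """Estimate the raw data volume in TB for a given scaling factor.

    Assumption: volume grows linearly, at roughly 1 TB per 1000 units of
    scaling factor, as suggested by the 1000 -> ~1TB and 3000 -> ~3TB
    figures quoted in the post.
    """
    if scale_factor <= 0:
        raise ValueError("scale_factor must be positive")
    return scale_factor / 1000.0


if __name__ == "__main__":
    # The two scaling factors used in the study.
    for sf in (1000, 3000):
        print(f"scaling factor {sf}: ~{approx_raw_data_tb(sf):.0f} TB of raw data")
```

The actual size on disk will differ from this estimate, since the generator produces text-based data and the figures above are approximate.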

Analysis of each architecture

In the configurations, we generated results for the two most recent Intel generations: Broadwell (E5) and Skylake (Scalable Processor Family). With the AWS infrastructure, we examined all of the available instance types and selected the m4.16xlarge, i3.16xlarge, and m5.24xlarge instances for worker nodes. For the management nodes, we selected the comparable c4.8xlarge and m5.12xlarge for the Broadwell and Skylake configurations, respectively. The configurations were discussed in detail in Part 2 of this blog series. In addition, a detailed BOM (bill of materials) for each on-prem cluster is available in Appendix A of the On-Prem vs. AWS white paper.

We optimized and ran the workload on each of the target configurations. For each test configuration, we measured the throughput performance according to the average number of queries that complete per minute or Qpm (queries per minute). We ran our tests at the scaling factors of 1TB and 3TB. Additional detail about the performance metric formula can be obtained from Appendix D of the complete white paper.
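As a rough illustration of a queries-per-minute figure, the sketch below divides the number of completed queries by the elapsed time in minutes. This is a simplification and not the formula from Appendix D of the white paper, which may combine phase timings differently. The function name and the numbers in the example are hypothetical.

```python
def queries_per_minute(queries_completed: int, elapsed_seconds: float) -> float:
    """Average number of queries completed per minute over a run.

    Simplified illustration only; the study's actual metric formula is
    defined in Appendix D of the white paper.
    """
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return queries_completed / (elapsed_seconds / 60.0)


if __name__ == "__main__":
    # Hypothetical run: all 30 queries finish in one hour.
    print(queries_per_minute(30, 3600.0))
```

A low value is expected for this kind of workload, because each query is a multi-stage analytics job rather than a short database lookup.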

Running the workloads on AWS is a smooth process, even though it requires certain settings specific to the environment. For example, each time we stop and start the instances, the public IP addresses change, because the addresses from the previous session are released back into the pool for use by other EC2 tenants. Since we use a VPC, the private IP addresses remain the same and only the public IP addresses change.

All AWS workloads ran using the on-demand purchase option, since it was not feasible for us to purchase with long-term commitments simply for this evaluation. However, we have no reason to believe the purchase option would have a material effect on the throughput performance. In a typical environment, there are additional charges per unit of data transferred to and from AWS instances. Our TCO comparison did not include these data transfer charges.

During the test, we noticed an anomaly in the performance comparison between the shared and dedicated options, but only with the AWS m4x instances. The shared instances delivered better throughput than the dedicated instances, by 5 to 20% depending on the day, for both the 1TB and 3TB scale factor runs. One theory is that access latency to the EBS volumes is sensitive to the logical distance across the network, and that dedicated instances may be physically provisioned farther away from the EBS volumes than shared instances. We cannot determine the cause with certainty because we lack visibility into and control of the infrastructure.

The throughput measurements for each configuration in total Queries per Minute (Qpm)

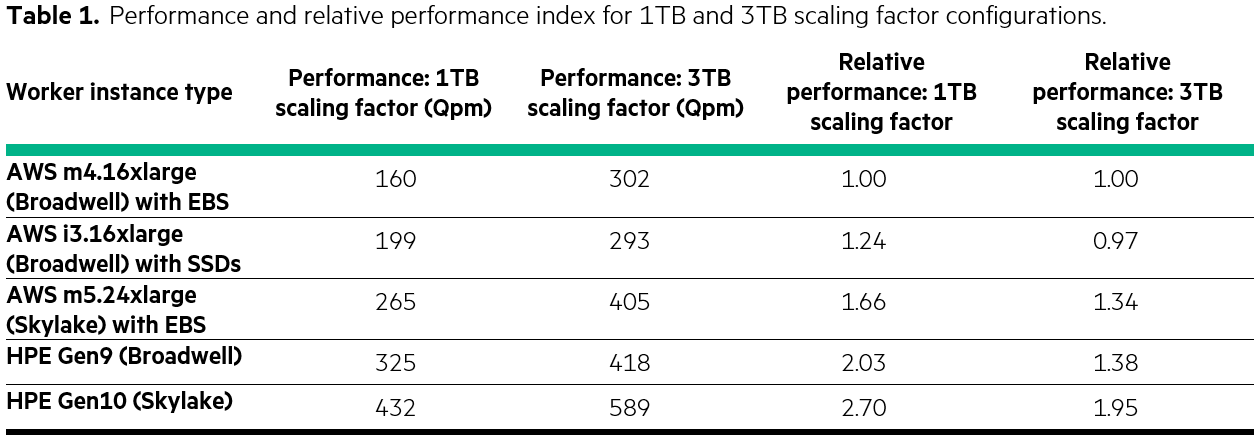

For comparison, we used the overall performance metric, queries per minute (Qpm). For relative performance, we selected the m4.16xlarge cluster with EBS as the baseline. All results are normalized against this cluster’s performance value.

Table 1 shows the performance results in Qpm for each configuration at the two scaling factors (1TB and 3TB). For each scaling factor, we added a normalized “relative performance index” column: the 4th column for 1TB and the 5th column for 3TB.

Using Qpm as our performance metric (higher is better), we compared the throughput performance of the AWS instances to both the HPE Gen9 and Gen10 configurations. We ran two performance tests, one using a scaling factor of 1TB and the other using a scaling factor of 3TB. Note: While the query rate (Qpm) may seem significantly lower than what you might expect for typical database queries, keep in mind that for this workload, each query involves a set of complex analytics operations where data must be accessed, conditioned, and analyzed, and the outputs written.
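The normalization behind the relative performance index can be sketched as follows: each configuration's Qpm is divided by the Qpm of the baseline cluster, so the baseline scores 1.0. The configuration names and Qpm values below are placeholders chosen for illustration, not results from the study.

```python
def relative_performance_index(qpm_by_config: dict, baseline: str) -> dict:
    """Normalize each configuration's Qpm against the baseline's Qpm."""
    if baseline not in qpm_by_config:
        raise KeyError(f"baseline {baseline!r} not found in results")
    base_qpm = qpm_by_config[baseline]
    if base_qpm <= 0:
        raise ValueError("baseline Qpm must be positive")
    return {name: qpm / base_qpm for name, qpm in qpm_by_config.items()}


if __name__ == "__main__":
    # Placeholder values only; these are NOT the measured study results.
    sample_qpm = {"baseline_cluster": 2.0, "other_cluster": 3.0}
    index = relative_performance_index(sample_qpm, "baseline_cluster")
    for name, value in index.items():
        print(f"{name}: {value:.2f}")
```

An index above 1.0 means the configuration completed more queries per minute than the baseline at that scaling factor, so the index should be computed separately for the 1TB and 3TB runs.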

As shown, the HPE Gen9 on-prem solution provided significantly higher Qpm than the AWS m4 and i3 configurations, and the HPE Gen10 on-prem solution provided significantly higher Qpm than the AWS m5 configuration, at each of the scaling factors. In fact, the HPE Gen9 (Broadwell generation) even outperformed the AWS m5 (Skylake generation).

Why is the performance of each of the AWS configurations significantly lower than the comparable HPE on-prem configurations?

Though we attempted to select AWS instances comparable to the HPE on-prem platforms, across the board, we found the throughput performance of the AWS systems to be substantially lower. This can be attributed to the AWS user’s inability to directly control several factors, including BIOS settings, platform power-efficiency settings, storage settings and several other control points traditionally used to optimize and tune such configurations.

In summary, the HPE on-premises solutions offer significantly stronger performance than each of the comparable AWS solutions at two different scale factors. In Part 4, Lou Gagliardi returns to the series, combining the TCO discussions from Part 2 with the throughput performance discussions here in Part 3 to examine and compare the TCO-performance ratios for each configuration explored in the study.

Follow the blog series

In this blog series, we present details and insights around the HPE and AWS comparison for the following topics:

Part 1: Introducing the HPE on-prem vs. AWS primer

- An overview of the study and the summary of findings in the comparison of HPE and AWS

- An AWS primer to provide a brief overview of what is available in AWS EC2 IaaS capabilities

Part 2: Total Cost of Ownership (TCO)

- The configuration options and selected configurations: HPE and AWS

- The “all-in” cost analysis for the on-prem configuration, including costs for maintenance labor, data center infrastructure, energy and cooling, carbon footprint and warranty

- The cost analysis for the AWS configurations based on reasonable configurations and purchase options

Part 3: The workload and throughput (performance)

- Overview of the cloud-scale advanced analytics workload

- The throughput measurements for each configuration in total Queries per Minute (Qpm)

- Analysis of each architecture and comparison of Local SSDs and EBS

Part 4: Price-performance and control

- Price-performance for each configuration

- A look at the cost of high-throughput EBS volumes

- Analysis of ability to control attributes of performance, data sovereignty, privacy, security

- Pay per use with variable payments based on actual metered usage

- Dynamic and instant growth flexibility

- Onsite extra capacity buffer

Meet Infrastructure Insights blogger Dr. Paul Cao, Master Technologist,

Paul joined Compaq Computer Corporation in 1997 as a team member in The Performance Engineering Lab. After graduating from Rice University with a Ph.D. in physics, he worked at Schlumberger and PGS Tensor. His current investigation projects are in the area of ML/DL, advanced data analytics, performance accelerators, and simulation. He is a member of the TPC (Transaction Processing Performance Council), The Society of HPC and the eHRI. Paul’s hobbies include soccer, windsurfing, CRISPR, volleyball and table tennis.

Infrastructure Insights

Hewlett Packard Enterprise
